Setting Up a Hadoop Environment on Linux (Ubuntu)

Overview of the steps

- Create the virtual machine (once one is set up, you can clone it)

- Install the JDK

- Set up ssh and rsync

- Configure Hadoop

1. Create the virtual machine

I won't go into detail here. My setup is VMware + Ubuntu 18.04.1.

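2. Install the JDK

- Update the package sources first. I skipped this at first, and apt could not find the JDK:

sudo apt-get update

- Install the JDK. Beforehand, you can switch the download server in "Software & Updates" to a faster one, which speeds up the download. Installing from the command line is quicker than downloading from the official website, and it puts the java command on your PATH automatically (JAVA_HOME still has to be set by hand later). On Ubuntu 18.04, default-jdk installs OpenJDK 11, which is what the /usr/lib/jvm/java-11-openjdk-amd64 paths below assume. Java 11 is only supported as a runtime from Hadoop 3.3 onward, so if you hit Java-related errors with 2.6.4, openjdk-8-jdk (JAVA_HOME /usr/lib/jvm/java-8-openjdk-amd64) is the safer choice.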

sudo apt-get install default-jdk
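The JAVA_HOME value set later has to match where apt actually put the JDK. A minimal sketch for finding it, assuming the usual Ubuntu layout (.../bin/java, or .../jre/bin/java for OpenJDK 8): resolve the java symlink chain and strip the suffix. The function name java_home_from is just for illustration.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: turn the resolved path of the java binary into a
# JAVA_HOME value by stripping the trailing /bin/java (and /jre for Java 8).
java_home_from() {
  local bin="$1"
  bin="${bin%/bin/java}"
  echo "${bin%/jre}"
}

# Only try this if java is actually on PATH.
if command -v java >/dev/null 2>&1; then
  # readlink -f follows the /usr/bin/java -> /etc/alternatives/java -> ... chain.
  java_home_from "$(readlink -f "$(command -v java)")"
fi
```

With default-jdk on 18.04 this should print /usr/lib/jvm/java-11-openjdk-amd64.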

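3. Set up ssh and rsync

sudo apt-get install ssh

sudo apt-get install rsync

Generate a key pair with an empty passphrase and add the public key to authorized_keys, so Hadoop's start scripts can ssh into localhost without asking for a password. -P '' is two single quotes with nothing between them (an empty passphrase, not a space). RSA is used because the OpenSSH in Ubuntu 18.04 rejects DSA keys by default:

ssh-keygen -t rsa -P '' -f ~/.ssh/id_rsa

cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys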
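If you rerun this step, plain cat >> adds the same key again. The sketch below (authorize_key is a hypothetical helper) appends a public key only if that exact line is not already present, and sets the permissions sshd expects: with its default StrictModes, sshd ignores authorized_keys if ~/.ssh or the file is writable by other users.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: authorize PUBKEY_FILE for logins into this account.
# Usage: authorize_key SSH_DIR PUBKEY_FILE
authorize_key() {
  local ssh_dir="$1" pub="$2"
  local auth="$ssh_dir/authorized_keys"
  mkdir -p "$ssh_dir"
  chmod 700 "$ssh_dir"
  touch "$auth"
  # -x: whole-line match, -F: fixed strings, -f: patterns read from the key file.
  if ! grep -qxF -f "$pub" "$auth"; then
    cat "$pub" >> "$auth"
  fi
  chmod 600 "$auth"
}
```

For example, authorize_key ~/.ssh ~/.ssh/id_rsa.pub with the public key you generated. Afterwards, ssh localhost should log you in without a password (accept the host key on the first connection).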

4.配置Hadoop

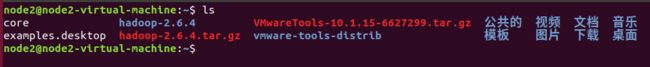

sudo wget https://archive.apache.org/dist/hadoop/common/hadoop-2.6.4/hadoop2.6.4.tar.gz
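Before unpacking, it can be worth checking that the download is intact. A minimal sketch; the expected digest is something you have to supply yourself, taken from the checksum file published in the same archive.apache.org directory as the tarball. For older releases such as 2.6.4 that file is, as far as I know, a .mds file whose hex digits may be grouped with spaces, so the helper strips whitespace and ignores case. verify_sha256 is a hypothetical name.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: succeed only if FILE's SHA-256 digest equals EXPECTED.
# Whitespace in EXPECTED is removed and letters are lower-cased before comparing.
verify_sha256() {
  local file="$1" expected="$2" actual
  actual="$(sha256sum "$file" | cut -d' ' -f1)"
  expected="$(printf '%s' "$expected" | tr -d '[:space:]' | tr '[:upper:]' '[:lower:]')"
  [ "$actual" = "$expected" ]
}
```

Usage: verify_sha256 hadoop-2.6.4.tar.gz '<SHA256 value from the checksum file>' && echo OK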

sudo tar -zxvf hadoop-2.6.4.tar.gz

sudo mv hadoop-2.6.4 /usr/local/hadoop

ll /usr/local/hadoop

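Then open ~/.bashrc. It belongs to your own user, so sudo is not needed (running gedit with sudo on files in your home directory can leave them owned by root):

gedit ~/.bashrc

Append the following at the end: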

export JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64

export HADOOP_HOME=/usr/local/hadoop

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"

export JAVA_LIBRARY_PATH=$HADOOP_HOME/lib/native:$JAVA_LIBRARY_PATH

source ~/.bashrc
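Instead of editing ~/.bashrc by hand, the same lines can be appended from a script. This sketch (write_hadoop_env is a hypothetical helper) writes the variables listed above, with the two PATH lines merged into one, and uses a marker comment so that running it twice does not duplicate them.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: append the Hadoop variables to FILE (e.g. ~/.bashrc)
# only once, using a marker comment to detect an earlier run.
write_hadoop_env() {
  local file="$1" marker="# >>> hadoop env >>>"
  touch "$file"
  if grep -qxF "$marker" "$file"; then
    return 0
  fi
  # Quoted 'EOF' keeps $PATH, $HADOOP_HOME etc. literal in the file,
  # so they are expanded later, when the shell reads it.
  cat >> "$file" <<'EOF'
# >>> hadoop env >>>
export JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64
export HADOOP_HOME=/usr/local/hadoop
export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin
export HADOOP_MAPRED_HOME=$HADOOP_HOME
export HADOOP_COMMON_HOME=$HADOOP_HOME
export HADOOP_HDFS_HOME=$HADOOP_HOME
export YARN_HOME=$HADOOP_HOME
export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native
export HADOOP_OPTS="-Djava.library.path=$HADOOP_HOME/lib"
export JAVA_LIBRARY_PATH=$HADOOP_HOME/lib/native:$JAVA_LIBRARY_PATH
EOF
}
```

Usage: write_hadoop_env ~/.bashrc && source ~/.bashrc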

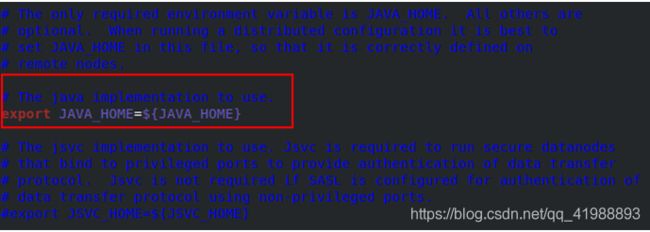

Next, edit hadoop-env.sh:

sudo gedit /usr/local/hadoop/etc/hadoop/hadoop-env.sh

Change the JAVA_HOME line (highlighted in red in the screenshot) to:

export JAVA_HOME=/usr/lib/jvm/java-11-openjdk-amd64
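The same change can be made without an editor. A sketch using GNU sed -i, assuming hadoop-env.sh contains exactly one line starting with export JAVA_HOME= (in the stock 2.6.4 file it should read export JAVA_HOME=${JAVA_HOME}). Hard-coding the path matters because the start scripts launch the daemons over ssh, and Ubuntu's default ~/.bashrc returns early for non-interactive shells, so the JAVA_HOME exported there is not seen; this is typically behind the "JAVA_HOME is not set and could not be found" error. set_java_home is a hypothetical name.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: rewrite the "export JAVA_HOME=..." line in place.
# Usage: set_java_home ENV_FILE JAVA_HOME_PATH
set_java_home() {
  local env_file="$1" java_home="$2"
  # '|' is the sed delimiter, so the slashes in the path need no escaping.
  sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=${java_home}|" "$env_file"
}
```

Usage: set_java_home /usr/local/hadoop/etc/hadoop/hadoop-env.sh /usr/lib/jvm/java-11-openjdk-amd64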

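Edit core-site.xml:

sudo gedit /usr/local/hadoop/etc/hadoop/core-site.xml

Change it as follows. fs.defaultFS tells clients where the NameNode listens; it is the current name of the deprecated fs.default.name key, and Hadoop 2.6.4 accepts both:

<configuration>

<property>

<name>fs.defaultFS</name>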

<value>hdfs://localhost:9000</value>

</property>

</configuration>

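Edit yarn-site.xml:

sudo gedit /usr/local/hadoop/etc/hadoop/yarn-site.xml

Add the following properties inside the file's existing configuration element. The .class key has to contain the service name mapreduce_shuffle spelled exactly as in the first property:

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name>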

<value>org.apache.hadoop.mapred.ShuffleHandler</value>

</property>

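Edit mapred-site.xml. Hadoop 2.6.4 ships this file only as mapred-site.xml.template, so create it from the template first:

sudo cp /usr/local/hadoop/etc/hadoop/mapred-site.xml.template /usr/local/hadoop/etc/hadoop/mapred-site.xml

sudo gedit /usr/local/hadoop/etc/hadoop/mapred-site.xml

Change it as follows: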

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

</configuration>

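Edit hdfs-site.xml:

sudo gedit /usr/local/hadoop/etc/hadoop/hdfs-site.xml

Change it as follows. dfs.replication is the number of copies HDFS keeps of each block; a single-node setup has only one DataNode, so use 1 (with 3, every block would be reported as under-replicated). The two directories are created in the next step:

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>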

</property>

<property>

<name>dfs.namenode.name.dir</name>

<value>file:/usr/local/hadoop/hadoop_data/hdfs/namenode</value>

</property>

<property>

<name>dfs.datanode.data.dir</name>

<value>file:/usr/local/hadoop/hadoop_data/hdfs/datanode</value>

</property>

</configuration>
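All four XML files follow the same property pattern, so they can also be generated. A minimal sketch: write_site and write_all_sites are hypothetical helpers, values are written without XML escaping (fine for the plain values here), and it uses fs.defaultFS (the current name of fs.default.name), the mapreduce_shuffle.class key spelling and dfs.replication 1, which suit a single node.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: write a Hadoop *-site.xml file from name/value pairs.
# Usage: write_site FILE NAME1 VALUE1 [NAME2 VALUE2 ...]
# Note: names and values are NOT XML-escaped.
write_site() {
  local file="$1"; shift
  if (( $# % 2 != 0 )); then
    echo "write_site: expected NAME VALUE pairs" >&2
    return 1
  fi
  {
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    while (( $# > 0 )); do
      printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
      shift 2
    done
    echo '</configuration>'
  } > "$file"
}

# Hypothetical helper: write the four files from this post into CONF_DIR.
write_all_sites() {
  local conf="$1" data=/usr/local/hadoop/hadoop_data/hdfs
  write_site "$conf/core-site.xml" fs.defaultFS hdfs://localhost:9000
  write_site "$conf/yarn-site.xml" \
    yarn.nodemanager.aux-services mapreduce_shuffle \
    yarn.nodemanager.aux-services.mapreduce_shuffle.class org.apache.hadoop.mapred.ShuffleHandler
  write_site "$conf/mapred-site.xml" mapreduce.framework.name yarn
  write_site "$conf/hdfs-site.xml" \
    dfs.replication 1 \
    dfs.namenode.name.dir "file:$data/namenode" \
    dfs.datanode.data.dir "file:$data/datanode"
}
```

The helper overwrites whole files, so back up the originals first, or point it at a scratch directory (write_all_sites /tmp/conf) and compare before running write_all_sites /usr/local/hadoop/etc/hadoop.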

Create the NameNode directory:

sudo mkdir -p /usr/local/hadoop/hadoop_data/hdfs/namenode

Create the DataNode directory:

sudo mkdir -p /usr/local/hadoop/hadoop_data/hdfs/datanode

Change the owner of the whole Hadoop directory to your user (node2 is my username; replace it with yours):

sudo chown node2:node2 -R /usr/local/hadoop

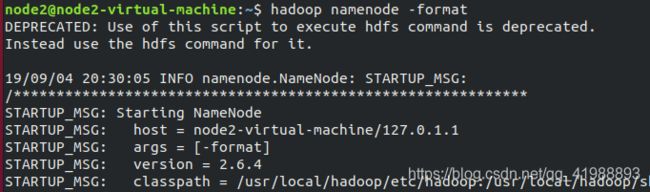
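Because the daemons run as your user, the NameNode and DataNode directories must be owned by you; if they are still root-owned from sudo mkdir, formatting or starting the DataNode typically fails with permission errors. A small check, using bash's -O test (true when the file is owned by the current effective user); check_dirs_owned is a hypothetical helper.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: print every given directory that is missing or not
# owned by the current user; returns non-zero if any were found.
check_dirs_owned() {
  local bad=0 d
  for d in "$@"; do
    if [ ! -d "$d" ] || [ ! -O "$d" ]; then
      echo "not ready: $d"
      bad=1
    fi
  done
  return "$bad"
}
```

Usage: check_dirs_owned /usr/local/hadoop/hadoop_data/hdfs/namenode /usr/local/hadoop/hadoop_data/hdfs/datanode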

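Format HDFS, i.e. initialize the NameNode's storage directory. In Hadoop 2.x the command lives under hdfs (hadoop namenode -format still works but prints a deprecation warning):

hdfs namenode -format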

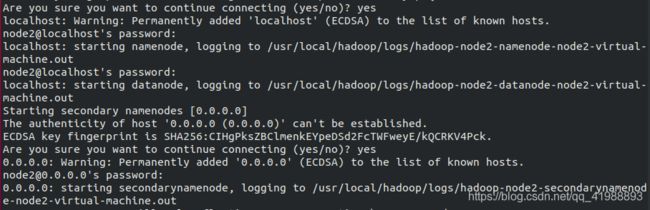
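Formatting should normally happen only once. Each format gives the NameNode a new clusterID, and DataNode directories that still carry the old one then refuse to register ("Incompatible clusterIDs" in the DataNode log). A sketch of a guarded format, assuming a formatted name directory can be recognized by its current/VERSION file; is_formatted and format_once are hypothetical names.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: a formatted NameNode directory contains current/VERSION.
is_formatted() {
  [ -f "$1/current/VERSION" ]
}

# Format only if NAME_DIR has not been formatted yet.
format_once() {
  local name_dir="$1"
  if is_formatted "$name_dir"; then
    echo "already formatted: $name_dir (skipping)"
  else
    hdfs namenode -format
  fi
}
```

Usage: format_once /usr/local/hadoop/hadoop_data/hdfs/namenode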

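Then start the services. I start everything at once with:

start-all.sh

In Hadoop 2.x this script is deprecated and simply runs start-dfs.sh followed by start-yarn.sh, which you can also call separately. To stop the services, use stop-all.sh.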

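Check the running Java processes with jps. In a working single-node setup it lists NameNode, DataNode, SecondaryNameNode, ResourceManager and NodeManager, plus Jps itself:

jps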

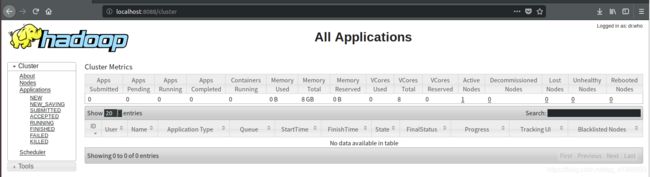
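The jps check can be scripted too. This sketch reads jps output (one "pid MainClass" line per JVM) and prints whichever of the five daemons expected on a single node are missing; missing_daemons is a hypothetical name.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: read `jps` output on stdin and print each expected
# single-node daemon that does not appear in it.
missing_daemons() {
  local running d
  local expected=(NameNode DataNode SecondaryNameNode ResourceManager NodeManager)
  # Keep only the main-class column of the jps output.
  running="$(awk '{print $2}')"
  for d in "${expected[@]}"; do
    # -x: whole-line match, so "NameNode" does not match "SecondaryNameNode".
    if ! grep -qxF "$d" <<< "$running"; then
      echo "$d"
    fi
  done
}
```

jps | missing_daemons prints nothing when everything is up. If a daemon is missing, its log under /usr/local/hadoop/logs is the place to look.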

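Finally, open the YARN ResourceManager web UI in a browser:

http://localhost:8088

You should see the ResourceManager's cluster overview page.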
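If the page does not load, it helps to check which ports are actually listening. Besides the ResourceManager UI on 8088, Hadoop 2.x serves the NameNode web UI on port 50070 by default, and the NameNode RPC port is the 9000 set in core-site.xml. A sketch using bash's /dev/tcp redirection, so neither curl nor nc is needed; port_open and check_hadoop_ports are hypothetical names.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Hypothetical helper: succeed if something accepts TCP connections on HOST:PORT.
# The 2-second timeout keeps a filtered port from hanging the check.
port_open() {
  local host="$1" port="$2"
  timeout 2 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Report the three ports used in this setup.
check_hadoop_ports() {
  local p
  for p in 9000 50070 8088; do
    if port_open localhost "$p"; then
      echo "port $p: open"
    else
      echo "port $p: closed"
    fi
  done
}
```

Run check_hadoop_ports after start-all.sh; a closed port points at the daemon to investigate.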

That's it, all done! Next comes building a cluster, which can be done by cloning the virtual machine; I won't cover that here.

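Update: I'd recommend VirtualBox + Ubuntu 14.04 instead. Later, when I set up a pseudo-distributed cluster on my own machine, I had to configure network adapter 1 and network adapter 2, and that felt clumsy in VMware. I suggest Ubuntu 14 because you will need to change IP addresses, which is a bit more cumbersome on 18.04 and 16.04 than on 14.04, so 14.04 keeps things simpler. I learned this the hard way~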