tensorlayer学习日志14_chapter5_5.3

第5.3节讲的是文本简单处理,课程源码在这里: https://github.com/tensorlayer/tensorlayer/blob/master/example/tutorial_word2vec_basic.py

但是Step 7: Evaluate by analogy questions.我没能实现,主要是不知道,questions-words.txt是个什么东东,有机会再来试试吧~~

import sys

import time

import numpy as np

import tensorflow as tf

import tensorlayer as tl

from six.moves import xrange

words = tl.files.load_matt_mahoney_text8_dataset()

data_size = len(words)

print(data_size)

print(words[0:10])

resume = False

_UNK = "_UNK"

vocabulary_size = 50000

batch_size = 128

embedding_size = 128

skip_window = 1

num_skips = 2

num_sampled = 64

learning_rate = 1.0

n_epoch = 12

model_file_name = "model_word2vec_50k_128"

num_steps = int((data_size / batch_size) * n_epoch)

print('%d Steps in a Epoch, total Epochs %d' % (int(data_size / batch_size), n_epoch))

print(' learning_rate: %f' % learning_rate)

print(' batch_size: %d' % batch_size)

if resume:

print("Load existing data and dictionaries" + "!" * 10)

all_var = tl.files.load_npy_to_any(name=model_file_name + '.npy')

data = all_var['data']

count = all_var['count']

dictionary = all_var['dictionary']

reverse_dictionary = all_var['reverse_dictionary']

else:

data, count, dictionary, reverse_dictionary = tl.nlp.build_words_dataset(words, vocabulary_size, True, _UNK)

print('~~~~~~~~~~~~~Most 5 common words (+UNK)~~~~~~~~~~~~')

print(count[:5])

print('~~~~~~~~~~~~~~~~~~~~~~Sample data~~~~~~~~~~~~~~~~~')

print(data[:10], [reverse_dictionary[i] for i in data[:10]])

print('~~~~~~~~~~~~~只看周围两个单词,左右各一个~~~~~~~~~~~~')

batch, labels, data_index = tl.nlp.generate_skip_gram_batch(data=data, \

batch_size=8, num_skips=2, skip_window=1, data_index=0)

for i in range(8):

print(batch[i], reverse_dictionary[batch[i]], '->', labels[i, 0], reverse_dictionary[labels[i, 0]])

print('~~~~~~~~~~~~~只看周围四个单词,左右各两个~~~~~~~~~~~~')

batch, labels, data_index = tl.nlp.generate_skip_gram_batch(data=data, \

batch_size=8, num_skips=4, skip_window=2, data_index=0)

for i in range(8):

print(batch[i], reverse_dictionary[batch[i]], '->', labels[i, 0], reverse_dictionary[labels[i, 0]])

print('~~~~~~~~~~~~~~Build Skip-Gram model~~~~~~~~~~~~~')

valid_size = 16

valid_window = 100

valid_examples = np.random.choice(valid_window, valid_size, replace=False)

train_inputs = tf.placeholder(tf.int32, shape=[batch_size])

train_labels = tf.placeholder(tf.int32, shape=[batch_size, 1])

valid_dataset = tf.constant(valid_examples, dtype=tf.int32)

emb_net = tl.layers.Word2vecEmbeddingInputlayer(

inputs=train_inputs,

train_labels=train_labels,

vocabulary_size=vocabulary_size,

embedding_size=embedding_size,

num_sampled=num_sampled,

name='word2vec_layer')

cost = emb_net.nce_cost

train_params = emb_net.all_params

# train_op = tf.train.GradientDescentOptimizer(learning_rate).minimize(cost, var_list=train_params)

train_op = tf.train.AdagradOptimizer(learning_rate).minimize(cost, var_list=train_params)

normalized_embeddings = emb_net.normalized_embeddings

valid_embed = tf.nn.embedding_lookup(normalized_embeddings, valid_dataset)

similarity = tf.matmul(valid_embed, normalized_embeddings, transpose_b=True)

print('~~~~~~~~~~~~~~~~~Start training~~~~~~~~~~~~~~~~')

sess = tf.InteractiveSession()

tl.layers.initialize_global_variables(sess)

if resume:

print("Load existing model" + "!" * 10)

# Load from ckpt or npz file

# saver = tf.train.Saver()

# saver.restore(sess, model_file_name+'.ckpt')

tl.files.load_and_assign_npz_dict(name=model_file_name + '.npz', sess=sess)

emb_net.print_params()

emb_net.print_layers()

# save vocabulary to txt

tl.nlp.save_vocab(count, name='vocab_text8.txt')

average_loss = 0

step = 0

print_freq=100000

while step < num_steps:

start_time = time.time()

batch_inputs, batch_labels, data_index = tl.nlp.generate_skip_gram_batch(data=data, \

batch_size=batch_size, num_skips=num_skips, skip_window=skip_window, data_index=data_index)

feed_dict = {train_inputs: batch_inputs, train_labels: batch_labels}

_, loss_val = sess.run([train_op, cost], feed_dict=feed_dict)

average_loss += loss_val

if step % print_freq == 0:

if step > 0:

average_loss /= print_freq

print("~~~~~~~~Average loss at step %d/%d. loss: %f took: %fs~~~~~~~~~" % \

(step, num_steps, average_loss, time.time() - start_time))

average_loss = 0

if step % (print_freq * 2) == 0:

sim = similarity.eval(session=sess)

for i in xrange(valid_size):

valid_word = reverse_dictionary[valid_examples[i]]

top_k = 8

nearest = (-sim[i, :]).argsort()[1:top_k + 1]

log_str = "------Nearest to %s:" % valid_word

for k in xrange(top_k):

close_word = reverse_dictionary[nearest[k]]

log_str = "%s %s," % (log_str, close_word)

print(log_str)

if (step % (print_freq * 5) == 0) and (step != 0):

print("******Save model, data and dictionaries***" + "!" * 10)

# Save to ckpt or npz file

# saver = tf.train.Saver()

# save_path = saver.save(sess, model_file_name+'.ckpt')

tl.files.save_npz_dict(emb_net.all_params, name=model_file_name + '.npz', sess=sess)

tl.files.save_any_to_npy(

save_dict={

'data': data,

'count': count,

'dictionary': dictionary,

'reverse_dictionary': reverse_dictionary

}, name=model_file_name + '.npy'

)

step += 1

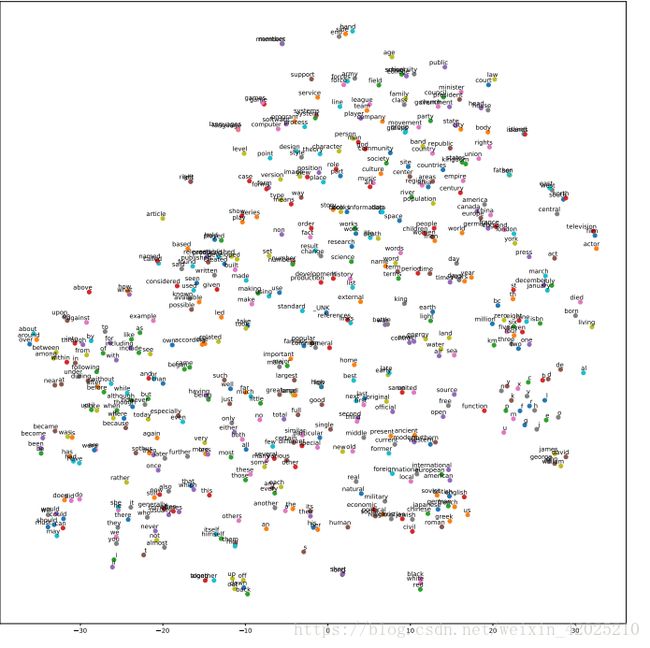

print('~~~~~~~~~~~~~~~Visualize t-SNE~~~~~~~~~~~~~~~~~~~~~~')

final_embeddings = sess.run(normalized_embeddings)

tl.visualize.tsne_embedding(final_embeddings, reverse_dictionary, plot_only=500, \

second=10, saveable=True, name='word2vec_basic',fig_idx=1988)

我是缩水训练了n_epoch = 12轮,嫌输出太多,就改成print_freq=100000,输出如下:

[TL] Load or Download matt_mahoney_text8 Dataset> data\mm_test8

17005207

['anarchism', 'originated', 'as', 'a', 'term', 'of', 'abuse', 'first', 'used', 'against']

132853 Steps in a Epoch, total Epochs 12

learning_rate: 1.000000

batch_size: 128

[TL] Real vocabulary size 253854

[TL] Limited vocabulary size 50000

~~~~~~~~~~~~~Most 5 common words (+UNK)~~~~~~~~~~~~

[['_UNK', 418391], ('the', 1061396), ('of', 593677), ('and', 416629), ('one', 411764)]

~~~~~~~~~~~~~~~~~~~~~~Sample data~~~~~~~~~~~~~~~~~

[5234, 3081, 12, 6, 195, 2, 3134, 46, 59, 156] ['anarchism', 'originated', 'as', 'a', 'term', 'of', 'abuse', 'first', 'used', 'against']

~~~~~~~~~~~~~只看周围两个单词,左右各一个~~~~~~~~~~~~

3081 originated -> 12 as

3081 originated -> 5234 anarchism

12 as -> 6 a

12 as -> 3081 originated

6 a -> 195 term

6 a -> 12 as

195 term -> 2 of

195 term -> 6 a

~~~~~~~~~~~~~只看周围四个单词,左右各两个~~~~~~~~~~~~

12 as -> 5234 anarchism

12 as -> 6 a

12 as -> 195 term

12 as -> 3081 originated

6 a -> 3081 originated

6 a -> 12 as

6 a -> 2 of

6 a -> 195 term

~~~~~~~~~~~~~~Build Skip-Gram model~~~~~~~~~~~~~

[TL] Word2vecEmbeddingInputlayer word2vec_layer: (50000, 128)

~~~~~~~~~~~~~~~~~Start training~~~~~~~~~~~~~~~~

[TL] param 0: word2vec_layer/embeddings:0 (50000, 128) float32_ref (mean: -0.00022887902741786093, median: -0.0002027750015258789, std: 0.5774627327919006)

[TL] param 1: word2vec_layer/nce_weights:0 (50000, 128) float32_ref (mean: -9.809378752834164e-06, median: -1.1922868907277007e-05, std: 0.026393720880150795)

[TL] param 2: word2vec_layer/nce_biases:0 (50000,) float32_ref (mean: 0.0 , median: 0.0 , std: 0.0 )

[TL] num of params: 12850000

[TL] layer 0: word2vec_layer/embedding_lookup:0 (128, 128) float32

[TL] 50000 vocab saved to vocab_text8.txt in C:\bbbb\学习\python教材\jfj\一起玩转Tensorlayer

~~~~~~~~Average loss at step 0/1594238. loss: 285.750641 took: 0.015627s~~~~~~~~~

------Nearest to over: antonia, note, jennifer, digested, rates, louise, mutinied, dalglish,

------Nearest to his: took, allman, planckian, motorways, adolph, bader, contraindicated, overworked,

------Nearest to when: sunni, eugen, intruder, jeanette, laconia, recorded, comte, tautology,

------Nearest to its: templar, trouser, gough, gini, menstrual, sizable, bawerk, sag,

------Nearest to eight: improvement, jamiroquai, duodecimal, amalthea, reflections, assay, duarte, tov,

------Nearest to use: dagmar, appeared, frame, sv, starboard, bypass, phenomenalism, amygdalin,

------Nearest to nine: recognise, wilmington, wankel, biathletes, cdot, mem, infantile, panel,

------Nearest to are: yoga, brandy, govern, quadrangle, penitence, add, dieter, ostia,

------Nearest to three: transfiguration, disbandment, contains, mini, campus, tezuka, dilute, rob,

------Nearest to some: false, axe, kalat, continuation, tortuous, fixture, rebuttal, berthold,

------Nearest to united: equivalently, dili, juvenile, prototype, domenico, today, sardinian, bush,

------Nearest to first: mets, chieftain, keiretsu, wonder, schwarzschild, federer, responsibility, cryptanalysis,

------Nearest to up: ditko, weicker, subspace, maia, upright, acrobatics, amperes, alvaro,

------Nearest to time: menthol, antares, dale, countrymen, foyle, dao, omniscient, nobles,

------Nearest to th: pilgrim, higham, msg, keralite, configured, mcdonalds, generalizing, katakana,

------Nearest to who: generality, balfour, rede, farad, recessive, suspicions, copyleft, diluted,

~~~~~~~~Average loss at step 100000/1594238. loss: 9.816838 took: 0.000000s~~~~~~~~~

~~~~~~~~Average loss at step 200000/1594238. loss: 4.424414 took: 0.015627s~~~~~~~~~

------Nearest to over: off, through, about, marienburg, within, around, behind, monies,

------Nearest to his: her, their, its, our, your, my, whose, the,

------Nearest to when: although, if, while, though, where, before, however, after,

------Nearest to its: their, his, the, her, our, whose, your, any,

------Nearest to eight: six, seven, nine, four, five, three, zero, two,

------Nearest to use: support, cause, need, allow, using, representation, practice, share,

------Nearest to nine: eight, seven, six, four, five, three, zero, two,

------Nearest to are: were, have, is, contain, include, tend, require, exist,

------Nearest to three: four, five, six, seven, two, eight, nine, zero,

------Nearest to some: many, several, any, certain, these, both, all, various,

------Nearest to united: european, confederate, senate, southern, university, northern, including, national,

------Nearest to first: last, second, next, third, fourth, final, same, original,

------Nearest to up: off, out, down, them, him, forward, together, back,

------Nearest to time: thing, nosferatu, period, shiki, day, credo, macphail, stars,

------Nearest to th: bc, fourth, six, one, rd, fifth, zero, four,

------Nearest to who: never, actually, she, often, still, frequently, also, which,

~~~~~~~~Average loss at step 300000/1594238. loss: 4.273012 took: 0.000000s~~~~~~~~~

~~~~~~~~Average loss at step 400000/1594238. loss: 4.185988 took: 0.000000s~~~~~~~~~

------Nearest to over: around, within, about, through, across, approximately, off, killing,

------Nearest to his: her, their, my, its, your, our, the, whose,

------Nearest to when: if, before, while, after, although, though, where, unless,

------Nearest to its: their, his, the, her, our, whose, my, some,

------Nearest to eight: nine, six, seven, five, four, three, zero, two,

------Nearest to use: practice, need, share, usage, applications, reach, support, allow,

------Nearest to nine: eight, seven, six, five, four, zero, three, two,

------Nearest to are: were, have, is, contain, include, tend, remain, require,

------Nearest to three: four, five, six, seven, two, eight, nine, one,

------Nearest to some: many, several, any, numerous, certain, these, various, all,

------Nearest to united: confederate, southern, communist, grand, yugoslav, senate, european, baltic,

------Nearest to first: second, last, next, third, oldest, fourth, previous, best,

------Nearest to up: off, out, down, him, back, them, together, forth,

------Nearest to time: day, nosferatu, observed, length, measures, thing, times, writing,

------Nearest to th: rd, fourth, sixth, fifth, nine, nd, seventh, zero,

------Nearest to who: he, she, never, actually, still, they, often, which,

~~~~~~~~Average loss at step 500000/1594238. loss: 4.121214 took: 0.000000s~~~~~~~~~

******Save model, data and dictionaries***!!!!!!!!!!

[TL] [*] Model saved in npz_dict model_word2vec_50k_128.npz

~~~~~~~~Average loss at step 600000/1594238. loss: 4.112394 took: 0.015627s~~~~~~~~~

------Nearest to over: around, across, through, about, within, throughout, approximately, off,

------Nearest to his: her, their, my, your, its, our, the, whose,

------Nearest to when: if, before, while, although, unless, after, though, where,

------Nearest to its: their, his, the, her, our, whose, your, any,

------Nearest to eight: seven, six, nine, five, four, three, zero, two,

------Nearest to use: need, practice, allow, usage, sense, support, applications, share,

------Nearest to nine: eight, seven, six, five, four, zero, three, births,

------Nearest to are: were, have, remain, is, contain, tend, although, represent,

------Nearest to three: five, four, two, six, seven, eight, nine, one,

------Nearest to some: many, several, certain, any, numerous, these, various, this,

------Nearest to united: confederate, communist, yugoslav, baltic, arab, frankish, usa, battle,

------Nearest to first: second, last, next, third, oldest, previous, earliest, fourth,

------Nearest to up: off, out, down, forth, together, them, him, back,

------Nearest to time: xli, renminbi, week, length, dreidel, eras, hours, opportunity,

------Nearest to th: rd, nd, sixth, fifth, fourth, seventh, nine, twentieth,

------Nearest to who: actually, often, he, never, she, still, reportedly, nor,

~~~~~~~~Average loss at step 700000/1594238. loss: 4.070077 took: 0.015626s~~~~~~~~~

~~~~~~~~Average loss at step 800000/1594238. loss: 4.040599 took: 0.015627s~~~~~~~~~

------Nearest to over: across, about, approximately, around, off, beyond, past, throughout,

------Nearest to his: her, their, my, your, its, our, the, whose,

------Nearest to when: if, before, where, while, unless, after, though, although,

------Nearest to its: their, his, the, her, whose, our, some, inferential,

------Nearest to eight: six, seven, nine, four, five, three, zero, two,

------Nearest to use: need, practice, using, usage, allow, sense, cause, offer,

------Nearest to nine: eight, six, seven, five, four, zero, three, two,

------Nearest to are: were, have, is, contain, remain, include, tend, although,

------Nearest to three: four, five, six, seven, two, eight, nine, zero,

------Nearest to some: many, several, certain, numerous, these, any, various, this,

------Nearest to united: confederate, communist, yugoslav, baltic, u, university, neighboring, caribbean,

------Nearest to first: second, last, third, next, fourth, oldest, previous, earliest,

------Nearest to up: off, down, him, them, out, forth, back, forward,

------Nearest to time: period, year, nosferatu, successful, observed, segment, saying, sense,

------Nearest to th: nd, rd, fifth, seventh, fourth, sixth, ninth, st,

------Nearest to who: actually, which, reportedly, never, he, often, she, whom,

~~~~~~~~Average loss at step 900000/1594238. loss: 4.047786 took: 0.015627s~~~~~~~~~

~~~~~~~~Average loss at step 1000000/1594238. loss: 4.000875 took: 0.000000s~~~~~~~~~

------Nearest to over: about, around, approximately, across, beyond, off, throughout, through,

------Nearest to his: her, their, my, your, its, the, our, this,

------Nearest to when: if, although, where, though, while, before, after, unless,

------Nearest to its: their, his, the, whose, her, our, carom, any,

------Nearest to eight: seven, nine, four, six, five, three, zero, two,

------Nearest to use: need, usage, applications, sense, allow, practice, mention, share,

------Nearest to nine: eight, seven, six, five, four, zero, three, two,

------Nearest to are: were, have, contain, is, include, tend, require, these,

------Nearest to three: four, five, two, six, seven, eight, nine, zero,

------Nearest to some: many, several, any, certain, numerous, these, various, most,

------Nearest to united: confederate, communist, yugoslav, u, frankish, usa, republican, holden,

------Nearest to first: second, last, third, next, fourth, oldest, earliest, latest,

------Nearest to up: off, down, out, them, back, together, forth, him,

------Nearest to time: hours, period, lengths, observed, sense, day, length, saying,

------Nearest to th: nd, sixth, rd, ninth, fifth, seventh, fourth, st,

------Nearest to who: which, never, reportedly, actually, whom, he, she, initially,

******Save model, data and dictionaries***!!!!!!!!!!

[TL] [*] Model saved in npz_dict model_word2vec_50k_128.npz

~~~~~~~~Average loss at step 1100000/1594238. loss: 4.019906 took: 0.000000s~~~~~~~~~

~~~~~~~~Average loss at step 1200000/1594238. loss: 4.011443 took: 0.000000s~~~~~~~~~

------Nearest to over: about, across, around, approximately, throughout, through, off, within,

------Nearest to his: her, their, my, your, its, our, the, whose,

------Nearest to when: if, before, unless, where, while, although, after, though,

------Nearest to its: their, his, the, whose, her, our, any, some,

------Nearest to eight: seven, nine, six, five, four, three, zero, two,

------Nearest to use: usage, applications, using, practice, mention, need, sense, employ,

------Nearest to nine: seven, eight, six, five, four, three, zero, two,

------Nearest to are: were, have, is, contain, include, remain, represent, exist,

------Nearest to three: five, four, seven, two, six, eight, nine, one,

------Nearest to some: many, several, numerous, certain, any, all, these, various,

------Nearest to united: confederate, yugoslav, communist, frankish, neighboring, oas, u, holden,

------Nearest to first: last, second, next, third, oldest, earliest, fourth, latest,

------Nearest to up: off, down, out, together, them, forth, him, back,

------Nearest to time: day, segment, year, period, month, length, times, hours,

------Nearest to th: nd, rd, sixth, fifth, fourth, ninth, seventh, twentieth,

------Nearest to who: which, never, reportedly, he, often, actually, they, she,

~~~~~~~~Average loss at step 1300000/1594238. loss: 3.963830 took: 0.015627s~~~~~~~~~

~~~~~~~~Average loss at step 1400000/1594238. loss: 3.993019 took: 0.000000s~~~~~~~~~

------Nearest to over: across, around, about, approximately, through, throughout, within, off,

------Nearest to his: her, their, my, its, our, the, your, whose,

------Nearest to when: if, where, before, while, unless, after, although, though,

------Nearest to its: their, his, the, whose, her, our, any, some,

------Nearest to eight: seven, nine, six, five, four, three, zero, two,

------Nearest to use: practice, usage, sense, need, applications, mention, reach, share,

------Nearest to nine: eight, seven, six, five, four, zero, three, two,

------Nearest to are: were, have, is, contain, include, remain, comprise, tend,

------Nearest to three: four, five, six, two, seven, eight, nine, zero,

------Nearest to some: many, several, numerous, certain, any, various, these, most,

------Nearest to united: confederate, usa, u, communist, arab, frankish, holden, oas,

------Nearest to first: second, last, third, fourth, next, earliest, oldest, latest,

------Nearest to up: off, out, down, forth, together, them, him, back,

------Nearest to time: day, period, times, life, periods, eras, masses, length,

------Nearest to th: nd, rd, ninth, seventh, fourth, sixth, fifth, st,

------Nearest to who: reportedly, which, never, he, nevertheless, often, seldom, actually,

~~~~~~~~Average loss at step 1500000/1594238. loss: 3.958364 took: 0.000000s~~~~~~~~~

******Save model, data and dictionaries***!!!!!!!!!!

[TL] [*] Model saved in npz_dict model_word2vec_50k_128.npz

~~~~~~~~~~~~~~~Visualize t-SNE~~~~~~~~~~~~~~~~~~~~~~

[Finished in 3668.3s]从结果来看相当不错,特别是 数字 和 his 两类:

------Nearest to his: her, their, my, its, our, the, your, whose,

------Nearest to when: if, where, before, while, unless, after, although, though,

------Nearest to its: their, his, the, whose, her, our, any, some,

------Nearest to eight: seven, nine, six, five, four, three, zero, two,

------Nearest to use: practice, usage, sense, need, applications, mention, reach, share,

------Nearest to nine: eight, seven, six, five, four, zero, three, two,