Face Recognition with FaceNet on TensorFlow

1. Development environment

OS: Ubuntu 16.04

TensorFlow version: 1.12.0

Python version: 3.6.8

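2. Download the source code

Official facenet GitHub repository: https://github.com/davidsandberg/facenet.git

git clone https://github.com/davidsandberg/facenet.git

requirements.txt lists the dependencies that need to be installed; install them with pip. Note that the file pins tensorflow==1.7, while this walkthrough uses 1.12.0.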

tensorflow==1.7

scipy

scikit-learn

opencv-python

h5py

matplotlib

Pillow

requests

psutil

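Configure the environment: open ~/.bashrc (gedit ~/.bashrc), add a line of the form "export PYTHONPATH=<absolute path to facenet>/src", then run source ~/.bashrc. Writing "export PYTHONPATH=$(pwd)/src" in ~/.bashrc only works if every new shell starts inside the facenet directory, because $(pwd) is expanded at the moment ~/.bashrc is read. With that, the environment is configured.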
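
To confirm that the setting took effect, a quick check along the following lines can help. It is only a sketch: the helper name on_python_path and the ~/facenet/src location are assumptions for illustration, and the function merely inspects a list of paths rather than importing facenet.

```python
import os
import sys


def on_python_path(directory, search_path=None):
    """Return True if `directory` is one of the entries of the module search path."""
    entries = sys.path if search_path is None else search_path
    target = os.path.realpath(directory)
    # An empty entry means "current directory", so treat it as "." when comparing.
    return any(os.path.realpath(entry or ".") == target for entry in entries)


if __name__ == "__main__":
    # Hypothetical location; replace it with the absolute path of your own clone.
    src_dir = os.path.expanduser("~/facenet/src")
    print(src_dir, "on sys.path:", on_python_path(src_dir))
```

Run it from a freshly opened terminal so that the updated ~/.bashrc has been read.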

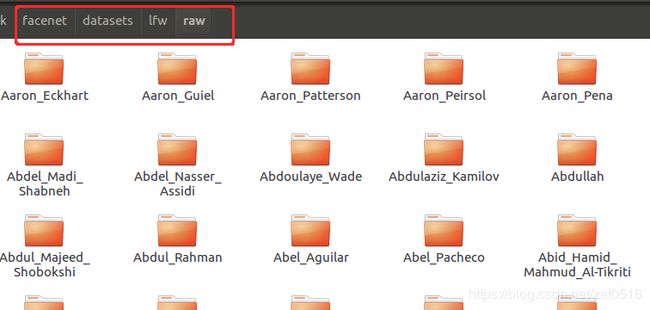

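3. Download the LFW dataset

Download it from: http://vis-www.cs.umass.edu/lfw/lfw.tgz

From the facenet directory (cd facenet), run mkdir -p datasets/lfw/raw. This creates the nested datasets/lfw/raw folders inside your facenet checkout. Then extract the downloaded LFW archive into raw, so that the per-person folders sit directly under raw.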
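
If you prefer to do the extraction from Python, the sketch below shows one way. It assumes the archive has a single top-level folder that should be dropped (similar to tar's --strip-components=1); the function name extract_lfw is hypothetical, and the paths in the usage comment are examples only.

```python
import os
import tarfile


def extract_lfw(archive_path, raw_dir):
    """Extract a .tgz archive into raw_dir, dropping the archive's top-level folder."""
    os.makedirs(raw_dir, exist_ok=True)
    root = os.path.realpath(raw_dir)
    with tarfile.open(archive_path, "r:gz") as archive:
        members = []
        for member in archive.getmembers():
            # Drop the first path component, e.g. "lfw/Name/x.jpg" -> "Name/x.jpg".
            parts = member.name.split("/", 1)
            if len(parts) < 2 or not parts[1]:
                continue
            member.name = parts[1]
            # Skip anything that would end up outside raw_dir.
            target = os.path.realpath(os.path.join(root, member.name))
            if os.path.commonpath([root, target]) != root:
                continue
            members.append(member)
        archive.extractall(root, members=members)
    return sorted(os.listdir(root))


# Example call (paths are illustrative):
# extract_lfw(os.path.expanduser("~/Downloads/lfw.tgz"), "datasets/lfw/raw")
```

The function returns the sorted names found under raw_dir, which for LFW should be one folder per person.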

4. Data preprocessing

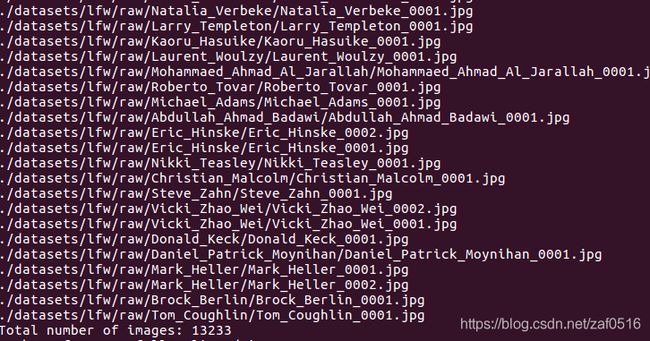

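- Preprocessing the LFW images

The original LFW images are 250×250. They have to be resized to match the input size of the pretrained model, which is 160×160. The converted dataset is stored in facenet/datasets/lfw/lfw_mtcnnpy_160.

- Aligning the LFW face dataset

align_dataset_mtcnn.py runs face detection on the dataset images, crops each image down to the detected face, and then resizes the face crop to 160×160. The script is located in src/align and the paths in the command below are relative to the repository root, so run it from the facenet directory:

cd facenet

python ./src/align/align_dataset_mtcnn.py ./datasets/lfw/raw ./datasets/lfw/lfw_mtcnnpy_160 --image_size 160 --margin 32 --random_order --gpu_memory_fraction 0.25

The run succeeded if the script finishes and prints its summary of the processed images.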
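
To give a feel for what --margin 32 means, here is a minimal sketch of padding a detected face box before cropping. It reflects my understanding that the margin is split evenly across the two sides of the box and clamped to the image borders; it is an illustration rather than a copy of the script, and expand_box is a hypothetical name.

```python
def expand_box(box, margin, image_width, image_height):
    """Grow (x1, y1, x2, y2) by margin/2 on every side, clamped to the image."""
    x1, y1, x2, y2 = box
    half = margin / 2
    return (
        int(max(x1 - half, 0)),
        int(max(y1 - half, 0)),
        int(min(x2 + half, image_width)),
        int(min(y2 + half, image_height)),
    )


# A face detected at (100, 90)-(170, 180) in a 250x250 LFW image:
print(expand_box((100, 90, 170, 180), 32, 250, 250))  # (84, 74, 186, 196)
```

The padded crop is what then gets resized to the 160×160 model input.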

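5. Result comparison

Look at the data in facenet/data/pairs.txt. Each line gives the person name(s) and image numbers of one pair:

A 1 4 means that image 1 and image 4 of person A show the same person. The other form, with two names (for example A 1 B 2), denotes a mismatched pair of two different people.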
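
The two line formats can be told apart by their field count. The sketch below parses a single line and builds the matching image path. The helper names are hypothetical, the path layout follows the LFW naming convention (name/name_0001.jpg), and the extension is a parameter because aligned images may have been saved in a different format than the originals. The first line of pairs.txt is a header and should be skipped by the caller.

```python
import os


def parse_pair_line(line):
    """Parse one pairs.txt line into ((name, index), (name, index), is_same)."""
    fields = line.split()
    if len(fields) == 3:
        # Matched pair: one name, two image numbers.
        name, first, second = fields
        return (name, int(first)), (name, int(second)), True
    if len(fields) == 4:
        # Mismatched pair: two names, one image number each.
        name_a, first, name_b, second = fields
        return (name_a, int(first)), (name_b, int(second)), False
    raise ValueError("not a pair line: %r" % line)


def image_path(lfw_dir, name, index, extension="jpg"):
    """Build <lfw_dir>/<name>/<name>_<4-digit index>.<extension>."""
    return os.path.join(lfw_dir, name, "%s_%04d.%s" % (name, index, extension))


print(parse_pair_line("A 1 4"))    # (('A', 1), ('A', 4), True)
print(parse_pair_line("A 1 B 2"))  # (('A', 1), ('B', 2), False)
```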

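6. Problems encountered

Problem 1: ValueError: Input 0 of node Reshape was passed int32 from batch_join:1 incompatible with expected int64.

Solution: open validate_on_lfw.py and find these three places: data_flow_ops.FIFOQueue, labels_placeholder, and control_placeholder. Change every tf.int32 in them to tf.int64 and run the script again.

Other problems: I hit one or two more errors during execution, and it turned out that the contents of my extracted 20170512-110547 folder had somehow been modified. Check your own copy, and extract the archive again if needed.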
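
A quick sanity check of the extracted model folder can catch this kind of problem early. The sketch below only looks at file names: it expects exactly one .meta file plus at least one checkpoint file in the same directory. The helper name check_model_dir is hypothetical, and the check says nothing about whether the file contents are intact.

```python
import os


def check_model_dir(model_dir):
    """Return (meta_file, checkpoint_files), or raise ValueError if the folder looks incomplete."""
    names = sorted(os.listdir(model_dir))
    meta_files = [n for n in names if n.endswith(".meta")]
    # Checkpoint data/index files carry ".ckpt" in their names.
    ckpt_files = [n for n in names if ".ckpt" in n and not n.endswith(".meta")]
    if len(meta_files) != 1:
        raise ValueError("expected exactly one .meta file, found %d" % len(meta_files))
    if not ckpt_files:
        raise ValueError("no checkpoint (.ckpt) files found")
    return meta_files[0], ckpt_files


# Example call (path is illustrative):
# print(check_model_dir("./models/facenet/20170512-110547"))
```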

validate_on_lfw.py walkthrough

The source code, with the tf.int64 fix from Problem 1 already applied, is as follows:

"""Validate a face recognizer on the "Labeled Faces in the Wild" dataset (http://vis-www.cs.umass.edu/lfw/).

Embeddings are calculated using the pairs from http://vis-www.cs.umass.edu/lfw/pairs.txt and the ROC curve

is calculated and plotted. Both the model metagraph and the model parameters need to exist

in the same directory, and the metagraph should have the extension '.meta'.

"""

# MIT License
#
# Copyright (c) 2016 David Sandberg
#
# Permission is hereby granted, free of charge, to any person obtaining a copy
# of this software and associated documentation files (the "Software"), to deal
# in the Software without restriction, including without limitation the rights
# to use, copy, modify, merge, publish, distribute, sublicense, and/or sell
# copies of the Software, and to permit persons to whom the Software is
# furnished to do so, subject to the following conditions:
#
# The above copyright notice and this permission notice shall be included in all
# copies or substantial portions of the Software.
#
# THE SOFTWARE IS PROVIDED "AS IS", WITHOUT WARRANTY OF ANY KIND, EXPRESS OR
# IMPLIED, INCLUDING BUT NOT LIMITED TO THE WARRANTIES OF MERCHANTABILITY,
# FITNESS FOR A PARTICULAR PURPOSE AND NONINFRINGEMENT. IN NO EVENT SHALL THE
# AUTHORS OR COPYRIGHT HOLDERS BE LIABLE FOR ANY CLAIM, DAMAGES OR OTHER
# LIABILITY, WHETHER IN AN ACTION OF CONTRACT, TORT OR OTHERWISE, ARISING FROM,
# OUT OF OR IN CONNECTION WITH THE SOFTWARE OR THE USE OR OTHER DEALINGS IN THE
# SOFTWARE.

from __future__ import absolute_import
from __future__ import division
from __future__ import print_function

import tensorflow as tf
import numpy as np
import argparse
import facenet
import lfw
import os
import sys
from tensorflow.python.ops import data_flow_ops
from sklearn import metrics
from scipy.optimize import brentq
from scipy import interpolate

def main(args):
    with tf.Graph().as_default():
        with tf.Session() as sess:
            # Read the file containing the pairs used for testing
            pairs = lfw.read_pairs(os.path.expanduser(args.lfw_pairs))

            # Get the paths for the corresponding images and whether each pair matches
            paths, actual_issame = lfw.get_paths(os.path.expanduser(args.lfw_dir), pairs)

            image_paths_placeholder = tf.placeholder(tf.string, shape=(None,1), name='image_paths')
            labels_placeholder = tf.placeholder(tf.int64, shape=(None,1), name='labels')
            batch_size_placeholder = tf.placeholder(tf.int32, name='batch_size')
            control_placeholder = tf.placeholder(tf.int64, shape=(None,1), name='control')
            phase_train_placeholder = tf.placeholder(tf.bool, name='phase_train')

            nrof_preprocess_threads = 4
            image_size = (args.image_size, args.image_size)
            eval_input_queue = data_flow_ops.FIFOQueue(capacity=2000000,
                                        dtypes=[tf.string, tf.int64, tf.int64],
                                        shapes=[(1,), (1,), (1,)],
                                        shared_name=None, name=None)
            eval_enqueue_op = eval_input_queue.enqueue_many([image_paths_placeholder, labels_placeholder, control_placeholder], name='eval_enqueue_op')
            image_batch, label_batch = facenet.create_input_pipeline(eval_input_queue, image_size, nrof_preprocess_threads, batch_size_placeholder)

            # Load the model
            input_map = {'image_batch': image_batch, 'label_batch': label_batch, 'phase_train': phase_train_placeholder}
            facenet.load_model(args.model, input_map=input_map)

            # Get output tensor
            embeddings = tf.get_default_graph().get_tensor_by_name("embeddings:0")

            coord = tf.train.Coordinator()
            tf.train.start_queue_runners(coord=coord, sess=sess)

            evaluate(sess, eval_enqueue_op, image_paths_placeholder, labels_placeholder, phase_train_placeholder, batch_size_placeholder, control_placeholder,
                embeddings, label_batch, paths, actual_issame, args.lfw_batch_size, args.lfw_nrof_folds, args.distance_metric, args.subtract_mean,
                args.use_flipped_images, args.use_fixed_image_standardization)

def evaluate(sess, enqueue_op, image_paths_placeholder, labels_placeholder, phase_train_placeholder, batch_size_placeholder, control_placeholder,
        embeddings, labels, image_paths, actual_issame, batch_size, nrof_folds, distance_metric, subtract_mean, use_flipped_images, use_fixed_image_standardization):
    # Run forward pass to calculate embeddings
    print('Runnning forward pass on LFW images')

    # Enqueue one epoch of image paths and labels
    nrof_embeddings = len(actual_issame)*2  # nrof_pairs * nrof_images_per_pair
    nrof_flips = 2 if use_flipped_images else 1
    nrof_images = nrof_embeddings * nrof_flips
    labels_array = np.expand_dims(np.arange(0,nrof_images),1)
    image_paths_array = np.expand_dims(np.repeat(np.array(image_paths),nrof_flips),1)
    control_array = np.zeros_like(labels_array, np.int32)
    if use_fixed_image_standardization:
        control_array += np.ones_like(labels_array)*facenet.FIXED_STANDARDIZATION
    if use_flipped_images:
        # Flip every second image
        control_array += (labels_array % 2)*facenet.FLIP
    sess.run(enqueue_op, {image_paths_placeholder: image_paths_array, labels_placeholder: labels_array, control_placeholder: control_array})

    embedding_size = int(embeddings.get_shape()[1])
    assert nrof_images % batch_size == 0, 'The number of LFW images must be an integer multiple of the LFW batch size'
    nrof_batches = nrof_images // batch_size
    emb_array = np.zeros((nrof_images, embedding_size))
    lab_array = np.zeros((nrof_images,))
    for i in range(nrof_batches):
        feed_dict = {phase_train_placeholder:False, batch_size_placeholder:batch_size}
        emb, lab = sess.run([embeddings, labels], feed_dict=feed_dict)
        lab_array[lab] = lab
        emb_array[lab, :] = emb
        if i % 10 == 9:
            print('.', end='')
            sys.stdout.flush()
    print('')
    embeddings = np.zeros((nrof_embeddings, embedding_size*nrof_flips))
    if use_flipped_images:
        # Concatenate embeddings for flipped and non flipped version of the images
        embeddings[:,:embedding_size] = emb_array[0::2,:]
        embeddings[:,embedding_size:] = emb_array[1::2,:]
    else:
        embeddings = emb_array

    assert np.array_equal(lab_array, np.arange(nrof_images))==True, 'Wrong labels used for evaluation, possibly caused by training examples left in the input pipeline'

    # Compute accuracy and validation rate with k-fold cross-validation (10 folds by default)
    tpr, fpr, accuracy, val, val_std, far = lfw.evaluate(embeddings, actual_issame, nrof_folds=nrof_folds, distance_metric=distance_metric, subtract_mean=subtract_mean)

    print('Accuracy: %2.5f+-%2.5f' % (np.mean(accuracy), np.std(accuracy)))
    print('Validation rate: %2.5f+-%2.5f @ FAR=%2.5f' % (val, val_std, far))

    # Compute the AUC and the equal error rate (EER)
    auc = metrics.auc(fpr, tpr)
    print('Area Under Curve (AUC): %1.3f' % auc)
    eer = brentq(lambda x: 1. - x - interpolate.interp1d(fpr, tpr)(x), 0., 1.)
    print('Equal Error Rate (EER): %1.3f' % eer)

def parse_arguments(argv):
    parser = argparse.ArgumentParser()

    parser.add_argument('lfw_dir', type=str,
        help='Path to the data directory containing aligned LFW face patches.')
    parser.add_argument('--lfw_batch_size', type=int,
        help='Number of images to process in a batch in the LFW test set.', default=100)
    parser.add_argument('model', type=str,
        help='Could be either a directory containing the meta_file and ckpt_file or a model protobuf (.pb) file')
    parser.add_argument('--image_size', type=int,
        help='Image size (height, width) in pixels.', default=160)
    parser.add_argument('--lfw_pairs', type=str,
        help='The file containing the pairs to use for validation.', default='data/pairs.txt')
    parser.add_argument('--lfw_nrof_folds', type=int,
        help='Number of folds to use for cross validation. Mainly used for testing.', default=10)
    parser.add_argument('--distance_metric', type=int,
        help='Distance metric 0:euclidian, 1:cosine similarity.', default=0)
    parser.add_argument('--use_flipped_images',
        help='Concatenates embeddings for the image and its horizontally flipped counterpart.', action='store_true')
    parser.add_argument('--subtract_mean',
        help='Subtract feature mean before calculating distance.', action='store_true')
    parser.add_argument('--use_fixed_image_standardization',
        help='Performs fixed standardization of images.', action='store_true')
    return parser.parse_args(argv)

if __name__ == '__main__':
    main(parse_arguments(sys.argv[1:]))
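
The bookkeeping at the start of evaluate() can be followed without TensorFlow. The sketch below rebuilds the label, path and control lists in plain Python. The two flag constants are illustrative values assumed for this example (the real ones are defined in facenet.py), and build_eval_inputs and count_batches are hypothetical helper names.

```python
# Assumed flag values, for illustration only; the real constants live in facenet.py.
FIXED_STANDARDIZATION = 8
FLIP = 16


def build_eval_inputs(image_paths, use_flipped_images, use_fixed_standardization):
    """Return (labels, repeated_paths, control_flags) as plain lists."""
    nrof_flips = 2 if use_flipped_images else 1
    nrof_images = len(image_paths) * nrof_flips
    # Labels are simply running indices; they are used later to restore the order.
    labels = list(range(nrof_images))
    # Each path is repeated nrof_flips times in place, like np.repeat.
    repeated = [path for path in image_paths for _ in range(nrof_flips)]
    control = []
    for label in labels:
        flags = FIXED_STANDARDIZATION if use_fixed_standardization else 0
        if use_flipped_images and label % 2 == 1:
            flags += FLIP  # every second copy is the mirrored one
        control.append(flags)
    return labels, repeated, control


def count_batches(nrof_images, batch_size):
    """Number of forward passes; the image count must divide evenly."""
    if nrof_images % batch_size != 0:
        raise ValueError("image count must be a multiple of the batch size")
    return nrof_images // batch_size


print(build_eval_inputs(["a.png", "b.png"], True, True))
print(count_batches(12000, 100))  # 120
```

Assuming the standard pairs.txt with 6000 pairs (12000 images) and the default batch size of 100, this gives 120 batches. evaluate() prints one dot per 10 batches, which matches the 12 dots in the run log in this post.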

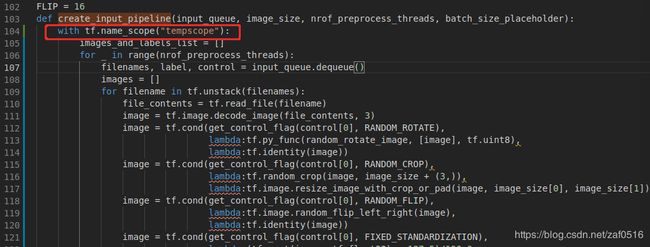

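Problem 2: KeyError: "The name 'decode_image/cond_jpeg/is_png' refers to an Operation not in the graph."

Solution: in facenet.py, find create_input_pipeline and add the statement with tf.name_scope("tempscope"): so that it wraps the function body. That resolves the error. This workaround appears to be needed only with TensorFlow 1.10 and later; the environment used here is 1.12.0. See: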

https://github.com/davidsandberg/facenet/issues/852

https://www.twblogs.net/a/5c7bd842bd9eee31cea5e951/zh-cn

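7. Download the pretrained model

Choose the 20170512-110547 model to download. If you cannot get past the firewall to reach the official link, the same trained model can be downloaded from a mirror. After downloading, extract it to any directory you like inside your working environment. In my case the extracted model lives in ./models/facenet/20170512-110547 under the facenet directory, which is the path used in the next step.

8. Test the model accuracy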

python ./src/validate_on_lfw.py ./datasets/lfw/lfw_mtcnnpy_160 ./models/facenet/20170512-110547

The result is as follows:

2019-07-22 11:20:19.900552: I tensorflow/core/platform/cpu_feature_guard.cc:141] Your CPU supports instructions that this TensorFlow binary was not compiled to use: SSE4.1 SSE4.2 AVX AVX2 FMA

2019-07-22 11:20:20.007808: I tensorflow/stream_executor/cuda/cuda_gpu_executor.cc:964] successful NUMA node read from SysFS had negative value (-1), but there must be at least one NUMA node, so returning NUMA node zero

2019-07-22 11:20:20.008594: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1432] Found device 0 with properties:

name: GeForce GTX 1080 Ti major: 6 minor: 1 memoryClockRate(GHz): 1.582

pciBusID: 0000:01:00.0

totalMemory: 10.92GiB freeMemory: 10.30GiB

2019-07-22 11:20:20.008611: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1511] Adding visible gpu devices: 0

2019-07-22 11:20:20.211275: I tensorflow/core/common_runtime/gpu/gpu_device.cc:982] Device interconnect StreamExecutor with strength 1 edge matrix:

2019-07-22 11:20:20.211304: I tensorflow/core/common_runtime/gpu/gpu_device.cc:988] 0

2019-07-22 11:20:20.211324: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1001] 0: N

2019-07-22 11:20:20.211525: I tensorflow/core/common_runtime/gpu/gpu_device.cc:1115] Created TensorFlow device (/job:localhost/replica:0/task:0/device:GPU:0 with 9959 MB memory) -> physical GPU (device: 0, name: GeForce GTX 1080 Ti, pci bus id: 0000:01:00.0, compute capability: 6.1)

WARNING:tensorflow:From /home/ubuntu/code/mywork/facenet/src/facenet.py:135: batch_join (from tensorflow.python.training.input) is deprecated and will be removed in a future version.

Instructions for updating:

Queue-based input pipelines have been replaced by `tf.data`. Use `tf.data.Dataset.interleave(...).batch(batch_size)` (or `padded_batch(...)` if `dynamic_pad=True`).

WARNING:tensorflow:From /home/ubuntu/anaconda3/lib/python3.6/site-packages/tensorflow/python/training/input.py:734: QueueRunner.__init__ (from tensorflow.python.training.queue_runner_impl) is deprecated and will be removed in a future version.

Instructions for updating:

To construct input pipelines, use the `tf.data` module.

WARNING:tensorflow:From /home/ubuntu/anaconda3/lib/python3.6/site-packages/tensorflow/python/training/input.py:734: add_queue_runner (from tensorflow.python.training.queue_runner_impl) is deprecated and will be removed in a future version.

Instructions for updating:

To construct input pipelines, use the `tf.data` module.

Model directory: ./models/facenet/20170512-110547

Metagraph file: model-20170512-110547.meta

Checkpoint file: model-20170512-110547.ckpt-250000

2019-07-22 11:20:22.297420: W tensorflow/core/graph/graph_constructor.cc:1265] Importing a graph with a lower producer version 21 into an existing graph with producer version 27. Shape inference will have run different parts of the graph with different producer versions.

WARNING:tensorflow:The saved meta_graph is possibly from an older release:

'model_variables' collection should be of type 'byte_list', but instead is of type 'node_list'.

WARNING:tensorflow:From ./src/validate_on_lfw.py:83: start_queue_runners (from tensorflow.python.training.queue_runner_impl) is deprecated and will be removed in a future version.

Instructions for updating:

To construct input pipelines, use the `tf.data` module.

Runnning forward pass on LFW images

............

Accuracy: 0.99183+-0.00320

Validation rate: 0.97467+-0.01477 @ FAR=0.00133

Area Under Curve (AUC): 1.000

Equal Error Rate (EER): 0.007

2019-07-22 11:21:03.284365: W tensorflow/core/kernels/queue_base.cc:285] _4_FIFOQueueV2_1: Skipping cancelled dequeue attempt with queue not closed

2019-07-22 11:21:03.284965: W tensorflow/core/kernels/queue_base.cc:277] _2_input_producer/fraction_of_32_full/fraction_of_32_full: Skipping cancelled enqueue attempt with queue not closed

2019-07-22 11:21:03.285518: W tensorflow/core/kernels/queue_base.cc:285] _4_FIFOQueueV2_1: Skipping cancelled dequeue attempt with queue not closed

2019-07-22 11:21:03.285981: W tensorflow/core/kernels/queue_base.cc:285] _1_FIFOQueueV2: Skipping cancelled dequeue attempt with queue not closed

2019-07-22 11:21:03.285992: W tensorflow/core/kernels/queue_base.cc:285] _1_FIFOQueueV2: Skipping cancelled dequeue attempt with queue not closed

2019-07-22 11:21:03.286087: W tensorflow/core/kernels/queue_base.cc:285] _4_FIFOQueueV2_1: Skipping cancelled dequeue attempt with queue not closed

2019-07-22 11:21:03.286098: W tensorflow/core/kernels/queue_base.cc:285] _4_FIFOQueueV2_1: Skipping cancelled dequeue attempt with queue not closed

2019-07-22 11:21:03.286542: W tensorflow/core/kernels/queue_base.cc:285] _1_FIFOQueueV2: Skipping cancelled dequeue attempt with queue not closed

2019-07-22 11:21:03.286566: W tensorflow/core/kernels/queue_base.cc:285] _1_FIFOQueueV2: Skipping cancelled dequeue attempt with queue not closed
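
To make the Accuracy and Validation rate @ FAR lines in the output more concrete, here is a small sketch of how such numbers can be computed from pair distances at a single threshold. It is an illustration with made-up distances, not the code in lfw.py, which additionally selects thresholds by cross-validation; the function names are hypothetical.

```python
def accuracy_at(threshold, distances, is_same):
    """Fraction of pairs classified correctly when 'distance < threshold' means same person."""
    correct = sum((d < threshold) == same for d, same in zip(distances, is_same))
    return correct / len(distances)


def val_far_at(threshold, distances, is_same):
    """Return (validation rate, false accept rate) at the given threshold.

    Validation rate: share of genuine pairs that are accepted.
    False accept rate: share of impostor pairs that are accepted.
    """
    accepted_same = sum(1 for d, s in zip(distances, is_same) if s and d < threshold)
    accepted_diff = sum(1 for d, s in zip(distances, is_same) if not s and d < threshold)
    n_same = sum(1 for s in is_same if s)
    n_diff = len(is_same) - n_same
    return accepted_same / n_same, accepted_diff / n_diff


# Made-up embedding distances: three genuine pairs, then three impostor pairs.
distances = [0.2, 0.5, 0.9, 1.4, 1.1, 0.7]
is_same = [True, True, True, False, False, False]

print(accuracy_at(1.0, distances, is_same))
print(val_far_at(1.0, distances, is_same))
print(val_far_at(0.6, distances, is_same))
```

Lowering the threshold from 1.0 to 0.6 removes the single false accept in this toy data but also rejects one genuine pair, which is the trade-off that the "Validation rate @ FAR" figure summarises.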
