RS实战2——LFM算法实践(基于movielens数据集)

文章目录

- 1.movielens数据集介绍

- 1.1数据格式

- 1.2稀疏度

- 2.工具函数书写

- 2.1 get_item_info

- 2.2 get_ave_score

- 2.3 get_train_data

- 3.LFM模型

- 3.1模型初始化 init_model

- 3.2计算两个向量的余弦距离model_predict

- 3.3LFM训练模型 lfm_train

- 3.4给出推荐的电影列表 give_recom_result

- 3.5 辅助交互 ana_recom_result

- 3.6综合 model_train_process

- 4.运行结果:

- 4.1工具函数运行结果

- 4.2lfm模型运行结果

https://github.com/zhangdiandian0127/RecommendationSystem-Practice

1.movielens数据集介绍

MovieLens数据集包含多个用户对多部电影的评级数据,也包括电影元数据信息和用户属性信息。

这个数据集经常用来做推荐系统,机器学习算法的测试数据集。尤其在推荐系统领域,很多著名论文都是基于这个数据集的。(PS: 它是某次具有历史意义的推荐系统竞赛所用的数据集)。

下载地址为:http://files.grouplens.org/datasets/movielens/,有好几种版本,对应不同数据量,为训练方便起见,本文所用的数据为1M的部分数据。

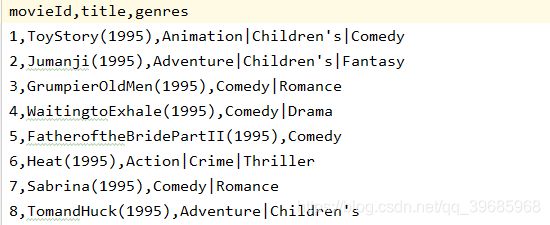

1.1数据格式

| movieId | title | genres |

|---|---|---|

| 电影id | 电影名称 | 电影类别 |

| userId | movieId | rating | timestamp |

|---|---|---|---|

| 用户id | 电影id | 评分 | 时间戳 |

1.2稀疏度

选择后的用户数:48,评分数:6889,电影数:27278

稀疏度:1-(6889/(48*27278)) ≈ 0.99474

2.工具函数书写

2.1 get_item_info

- 作用:处理item信息

- 输入:item的信息文件

- 返回:一个dict,key:a dict:key itemid,value:[title,genre]

def get_item_info(input_file):

"""

:param input_file: item info file

:return: a dict:key itemid,value:[title,genre]

"""

if not os.path.exists(input_file):

return {}

item_info = {} #用来存放处理后的文本信息,是一个dict字典类型

linenum = 0

fp = open(input_file,'r',encoding='utf-8')

for line in fp:

if linenum == 0:

linenum += 1

continue

item = line.strip().split(',')

if len(item) < 3:

continue

elif len(item) == 3:

itemid,title,genre = item[0],item[1],item[2]

elif len(item) > 3:

itemid = item[0]

genre = item[-1]

title = ",".join(item[1:-1])

item_info[itemid] = [title,genre]

fp.close()

return item_info

2.2 get_ave_score

- 作用:获取各item收到的平均打分

- 输入:ratings 评分文件

- 返回:a dict,key:itemid,value:ave_score

def get_ave_score(input_file):

"""

get item ave rating score

:param input_file: user rating file

:return: a dict,key:itemid,value:ave_score

"""

if not os.path.exists(input_file):

return {}

linenum = 0

record_dict = {}

score_dict = {}

fp = open(input_file,'r',encoding='utf-8')

for line in fp:

if linenum == 0:

linenum += 1

continue

item = line.strip().split(',')

if len(item) < 4:

continue

userid,itemid,rating = item[0],item[1],float(item[2])

if itemid not in record_dict:

record_dict[itemid] = [0,0]

record_dict[itemid][0] += 1

record_dict[itemid][1] += rating

fp.close()

for itemid in record_dict:

score_dict[itemid] = round(record_dict[itemid][1]/record_dict[itemid][0],3)

return score_dict

2.3 get_train_data

- 作用:获取训练数据

- 输入:ratings 评分文件

- 返回:a list:[(userid,itemid,label),(userid1,itemid1,label),…],每一个三元组都是一个userid对一个itemid的标签信息

- 值得注意的是我们这里人为定义的:大于等于4分的是正样本,反之为负样本

def get_train_data(input_file):

"""

get train data for LFM model train

:param input_file: user item rating file

:return: a list:[(userid,itemid,label),(userid1,itemid1,label)]

"""

if not os.path.exists(input_file):

return []

score_dict = get_ave_score(input_file)

neg_dict = {}

pos_dict = {}

train_data = []

linenum = 0

score_thr = 4.0#定义以4.0为分界线,pos和neg

fp = open(input_file,'r',encoding='utf-8')

for line in fp:

if linenum == 0:

linenum += 1

continue

item = line.strip().split(',')

if len(item) < 4:

continue

userid,itemid,rating = item[0],item[1],float(item[2])

if userid not in pos_dict:

pos_dict[userid] = []

if userid not in neg_dict:

neg_dict[userid] = []

if rating >= score_thr:

pos_dict[userid].append((itemid,1))

else:

score = score_dict.get(itemid,0)

neg_dict[userid].append((itemid,score))

fp.close()

for userid in pos_dict:

data_num = min(len(pos_dict[userid]),len(neg_dict.get(userid,[])))

if data_num > 0:

train_data += [(userid,zuhe[0],zuhe[1])for zuhe in pos_dict[userid]][:data_num]

else:

continue

sorted_neg_list = sorted(neg_dict[userid],key = lambda element:element[1],reverse=True)[:data_num]

train_data += [(userid,zuhe[0],0) for zuhe in sorted_neg_list]

# if userid == "47":

# print(len(pos_dict[userid]))

# print(len(neg_dict[userid]))

# print(sorted_neg_list)

return train_data

3.LFM模型

3.1模型初始化 init_model

- 作用:用服从标准正态分布的数据初始化向量(即理论篇中说到的Puk和Qki)

- 输入:向量长度

- 返回:一组服从标准正态分布的数据

def init_model(vector_len):

"""

:param vector_len: the len of vector

:return: a ndarray

"""

return np.random.randn(vector_len)

3.2计算两个向量的余弦距离model_predict

- 作用:计算两个向量的余弦距离

- 输入:两个向量

- 返回:余弦距离

def model_predict(user_vector,item_vector):

"""

distance between user_vector and item_vector

:param user_vector: model produce user vector

:param item_vector: model produce item vector

:return: a num

"""

res = np.dot(user_vector,item_vector)/(np.linalg.norm(user_vector)*np.linalg.norm(item_vector))

return res

3.3LFM训练模型 lfm_train

def lfm_train(train_data,F,alpha,beta,step):

"""

:param train_data: train_data for lfm

:param F: user vector len,item vector len

:param alpha: regularization factor

:param beta: learning rate

:param step: iteration num

:return: dict: key itemid,value :list

dict :key userid,value:list

"""

user_vec = {}

item_vec = {}

for step_index in range(step):

for data_instance in train_data:

userid,itemid,label = data_instance

if userid not in user_vec:

user_vec[userid] = init_model(F) #初始化,F个参数,标准正态分布

if itemid not in item_vec:

item_vec[itemid] = init_model(F) #初始化

#模型迭代部分

delta = label - model_predict(user_vec[userid],item_vec[itemid]) #预测与实际的差

for index in range(F):#index就是f

#出于工程角度的考虑,这里没有照搬公式的2倍,而是1倍,效果是一样的

user_vec[userid][index] += beta*(delta*item_vec[itemid][index] - alpha*user_vec[userid][index])#这里没有照搬ppt的2倍

item_vec[itemid][index] += beta*(delta*user_vec[userid][index] - alpha*item_vec[itemid][index])

beta = beta * 0.9 #参数衰减,目的是:在每一轮迭代的时候,接近收敛时,能慢一点

return user_vec,item_vec

3.4给出推荐的电影列表 give_recom_result

def give_recom_result(user_vec,item_vec,userid):

"""

use lfm model result give fix userid recom result

:param user_vec: model result

:param item_vec: model result

:param userid:fix userid

:return:a list:[(itemid,score)(itemid1,score1)]

"""

fix_num = 5 #排序,推荐前fix_num个结果

if userid not in user_vec:

return []

record = {}

recom_list = []

user_vector = user_vec[userid]

for itemid in item_vec:

item_vector = item_vec[itemid]

res = np.dot(user_vector,item_vector)/(np.linalg.norm((user_vector)*np.linalg.norm(item_vector))) #余弦距离

record[itemid] = res

record_list = list(record.items())

#排序

for zuhe in sorted(record.items(), key=lambda rec: record_list[1], reverse=True)[:fix_num]:

itemid = zuhe[0]

score = round(zuhe[1],3)

recom_list.append((itemid,score))

return recom_list

3.5 辅助交互 ana_recom_result

def ana_recom_result(train_data,userid,recom_list):

"""

debug recom result for userid

:param train_data: train data for userid

:param userid: fix userid

:param recom_list: recom result by lfm

:return: no return

"""

item_info = read.get_item_info("../data/movies.txt")

for data_instance in train_data:

tmp_userid,itemid,label = data_instance

if tmp_userid == userid and label == 1:

print(item_info[itemid])

print("前n个推荐电影为:")

cnt = 1

for zuhe in recom_list:

print(cnt,item_info[zuhe[0]])

cnt += 1

3.6综合 model_train_process

def model_train_process():

"""

test lfm model train

:return:

"""

train_data = read.get_train_data("../data/ratings.txt")

user_vec,item_vec = lfm_train(train_data,50,0.01,0.1,50)

recom_list = give_recom_result(user_vec,item_vec,'4')

print(recom_list)

ana_recom_result(train_data,'4',recom_list)

return user_vec,item_vec

4.运行结果:

4.1工具函数运行结果

if __name__ == '__main__':

# item_dict = get_item_info("..//data//movies.txt")

# print(len(item_dict))

# print(item_dict["1"])

# print(item_dict["11"])

# score_dict = get_ave_score("../data/ratings.txt")

# print(len(score_dict))

# print(score_dict["1"])

train_data = get_train_data("../data/ratings.txt")

print(train_data)

print(len(train_data))

# for a,b,c in train_data:

# if type(b) == type([]):

# print(b)

4.2lfm模型运行结果

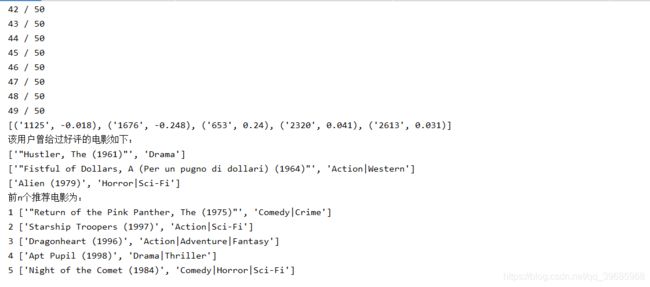

if __name__ == '__main__':

model_train_process()

这里,由于model_train_process()函数中,指定了,userid为12,即给出userid == 4的前fix_num即5个推荐结果

看起来,推荐结果还是比较符合用户胃口的——crime,action,horror。下一步将在更大的数据集上跑模型,并设置验证集,计算精确率、召回率等指标。