显著性目标检测之Towards High-Resolution Salient Object Detection

Towards High-Resolution Salient Object Detection

文章目录

- Towards High-Resolution Salient Object Detection

- 主要贡献

- 针对问题

- 主要方法

- GSN&LRN

- APS

- GLFN

- 实验细节

- 相关链接

原始文档: https://www.yuque.com/lart/papers/fgwcg5

主要贡献

- 提供了第一个高分辨率的显著性目标检测数据集

- 指出了当前显著性检测模型在高分辨率图像任务上的不足, 这里从高分辨率图像数据集入手, 给出了一个研究的新思路

- 针对高分辨率图像给出了自己的解决方案: 通过集成both global semantic information and local high-resolution details来应对这个挑战

- 提出了一种patch的采集策略, 将局部的处理集中在目标的边界部分(所谓较难的区域), 从而更有效的实现patch的处理.

针对问题

首先高分辨率显著性检测存在的必要性:

- 对于密集预测任务(例如, 分割任务), 低分辨率的图像会导致边缘的模糊

- 现在的大多数电子产品获取的图像大多数分辨率较高, 我们需要针对它们进行处理, 所以有必要研究高分辨率图像上的显著性目标检测

现有方法在高分辨率目标检测任务上的不足:

- 现有数据集多为低分辨率图片, 模型也大多使用低分辨率图片作为输入进行训练

- 现有模型往往通过下采样的过程来获得高层的语义信息, 在不断地降低分辨率的过程中, 细节信息逐渐丢失, 对于高分辨率图像而言尤其如此.

现有研究在高分辨率显著性目标检测任务上的不足:

- 目前没有专门的高分辨率显著性目标检测数据集, 这样使得现有的研究往往更多基于低分辨率图像开展, 很少有人研究在高分辨率图像上的显著性目标检测任务

现有的处理高分辨率图像的主要思路:

- The first is simply increasing the input size to maintain a relative high resolution and object details after a series of pooling operations. However, the large input size results in significant increases in memory usage. Moreover, it remains a question that if we can effectively extract details from lower-level layers in such a deep network through back propagation.

- The second method is partitioning inputs into patches and making predictions patch-by-patch. However, this type of method is time-consuming and can easily be affected by background noise.

- The third one includes some post-processing methods such as CRF or graph cuts, which can address this issue to a certain degree. But very few works attempted to solve it directly within the neural network training process.

As a result, the problem of applying DNNs for high-resolution salient object detection is fairly unsolved.

主要方法

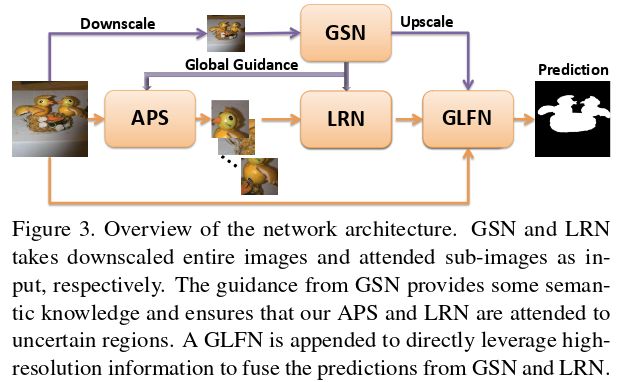

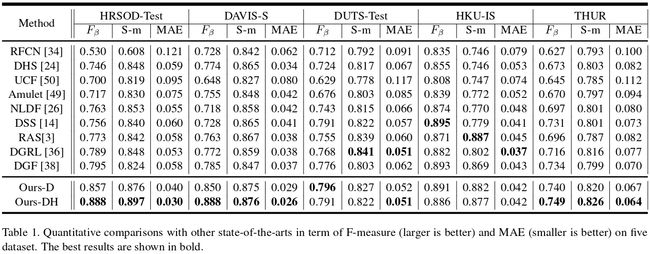

这里给出了完整的网络结构. 各个模块主要作用如下:

- Global Semantic Net-work (GSN): for extracting semantic information

- Local Refinement Network (LRN): for optimizing local details

- Attended Patch Sampling (APS) scheme: is proposed to enforce LRN to focus on uncertain regions, and this scheme provides a good trade-off between performance and efficiency

- Global-Local Fusion Network (GLFN): is proposed to enforce spatial consistency and further boost performance at high resolution.

GSN旨在从全局角度提取语义知识。在GSN的指导下,LRN旨在细化不确定的子区域。最后,GLFN将高分辨率图像作为输入,并进一步加强了GSN和LRN融合预测的空间一致性。

网络流程:

- 输入图像I通过下采样获得低分辨率图片(384x384), 之后通过GSN处理后得到粗略预测, 之后通过上采样恢复分原始辨率, 得到Fi.

- 然后将图像送入APS模块, 来生成M个子图像块, 其中对于第i个输入图像Ii的划分的M个块中的第m个块可以表示为PIim.

- 之后将每个PIim送入LRN获得细化的显著性预测结果RIim, 在这个过程中用到了来自GSN的语义引导.

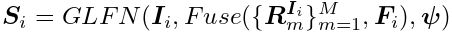

- GSN和LRN的输出经过融合处理后, 送到GLFN得到最终的预测Si.

GSN&LRN

对于这里的构造而言, We adopt the same backbone for GSN and LRN. Our model is simply built on the FCN architecture with the pre-trained 16-layer VGG network.

对于GSN和LRN而言, 由于输入的内容不同, 所以有着各自的缺点:

- The saliency maps generated by GSN are based on the full image and embedded with rich contextual information. As a result, GSN is competent in giving a rough saliency prediction but insufficient to precisely localize salient objects.

- In contrary, LRN takes sub-images as input, avoiding down-sampling which results in the loss of details. However, since sub-images are too local to indicate which area is more salient, LRN may be confused about which region should be highlighted. Also, LRN alone may have false alarms in some locally salient regions.

因此, 文章引入了semantic guidance from GSN to LRN, 这能够enhance global contextual knowledge while maintain high-resolution details. 如图4b所示, 给定GSN的粗略预测Fi, 首先根据LRN中的patch PIim的位置, 从Fi中剪裁一个patch PFim. 然后将PFim和LRN对应的特征图进行拼接进行处理.

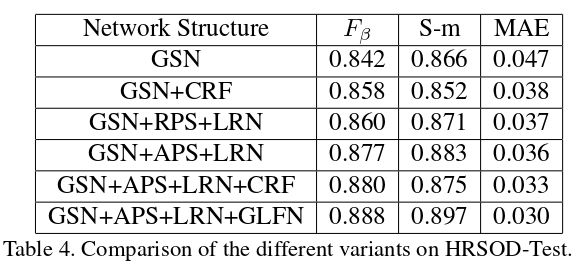

APS

传统的基于patch的方法, 通常利用滑窗或者超像素的方法来推断出每个patch, 这样很费时间. 这里注意到来自GSN的粗略显著性图实际上对于大多数像素而言预测是比较正确的, 所以这里考虑基于这样一种知识, 来更有效的提取patch.

这里实际上够早了一种分层级的预测方法, GSN可以为容易的区域提供良好的预测, 而LRN则是对于复杂的难的区域进行细致的预测, 使得模型更加有效和准确.

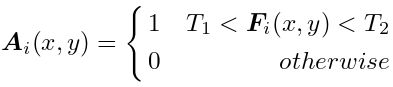

通过GSN的结果的引导, 可以生成附加在不确定区域的子图. 这里首先使用注意力图Ai来指示所有不确定的像素:

实际上所有在阈值T1和T2之间的像素值都要被认定为是不确定区域, 指示为1. 之后依据如下的算法进行确定:

算法中, 使用了几个常数:

- D是基本剪裁尺寸, 文中使用384

- n用来控制不同patch的重叠程度, 文中使用5

- r是一个用来生成不同大小的子图的随机值, 文中满足: r ∈ [ − D 6 , D 6 ] r \in [-\frac{D}{6}, \frac{D}{6}] r∈[−6D,6D]

- T1和T2是两个阈值, 分别设置为50和200

- w表示Ai中non-zero区域的宽度

- XL和XR是Ai中非零区域最左和最右的x坐标

We have performed grid search for setting these hyper-parameters and found that the results were not sen-sitive to their specific choices.

GLFN

最终的预测结果通过融合来自GSN和LRN的结果可以获得. 关于融合的策略, 一种简单的方式是直接用LRN结果中的RIim来替换对应于GSN结果Fi中的对应的不确定区域. 对重叠区域取平均即可. 然而, this kind of fusion lacks spatial consistency and does not leverage rich details in original high-resolution images.

这里提出直接训练网络来整合高分辨率的信息来帮助GSN和LRN的融合. To maintain all the high-resolution details from images, this network should not include any pooling layers or convolutional layers with large strides. 由于GPU内存的限制, 这里提出了一个轻量的网络Global-Local Fusion Network (GLFN)来处理这个问题. 具体结构如图5.

- High-resolution RGB images and combined maps from GSN and LRN are concatenated together to be the inputs of GLFN.

- GLFN consists of some convolution layers with dense connectivity. We set the growth rate g to be 2 for saving memory. We let the bottleneck layers (1×1 convolution) produce 4g feature maps.

- On the top of these densely connected layers, we add four dilated convolutional layers to enlarge receptive field. All the dilated convolutional layers have the same kernel size and output channels, i.e., k = 3 and c = 2. The rates of the four dilated convolutional layers are set with dilation = 1,6,12,18, respectively.

- At last, a 3×3 convolution is appended for final prediction.

- What is worth mentioning is that our proposed GLFN has an extremely small model size (i.e., 11.9 kB).

实验细节

- All experiments are conducted on a PC with an i7-8700 CPU and a 1080 Ti GPU, with the Caffe toolbox.

- In our method, every stage is trained to minimize a pixelwise softmax loss function, by using the stochastic gradient descent (SGD).

- Empirically, the momentum parameter is set to 0.9 and the weight decay is set to 0.0005.

- For GSN and LRN:

- The inputs are first warped into 384×384 and the batch size is set to 32.

- The weights in block 1 to block 5 are initialized with the pre-trained VGG model, while weight parameters of newly-added convolutional layers are randomly initialized by using the “msra” method.

- The learning rates of the pre-trained and newly-added layers are set to 1e-3 and 1e-2, respectively.

- GLFN:

- It is trained from scratch, and its weight parameters of convolutional layers are also randomly initialized by using the “msra” method.

- Its inputs are warped into 1024×1024 and the batch size is set to 2.

相关链接

- http://openaccess.thecvf.com/content_ICCV_2019/papers/Zeng_Towards_High-Resolution_Salient_Object_Detection_ICCV_2019_paper.pdf