An Analysis of How the ffplay Player Works

*****************************************************************************

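* ffplay blog series: *

* An analysis of how the ffplay player works *

* How ffplay synchronizes audio and video *

* Analysis of ffplay's playback-control code *

* Subjective video quality comparison tool (Visual comparison tool based on ffplay) *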

*****************************************************************************

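ffplay is a full-featured open-source player built on the FFmpeg API. Understanding how ffplay works goes a long way toward understanding how media players work in general. Few articles describe ffplay systematically, however, so this post analyzes ffplay's internals based on the ffmpeg-3.1.1 source code.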

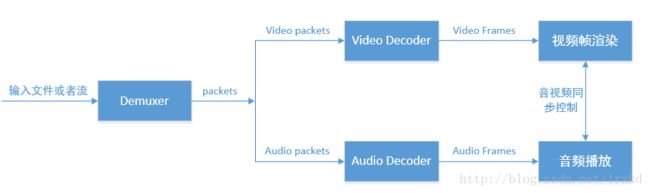

Player framework

First, the basic framework of a simple, generic player is shown below:

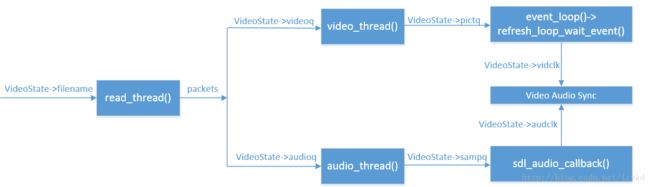

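Overall framework of ffplay

In ffplay, the threads play the following roles:

The read_thread() thread plays the role of the Demuxer in the diagram.

The video_thread() thread plays the role of the Video Decoder in the diagram.

The audio_thread() thread plays the role of the Audio Decoder in the diagram.

In the main thread, event_loop() repeatedly calls refresh_loop_wait_event(), which plays the role of video rendering in the diagram.

The callback function sdl_audio_callback plays the role of audio playback. The VideoState structure acts as the messenger between the threads.

ffplay's basic framework is therefore as follows: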

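1. The read_thread thread reads the file, demultiplexes the video and audio into packets, and pushes the packets into the packet queues: the Video Packet Queue and the Audio Packet Queue (subtitles are ignored here).

2. The video_thread thread reads from the Video Packet Queue, decodes the video packets into Video Frames, and pushes them into the Video Frame Queue.

3. The audio_thread thread reads from the Audio Packet Queue, decodes the audio packets into Audio Frames, and pushes them into the Audio Frame Queue.

4. The main function (main thread) -> event_loop() -> refresh_loop_wait_event() reads video frames from the Video Frame Queue and displays them through SDL. This step also contains the audio/video synchronization logic.

5. The SDL callback sdl_audio_callback() reads audio frames from the Audio Frame Queue and processes them. It then hands the data back to SDL, which plays it.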

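Data flow in ffplay

Following the data flow helps explain how the player works. ffplay has two key kinds of queues: packet queues and frame queues. Adding an element to a queue is called production, and removing one is called consumption. Analyzing who produces into each queue and who consumes from it shows how the data flows.

Production and consumption of the Packet Queues and the Video (Audio) Frame Queues

Analysis of read_thread()

read_thread() produces into the packet queues: the Video Packet Queue (is->videoq) and the Audio Packet Queue (is->audioq).

Call chain: read_thread() -> packet_queue_put() -> packet_queue_put_private()

The core of read_thread() and the related functions: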

```c
static int read_thread(void *arg)
{
    VideoState *is = arg;
    ......
    for (;;) {
        ret = av_read_frame(ic, pkt); // read the next packet from the source into pkt
        /* check if packet is in play range specified by user, then queue, otherwise discard */
        stream_start_time = ic->streams[pkt->stream_index]->start_time;
        // duration is the playback length passed to ffplay on the command line (-t),
        // so check whether the packet's timestamp falls within it
        pkt_ts = pkt->pts == AV_NOPTS_VALUE ? pkt->dts : pkt->pts;
        pkt_in_play_range = duration == AV_NOPTS_VALUE ||
                (pkt_ts - (stream_start_time != AV_NOPTS_VALUE ? stream_start_time : 0)) *
                av_q2d(ic->streams[pkt->stream_index]->time_base) -
                (double)(start_time != AV_NOPTS_VALUE ? start_time : 0) / 1000000
                <= ((double)duration / 1000000);
        if (pkt->stream_index == is->audio_stream && pkt_in_play_range) {
            packet_queue_put(&is->audioq, pkt);    // audio packet: put it into the audio queue (is->audioq)
        } else if (pkt->stream_index == is->video_stream && pkt_in_play_range
                   && !(is->video_st->disposition & AV_DISPOSITION_ATTACHED_PIC)) {
            packet_queue_put(&is->videoq, pkt);    // video packet: put it into the video queue (is->videoq)
        } else if (pkt->stream_index == is->subtitle_stream && pkt_in_play_range) {
            packet_queue_put(&is->subtitleq, pkt); // subtitle packet: put it into the subtitle queue
        } else {
            av_packet_unref(pkt);
        }
    }
    ......
}
```
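The pkt_in_play_range expression is easier to read in isolation, so the sketch below pulls it into a standalone function. `Rational`, `q2d` and `SKETCH_NOPTS_VALUE` are hypothetical stand-ins for FFmpeg's `AVRational`, `av_q2d` and `AV_NOPTS_VALUE` (which has the value `INT64_MIN`), so the sketch compiles without FFmpeg headers. As in ffplay, `start_time` and `duration` are in microseconds. Like the original expression, it only checks the upper end of the window, so packets earlier than `start_time` are not rejected here.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical stand-ins so the sketch builds without FFmpeg headers:
 * SKETCH_NOPTS_VALUE has the same value as AV_NOPTS_VALUE (INT64_MIN),
 * and Rational/q2d mirror AVRational/av_q2d. */
#define SKETCH_NOPTS_VALUE INT64_MIN

typedef struct { int num, den; } Rational;

static double q2d(Rational r) { return (double)r.num / r.den; }

/* Same test as pkt_in_play_range in read_thread(). The packet's time is measured
 * from the stream start, then from the user's start_time, and must be no later
 * than duration. start_time and duration are in microseconds. */
static int pkt_in_play_range(int64_t pkt_ts, int64_t stream_start_time,
                             Rational time_base, int64_t start_time,
                             int64_t duration)
{
    assert(time_base.den != 0);
    if (duration == SKETCH_NOPTS_VALUE)
        return 1; /* no duration given: every packet is in range */
    double pkt_sec = (pkt_ts - (stream_start_time != SKETCH_NOPTS_VALUE ? stream_start_time : 0))
                     * q2d(time_base);
    double start_sec = (double)(start_time != SKETCH_NOPTS_VALUE ? start_time : 0) / 1000000;
    return pkt_sec - start_sec <= (double)duration / 1000000;
}
```

For example, with `-ss 15 -t 10` and a 90 kHz time base, a packet stamped 20 s into the stream is only 5 s past the start, so it is queued.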

```c
static int packet_queue_put(PacketQueue *q, AVPacket *pkt)
{
    int ret;

    SDL_LockMutex(q->mutex); // the queue is written and read from different threads, so access is serialized by a mutex
    ret = packet_queue_put_private(q, pkt);
    SDL_UnlockMutex(q->mutex);

    if (pkt != &flush_pkt && ret < 0)
        av_packet_unref(pkt);

    return ret;
}

static int packet_queue_put_private(PacketQueue *q, AVPacket *pkt)
{
    // PacketQueue is a linked list; new elements are appended at the tail
    MyAVPacketList *pkt1;

    if (q->abort_request)
       return -1;

    pkt1 = av_malloc(sizeof(MyAVPacketList)); // allocate a list node for the packet
    if (!pkt1)
        return -1;
    pkt1->pkt = *pkt;
    pkt1->next = NULL;
    if (pkt == &flush_pkt)
        q->serial++;                   // a flush packet (queued on seek) starts a new serial
    pkt1->serial = q->serial;

    if (!q->last_pkt)
        q->first_pkt = pkt1;
    else
        q->last_pkt->next = pkt1;      // link the new node after the current tail
    q->last_pkt = pkt1;                // update the tail pointer
    q->nb_packets++;                   // one more packet in the list
    q->size += pkt1->pkt.size + sizeof(*pkt1); // update the total byte size of the list
    q->duration += pkt1->pkt.duration; // update the total duration of all queued packets
    /* XXX: should duplicate packet data in DV case */
    SDL_CondSignal(q->cond);           // wake up packet_queue_get(): a new packet is available
    return 0;
}
```

Analysis of video_thread()

video_thread() consumes the Video Packet Queue (is->videoq) and produces into the Video Frame Queue (is->pictq).

Call chain for consuming the Video Packet Queue: video_thread() -> get_video_frame() -> packet_queue_get(&is->videoq)

Call chain for producing into the Video Frame Queue: video_thread() -> queue_picture() -> frame_queue_push(&is->pictq)

The core of video_thread() and the related code:

```c
static int video_thread(void *arg)
{
    ......
    for (;;) {
        ret = get_video_frame(is, frame, &pkt, &serial); // get a decoded picture (frame)
        ......
        ret = queue_picture(is, frame, pts, duration, av_frame_get_pkt_pos(frame), serial); // put the decoded picture into the Video Frame Queue
    }
    ......
}

// Get a decoded picture (frame)
static int get_video_frame(VideoState *is, AVFrame *frame, AVPacket *pkt, int *serial)
{
    int got_picture;

    if (packet_queue_get(&is->videoq, pkt, 1, serial) < 0) // take a packet from the queue
        return -1;
    ......
    if (avcodec_decode_video2(is->video_st->codec, frame, &got_picture, pkt) < 0) // decode the packet into a picture (frame)
        return 0;
    ......
}
```

```c
// packet_queue_get() takes the first packet off the packet queue list. It is the reading
// counterpart of packet_queue_put_private(), which does the writing.
static int packet_queue_get(PacketQueue *q, AVPacket *pkt, int block, int *serial)
{
    MyAVPacketList *pkt1;
    int ret;

    SDL_LockMutex(q->mutex); // the queue is written and read from different threads, so access is serialized by a mutex

    for (;;) {
        if (q->abort_request) {
            ret = -1;
            break;
        }

        pkt1 = q->first_pkt;       // take the first packet in the list (the head)
        if (pkt1) {
            q->first_pkt = pkt1->next; // advance the head to the second element
            if (!q->first_pkt)
                q->last_pkt = NULL;
            q->nb_packets--;
            q->size -= pkt1->pkt.size + sizeof(*pkt1);
            q->duration -= pkt1->pkt.duration; // keep the total duration in sync with packet_queue_put_private()
            *pkt = pkt1->pkt;          // return the contents of the first packet
            if (serial)
                *serial = pkt1->serial;
            av_free(pkt1);
            ret = 1;
            break;
        } else if (!block) {           // the list is empty and the call is non-blocking: return 0 immediately
            ret = 0;
            break;
        } else {
            SDL_CondWait(q->cond, q->mutex); // the list is empty (blocking call): wait for read_thread to queue a packet
        }
    }
    SDL_UnlockMutex(q->mutex);
    return ret;
}
```
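The sketch below shows packet_queue_put_private() and packet_queue_get() working together, including the serial bump on a flush packet, in a minimal PacketQueue-like structure. It is not ffplay's code: it uses POSIX threads instead of SDL, and `Packet`, `Node` and the `pq_*` functions are hypothetical simplifications. ffplay recognizes a flush by comparing the packet against the address of the global `flush_pkt`, whereas the sketch uses an `is_flush` flag.

```c
#include <assert.h>
#include <pthread.h>
#include <stdlib.h>

/* Hypothetical stand-in for AVPacket: only a payload size and a flush flag. */
typedef struct { int size; int is_flush; } Packet;

typedef struct Node { Packet pkt; int serial; struct Node *next; } Node;

typedef struct {
    Node *first, *last;                 /* like first_pkt / last_pkt */
    int nb_packets, size, serial, abort_request;
    pthread_mutex_t mutex;
    pthread_cond_t cond;
} PacketQueue;

static void pq_init(PacketQueue *q)
{
    q->first = q->last = NULL;
    q->nb_packets = q->size = q->serial = q->abort_request = 0;
    pthread_mutex_init(&q->mutex, NULL);
    pthread_cond_init(&q->cond, NULL);
}

/* Producer side (read_thread): append at the tail under the lock. */
static int pq_put(PacketQueue *q, Packet pkt)
{
    int ret = -1;
    assert(pkt.size >= 0);
    pthread_mutex_lock(&q->mutex);
    Node *n = q->abort_request ? NULL : malloc(sizeof(*n));
    if (n) {
        n->pkt = pkt;
        n->next = NULL;
        if (pkt.is_flush)
            q->serial++;                /* a flush starts a new serial */
        n->serial = q->serial;
        if (!q->last)
            q->first = n;
        else
            q->last->next = n;
        q->last = n;
        q->nb_packets++;
        q->size += pkt.size + (int)sizeof(*n);
        pthread_cond_signal(&q->cond);  /* wake a consumer blocked in pq_get() */
        ret = 0;
    }
    pthread_mutex_unlock(&q->mutex);
    return ret;
}

/* Consumer side (decoder threads): 1 = got a packet, 0 = empty and
 * non-blocking, -1 = aborted. */
static int pq_get(PacketQueue *q, Packet *pkt, int block, int *serial)
{
    int ret;
    pthread_mutex_lock(&q->mutex);
    for (;;) {
        if (q->abort_request) { ret = -1; break; }
        Node *n = q->first;
        if (n) {
            q->first = n->next;
            if (!q->first)
                q->last = NULL;
            q->nb_packets--;
            q->size -= n->pkt.size + (int)sizeof(*n);
            *pkt = n->pkt;
            if (serial)
                *serial = n->serial;
            free(n);
            ret = 1;
            break;
        } else if (!block) {
            ret = 0;
            break;
        } else {
            pthread_cond_wait(&q->cond, &q->mutex); /* wait for a producer */
        }
    }
    pthread_mutex_unlock(&q->mutex);
    return ret;
}

/* Like packet_queue_abort(): set the flag and wake any blocked consumer. */
static void pq_abort(PacketQueue *q)
{
    pthread_mutex_lock(&q->mutex);
    q->abort_request = 1;
    pthread_cond_signal(&q->cond);
    pthread_mutex_unlock(&q->mutex);
}
```

A consumer that remembers the serial of the packets it decodes can tell that a flush happened, because the serial changes. It can then discard stale data. audio_decode_frame(), shown later, applies the same idea to decoded frames.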

```c
// queue_picture() appends the decoded Video Frame (src_frame) to the tail of the Video Frame Queue
static int queue_picture(VideoState *is, AVFrame *src_frame, double pts, double duration, int64_t pos, int serial)
{
    Frame *vp;

    // Check whether the video frame queue has a free Frame slot; if so, it is returned as vp
    // and the picture in src_frame is then copied into it
    if (!(vp = frame_queue_peek_writable(&is->pictq)))
        return -1;

    vp->sar = src_frame->sample_aspect_ratio;

    /* alloc or resize hardware picture buffer */
    // Does vp->bmp need to be (re)allocated? vp->bmp is an SDL_Overlay that holds the YUV data shown on the SDL surface
    if (!vp->bmp || vp->reallocate || !vp->allocated ||
        vp->width  != src_frame->width ||
        vp->height != src_frame->height) {
        SDL_Event event;

        vp->allocated  = 0;
        vp->reallocate = 0;
        vp->width = src_frame->width;
        vp->height = src_frame->height;

        /* the allocation must be done in the main thread to avoid
           locking problems. */
        event.type = FF_ALLOC_EVENT;
        event.user.data1 = is;
        SDL_PushEvent(&event); // on FF_ALLOC_EVENT, event_loop() in the main thread calls alloc_picture() to create vp->bmp

        /* wait until the picture is allocated */
        SDL_LockMutex(is->pictq.mutex);
        while (!vp->allocated && !is->videoq.abort_request) {
            SDL_CondWait(is->pictq.cond, is->pictq.mutex);
        }
        /* if the queue is aborted, we have to pop the pending ALLOC event or wait for the allocation to complete */
        if (is->videoq.abort_request && SDL_PeepEvents(&event, 1, SDL_GETEVENT, SDL_EVENTMASK(FF_ALLOC_EVENT)) != 1) {
            while (!vp->allocated && !is->abort_request) {
                SDL_CondWait(is->pictq.cond, is->pictq.mutex);
            }
        }
        SDL_UnlockMutex(is->pictq.mutex);

        if (is->videoq.abort_request)
            return -1;
    }

    /* if the frame is not skipped, then display it */
    // Copy the decoded YUV data into vp->bmp so the display code can show it later
    if (vp->bmp) {
        uint8_t *data[4];
        int linesize[4];

        /* get a pointer on the bitmap */
        SDL_LockYUVOverlay (vp->bmp); // lock the SDL_Overlay before writing to it

        data[0] = vp->bmp->pixels[0];
        data[1] = vp->bmp->pixels[2];
        data[2] = vp->bmp->pixels[1];

        linesize[0] = vp->bmp->pitches[0];
        linesize[1] = vp->bmp->pitches[2];
        linesize[2] = vp->bmp->pitches[1];

#if CONFIG_AVFILTER
        // FIXME use direct rendering
        // copy the decoded YUV data into vp->bmp
        av_image_copy(data, linesize, (const uint8_t **)src_frame->data, src_frame->linesize,
                        src_frame->format, vp->width, vp->height);
#else
        {
            AVDictionaryEntry *e = av_dict_get(sws_dict, "sws_flags", NULL, 0);
            if (e) {
                const AVClass *class = sws_get_class();
                const AVOption    *o = av_opt_find(&class, "sws_flags", NULL, 0,
                                                   AV_OPT_SEARCH_FAKE_OBJ);
                int ret = av_opt_eval_flags(&class, o, e->value, &sws_flags);
                if (ret < 0)
                    exit(1);
            }
        }

        is->img_convert_ctx = sws_getCachedContext(is->img_convert_ctx,
            vp->width, vp->height, src_frame->format, vp->width, vp->height,
            AV_PIX_FMT_YUV420P, sws_flags, NULL, NULL, NULL);
        if (!is->img_convert_ctx) {
            av_log(NULL, AV_LOG_FATAL, "Cannot initialize the conversion context\n");
            exit(1);
        }
        sws_scale(is->img_convert_ctx, src_frame->data, src_frame->linesize,
                  0, vp->height, data, linesize);
#endif
        /* workaround SDL PITCH_WORKAROUND */
        duplicate_right_border_pixels(vp->bmp);
        /* update the bitmap content */
        SDL_UnlockYUVOverlay(vp->bmp); // done writing to the SDL_Overlay, unlock it

        vp->pts = pts;
        vp->duration = duration;
        vp->pos = pos;
        vp->serial = serial;

        /* now we can update the picture count */
        frame_queue_push(&is->pictq); // update the frame queue: one more Frame is ready for display
    }
    return 0;
}
```
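In queue_picture(), data[1] comes from pixels[2] and data[2] from pixels[1]. The SDL overlay that ffplay 3.1.1 allocates is, as far as I can tell, a YV12 overlay, which stores its planes in the order Y, V, U, while AV_PIX_FMT_YUV420P stores Y, U, V. The hypothetical helper below does the same reordering with a plain row-by-row copy. ffplay itself writes through the swapped pointers with av_image_copy() or sws_scale(). The helper assumes an even width and height.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Copy h rows of w bytes between buffers with different line pitches. */
static void copy_plane(uint8_t *dst, int dst_pitch,
                       const uint8_t *src, int src_pitch, int w, int h)
{
    for (int y = 0; y < h; y++)
        memcpy(dst + y * dst_pitch, src + y * src_pitch, (size_t)w);
}

/* Hypothetical helper: copy a YUV420P picture (planes Y, U, V) into a YV12
 * buffer (planes Y, V, U), mirroring the pixels[2]/pixels[1] swap above. */
static void i420_to_yv12(uint8_t *const yv12[3], const int pitches[3],
                         const uint8_t *const i420[3], const int linesize[3],
                         int width, int height)
{
    assert(width % 2 == 0 && height % 2 == 0);
    /* Reorder the destination planes so that index 1 is U and index 2 is V. */
    uint8_t *dst[3] = { yv12[0], yv12[2], yv12[1] };
    int dst_pitch[3] = { pitches[0], pitches[2], pitches[1] };

    copy_plane(dst[0], dst_pitch[0], i420[0], linesize[0], width, height);
    for (int i = 1; i < 3; i++) /* 4:2:0 chroma planes are half size in both axes */
        copy_plane(dst[i], dst_pitch[i], i420[i], linesize[i], width / 2, height / 2);
}
```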

Analysis of audio_thread()

audio_thread() consumes the Audio Packet Queue (is->audioq) and produces into the Audio Sample Queue (is->sampq).

Call chain for consuming the Audio Packet Queue: audio_thread() -> decoder_decode_frame() -> packet_queue_get(is->audioq)

Call chain for producing into the Audio Sample Queue: audio_thread() -> frame_queue_push(&is->sampq).

The core of audio_thread() and the related code:

```c
static int audio_thread(void *arg)
{
    VideoState *is = arg;
    AVFrame *frame = av_frame_alloc();
    Frame *af;
    ......
    do {
        // decode an audio packet; the decoded audio is stored in frame
        if ((got_frame = decoder_decode_frame(&is->auddec, frame, NULL)) < 0)
            goto the_end;

        if (got_frame) {
            ......
#if CONFIG_AVFILTER
            ......
            while ((ret = av_buffersink_get_frame_flags(is->out_audio_filter, frame, 0)) >= 0) {
                tb = is->out_audio_filter->inputs[0]->time_base;
#endif
                // Check whether the audio sample queue has a free Frame slot; if so, it is returned as af
                // and the decoded samples are stored in it
                if (!(af = frame_queue_peek_writable(&is->sampq)))
                    goto the_end;

                af->pts = (frame->pts == AV_NOPTS_VALUE) ? NAN : frame->pts * av_q2d(tb);
                af->pos = av_frame_get_pkt_pos(frame);
                af->serial = is->auddec.pkt_serial;
                af->duration = av_q2d((AVRational){frame->nb_samples, frame->sample_rate});

                av_frame_move_ref(af->frame, frame); // move the decoded audio into the queue slot (af) for later playback
                frame_queue_push(&is->sampq);        // update the frame queue: new audio samples are ready for playback

#if CONFIG_AVFILTER
                if (is->audioq.serial != is->auddec.pkt_serial)
                    break;
            }
            if (ret == AVERROR_EOF)
                is->auddec.finished = is->auddec.pkt_serial;
#endif
        }
    } while (ret >= 0 || ret == AVERROR(EAGAIN) || ret == AVERROR_EOF);
 the_end:
#if CONFIG_AVFILTER
    avfilter_graph_free(&is->agraph);
#endif
    av_frame_free(&frame);
    return ret;
}
```
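Both decoder threads fill a FrameQueue with frame_queue_peek_writable() and frame_queue_push(). The consumers drain it with frame_queue_peek_readable() and frame_queue_next(). The sketch below models the queue as a fixed-size ring buffer, including the keep_last/rindex_shown mechanism that keeps the most recently shown frame available to frame_queue_peek_last(). Field names follow ffplay, but `FQ_MAX`, the simplified `Frame` and the `fq_*` functions are hypothetical. The real queue also blocks on a condition variable, where this one returns NULL. `fq_read_current()` mirrors the serial-filtering loop at the top of audio_decode_frame().

```c
#include <assert.h>
#include <stddef.h>

#define FQ_MAX 4 /* hypothetical capacity */

typedef struct { double pts; int serial; } Frame; /* stand-in for ffplay's Frame */

typedef struct {
    Frame queue[FQ_MAX];
    int rindex, windex, size;
    int keep_last, rindex_shown;
} FrameQueue;

/* Slot the producer may fill next, or NULL if the queue is full. */
static Frame *fq_peek_writable(FrameQueue *f)
{
    return f->size >= FQ_MAX ? NULL : &f->queue[f->windex];
}

/* Publish the slot returned by fq_peek_writable(). */
static void fq_push(FrameQueue *f)
{
    assert(f->size < FQ_MAX);
    f->windex = (f->windex + 1) % FQ_MAX;
    f->size++;
}

/* Frames not consumed yet; the kept "last shown" frame does not count. */
static int fq_nb_remaining(const FrameQueue *f)
{
    return f->size - f->rindex_shown;
}

/* Next frame to consume, or NULL if there is none. */
static Frame *fq_peek_readable(FrameQueue *f)
{
    if (fq_nb_remaining(f) <= 0)
        return NULL;
    return &f->queue[(f->rindex + f->rindex_shown) % FQ_MAX];
}

/* Most recently consumed frame (what video_image_display() draws). */
static Frame *fq_peek_last(FrameQueue *f)
{
    return &f->queue[f->rindex];
}

/* Consume one frame. With keep_last, the first call only marks the head
 * as shown; later calls release the previously kept slot. */
static void fq_next(FrameQueue *f)
{
    if (f->keep_last && !f->rindex_shown) {
        f->rindex_shown = 1;
        return;
    }
    f->rindex = (f->rindex + 1) % FQ_MAX;
    f->size--;
}

/* Skip frames from before the last flush (serial mismatch) and return
 * the first current one, or NULL if the queue runs dry. */
static Frame *fq_read_current(FrameQueue *f, int queue_serial)
{
    Frame *fr;
    do {
        if (!(fr = fq_peek_readable(f)))
            return NULL;
        fq_next(f);
    } while (fr->serial != queue_serial);
    return fr;
}
```

Thanks to keep_last, the frame returned by fq_read_current() stays valid after fq_next(). It becomes the kept "last" slot instead of being released.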

Analysis of the audio callback sdl_audio_callback()

```c
static void sdl_audio_callback(void *opaque, Uint8 *stream, int len)
{
    VideoState *is = opaque;
    int audio_size, len1;

    audio_callback_time = av_gettime_relative();

    while (len > 0) {
        if (is->audio_buf_index >= is->audio_buf_size) {
            // audio_decode_frame() converts decoded audio from the Audio Sample Queue and stores the result in is->audio_buf
            audio_size = audio_decode_frame(is);
            if (audio_size < 0) {
                /* if error, just output silence */
                is->audio_buf = NULL;
                is->audio_buf_size = SDL_AUDIO_MIN_BUFFER_SIZE / is->audio_tgt.frame_size * is->audio_tgt.frame_size;
            } else {
                if (is->show_mode != SHOW_MODE_VIDEO)
                    update_sample_display(is, (int16_t *)is->audio_buf, audio_size);
                is->audio_buf_size = audio_size;
            }
            is->audio_buf_index = 0;
        }
        len1 = is->audio_buf_size - is->audio_buf_index;
        if (len1 > len)
            len1 = len;
        // copy the audio data into stream for SDL to play
        if (!is->muted && is->audio_buf && is->audio_volume == SDL_MIX_MAXVOLUME)
            memcpy(stream, (uint8_t *)is->audio_buf + is->audio_buf_index, len1);
        else {
            memset(stream, 0, len1);
            if (!is->muted && is->audio_buf)
                SDL_MixAudio(stream, (uint8_t *)is->audio_buf + is->audio_buf_index, len1, is->audio_volume);
        }
        len -= len1;
        stream += len1;
        is->audio_buf_index += len1;
    }
    is->audio_write_buf_size = is->audio_buf_size - is->audio_buf_index;
    /* Let's assume the audio driver that is used by SDL has two periods. */
    if (!isnan(is->audio_clock)) { // update the audio clock used for A/V synchronization
        set_clock_at(&is->audclk, is->audio_clock - (double)(2 * is->audio_hw_buf_size + is->audio_write_buf_size) / is->audio_tgt.bytes_per_sec, is->audio_clock_serial, audio_callback_time / 1000000.0);
        sync_clock_to_slave(&is->extclk, &is->audclk);
    }
}
```
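The core of sdl_audio_callback() is a refill-and-copy loop. SDL asks for exactly `len` bytes, and the callback keeps pulling converted chunks until it has produced that many. The sketch below keeps only that loop. `decode_fn`, `AudioState`, `SILENCE_CHUNK` and the toy `ChunkList` source are hypothetical. Volume mixing, mute and the clock update are omitted. Unlike ffplay, the sketch also treats a zero-byte chunk as an error so the loop cannot spin forever.

```c
#include <assert.h>
#include <stdint.h>
#include <string.h>

/* Hypothetical decoder hook standing in for audio_decode_frame(): points *buf
 * at the next chunk of converted audio and returns its size in bytes, or
 * returns a negative value when no data is available. */
typedef int (*decode_fn)(void *ctx, const uint8_t **buf);

typedef struct {
    const uint8_t *buf; /* current chunk; NULL means "play silence" */
    int buf_size;       /* bytes in the current chunk */
    int buf_index;      /* bytes of it already handed to the device */
} AudioState;

#define SILENCE_CHUNK 512 /* hypothetical size of a silence chunk */

/* Fill stream with exactly len bytes, in the same shape as sdl_audio_callback(). */
static void fill_audio(AudioState *s, decode_fn decode, void *ctx,
                       uint8_t *stream, int len)
{
    while (len > 0) {
        if (s->buf_index >= s->buf_size) {
            int size = decode(ctx, &s->buf);
            if (size <= 0) {          /* error or empty chunk: output silence */
                s->buf = NULL;
                s->buf_size = SILENCE_CHUNK;
            } else {
                s->buf_size = size;
            }
            s->buf_index = 0;
        }
        int len1 = s->buf_size - s->buf_index;
        if (len1 > len)
            len1 = len;
        if (s->buf)
            memcpy(stream, s->buf + s->buf_index, (size_t)len1);
        else
            memset(stream, 0, (size_t)len1);
        len -= len1;
        stream += len1;
        s->buf_index += len1;
    }
}

/* Toy source for trying fill_audio(): serves count chunks, then fails. */
typedef struct { const uint8_t *const *chunks; const int *sizes; int count, next; } ChunkList;

static int chunk_list_decode(void *ctx, const uint8_t **buf)
{
    ChunkList *c = ctx;
    if (c->next >= c->count)
        return -1;
    *buf = c->chunks[c->next];
    return c->sizes[c->next++];
}
```

A request can straddle two chunks. The unread tail of a chunk stays in AudioState until the next callback, which is why ffplay keeps audio_buf_index in VideoState.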

```c
static int audio_decode_frame(VideoState *is)
{
    int data_size, resampled_data_size;
    int64_t dec_channel_layout;
    av_unused double audio_clock0;
    int wanted_nb_samples;
    Frame *af;

    if (is->paused)
        return -1;

    do {
        ......
        // check whether the audio sample queue has a readable Frame; if so, it is returned as af
        if (!(af = frame_queue_peek_readable(&is->sampq)))
            return -1;
        frame_queue_next(&is->sampq);
    } while (af->serial != is->audioq.serial); // skip frames decoded before the last flush (e.g. a seek)

    data_size = av_samples_get_buffer_size(NULL, av_frame_get_channels(af->frame),
                                           af->frame->nb_samples,
                                           af->frame->format, 1);

    dec_channel_layout =
        (af->frame->channel_layout && av_frame_get_channels(af->frame) == av_get_channel_layout_nb_channels(af->frame->channel_layout)) ?
        af->frame->channel_layout : av_get_default_channel_layout(av_frame_get_channels(af->frame));
    wanted_nb_samples = synchronize_audio(is, af->frame->nb_samples);

    if (af->frame->format        != is->audio_src.fmt            ||
        dec_channel_layout       != is->audio_src.channel_layout ||
        af->frame->sample_rate   != is->audio_src.freq           ||
        (wanted_nb_samples       != af->frame->nb_samples && !is->swr_ctx)) {
        swr_free(&is->swr_ctx);
        is->swr_ctx = swr_alloc_set_opts(NULL,
                                         is->audio_tgt.channel_layout, is->audio_tgt.fmt, is->audio_tgt.freq,
                                         dec_channel_layout,           af->frame->format, af->frame->sample_rate,
                                         0, NULL);
        if (!is->swr_ctx || swr_init(is->swr_ctx) < 0) {
            av_log(NULL, AV_LOG_ERROR,
                   "Cannot create sample rate converter for conversion of %d Hz %s %d channels to %d Hz %s %d channels!\n",
                    af->frame->sample_rate, av_get_sample_fmt_name(af->frame->format), av_frame_get_channels(af->frame),
                    is->audio_tgt.freq, av_get_sample_fmt_name(is->audio_tgt.fmt), is->audio_tgt.channels);
            swr_free(&is->swr_ctx);
            return -1;
        }
        is->audio_src.channel_layout = dec_channel_layout;
        is->audio_src.channels       = av_frame_get_channels(af->frame);
        is->audio_src.freq = af->frame->sample_rate;
        is->audio_src.fmt = af->frame->format;
    }

    if (is->swr_ctx) { // convert the decoded audio to the target output format
        const uint8_t **in = (const uint8_t **)af->frame->extended_data;
        uint8_t **out = &is->audio_buf1;
        int out_count = (int64_t)wanted_nb_samples * is->audio_tgt.freq / af->frame->sample_rate + 256;
        int out_size  = av_samples_get_buffer_size(NULL, is->audio_tgt.channels, out_count, is->audio_tgt.fmt, 0);
        int len2;
        if (out_size < 0) {
            av_log(NULL, AV_LOG_ERROR, "av_samples_get_buffer_size() failed\n");
            return -1;
        }
        if (wanted_nb_samples != af->frame->nb_samples) {
            if (swr_set_compensation(is->swr_ctx, (wanted_nb_samples - af->frame->nb_samples) * is->audio_tgt.freq / af->frame->sample_rate,
                                        wanted_nb_samples * is->audio_tgt.freq / af->frame->sample_rate) < 0) {
                av_log(NULL, AV_LOG_ERROR, "swr_set_compensation() failed\n");
                return -1;
            }
        }
        av_fast_malloc(&is->audio_buf1, &is->audio_buf1_size, out_size);
        if (!is->audio_buf1)
            return AVERROR(ENOMEM);
        len2 = swr_convert(is->swr_ctx, out, out_count, in, af->frame->nb_samples);
        if (len2 < 0) {
            av_log(NULL, AV_LOG_ERROR, "swr_convert() failed\n");
            return -1;
        }
        if (len2 == out_count) {
            av_log(NULL, AV_LOG_WARNING, "audio buffer is probably too small\n");
            if (swr_init(is->swr_ctx) < 0)
                swr_free(&is->swr_ctx);
        }
        is->audio_buf = is->audio_buf1;
        resampled_data_size = len2 * is->audio_tgt.channels * av_get_bytes_per_sample(is->audio_tgt.fmt);
    } else {
        is->audio_buf = af->frame->data[0]; // audio_buf points directly at the decoded audio data
        resampled_data_size = data_size;
    }

    audio_clock0 = is->audio_clock;
    /* update the audio clock with the pts */
    if (!isnan(af->pts))
        is->audio_clock = af->pts + (double) af->frame->nb_samples / af->frame->sample_rate;
    else
        is->audio_clock = NAN;
    is->audio_clock_serial = af->serial;
    return resampled_data_size;
}
```
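The arithmetic spread across audio_decode_frame() and sdl_audio_callback() is easier to check with concrete numbers. The three hypothetical helpers below reproduce the formulas from the listings:

- `audio_clock` is the pts of the end of the frame just converted.
- The value stored in the audio clock subtracts the audio that is still buffered: ffplay assumes two hardware periods of `audio_hw_buf_size` bytes plus the unplayed `audio_write_buf_size`.
- `out_count` is the output bound passed to swr_convert().

```c
#include <assert.h>

/* audio_decode_frame(): pts of the frame plus its duration, i.e. the
 * timestamp of the end of the data now held in audio_buf. */
static double clock_after_frame(double pts, int nb_samples, int sample_rate)
{
    assert(sample_rate > 0);
    return pts + (double)nb_samples / sample_rate;
}

/* sdl_audio_callback(): subtract what is still queued (two driver periods
 * of hw_buf_size bytes plus the unplayed rest of audio_buf) to get the
 * timestamp of the sample being heard. */
static double heard_clock(double audio_clock, int hw_buf_size,
                          int write_buf_size, int bytes_per_sec)
{
    assert(bytes_per_sec > 0);
    return audio_clock - (double)(2 * hw_buf_size + write_buf_size) / bytes_per_sec;
}

/* audio_decode_frame(): output capacity for swr_convert(), i.e. the input
 * sample count scaled by the rate ratio plus 256 samples of headroom. */
static int resample_out_count(int wanted_nb_samples, int src_rate, int dst_rate)
{
    assert(src_rate > 0);
    return (int)((long long)wanted_nb_samples * dst_rate / src_rate + 256);
}
```

For example, with 48 kHz stereo S16 output (48000 × 2 × 2 = 192000 bytes/s), a 960-sample frame with pts 1.0 sets audio_clock to 1.02 s. If 2 × 3840 + 1920 = 9600 bytes are still buffered, that is 0.05 s of audio, so the audio clock is set to 0.97 s.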

Video rendering in the main thread

```c
static void event_loop(VideoState *cur_stream)
{
    SDL_Event event;
    double incr, pos, frac;

    for (;;) {
        ......
        refresh_loop_wait_event(cur_stream, &event);
        switch (event.type) {
        case SDL_KEYDOWN:
            ......
        }
    }
}

static void refresh_loop_wait_event(VideoState *is, SDL_Event *event) {
    double remaining_time = 0.0;
    // call SDL_PumpEvents() before SDL_PeepEvents() to pump input-device events into the event queue
    SDL_PumpEvents();
    // take one event from the queue into event; while there is none, run the loop body
    while (!SDL_PeepEvents(event, 1, SDL_GETEVENT, SDL_ALLEVENTS)) {
        if (!cursor_hidden && av_gettime_relative() - cursor_last_shown > CURSOR_HIDE_DELAY) {
            SDL_ShowCursor(0); // hide the mouse cursor
            cursor_hidden = 1;
        }
        if (remaining_time > 0.0) // video_refresh() sets this sleep time from the frame's due time and the current time, so frames are shown on time
            av_usleep((int64_t)(remaining_time * 1000000.0));
        remaining_time = REFRESH_RATE;
        if (is->show_mode != SHOW_MODE_NONE && (!is->paused || is->force_refresh))
            video_refresh(is, &remaining_time);
        SDL_PumpEvents();
    }
}
```
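The control flow of refresh_loop_wait_event() is easier to follow with SDL replaced by callbacks. In the sketch below, `LoopHooks` and the toy hooks are hypothetical. `poll` stands in for SDL_PumpEvents()/SDL_PeepEvents(), `refresh` for video_refresh(), and `sleep` for av_usleep(). The cursor hiding and the show_mode/pause checks are omitted. REFRESH_RATE uses ffplay's value of 0.01 s.

```c
#include <assert.h>

#define REFRESH_RATE 0.01 /* ffplay polls at least every 10 ms */

/* Hypothetical hooks so the loop can run without SDL. */
typedef struct {
    int (*poll)(void *ctx, int *ev);                /* 1 and *ev set if an event is pending */
    void (*refresh)(void *ctx, double *remaining);  /* may lower *remaining */
    void (*sleep)(void *ctx, double seconds);
    void *ctx;
} LoopHooks;

/* Same shape as refresh_loop_wait_event(): while no event is pending, sleep
 * for the time the previous refresh asked for, then refresh again. */
static int wait_event(const LoopHooks *h)
{
    int ev;
    double remaining_time = 0.0;
    while (!h->poll(h->ctx, &ev)) {
        if (remaining_time > 0.0)
            h->sleep(h->ctx, remaining_time);
        remaining_time = REFRESH_RATE;
        h->refresh(h->ctx, &remaining_time);
    }
    return ev;
}

/* Toy hooks: an event arrives on the 4th poll, and every refresh reports
 * that the next frame is due in 4 ms. */
typedef struct { int polls; int refreshes; double slept; } Toy;

static int toy_poll(void *ctx, int *ev)
{
    Toy *t = ctx;
    if (++t->polls < 4)
        return 0;
    *ev = 42;
    return 1;
}

static void toy_refresh(void *ctx, double *remaining)
{
    Toy *t = ctx;
    t->refreshes++;
    if (*remaining > 0.004)
        *remaining = 0.004; /* like FFMIN(frame due time - now, *remaining_time) */
}

static void toy_sleep(void *ctx, double seconds)
{
    ((Toy *)ctx)->slept += seconds;
}
```

Each pass sleeps for at most REFRESH_RATE. Input events are therefore noticed within about 10 ms, while video_refresh() can shorten the sleep so that a frame is shown on time.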

```c
/* called to display each frame */
static void video_refresh(void *opaque, double *remaining_time)
{
    VideoState *is = opaque;
    double time;

    Frame *sp, *sp2;

    if (!is->paused && get_master_sync_type(is) == AV_SYNC_EXTERNAL_CLOCK && is->realtime)
        check_external_clock_speed(is);
    ......
    if (is->video_st) {
retry:
        if (frame_queue_nb_remaining(&is->pictq) == 0) {
            // nothing to do, no picture to display in the queue
        } else {
            double last_duration, duration, delay;
            Frame *vp, *lastvp;

            /* dequeue the picture */
            lastvp = frame_queue_peek_last(&is->pictq); // the frame displayed last
            vp = frame_queue_peek(&is->pictq);          // the current frame, to be displayed next
            ......
            if (is->paused)
                goto display;

            /* compute nominal last_duration */
            last_duration = vp_duration(is, lastvp, vp);     // interval between the two frames
            delay = compute_target_delay(last_duration, is); // how long after the previous frame the current one should be displayed

            time= av_gettime_relative()/1000000.0;
            // is->frame_timer + delay is when the current frame is due; if that time has not come yet, wait
            if (time < is->frame_timer + delay) { // remaining_time is how long the caller should sleep
                *remaining_time = FFMIN(is->frame_timer + delay - time, *remaining_time);
                goto display;
            }

            is->frame_timer += delay;
            if (delay > 0 && time - is->frame_timer > AV_SYNC_THRESHOLD_MAX)
                is->frame_timer = time;

            SDL_LockMutex(is->pictq.mutex);
            if (!isnan(vp->pts))
                update_video_pts(is, vp->pts, vp->pos, vp->serial);
            SDL_UnlockMutex(is->pictq.mutex);

            if (frame_queue_nb_remaining(&is->pictq) > 1) {
                Frame *nextvp = frame_queue_peek_next(&is->pictq);
                duration = vp_duration(is, vp, nextvp);
                // If even the end of the current frame's slot (frame_timer + duration) has already passed, decoding
                // has fallen behind: the frame arrived too late to be shown and is dropped, otherwise audio and video drift apart.
                if(!is->step && (framedrop>0 || (framedrop && get_master_sync_type(is) != AV_SYNC_VIDEO_MASTER)) && time > is->frame_timer + duration){
                    is->frame_drops_late++;
                    frame_queue_next(&is->pictq);
                    goto retry;
                }
            }
            ......
            frame_queue_next(&is->pictq);
            is->force_refresh = 1; // request that the current frame be displayed

            if (is->step && !is->paused)
                stream_toggle_pause(is);
        }
display:
        /* display picture */
        if (!display_disable && is->force_refresh && is->show_mode == SHOW_MODE_VIDEO && is->pictq.rindex_shown)
            video_display(is);
    }
    is->force_refresh = 0;
    ......
}
```
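The timing decisions in video_refresh() can be isolated into a pure function, which makes its three possible outcomes (wait, drop, show) easy to test. In the sketch below, `decide_frame()` and `FrameDecision` are hypothetical. `delay` is taken as an input because compute_target_delay() belongs to the synchronization logic that is not listed here. Pause and step handling are omitted. `allow_drop` stands for the framedrop condition in the original, and a negative `next_duration` means that no next frame is queued yet.

```c
#include <assert.h>

#define SYNC_THRESHOLD_MAX 0.1 /* same value as ffplay's AV_SYNC_THRESHOLD_MAX */

typedef enum { FRAME_WAIT, FRAME_DROP, FRAME_SHOW } FrameDecision;

/* One pass of video_refresh()'s timing logic for the frame at the head of
 * the queue. All times are in seconds. */
static FrameDecision decide_frame(double now, double *frame_timer, double delay,
                                  double next_duration, int allow_drop,
                                  double *remaining_time)
{
    assert(frame_timer && remaining_time);
    if (now < *frame_timer + delay) {
        /* Not due yet: ask the caller to sleep, never longer than it planned to. */
        double wait = *frame_timer + delay - now;
        if (wait < *remaining_time)
            *remaining_time = wait;
        return FRAME_WAIT;
    }
    *frame_timer += delay;
    if (delay > 0 && now - *frame_timer > SYNC_THRESHOLD_MAX)
        *frame_timer = now;             /* far behind: resynchronize the timer */
    /* Late even for the next frame's slot: drop this frame. */
    if (next_duration >= 0 && allow_drop && now > *frame_timer + next_duration)
        return FRAME_DROP;
    return FRAME_SHOW;
}
```

Take frame_timer = 10.0 s and delay = 0.04 s. A call at 10.01 s waits. At 10.05 s the frame is shown. At 10.09 s, with the next frame due 0.04 s later, the frame is dropped because 10.09 > 10.04 + 0.04.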

```c
static void video_display(VideoState *is)
{
    if (!screen)
        video_open(is, 0, NULL);
    if (is->audio_st && is->show_mode != SHOW_MODE_VIDEO)
        video_audio_display(is);
    else if (is->video_st)
        video_image_display(is);
}

static void video_image_display(VideoState *is)
{
    Frame *vp;
    Frame *sp;
    SDL_Rect rect;
    int i;

    vp = frame_queue_peek_last(&is->pictq);
    if (vp->bmp) {
        ......
        calculate_display_rect(&rect, is->xleft, is->ytop, is->width, is->height, vp->width, vp->height, vp->sar);
        SDL_DisplayYUVOverlay(vp->bmp, &rect); // display the current frame
        ......
    }
}
```
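calculate_display_rect() is called here but not listed. Below is a sketch of the letterbox computation it is assumed to perform, following ffplay's approach as I understand it:

1. Turn the SAR into a display aspect ratio.
2. Fit the picture to the window height.
3. If the result is too wide, fit it to the window width instead.
4. Round both sizes down to even values.
5. Center the result.

`Rect` and `fit_rect()` are hypothetical names. The sketch rounds with `(int)(x + 0.5)` rather than lrint() to avoid a libm dependency.

```c
#include <assert.h>

typedef struct { int x, y, w, h; } Rect;

/* Fit a pic_w x pic_h picture with sample aspect ratio sar_num:sar_den into a
 * scr_w x scr_h window whose top-left corner is (left, top). */
static Rect fit_rect(int left, int top, int scr_w, int scr_h,
                     int pic_w, int pic_h, int sar_num, int sar_den)
{
    assert(pic_w > 0 && pic_h > 0);
    /* An unknown or invalid SAR is treated as square pixels. */
    double aspect = (sar_num > 0 && sar_den > 0) ? (double)sar_num / sar_den : 1.0;
    aspect *= (double)pic_w / pic_h;            /* display aspect ratio */

    int h = scr_h;                              /* try the full window height first */
    int w = (int)(h * aspect + 0.5) & ~1;       /* round, then force an even width */
    if (w > scr_w) {                            /* too wide: use the full width instead */
        w = scr_w;
        h = (int)(w / aspect + 0.5) & ~1;
    }
    Rect r;
    r.x = left + (scr_w - w) / 2;               /* center horizontally */
    r.y = top + (scr_h - h) / 2;                /* center vertically */
    r.w = w > 1 ? w : 1;
    r.h = h > 1 ? h : 1;
    return r;
}
```

For example, a 1920x1080 picture in an 800x600 window becomes 800x450 with 75-pixel bars above and below. A 720x576 picture with SAR 16:15 (display aspect 4:3) fills the 800x600 window exactly.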

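This completes the walkthrough of ffplay's normal playback flow. When time permits, I will go on to analyze ffplay's audio/video synchronization, its event handling, and other mechanisms.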

Reposted from: https://blog.csdn.net/dssxk/article/details/50403018