tf.estimator API Technical Manual (16): Custom Estimators


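- (I) Preface
- (II) General steps for a custom Estimator
- (III) Preparing the training data
- (IV) Building a custom Estimator in practice
  - (1) Create the feature columns
  - (2) Write the model function
  - (3) Create the Estimator instance
  - (4) Start training
  - (5) View the training logs with TensorBoard
  - (6) Predict and evaluate
  - (7) Complete code
- (V) Summary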

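(I) Preface

In the first 15 chapters of this series we covered the various kinds of Estimators that ship with TensorFlow. They are predefined and can be used directly, which is very convenient. As tasks change, however, we often need a different network structure, and a custom Estimator lets us build exactly the one we need.

As the figure above shows, pre-made Estimators are subclasses of the tf.estimator.Estimator base class, while a custom Estimator is an instance of tf.estimator.Estimator.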

(II) General steps for a custom Estimator

Flowchart: Start → Create the feature columns → Write the model function → Create the Estimator instance → Train, evaluate, predict → End

(III) Preparing the training data

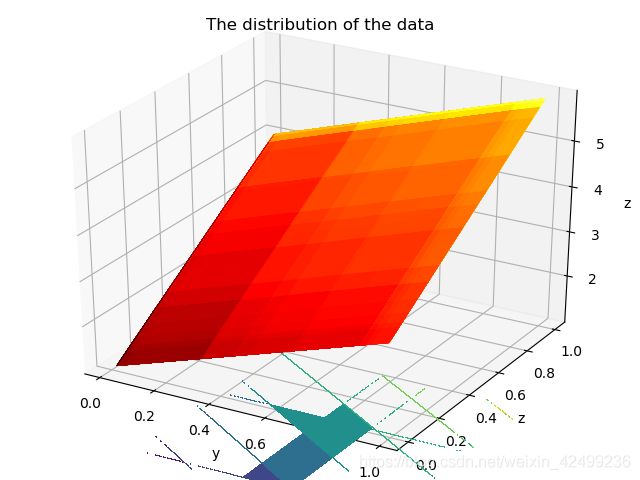

The following code generates linear data with two features:

    import numpy as np
    import matplotlib.pyplot as plt
    from mpl_toolkits.mplot3d import Axes3D  # registers the 3D projection on older Matplotlib

    # 100 samples with two features each, drawn uniformly from [0, 1)
    att = np.float32(np.random.rand(100, 2))
    # The labels lie exactly on the plane z = 2x + 3y + 1
    label = 2 * att[:, 0] + 3 * att[:, 1] + 1

    # Save the data as an NPZ file so that it is easy to load later
    np.savez(r'C:\Users\12394\PycharmProjects\TensorFlow\blog.npz', att=att, label=label)
    data = np.load(r'C:\Users\12394\PycharmProjects\TensorFlow\blog.npz')

    # Plot the plane that the data lies on
    fig = plt.figure()
    ax = fig.add_subplot(111, projection='3d')
    X, Y = np.meshgrid(data['att'][:, 0], data['att'][:, 1])
    Z = 2 * X + 3 * Y + 1
    ax.plot_surface(X, Y, Z, rstride=1, cstride=1, cmap=plt.cm.hot)
    ax.contourf(X, Y, Z, zdir='z', offset=-1, cmap=plt.cm.hot)
    ax.set_title('The distribution of the data')
    ax.set_xlabel('x')
    ax.set_ylabel('y')
    ax.set_zlabel('z')
    plt.show()
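As a quick sanity check on data generated this way, an ordinary least-squares fit should recover the coefficients 2, 3 and 1. The sketch below is only an illustration: the fixed seed and the names `rng`, `design` and `coef` are assumptions added here and are not part of the original script.

```python
import numpy as np

# Regenerate the data as above; the fixed seed is an assumption for repeatability.
rng = np.random.RandomState(0)
att = np.float32(rng.rand(100, 2))
label = 2 * att[:, 0] + 3 * att[:, 1] + 1

# Append a column of ones so that the bias is fitted together with the two weights.
design = np.hstack([att, np.ones((100, 1))]).astype(np.float64)
coef, _, _, _ = np.linalg.lstsq(design, label.astype(np.float64), rcond=None)

print(att.shape, label.shape)
print(np.round(coef, 3))  # expected to be close to [2, 3, 1]
```

Because the labels contain no noise, any model that can represent a plane should be able to fit this data almost exactly, which makes it a convenient test case for the custom Estimator.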

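(IV) Building a custom Estimator in practice

Next we build a custom Estimator by following the steps in (II).

(1) Create the feature columns

    import tensorflow as tf

    # Create one numeric feature column holding a two-dimensional feature
    feature_columns = [tf.feature_column.numeric_column('att', shape=[2])]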

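(2) Write the model function

Here we use a deep network with two hidden layers. The model function we pass to the Estimator must have the following structure:

    def my_model_fn(
            features,  # features returned by the input function
            labels,    # labels returned by the input function
            mode,      # one of the tf.estimator.ModeKeys values
            params):   # additional configuration, such as hyperparameters
        ...

A word on tf.estimator.ModeKeys: it defines three modes, each corresponding to a different operation: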

| Mode | Operation |
|---|---|
| tf.estimator.ModeKeys.TRAIN | Training |
| tf.estimator.ModeKeys.PREDICT | Prediction |
| tf.estimator.ModeKeys.EVAL | Evaluation |
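The three modes determine what a model function has to return. The following is a minimal pure-Python sketch of that branching, not TensorFlow code: `sketch_model_fn` and the returned dicts are hypothetical stand-ins for a real model function and `tf.estimator.EstimatorSpec`, and the string constants assume the values that the mode keys have in TensorFlow 1.x.

```python
# Stand-ins for tf.estimator.ModeKeys (assumed to be plain strings, as in TF 1.x).
TRAIN, EVAL, PREDICT = 'train', 'eval', 'infer'


def sketch_model_fn(features, labels, mode):
    """Return a dict naming what an EstimatorSpec would carry for each mode."""
    predictions = [2 * x for x in features]  # placeholder "forward pass"
    if mode == PREDICT:
        # No labels are available when predicting, so return before using them.
        return {'mode': mode, 'predictions': predictions}
    loss = sum((p - y) ** 2 for p, y in zip(predictions, labels)) / len(labels)
    if mode == TRAIN:
        return {'mode': mode, 'loss': loss, 'train_op': 'minimize(loss)'}
    if mode == EVAL:
        return {'mode': mode, 'loss': loss, 'eval_metric_ops': {'mse': loss}}
    raise ValueError('unknown mode: %r' % mode)


print(sorted(sketch_model_fn([1.0, 2.0], None, PREDICT)))
print(sorted(sketch_model_fn([1.0, 2.0], [2.0, 4.0], TRAIN)))
print(sorted(sketch_model_fn([1.0, 2.0], [2.0, 4.0], EVAL)))
```

The point of the sketch is the ordering: the prediction branch returns first because labels are None in that mode, while training needs a loss plus a training op and evaluation needs a loss plus optional metrics.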

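The Estimator passes in the mode that matches the method being called (train, evaluate or predict), and the model function builds the corresponding operations. Now let's write the model function: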

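    def network(x, feature_column):
        """
        :purpose: Define the model structure, implemented here with tf.layers
        :param x: dict of input feature tensors
        :param feature_column: feature columns that describe how to read x
        :return: the result of the forward pass, with shape [batch_size, 1]
        """
        net = tf.feature_column.input_layer(x, feature_column)
        net = tf.layers.Dense(units=100)(net)
        net = tf.layers.Dense(units=100)(net)
        net = tf.layers.Dense(units=1)(net)
        return net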
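One detail worth noting about the three Dense layers above: no activation is specified, and tf.layers.Dense applies none by default, so the stack is mathematically a single affine map. That is sufficient for the linear data used here. The NumPy sketch below illustrates the collapse with random weights; all names and values are illustrative assumptions, not taken from the trained model.

```python
import numpy as np

rng = np.random.RandomState(0)
x = rng.rand(5, 2)

# Random weights and biases for layers of width 100, 100 and 1,
# mirroring the three Dense layers above (values are illustrative).
w1, b1 = rng.randn(2, 100), rng.randn(100)
w2, b2 = rng.randn(100, 100), rng.randn(100)
w3, b3 = rng.randn(100, 1), rng.randn(1)

# Layer-by-layer forward pass with no activation function.
stacked = ((x @ w1 + b1) @ w2 + b2) @ w3 + b3

# The same mapping written as one affine transform.
w = w1 @ w2 @ w3
b = (b1 @ w2 + b2) @ w3 + b3
single = x @ w + b

print(stacked.shape, np.allclose(stacked, single))
```

For data that is not linear, passing an activation such as `activation=tf.nn.relu` to the hidden layers would be the usual way to give the network more expressive power.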

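    def my_model_fn(features, labels, mode, params):
        """
        :purpose: Model function
        :param features: features returned by the input function
        :param labels: data labels
        :param mode: whether the caller requests training, prediction or evaluation
        :param params: additional parameters passed in by the caller
        :return: an EstimatorSpec holding the loss, training op and metrics that the mode needs
        """
        # Build the network and run the forward pass on the input
        predict = network(features, params['feature_columns'])

        # In prediction mode, only the result needs to be returned
        if mode == tf.estimator.ModeKeys.PREDICT:
            return tf.estimator.EstimatorSpec(
                mode=mode,
                predictions={'result': predict})

        # Give the labels the same [batch_size, 1] shape as predict; otherwise
        # the subtraction in the loss broadcasts to [batch_size, batch_size]
        labels = tf.reshape(labels, [-1, 1])

        # Loss: mean squared error. The signed mean of (predict - labels) is
        # unbounded below, so minimizing it does not fit the data
        loss = tf.losses.mean_squared_error(labels=labels, predictions=predict)

        # Optimizer
        optimizer = tf.train.GradientDescentOptimizer(learning_rate=params['learning_rate'])

        # Training op
        train_op = optimizer.minimize(loss=loss, global_step=tf.train.get_global_step())

        # Evaluation metrics: every metric defined here is computed during evaluation.
        # Accuracy is not meaningful for regression, so mean squared error is reported
        eval_metric_ops = tf.metrics.mean_squared_error(
            labels=labels, predictions=predict, name='mse')

        if mode == tf.estimator.ModeKeys.TRAIN:
            return tf.estimator.EstimatorSpec(
                mode=mode,
                loss=loss,
                train_op=train_op,
                eval_metric_ops={'mse': eval_metric_ops})

        # Evaluation
        if mode == tf.estimator.ModeKeys.EVAL:
            return tf.estimator.EstimatorSpec(
                mode=mode,
                loss=loss,
                eval_metric_ops={'mse': eval_metric_ops})
        # Note that the value passed as eval_metric_ops must be a dict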
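Two details are easy to get wrong when writing the loss of a regression model function: the shapes of predictions and labels, and the sign of the error. The NumPy sketch below illustrates both with made-up numbers; NumPy follows the same broadcasting rules as TensorFlow, and the array values are assumptions chosen only for illustration.

```python
import numpy as np

predict = np.array([[1.0], [2.0], [3.0]])  # shape (3, 1), like a Dense(1) output
labels = np.array([1.5, 2.0, 2.5])         # shape (3,), like a batch of labels

# Subtracting mismatched shapes broadcasts to a (3, 3) matrix of all pairs.
print((predict - labels).shape)

# Reshape the labels to (3, 1) so the difference is taken element by element.
diff = predict - labels.reshape(-1, 1)

signed_mean = diff.mean()   # errors of opposite sign cancel out
mse = (diff ** 2).mean()    # never negative, and 0 only for a perfect fit
print(signed_mean, mse)
```

A signed mean error can also be pushed arbitrarily far below zero by a gradient optimizer, so a steadily more negative loss in a training log is a sign that the loss is not measuring fit.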

(3) Create the Estimator instance

    # Create the Estimator from the custom model function; the params argument
    # carries the hyperparameters that the model function uses
    # Set the learning rate and the feature columns
    model_params = {'learning_rate': 0.01,
                    'feature_columns': feature_columns}

    estimator = tf.estimator.Estimator(model_fn=my_model_fn,
                                       params=model_params,
                                       model_dir=r'C:\Users\12394\PycharmProjects\TensorFlow\logs')

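(4) Start training

First write an input function:

    def input_fn():
        """
        :purpose: Feed in the training data
        :return: features and labels
        """
        data = np.load(r'C:\Users\12394\PycharmProjects\TensorFlow\blog.npz')
        dataset = tf.data.Dataset.from_tensor_slices((data['att'], data['label'])).batch(5)
        iterator = dataset.make_one_shot_iterator()
        att, label = iterator.get_next()
        return {'att': att}, label

Start training:

    estimator.train(input_fn=input_fn, steps=30000)

Run the script and output like the following appears. This log comes from a run in which the loss was the signed mean tf.reduce_mean(predict - labels), which is why the reported loss is negative: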

INFO:tensorflow:Using default config.

INFO:tensorflow:Using config: {'_model_dir': 'C:\\Users\\12394\\PycharmProjects\\TensorFlow\\logs', '_tf_random_seed': None, '_save_summary_steps': 100, '_save_checkpoints_steps': None, '_save_checkpoints_secs': 600, '_session_config': allow_soft_placement: true

graph_options {

rewrite_options {

meta_optimizer_iterations: ONE

}

}

, '_keep_checkpoint_max': 5, '_keep_checkpoint_every_n_hours': 10000, '_log_step_count_steps': 100, '_train_distribute': None, '_device_fn': None, '_protocol': None, '_eval_distribute': None, '_experimental_distribute': None, '_service': None, '_cluster_spec': <tensorflow.python.training.server_lib.ClusterSpec object at 0x0000020DAE68BEF0>, '_task_type': 'worker', '_task_id': 0, '_global_id_in_cluster': 0, '_master': '', '_evaluation_master': '', '_is_chief': True, '_num_ps_replicas': 0, '_num_worker_replicas': 1}

INFO:tensorflow:Calling model_fn.

INFO:tensorflow:Done calling model_fn.

INFO:tensorflow:Create CheckpointSaverHook.

INFO:tensorflow:Graph was finalized.

2019-02-04 14:03:24.806571: I tensorflow/core/platform/cpu_feature_guard.cc:141] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2

2019-02-04 14:03:25.595779: E tensorflow/stream_executor/cuda/cuda_driver.cc:300] failed call to cuInit: CUDA_ERROR_NO_DEVICE: no CUDA-capable device is detected

2019-02-04 14:03:25.601682: I tensorflow/stream_executor/cuda/cuda_diagnostics.cc:163] retrieving CUDA diagnostic information for host: DESKTOP-N58V3DN

2019-02-04 14:03:25.602140: I tensorflow/stream_executor/cuda/cuda_diagnostics.cc:170] hostname: DESKTOP-N58V3DN

INFO:tensorflow:Restoring parameters from C:\Users\12394\PycharmProjects\TensorFlow\logs\model.ckpt-20

INFO:tensorflow:Running local_init_op.

INFO:tensorflow:Done running local_init_op.

INFO:tensorflow:Saving checkpoints for 20 into C:\Users\12394\PycharmProjects\TensorFlow\logs\model.ckpt.

INFO:tensorflow:loss = -5.801062, step = 21

INFO:tensorflow:Saving checkpoints for 40 into C:\Users\12394\PycharmProjects\TensorFlow\logs\model.ckpt.

INFO:tensorflow:Loss for final step: -10.126386.
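The log above ends at global step 40 even though steps=30000 was requested. A likely explanation, given the input function shown earlier: it batches 100 samples in groups of 5 and does not repeat the dataset, so each call to train stops once the data runs out. The small sketch below works through the arithmetic; the helper name `batches_per_pass` is hypothetical.

```python
import math


def batches_per_pass(num_examples, batch_size):
    """Number of batches one pass over the data yields (the last may be partial)."""
    return math.ceil(num_examples / batch_size)


steps_per_call = batches_per_pass(num_examples=100, batch_size=5)
print(steps_per_call)  # 20

# Global step after the first and second train() calls on the same model_dir.
print([steps_per_call * n for n in (1, 2)])  # [20, 40]
```

This matches the log, which restores model.ckpt-20 from an earlier run and saves a checkpoint at step 40. Calling `.repeat()` on the dataset in an input function used only for training would let train run for the requested number of steps. It should not be added to an input function shared with predict, which would then never finish.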

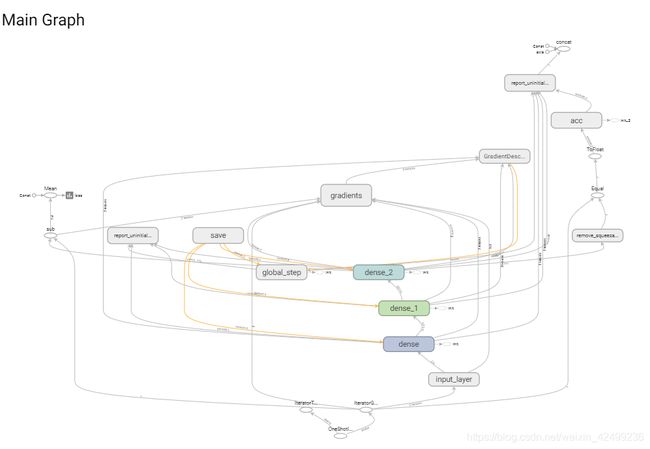

(5) View the training logs with TensorBoard

    tensorboard.exe --logdir="C:/Users/12394/PycharmProjects/TensorFlow/logs"

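(6) Predict and evaluate

Run the evaluation:

    estimator.evaluate(input_fn=input_fn, steps=200)

Output (from a run that reported tf.metrics.accuracy as its metric and used the signed mean error as its loss):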

INFO:tensorflow:Calling model_fn.

INFO:tensorflow:Done calling model_fn.

INFO:tensorflow:Starting evaluation at 2019-02-04-06:03:26

INFO:tensorflow:Graph was finalized.

INFO:tensorflow:Restoring parameters from C:\Users\12394\PycharmProjects\TensorFlow\logs\model.ckpt-40

INFO:tensorflow:Running local_init_op.

INFO:tensorflow:Done running local_init_op.

INFO:tensorflow:Evaluation [20/200]

INFO:tensorflow:Finished evaluation at 2019-02-04-06:03:26

INFO:tensorflow:Saving dict for global step 40: accuracy = 0.0, global_step = 40, loss = -11.250569

INFO:tensorflow:Saving 'checkpoint_path' summary for global step 40: C:\Users\12394\PycharmProjects\TensorFlow\logs\model.ckpt-40
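The accuracy = 0.0 in the log above is what one would expect when an accuracy metric is applied to a regression task: accuracy measures how often predictions equal the labels exactly, which almost never happens for real-valued outputs. The NumPy sketch below contrasts it with mean squared error; the label and prediction values are made up for illustration.

```python
import numpy as np

labels = np.array([3.2, 4.7, 2.1, 5.0])
predictions = np.array([3.1, 4.9, 2.0, 5.2])  # illustrative values, close to the labels

# Accuracy counts exact matches, which almost never occur for real-valued outputs.
accuracy = np.mean(predictions == labels)

# Mean squared error and its root measure how far off the predictions are.
mse = np.mean((predictions - labels) ** 2)
rmse = np.sqrt(mse)

print(accuracy, mse, rmse)
```

For this reason a regression metric such as tf.metrics.mean_squared_error is usually a more informative choice for eval_metric_ops on data like this.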

Run the prediction:

    predictions = estimator.predict(input_fn=input_fn)
    for item in predictions:
        print(item['result'])

Output:

C:\Users\12394\Anaconda3\python.exe C:/Users/12394/PycharmProjects/TensorFlow/exercise.py

INFO:tensorflow:Using default config.

INFO:tensorflow:Using config: {'_model_dir': 'C:\\Users\\12394\\PycharmProjects\\TensorFlow\\logs', '_tf_random_seed': None, '_save_summary_steps': 100, '_save_checkpoints_steps': None, '_save_checkpoints_secs': 600, '_session_config': allow_soft_placement: true

graph_options {

rewrite_options {

meta_optimizer_iterations: ONE

}

}

, '_keep_checkpoint_max': 5, '_keep_checkpoint_every_n_hours': 10000, '_log_step_count_steps': 100, '_train_distribute': None, '_device_fn': None, '_protocol': None, '_eval_distribute': None, '_experimental_distribute': None, '_service': None, '_cluster_spec': <tensorflow.python.training.server_lib.ClusterSpec object at 0x0000010A7BF9D048>, '_task_type': 'worker', '_task_id': 0, '_global_id_in_cluster': 0, '_master': '', '_evaluation_master': '', '_is_chief': True, '_num_ps_replicas': 0, '_num_worker_replicas': 1}

INFO:tensorflow:Calling model_fn.

INFO:tensorflow:Done calling model_fn.

INFO:tensorflow:Graph was finalized.

2019-02-04 14:11:42.490008: I tensorflow/core/platform/cpu_feature_guard.cc:141] Your CPU supports instructions that this TensorFlow binary was not compiled to use: AVX2

2019-02-04 14:11:43.269364: E tensorflow/stream_executor/cuda/cuda_driver.cc:300] failed call to cuInit: CUDA_ERROR_NO_DEVICE: no CUDA-capable device is detected

2019-02-04 14:11:43.274245: I tensorflow/stream_executor/cuda/cuda_diagnostics.cc:163] retrieving CUDA diagnostic information for host: DESKTOP-N58V3DN

2019-02-04 14:11:43.275104: I tensorflow/stream_executor/cuda/cuda_diagnostics.cc:170] hostname: DESKTOP-N58V3DN

INFO:tensorflow:Restoring parameters from C:\Users\12394\PycharmProjects\TensorFlow\logs\model.ckpt-40

INFO:tensorflow:Running local_init_op.

INFO:tensorflow:Done running local_init_op.

[-9.149635]

[-6.8121824]

(7) Complete code

    # -*- coding: utf-8 -*-
    # By Mingqi Yuan, 2019/2/3
    # Requires blog.npz, which is created by the data generation script in (III)
    import numpy as np
    import tensorflow as tf

    # Print training information
    tf.logging.set_verbosity(tf.logging.INFO)


    def network(x, feature_column):
        """
        :purpose: Define the model structure, implemented here with tf.layers
        :param x: dict of input feature tensors
        :param feature_column: feature columns that describe how to read x
        :return: the result of the forward pass, with shape [batch_size, 1]
        """
        net = tf.feature_column.input_layer(x, feature_column)
        net = tf.layers.Dense(units=100)(net)
        net = tf.layers.Dense(units=100)(net)
        net = tf.layers.Dense(units=1)(net)
        return net


    def my_model_fn(features, labels, mode, params):
        """
        :purpose: Model function
        :param features: features returned by the input function
        :param labels: data labels
        :param mode: whether the caller requests training, prediction or evaluation
        :param params: additional parameters passed in by the caller
        :return: an EstimatorSpec holding the loss, training op and metrics that the mode needs
        """
        # Build the network and run the forward pass on the input
        predict = network(features, params['feature_columns'])

        # In prediction mode, only the result needs to be returned
        if mode == tf.estimator.ModeKeys.PREDICT:
            return tf.estimator.EstimatorSpec(
                mode=mode,
                predictions={'result': predict})

        # Give the labels the same [batch_size, 1] shape as predict; otherwise
        # the subtraction in the loss broadcasts to [batch_size, batch_size]
        labels = tf.reshape(labels, [-1, 1])

        # Loss: mean squared error. The signed mean of (predict - labels) is
        # unbounded below, so minimizing it does not fit the data
        loss = tf.losses.mean_squared_error(labels=labels, predictions=predict)

        # Optimizer
        optimizer = tf.train.GradientDescentOptimizer(learning_rate=params['learning_rate'])

        # Training op
        train_op = optimizer.minimize(loss=loss, global_step=tf.train.get_global_step())

        # Evaluation metrics: every metric defined here is computed during evaluation.
        # Accuracy is not meaningful for regression, so mean squared error is reported
        eval_metric_ops = tf.metrics.mean_squared_error(
            labels=labels, predictions=predict, name='mse')

        if mode == tf.estimator.ModeKeys.TRAIN:
            return tf.estimator.EstimatorSpec(
                mode=mode,
                loss=loss,
                train_op=train_op,
                eval_metric_ops={'mse': eval_metric_ops})

        # Evaluation
        if mode == tf.estimator.ModeKeys.EVAL:
            return tf.estimator.EstimatorSpec(
                mode=mode,
                loss=loss,
                eval_metric_ops={'mse': eval_metric_ops})


    # Create the Estimator from the custom model function; the params argument
    # carries the hyperparameters that the model function uses
    feature_columns = [tf.feature_column.numeric_column('att', shape=[2])]

    model_params = {'learning_rate': 0.01,
                    'feature_columns': feature_columns}

    estimator = tf.estimator.Estimator(model_fn=my_model_fn,
                                       params=model_params,
                                       model_dir=r'C:\Users\12394\PycharmProjects\TensorFlow\logs')


    def input_fn():
        """
        :purpose: Feed in the training data
        :return: features and labels
        """
        data = np.load(r'C:\Users\12394\PycharmProjects\TensorFlow\blog.npz')
        dataset = tf.data.Dataset.from_tensor_slices((data['att'], data['label'])).batch(5)
        iterator = dataset.make_one_shot_iterator()
        att, label = iterator.get_next()
        return {'att': att}, label


    # Train
    estimator.train(input_fn=input_fn, steps=30000)

    # Evaluate
    estimator.evaluate(input_fn=input_fn, steps=200)

    # Predict
    predictions = estimator.predict(input_fn=input_fn)
    for item in predictions:
        print(item['result'])

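(V) Summary

In this chapter we covered the general approach to building a custom Estimator. If you have any questions, leave a comment below and I will reply as soon as I can. Thanks for your support!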