Hand-Writing HashMap (JDK 8), Day 1

I. Analyzing the HashMap structure

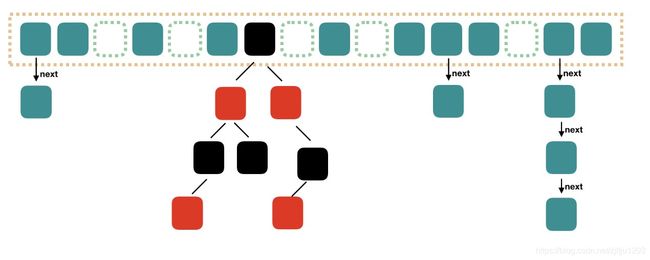

首先先看一下hashMap的结构图(从ImportNew上摘过来的图):

JDK 8 reworked HashMap to use an array + linked list + red-black tree structure. Here are several important HashMap fields:

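// Initial capacity, 16 by default
static final int DEFAULT_INITIAL_CAPACITY = 1 << 4;

// Maximum capacity, 2^30 (roughly half of Integer.MAX_VALUE); the table never grows beyond it
static final int MAXIMUM_CAPACITY = 1 << 30;

// Default load factor: how full the table may get; one of the key inputs for deciding when to resize
static final float DEFAULT_LOAD_FACTOR = 0.75f;

// Resize threshold: the map resizes once size exceeds this value
transient int threshold;

// Load factor of this map
final float loadFactor;

// Bucket array
transient Node<K, V>[] table;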

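Two parameters have the biggest impact on HashMap performance: capacity and load factor. Capacity is the number of buckets in the table. The load factor is how full the table may get before it grows. A larger load factor packs more entries into the table and uses space better, but it raises the chance of collisions. A smaller load factor lowers the chance of collisions but wastes more space.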
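The trade-off can be made concrete with a small sketch. It computes the resize threshold as capacity × load factor, the same formula resize() applies, for a 16-bucket table and a few load factors. The class and method names are hypothetical and used only for illustration.

```java
public class LoadFactorDemo {
    // Resize threshold as computed in resize(): capacity * loadFactor, truncated to int
    static int threshold(int capacity, float loadFactor) {
        return (int) (capacity * loadFactor);
    }

    public static void main(String[] args) {
        int capacity = 16;
        for (float lf : new float[] {0.5f, 0.75f, 1.0f}) {
            // Entries the table can hold before the next put triggers a resize
            System.out.println("loadFactor=" + lf + " -> threshold=" + threshold(capacity, lf));
        }
    }
}
```

With 16 buckets, the three factors give thresholds of 8, 12 and 16. Because putVal checks ++size > threshold, the default 0.75 lets 12 entries in, and the 13th put triggers a resize.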

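threshold is the resize limit of a HashMap: once size exceeds it, the table grows. Normally it equals capacity × load factor. In the constructor, though, threshold temporarily holds tableSizeFor(initialCapacity), which is the smallest power of two not less than the requested capacity. The first call to resize() uses that value as the table length and then sets threshold to capacity × load factor.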
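The rounding can be checked with a sketch. It copies the tableSizeFor bit trick (the class name is hypothetical) and then replays what happens for new ImitatedWritingHashMap(3, 0.5f). The shifts smear the highest set bit of cap - 1 into every lower bit, so adding 1 gives the next power of two.

```java
public class TableSizeForDemo {
    static final int MAXIMUM_CAPACITY = 1 << 30;

    // Same bit trick as tableSizeFor: smallest power of two >= cap (1 when cap <= 1)
    static int tableSizeFor(int cap) {
        int n = cap - 1;
        n |= n >>> 1;
        n |= n >>> 2;
        n |= n >>> 4;
        n |= n >>> 8;
        n |= n >>> 16;
        return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;
    }

    public static void main(String[] args) {
        for (int cap : new int[] {0, 1, 3, 16, 17}) {
            System.out.println("tableSizeFor(" + cap + ") = " + tableSizeFor(cap));
        }
        // new ImitatedWritingHashMap(3, 0.5f): the constructor stores tableSizeFor(3) = 4 in threshold;
        // the first resize() uses 4 as the capacity and sets threshold = (int) (4 * 0.5f) = 2
        int capacity = tableSizeFor(3);
        System.out.println("capacity=" + capacity + ", threshold=" + (int) (capacity * 0.5f));
    }
}
```

The map built in main() therefore starts with 4 buckets. It resizes when a third entry arrives.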

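There is one more parameter: TREEIFY_THRESHOLD, the criterion for turning a bucket's linked list into a red-black tree. It defaults to 8, and a bucket's list is converted into a red-black tree once it grows past 8 nodes. In JDK 8 the conversion also requires the table to have at least MIN_TREEIFY_CAPACITY (64) buckets. With a smaller table, HashMap resizes instead.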
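The exact trigger point is easiest to see by simulating it. The sketch below reuses the list-walking loop shape of putVal and its check binCount >= TREEIFY_THRESHOLD - 1, and grows a single bucket until that check fires. The Node stand-in and the method names are hypothetical simplifications, not HashMap's own classes, and the table-size check against MIN_TREEIFY_CAPACITY is left out.

```java
public class TreeifyTriggerDemo {
    static final int TREEIFY_THRESHOLD = 8;

    // Minimal stand-in for a bucket's list node (hypothetical, demo only)
    static final class Node {
        final int key;
        Node next;
        Node(int key) { this.key = key; }
    }

    // Appends key at the tail with the same loop shape as putVal;
    // returns true when putVal's treeify check would fire for this append
    static boolean append(Node head, int key) {
        Node p = head;
        for (int binCount = 0; ; ++binCount) {
            if (p.next == null) {
                p.next = new Node(key);
                return binCount >= TREEIFY_THRESHOLD - 1;
            }
            p = p.next;
        }
    }

    // Grows one bucket until the check fires; returns the list length at that moment
    static int lengthWhenTreeifyFires() {
        Node head = new Node(0);
        int length = 1;
        while (true) {
            boolean fires = append(head, length);
            length++;
            if (fires) return length;
        }
    }

    public static void main(String[] args) {
        System.out.println("treeify check fires at list length " + lengthWhenTreeifyFires());
    }
}
```

The check fires when the 9th node is appended, that is, to a bucket that already holds 8 nodes. This is what "grows past 8" means above.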

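Today is day one of hand-writing HashMap. Today I'll mainly write these core methods:

1. Constructors
2. The resize function
3. The node lookup function (getNode)
4. get
5. put
6. The hash function
7. A static nested Node class that serves as the linked list node. Today covers only the linked list; red-black trees are left for later.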
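Both put and get turn a hash into a bucket index. The sketch below copies the h ^ (h >>> 16) spreading step from hash() and the (n - 1) & hash index formula used in putVal and getNode. The class and method names are hypothetical. When n is a power of two, the mask gives the same result as Math.floorMod(hash, n) but is cheaper. Without spreading, hash codes that differ only in their high bits collide in a small table.

```java
public class HashSpreadDemo {
    // Same as hash(): XOR the high 16 bits into the low 16 bits
    static int spread(int h) {
        return h ^ (h >>> 16);
    }

    // Bucket index for a table of length n (n must be a power of two)
    static int indexFor(int hash, int n) {
        return (n - 1) & hash;
    }

    public static void main(String[] args) {
        int n = 16;
        // Two hash codes that differ only above bit 15
        int h1 = 0x10000, h2 = 0x20000;
        System.out.println("raw:    " + indexFor(h1, n) + ", " + indexFor(h2, n));
        System.out.println("spread: " + indexFor(spread(h1), n) + ", " + indexFor(spread(h2), n));
    }
}
```

Unspread, both codes map to bucket 0. After spreading they land in buckets 1 and 2.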

II. Implementation code

import java.io.Serializable;
import java.util.AbstractMap;
import java.util.Map;
import java.util.Objects;
import java.util.Set;

public class ImitatedWritingHashMap<K, V> extends AbstractMap<K, V> implements Map<K, V>, Cloneable, Serializable {

    // Initial capacity, 16 by default
    static final int DEFAULT_INITIAL_CAPACITY = 1 << 4;

    // Maximum capacity, 2^30 (roughly half of Integer.MAX_VALUE); the table never grows beyond it
    static final int MAXIMUM_CAPACITY = 1 << 30;

    // Default load factor: how full the table may get; one of the key inputs for deciding when to resize
    static final float DEFAULT_LOAD_FACTOR = 0.75f;

    // List length at which a bucket would become a red-black tree (trees are not implemented yet)
    static final int TREEIFY_THRESHOLD = 8;

    // Resize threshold: the map resizes once size exceeds this value
    transient int threshold;

    // Load factor of this map
    final float loadFactor;

    // Bucket array
    transient Node<K, V>[] table;

    // Cached entry-set view (unused on day one)
    transient Set<Map.Entry<K, V>> entrySet;

    // Number of key-value mappings
    transient int size;

    // Structural modification count
    transient int modCount;

    public ImitatedWritingHashMap() {
        this.loadFactor = DEFAULT_LOAD_FACTOR;
        System.out.println("Default constructor");
    }

    public ImitatedWritingHashMap(int initialCapacity, float loadFactor) {
        System.out.println("Constructor (initial capacity: " + initialCapacity + ", load factor: " + loadFactor + ")");
        if (initialCapacity < 0)
            throw new IllegalArgumentException("Illegal initial capacity: " + initialCapacity);
        if (initialCapacity > MAXIMUM_CAPACITY)
            initialCapacity = MAXIMUM_CAPACITY;
        if (loadFactor <= 0 || Float.isNaN(loadFactor))
            throw new IllegalArgumentException("Illegal load factor: " + loadFactor);
        this.loadFactor = loadFactor;
        // Temporarily store the initial table length (next power of two) in threshold;
        // the first resize() turns it into the real capacity
        this.threshold = tableSizeFor(initialCapacity);
    }

    public ImitatedWritingHashMap(int initialCapacity) {
        this(initialCapacity, DEFAULT_LOAD_FACTOR);
    }

    // Bucket entry; nodes in the same bucket form a singly linked list
    static class Node<K, V> implements Map.Entry<K, V> {
        int hash;
        K key;
        V value;
        Node<K, V> next;

        public Node(int hash, K key, V value, Node<K, V> next) {
            this.hash = hash;
            this.key = key;
            this.value = value;
            this.next = next;
        }

        @Override
        public K getKey() {
            return key;
        }

        @Override
        public V getValue() {
            return value;
        }

        @Override
        public V setValue(V newValue) {
            V oldValue = value;
            value = newValue;
            return oldValue;
        }

        @Override
        public String toString() {
            return "Node{key=" + key + ", value=" + value + '}';
        }

        @Override
        public boolean equals(Object o) {
            if (this == o) return true;
            // Map.Entry contract: equal to any entry with an equal key and value
            if (!(o instanceof Map.Entry)) return false;
            Map.Entry<?, ?> e = (Map.Entry<?, ?>) o;
            return Objects.equals(key, e.getKey()) && Objects.equals(value, e.getValue());
        }

        @Override
        public int hashCode() {
            // Map.Entry contract: hash of the key XOR hash of the value
            return Objects.hashCode(key) ^ Objects.hashCode(value);
        }
    }

    // Smallest power of two >= cap, capped at MAXIMUM_CAPACITY
    static final int tableSizeFor(int cap) {
        int n = cap - 1;
        n |= n >>> 1;
        n |= n >>> 2;
        n |= n >>> 4;
        n |= n >>> 8;
        n |= n >>> 16;
        return (n < 0) ? 1 : (n >= MAXIMUM_CAPACITY) ? MAXIMUM_CAPACITY : n + 1;
    }

    // Initializes the table, or doubles its size
    final Node<K, V>[] resize() {
        Node<K, V>[] oldTab = table;
        // Old capacity
        int oldCap = (oldTab == null) ? 0 : oldTab.length;
        // Old threshold
        int oldThr = threshold;
        int newCap, newThr = 0;
        if (oldCap > 0) {
            if (oldCap >= MAXIMUM_CAPACITY) {
                // Already at maximum capacity: stop growing
                threshold = Integer.MAX_VALUE;
                return oldTab;
            } else if ((newCap = oldCap << 1) < MAXIMUM_CAPACITY && oldCap >= DEFAULT_INITIAL_CAPACITY) {
                // Double the capacity and the threshold
                newThr = oldThr << 1;
            }
        } else if (oldThr > 0) {
            // The constructor stored the initial capacity in threshold
            newCap = oldThr;
        } else {
            // Default constructor: use the default capacity and load factor
            newCap = DEFAULT_INITIAL_CAPACITY;
            newThr = (int) (DEFAULT_LOAD_FACTOR * DEFAULT_INITIAL_CAPACITY);
        }
        if (newThr == 0) {
            float ft = (float) newCap * loadFactor;
            newThr = (newCap < MAXIMUM_CAPACITY && ft < (float) MAXIMUM_CAPACITY ? (int) ft : Integer.MAX_VALUE);
        }
        threshold = newThr;
        @SuppressWarnings("unchecked")
        Node<K, V>[] newTab = (Node<K, V>[]) new Node[newCap];
        table = newTab;
        // Move the existing entries into the new table
        if (oldTab != null) {
            for (int j = 0; j < oldCap; ++j) {
                Node<K, V> oldNode;
                if ((oldNode = oldTab[j]) != null) {
                    oldTab[j] = null;
                    if (oldNode.next == null) {
                        // Single node: compute its new index directly
                        newTab[oldNode.hash & (newCap - 1)] = oldNode;
                    } else if (false) {
                        System.out.println("Placeholder for red-black tree handling");
                    } else {
                        Node<K, V> loHead = null, loTail = null; // nodes that stay at index j
                        Node<K, V> hiHead = null, hiTail = null; // nodes that move to index j + oldCap
                        Node<K, V> next;
                        /*
                         * Core difficulty:
                         * split the list into two lists (I'm not sure what the advantage of
                         * splitting and relinking is; pointers from more experienced readers welcome...)
                         */
                        do {
                            next = oldNode.next;
                            if ((oldNode.hash & oldCap) == 0) {
                                if (loTail == null)
                                    loHead = oldNode;
                                else
                                    loTail.next = oldNode;
                                loTail = oldNode;
                            } else {
                                if (hiTail == null)
                                    hiHead = oldNode;
                                else
                                    hiTail.next = oldNode;
                                hiTail = oldNode;
                            }
                        } while ((oldNode = next) != null);
                        if (loTail != null) {
                            loTail.next = null;
                            newTab[j] = loHead;
                        }
                        if (hiTail != null) {
                            hiTail.next = null;
                            newTab[j + oldCap] = hiHead;
                        }
                    }
                }
            }
        }
        return newTab;
    }

    /**
     * @param hash         hash of the key
     * @param key          the key
     * @param value        the value to store
     * @param onlyIfAbsent if true, do not overwrite an existing value
     * @param evict        reserved; used by LinkedHashMap
     * @return the previous value, or null if there was none
     */
    final V putVal(int hash, K key, V value, boolean onlyIfAbsent, boolean evict) {
        System.out.println("putVal: " + hash + "," + key + "," + value);
        Node<K, V>[] hashTab;
        Node<K, V> tempNode;
        int n, i;
        // Create the table lazily on the first put
        if ((hashTab = table) == null || (n = hashTab.length) == 0)
            n = (hashTab = resize()).length;
        // Empty bucket: place the new node directly
        if ((tempNode = hashTab[i = (n - 1) & hash]) == null)
            hashTab[i] = newNode(hash, key, value, null);
        else {
            Node<K, V> insertNode;
            K k;
            // The first node already holds this key
            if (tempNode.hash == hash &&
                    ((k = tempNode.key) == key || (key != null && key.equals(k))))
                insertNode = tempNode;
            else if (false) {
                System.out.println("Placeholder for red-black tree insertion");
            } else {
                // Walk the list: append at the tail, or stop at a node with the same key
                for (int binCount = 0; ; ++binCount) {
                    if ((insertNode = tempNode.next) == null) {
                        tempNode.next = newNode(hash, key, value, null);
                        // binCount is 0 on the first node, hence the - 1
                        if (binCount >= TREEIFY_THRESHOLD - 1) {
                            System.out.println("Placeholder: convert list to red-black tree...");
                        }
                        break;
                    }
                    if (insertNode.hash == hash &&
                            ((k = insertNode.key) == key || (key != null && key.equals(k))))
                        break;
                    tempNode = insertNode;
                }
            }
            // Existing mapping found: replace its value and return the old one
            if (insertNode != null) {
                V oldValue = insertNode.value;
                if (!onlyIfAbsent || oldValue == null)
                    insertNode.value = value;
                return oldValue;
            }
        }
        ++modCount;
        // Grow the table once size exceeds the threshold
        if (++size > threshold)
            resize();
        // afterNodeInsertion(evict);  // LinkedHashMap hook, not implemented
        return null;
    }

    @Override
    public V put(K key, V value) {
        return putVal(hash(key), key, value, false, true);
    }

    @Override
    public V get(Object key) {
        Node<K, V> e;
        return (e = getNode(hash(key), key)) == null ? null : e.value;
    }

    final Node<K, V> getNode(int hash, Object key) {
        Node<K, V>[] tab;
        Node<K, V> first, e;
        int n;
        K k;
        if ((tab = table) != null && (n = tab.length) > 0 && (first = tab[(n - 1) & hash]) != null) {
            // Check the first node in the bucket
            if (first.hash == hash && ((k = first.key) == key || (key != null && key.equals(k))))
                return first;
            if ((e = first.next) != null) {
                if (false) {
                    System.out.println("Placeholder: red-black tree lookup...");
                }
                // Walk the rest of the list
                do {
                    if (e.hash == hash && ((k = e.key) == key || (key != null && key.equals(k))))
                        return e;
                } while ((e = e.next) != null);
            }
        }
        return null;
    }

    // Spread the high 16 bits into the low 16 bits, since the bucket index uses only the low bits
    static final int hash(Object key) {
        int h;
        return (key == null) ? 0 : (h = key.hashCode()) ^ (h >>> 16);
    }

    Node<K, V> newNode(int hash, K key, V value, Node<K, V> next) {
        return new Node<>(hash, key, value, next);
    }

    // AbstractMap's default size() goes through entrySet(), which is not implemented yet
    @Override
    public int size() {
        return size;
    }

    public static void main(String[] args) {
        ImitatedWritingHashMap<String, String> imitatedWritingHashMap = new ImitatedWritingHashMap<>(3, 0.5f);
        imitatedWritingHashMap.put("1", "1");
        System.out.println(imitatedWritingHashMap.get("1"));
    }

    // Not implemented on day one: AbstractMap methods that rely on it
    // (e.g. containsKey, containsValue, toString) do not work yet
    @Override
    public Set<Map.Entry<K, V>> entrySet() {
        return null;
    }
}
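About the open question in resize(), namely why each list is split into two: the capacity always doubles, so a node's new index (hash & (newCap - 1)) gains exactly one extra bit, the oldCap bit. A node in old bucket j therefore lands either at j (when hash & oldCap == 0) or at j + oldCap. Splitting into a "lo" list and a "hi" list lets resize() move each half as a unit without recomputing every index, and appending at the tails keeps the nodes' original order. The sketch below checks that claim for a few hashes that share bucket 5 in a 16-bucket table. The class and method names are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

public class ResizeSplitDemo {
    // For a node in old bucket j, resize() places it at j or j + oldCap depending on (hash & oldCap)
    static int splitIndex(int hash, int oldCap) {
        int j = hash & (oldCap - 1);
        return (hash & oldCap) == 0 ? j : j + oldCap;
    }

    public static void main(String[] args) {
        int oldCap = 16, newCap = oldCap << 1;
        // Hashes that all share old bucket 5 in a 16-bucket table
        int[] hashes = {5, 21, 37, 53};
        List<Integer> lo = new ArrayList<>(), hi = new ArrayList<>();
        for (int h : hashes) {
            int idx = splitIndex(h, oldCap);
            // Must match recomputing the index from scratch in the doubled table
            if (idx != (h & (newCap - 1))) throw new AssertionError("mismatch for " + h);
            (idx == 5 ? lo : hi).add(h);
        }
        System.out.println("stay at 5: " + lo + ", move to 21: " + hi);
    }
}
```

Hashes 5 and 37 stay in bucket 5, and 21 and 53 move to bucket 21. Each group keeps the order it had in the old list.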