HF-Net (1): Extracting NetVLAD-based global descriptors

Reference: the HF-Net Git repository

0. Data preparation and pretrained weights

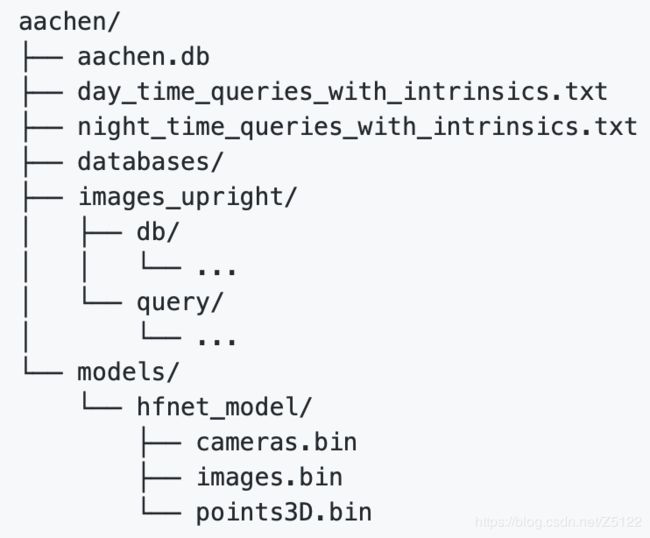

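Taking the Aachen Day-Night dataset as an example, the dataset has the directory structure shown below. The aachen folder is stored under the DATA_PATH that was set when building HF-Net.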

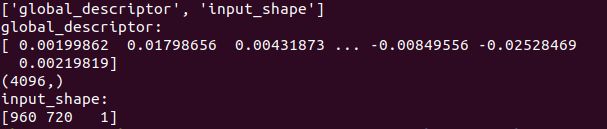

NetVLAD-based global descriptor extraction means generating a global descriptor for each image under $DATA_PATH/aachen/images_upright/query (using conv3_3 of VGG).

The Aachen Day-Night dataset is a standard benchmark dataset for image retrieval.

Weights: create a weights folder under DATA_PATH and place the pretrained NetVLAD weights in it.
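Before running the export it can help to confirm that the folders described above are in place. The following is a minimal sketch under the layout described in this section ($DATA_PATH/aachen/images_upright/query and $DATA_PATH/weights). The helper name `check_layout` and reading `DATA_PATH` from an environment variable are assumptions made for illustration; they are not part of HF-Net, which takes the path from `hfnet.settings`.

```python
import os
from pathlib import Path


def check_layout(data_path, dataset='aachen'):
    """Return the expected folders that are missing under data_path.

    Hypothetical helper, not part of HF-Net; it only mirrors the layout
    described in this post.
    """
    root = Path(data_path)
    expected = [
        root / dataset / 'images_upright' / 'query',  # query images
        root / 'weights',                             # pretrained NetVLAD weights
    ]
    return [p for p in expected if not p.is_dir()]


if __name__ == '__main__':
    # Assumption: DATA_PATH is exported as an environment variable.
    missing = check_layout(os.environ.get('DATA_PATH', '.'))
    for path in missing:
        print(f'missing: {path}')
    if not missing:
        print('layout looks complete')
```

An empty list means both folders were found; anything else lists what still has to be created.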

1. Core feature-extraction code

import numpy as np
import argparse
import yaml
import logging
from pathlib import Path
from tqdm import tqdm
from pprint import pformat

logging.basicConfig(format='[%(asctime)s %(levelname)s] %(message)s',
                    datefmt='%m/%d/%Y %H:%M:%S',
                    level=logging.INFO)

from hfnet.models import get_model  # noqa: E402
from hfnet.datasets import get_dataset  # noqa: E402
from hfnet.utils import tools  # noqa: E402
from hfnet.settings import EXPER_PATH, DATA_PATH  # noqa: E402

if __name__ == '__main__':
    parser = argparse.ArgumentParser()
    parser.add_argument('config', type=str)
    parser.add_argument('export_name', type=str)
    parser.add_argument('--keys', type=str, default='*')
    parser.add_argument('--exper_name', type=str)
    parser.add_argument('--as_dataset', action='store_true')
    args = parser.parse_args()

    export_name = args.export_name
    exper_name = args.exper_name
    with open(args.config, 'r') as f:
        # safe_load: plain yaml.load(f) without a Loader fails on recent PyYAML
        config = yaml.safe_load(f)
    keys = '*' if args.keys == '*' else args.keys.split(',')

    # Choose the output directory
    if args.as_dataset:
        base_dir = Path(DATA_PATH, export_name)
    else:
        base_dir = Path(EXPER_PATH, 'exports')
        base_dir = Path(base_dir, ((exper_name+'/') if exper_name else '') + export_name)
    base_dir.mkdir(parents=True, exist_ok=True)

    # Choose the weights to load
    if exper_name:
        # Update only the model config (not the dataset)
        with open(Path(EXPER_PATH, exper_name, 'config.yaml'), 'r') as f:
            config['model'] = tools.dict_update(
                yaml.safe_load(f)['model'], config.get('model', {}))
        checkpoint_path = Path(EXPER_PATH, exper_name)
        if config.get('weights', None):
            checkpoint_path = Path(checkpoint_path, config['weights'])
    else:
        if config.get('weights', None):
            # Pretrained weights are looked up under DATA_PATH/weights
            checkpoint_path = Path(DATA_PATH, 'weights', config['weights'])
        else:
            checkpoint_path = None
            logging.info('No weights provided.')
    logging.info(f'Starting export with configuration:\n{pformat(config)}')

    with get_model(config['model']['name'])(
            data_shape={'image': [None, None, None, config['model']['image_channels']]},
            **config['model']) as net:
        if checkpoint_path is not None:
            net.load(str(checkpoint_path))
        dataset = get_dataset(config['data']['name'])(**config['data'])
        test_set = dataset.get_test_set()

        # Run the network on every image and save one .npz file per image
        for data in tqdm(test_set):
            predictions = net.predict(data, keys=keys)
            predictions['input_shape'] = data['image'].shape
            name = data['name'].decode('utf-8')
            Path(base_dir, Path(name).parent).mkdir(parents=True, exist_ok=True)
            np.savez(Path(base_dir, '{}.npz'.format(name)), **predictions)
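The script writes one .npz file per image, containing the requested prediction keys plus `input_shape`. As a rough sketch of how such a file could be read back, the helper below loads the descriptor and the stored image shape. The function name `load_global_descriptor` is hypothetical, and it assumes the export was run with `--keys global_descriptor` so that the `global_descriptor` entry exists.

```python
import numpy as np


def load_global_descriptor(npz_path):
    """Read one exported file and return (descriptor, input_shape).

    Hypothetical helper; assumes the file holds a 'global_descriptor'
    entry, i.e. the export was run with --keys global_descriptor.
    """
    with np.load(str(npz_path)) as data:
        descriptor = np.asarray(data['global_descriptor'])
        # 'input_shape' is added by the export script for every image.
        input_shape = tuple(int(v) for v in data['input_shape'])
    return descriptor, input_shape
```

Calling it on any file under the export directory and printing `descriptor.shape` is a quick way to confirm that the export produced what was expected.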

To run the extraction on your own dataset, make the following changes:

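1.1 Go to hfnet/hfnet/configs and make a copy of an existing config, for example netvlad_export_aachen.yaml, then edit its parameters: set data/name to the name of your image folder under DATA_PATH. The script also passes this name to get_dataset, so it must match the dataset file created in 1.3. The image folder should contain a db folder holding the database (reference) images and a query folder holding the query images.

Set both load_db and load_queries to true to generate global descriptors for both sets. If load_db is false, no features are extracted for the database images; load_queries works the same way for the query images.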

1.2 Under $DATA_PATH/, create a folder for your images containing two subfolders, db and query. The folder name must match the data/name value from 1.1.

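1.3 Go to hfnet/hfnet/datasets and make a copy of aachen.py, naming the file after the folder from 1.2. Then edit it: rename the class to the folder name from 1.2 with its first letter capitalized, and set dataset_folder to point to the image folder from 1.2.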
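The three steps above can be sketched in a few lines of Python. This is only an illustration of the naming rules described here, not code from HF-Net: the function names are hypothetical, the config is handled as a plain dict rather than a YAML file, and the base dict in the usage part is a stand-in, not the real contents of netvlad_export_aachen.yaml. How dataset names containing underscores map to class names is not covered here; check get_dataset in the repository for that case.

```python
import copy
from pathlib import Path


def customize_config(base_config, dataset_name, load_db=True, load_queries=True):
    """Step 1.1: copy a config dict and point its data section at the new dataset."""
    config = copy.deepcopy(base_config)  # leave the original config untouched
    data = config.setdefault('data', {})
    data['name'] = dataset_name
    data['load_db'] = load_db
    data['load_queries'] = load_queries
    return config


def prepare_dataset_dirs(data_path, dataset_name):
    """Step 1.2: create <data_path>/<dataset_name>/db and .../query."""
    root = Path(data_path, dataset_name)
    for sub in ('db', 'query'):
        (root / sub).mkdir(parents=True, exist_ok=True)
    return root


def dataset_class_name(dataset_name):
    """Step 1.3: folder name with its first letter upper-cased."""
    return dataset_name[:1].upper() + dataset_name[1:]


if __name__ == '__main__':
    # Stand-in config; the real YAML file has more fields.
    base = {'data': {'name': 'aachen', 'load_db': True, 'load_queries': True}}
    cfg = customize_config(base, 'mydata', load_db=False)
    print(cfg['data'])
    print(dataset_class_name('mydata'))
```

Keeping the three names (config value, folder, dataset file and class) derived from one string avoids the most common mistake in this setup, which is a mismatch between them.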

2. Run feature extraction

python3 hfnet/export_predictions.py hfnet/configs/netvlad_export_aachen.yaml netvlad/aachen --keys global_descriptor

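# Counting the script itself as the first argument, the second argument is the configuration (YAML) file and the third is the path under which the output is saved

# For your own dataset, point the second argument at the YAML file you copied and renamed in 1.1, and change the third argument to wherever you want the output stored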
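To see where the results end up for a given command line, the directory logic of the script in section 1 can be restated as a small function. This is a sketch that mirrors that logic; `resolve_export_dir` is a hypothetical name, and the paths in the usage example are placeholders rather than real locations.

```python
from pathlib import Path


def resolve_export_dir(exper_path, data_path, export_name,
                       exper_name=None, as_dataset=False):
    """Mirror how the export script in section 1 picks its output directory."""
    if as_dataset:
        # --as_dataset writes the export under DATA_PATH
        return Path(data_path, export_name)
    prefix = (exper_name + '/') if exper_name else ''
    return Path(exper_path, 'exports', prefix + export_name)


if __name__ == '__main__':
    # Placeholder paths for illustration only.
    print(resolve_export_dir('/path/to/exper', '/path/to/data', 'netvlad/aachen'))
```

For the command above (no --exper_name, no --as_dataset), the .npz files therefore land under $EXPER_PATH/exports/netvlad/aachen, in subfolders that follow each image's name.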