CS231n Spring 2019 Assignment 2: Dropout

Dropout

- Dropout forward pass

- Dropout backward pass

- Fully-connected nets with Dropout

- Results

- Links

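Last time we implemented Batch Normalization and Layer Normalization, which felt a little difficult on first contact. This time the task is a regularization technique: Dropout. Research on it dates back to 2012 (Improving neural networks by preventing co-adaptation of feature detectors), and in 2014 the same team published Dropout: A Simple Way to Prevent Neural Networks from Overfitting. The main reading for this assignment is:

- Neural Networks Part 2: Setting up the Data and the Loss, which covers many regularization methods, including Dropout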

- Lecture 8

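The goal is to complete Dropout.ipynb. This one is relatively easy: write dropout_forward and dropout_backward, then add them to the fully-connected network from before, placing the dropout layer after the ReLU layer.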

Dropout forward pass

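Dropout is a regularization technique proposed to combat overfitting. During training, each neuron's activation is kept with probability p and set to 0 otherwise. (Check whether a framework's p is the keep probability, as with keep_prob in TensorFlow 1.x, or the drop probability, as in PyTorch. Also distinguish dropout from DropConnect, mentioned in the course notes, which instead sets the weights between neurons to 0.) There are two common ways to understand why dropout works:

- An overfitting network has redundant representational capacity, and dropout prevents its features from co-adapting

- Because units are dropped at random on every pass, training amounts to training many sub-models whose predictions are combined at the end, and combining models is known to improve results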

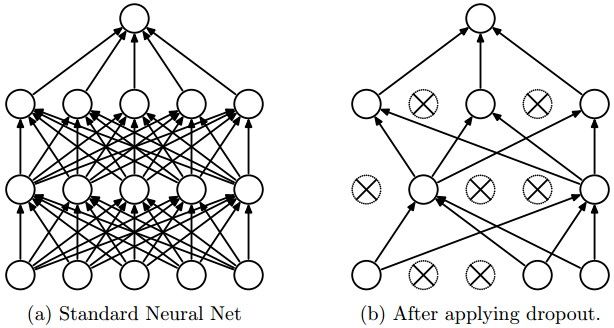

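The figure above illustrates the dropout process.

The forward pass of dropout differs between training and testing. During training we keep each activation with probability p, so which neurons survive is random. To make the test-time activations match their expected value during training, the plain approach multiplies the activations by p at test time. The more popular alternative is inverted dropout: leave the test-time forward pass untouched and divide by p during training instead, so the expectations of the two phases again agree. This is similar to Batch Normalization using the running averages accumulated during training at test time: in both cases the goal is to average out the randomness at test time.

Now for the code. The training phase is built around a mask, the test phase needs no extra work, and what is implemented here is inverted dropout: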

dropout_forward(x, dropout_param) -> out, cache

    import numpy as np

    def dropout_forward(x, dropout_param):
        """
        Performs the forward pass for (inverted) dropout.

        Inputs:
        - x: Input data, of any shape
        - dropout_param: A dictionary with the following keys:
          - p: Dropout parameter. We keep each neuron output with probability p.
          - mode: 'test' or 'train'. If the mode is train, then perform dropout;
            if the mode is test, then just return the input.
          - seed: Seed for the random number generator. Passing seed makes this
            function deterministic, which is needed for gradient checking but not
            in real networks.

        Outputs:
        - out: Array of the same shape as x.
        - cache: tuple (dropout_param, mask). In training mode, mask is the dropout
          mask that was used to multiply the input; in test mode, mask is None.

        NOTE: Please implement **inverted** dropout, not the vanilla version of dropout.
        See http://cs231n.github.io/neural-networks-2/#reg for more details.

        NOTE 2: Keep in mind that p is the probability of **keeping** a neuron
        output; this might be contrary to some sources, where it is referred to
        as the probability of dropping a neuron output.
        """
        p, mode = dropout_param['p'], dropout_param['mode']
        if 'seed' in dropout_param:
            np.random.seed(dropout_param['seed'])

        mask = None
        out = None

        if mode == 'train':
            #######################################################################
            # TODO: Implement training phase forward pass for inverted dropout.   #
            # Store the dropout mask in the mask variable.                        #
            #######################################################################
            # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
            # Keep each entry with probability p and scale the survivors by 1/p.
            mask = (np.random.rand(*x.shape) < p) / p
            out = x * mask
            # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
            #######################################################################
            #                           END OF YOUR CODE                          #
            #######################################################################
        elif mode == 'test':
            #######################################################################
            # TODO: Implement the test phase forward pass for inverted dropout.   #
            #######################################################################
            # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
            # Inverted dropout leaves the input unchanged at test time.
            out = x
            # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
            #######################################################################
            #                            END OF YOUR CODE                         #
            #######################################################################

        cache = (dropout_param, mask)
        out = out.astype(x.dtype, copy=False)

        return out, cache
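As a quick sanity check of the expectation argument, here is a minimal sketch comparing the two variants on random data. The helper names `inverted_dropout` and `vanilla_dropout` and the test data are assumptions made for this illustration; they are not part of the assignment code.

```python
import numpy as np

def inverted_dropout(x, p, rng):
    """Training-mode inverted dropout: keep with probability p, scale by 1/p."""
    mask = (rng.random(x.shape) < p) / p
    return x * mask

def vanilla_dropout(x, p, rng):
    """Training-mode vanilla dropout: keep with probability p, no scaling."""
    return x * (rng.random(x.shape) < p)

rng = np.random.default_rng(0)
x = rng.standard_normal((500, 500)) + 10.0  # activations with mean close to 10
p = 0.25                                    # keep probability

train_inverted = inverted_dropout(x, p, rng)
train_vanilla = vanilla_dropout(x, p, rng)

print("input mean:            ", x.mean())
print("inverted, train output:", train_inverted.mean())  # close to the input mean
print("vanilla, train output: ", train_vanilla.mean())   # close to p * input mean
print("vanilla, test output:  ", (p * x).mean())         # test-time scaling by p
```

With inverted dropout the training-time mean already matches the unmodified input, which is why the test pass can return x as is. Vanilla dropout only matches after the test-time multiplication by p.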

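A note:

Seeing mask = (np.random.rand(*x.shape) < p) / p, some readers wonder how a boolean array can be divided by p. At that point (np.random.rand(*x.shape) < p) is indeed a boolean array, but booleans behave as 0 and 1: in the division by p, NumPy converts False to 0. and True to 1., so the mask holds 0 for dropped units and 1/p for kept ones.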
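The type promotion can be checked directly. The snippet below is a small illustration with an arbitrary shape and seed chosen for the example:

```python
import numpy as np

np.random.seed(0)
p = 0.5
keep = np.random.rand(2, 3) < p  # boolean array: True where the unit is kept
mask = keep / p                  # true division promotes bool to float

print(keep.dtype)       # bool
print(mask.dtype)       # float64
print(np.unique(mask))  # only values from {0.0, 1/p} can appear
```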

Dropout backward pass

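In the backward pass, the mask saved during the forward pass tells us which neurons were kept and which were set to 0, so the upstream gradient is passed only to the kept neurons, scaled by the same 1/p factor already stored in the mask. In test mode nothing extra is done. The code:

dropout_backward(dout, cache) -> dx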

    def dropout_backward(dout, cache):
        """
        Perform the backward pass for (inverted) dropout.

        Inputs:
        - dout: Upstream derivatives, of any shape
        - cache: (dropout_param, mask) from dropout_forward.
        """
        dropout_param, mask = cache
        mode = dropout_param['mode']

        dx = None
        if mode == 'train':
            #######################################################################
            # TODO: Implement training phase backward pass for inverted dropout   #
            #######################################################################
            # *****START OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
            # Gradients flow only through kept units, with the same 1/p scaling.
            dx = mask * dout
            # *****END OF YOUR CODE (DO NOT DELETE/MODIFY THIS LINE)*****
            #######################################################################
            #                          END OF YOUR CODE                           #
            #######################################################################
        elif mode == 'test':
            dx = dout
        return dx
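One way to convince yourself the backward pass is right is a numerical gradient check with a fixed seed, so that the same mask is drawn on every forward call. The sketch below is self-contained and uses simplified signatures (`p` and `seed` passed directly, plus a hypothetical `numerical_gradient` helper) rather than the assignment's own gradient-check utilities.

```python
import numpy as np

def dropout_forward(x, p, seed):
    """Simplified training-mode inverted dropout with a fixed seed."""
    np.random.seed(seed)
    mask = (np.random.rand(*x.shape) < p) / p
    return x * mask, mask

def dropout_backward(dout, mask):
    """Pass the upstream gradient through the saved mask."""
    return dout * mask

def numerical_gradient(f, x, dout, h=1e-5):
    """Centered-difference gradient of sum(f(x) * dout) with respect to x."""
    grad = np.zeros_like(x)
    for idx in np.ndindex(*x.shape):
        old = x[idx]
        x[idx] = old + h
        pos = f(x).copy()
        x[idx] = old - h
        neg = f(x).copy()
        x[idx] = old  # restore the original value
        grad[idx] = np.sum((pos - neg) * dout) / (2 * h)
    return grad

np.random.seed(231)
x = np.random.randn(4, 5)
dout = np.random.randn(4, 5)
p, seed = 0.6, 123

_, mask = dropout_forward(x, p, seed)
dx = dropout_backward(dout, mask)
dx_num = numerical_gradient(lambda v: dropout_forward(v, p, seed)[0], x, dout)

rel_error = np.max(np.abs(dx - dx_num) / np.maximum(1e-8, np.abs(dx) + np.abs(dx_num)))
print("relative error:", rel_error)
```

Because dropout is linear in x for a fixed mask, the analytic and numerical gradients should agree up to floating-point rounding.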

Fully-connected nets with Dropout

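Dropout layers can now be added after the ReLU layers of the arbitrary-depth fully-connected network from last time, replacing the pass placeholders left there earlier. I had in fact already included this in the post before last.

Results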
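The network code itself is not repeated in this post, so the following is only a sketch of how one hidden layer might chain affine, ReLU, and dropout in that order. The helper names `affine_relu_dropout_forward` and `affine_relu_dropout_backward` and the cache layout are assumptions for illustration, not the actual FullyConnectedNet implementation.

```python
import numpy as np

def affine_relu_dropout_forward(x, w, b, dropout_param):
    """Hypothetical helper: affine -> ReLU -> inverted dropout."""
    a = x.reshape(x.shape[0], -1) @ w + b  # affine layer
    r = np.maximum(0, a)                   # ReLU
    mask = None
    out = r
    if dropout_param["mode"] == "train":
        if "seed" in dropout_param:
            np.random.seed(dropout_param["seed"])
        p = dropout_param["p"]
        mask = (np.random.rand(*r.shape) < p) / p
        out = r * mask                     # dropout after the ReLU
    cache = (x, w, a, dropout_param, mask)
    return out, cache

def affine_relu_dropout_backward(dout, cache):
    """Hypothetical helper: backward pass in the reverse order."""
    x, w, a, dropout_param, mask = cache
    dr = dout * mask if dropout_param["mode"] == "train" else dout
    da = dr * (a > 0)                      # ReLU gradient
    x2d = x.reshape(x.shape[0], -1)
    dx = (da @ w.T).reshape(x.shape)
    dw = x2d.T @ da
    db = da.sum(axis=0)
    return dx, dw, db

rng = np.random.default_rng(0)
x = rng.standard_normal((3, 4))
w = rng.standard_normal((4, 5))
b = rng.standard_normal(5)

out_train, cache = affine_relu_dropout_forward(
    x, w, b, {"mode": "train", "p": 0.5, "seed": 0})
out_test, _ = affine_relu_dropout_forward(x, w, b, {"mode": "test", "p": 0.5})
dx, dw, db = affine_relu_dropout_backward(np.ones_like(out_train), cache)
print(out_train.shape, dx.shape, dw.shape, db.shape)
```

In train mode the surviving entries equal the test-mode activations divided by p, and in test mode the layer reduces to a plain affine-ReLU block.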

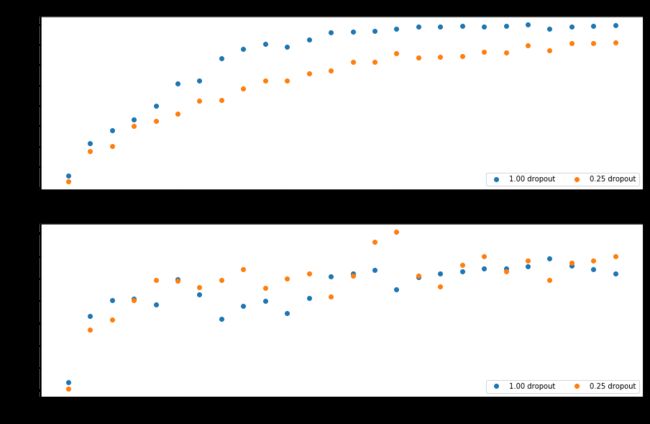

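The detailed results are in Dropout.ipynb, which also contains answers to the inline questions. The assignment trains a two-layer network on 500 samples, once with dropout at a keep probability of 0.25 and once without dropout (p = 1), and compares training and validation accuracy, as plotted in the figure above.

The figure shows that with dropout the training accuracy drops while the validation accuracy rises, narrowing the gap between the two. In other words, dropout acts as a regularizer.

Links

The previous and next posts in this assignment series:

- Previous post: Batch Normalization

- Next post: Convolutional Networks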