Implementing the ResNet34 Network in PyTorch

1. Introduction to ResNet

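ResNet (Residual Neural Network) was proposed by Kaiming He and three other Chinese researchers at Microsoft Research. Its residual structure makes very deep networks much faster to train and also brings a considerable improvement in accuracy. The idea transfers well to other architectures too: it can even be applied directly to Inception networks.

When a traditional convolutional or fully connected network passes information from layer to layer, some of it is lost or degraded along the way. Gradients may also vanish or explode, so very deep networks become untrainable. ResNet alleviates this problem to some extent. It routes the input directly to the output through a bypass, which preserves the information, so the network only has to learn the difference (the residual) between input and output. This simplifies the learning target and makes it easier to reach.

What sets ResNet apart is its many bypasses that connect the input directly to later layers. This structure is called a shortcut or a skip connection.

The residual block is shown in the figure below.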

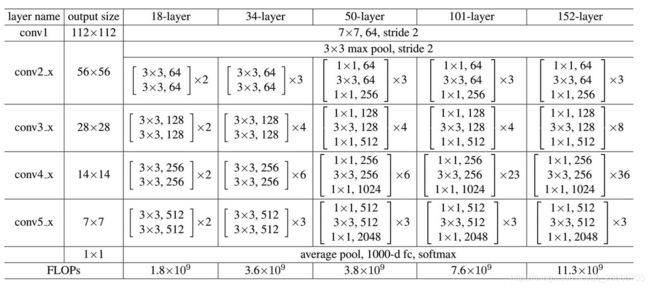
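A minimal sketch of why the bypass helps gradients survive. It assumes a toy branch F(x) = w * x with a hypothetical tiny weight `w`, standing in for a deep stack of layers whose gradient has almost vanished; the names `w`, `x_plain` and `x_res` are illustrative only.

```python
import torch

# Hypothetical toy branch F(x) = w * x with a tiny weight, standing in for
# a stack of layers through which almost no gradient flows.
w = torch.tensor(1e-6)

# Plain layer: y = F(x)
x_plain = torch.tensor(2.0, requires_grad=True)
y_plain = w * x_plain
y_plain.backward()

# Residual block: y = F(x) + x
x_res = torch.tensor(2.0, requires_grad=True)
y_res = w * x_res + x_res
y_res.backward()

grad_plain = x_plain.grad.item()  # dy/dx = w, close to zero
grad_res = x_res.grad.item()      # dy/dx = w + 1, close to one
print(grad_plain, grad_res)
```

The identity path contributes a constant 1 to the derivative, so the gradient reaching `x_res` stays near 1 even though the branch itself passes almost nothing back.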

2. Network Structure

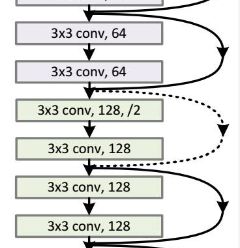

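ResNet is modeled on the VGG19 network and modified by adding residual units through the shortcut mechanism. The main changes are that ResNet downsamples directly with stride-2 convolutions and replaces VGG's large fully connected layers with a global average pooling layer followed by a single fully connected layer. The figure above compares the VGG-19 network, the plain 34-layer network, and the 34-layer ResNet.

The ResNet34 network in the figure has two kinds of connections, drawn as solid and dashed lines. A solid-line connection joins layers with the same number of channels (for example, two 3x3 convolutions with 64 channels each), so the block computes y = F(x) + x.

A dashed-line connection joins layers with different numbers of channels (for example, a 3x3 convolution with 64 channels and one with 128 channels), so the block computes y = F(x) + Wx.

Here W is a convolution that adjusts the channel dimension of x (and, when it uses stride 2, its spatial size) so that the two terms can be added.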
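The two formulas can be checked on tensor shapes. The sketch below is a simplification: a single convolution stands in for the branch F (the real block stacks two convolutions with batch normalization), and the names `f_same`, `f_down` and `w_proj` are hypothetical.

```python
import torch
import torch.nn as nn

x = torch.randn(1, 64, 56, 56)

# Solid-line case: F keeps 64 channels and the spatial size,
# so x can be added as is: y = F(x) + x
f_same = nn.Conv2d(64, 64, kernel_size=3, stride=1, padding=1)
y_identity = f_same(x) + x

# Dashed-line case: F goes to 128 channels and halves the resolution,
# so x is first projected by W, a 1x1 convolution with stride 2: y = F(x) + Wx
f_down = nn.Conv2d(64, 128, kernel_size=3, stride=2, padding=1)
w_proj = nn.Conv2d(64, 128, kernel_size=1, stride=2)
y_projected = f_down(x) + w_proj(x)

print(y_identity.shape)   # 64 channels, 56x56
print(y_projected.shape)  # 128 channels, 28x28
```

Without `w_proj`, the second addition would fail because a 64-channel 56x56 tensor cannot be added to a 128-channel 28x28 one.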

3. Implementing ResNet34 in PyTorch

3.1 Implementing the Residual Block

    import torch
    import torch.nn as nn
    import torchvision
    from torch.nn import functional as F
    import torch.utils.data as Data


    # Residual block: two 3x3 convolutions (left) plus a shortcut (right)
    class ResidualBlock(nn.Module):
        def __init__(self, in_channels, out_channels, stride=1, shortcut=None):
            super(ResidualBlock, self).__init__()
            self.left = nn.Sequential(
                # Only the first convolution downsamples (when stride > 1)
                nn.Conv2d(in_channels, out_channels, kernel_size=3, stride=stride, padding=1),
                nn.BatchNorm2d(num_features=out_channels),  # batch normalization
                nn.ReLU(inplace=True),  # overwrite the input in place to save memory
                # The second convolution always uses stride 1;
                # reusing `stride` here would downsample twice
                nn.Conv2d(out_channels, out_channels, kernel_size=3, stride=1, padding=1),
                nn.BatchNorm2d(num_features=out_channels),
            )
            self.right = shortcut

        def forward(self, x):
            out = self.left(x)
            # Identity shortcut when self.right is None, otherwise a projection of x
            residual = x if self.right is None else self.right(x)
            out += residual
            return F.relu(out)

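As the code shows, ResidualBlock has a left and a right branch. The left branch is an ordinary stack of convolutions. The right branch is the shortcut, which is passed in by the caller: if it is None, x is added unchanged (an identity shortcut); otherwise x first goes through the given module.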

3.2 Implementing ResNet

    # Main module: ResNet34
    # ResNet34 consists of several layers, each made of several residual blocks.
    # ResidualBlock implements one block; _make_layer assembles a layer.
    class ResNet(nn.Module):
        def __init__(self, num_classes=1000):
            super(ResNet, self).__init__()
            # Stem: same as the first two layers of GoogLeNet, plus a BN layer after the convolution.
            # A 7x7 convolution with 64 output channels and stride 2,
            # followed by 3x3 max pooling with stride 2.
            self.pre = nn.Sequential(
                nn.Conv2d(3, 64, 7, 2, 3),
                nn.BatchNorm2d(64),
                nn.ReLU(),
                nn.MaxPool2d(3, 2, 1),
            )
            # Four layers with 3, 4, 6 and 3 residual blocks.
            # Note: the ResNet-34 of the original paper uses 64, 128, 256 and 512
            # channels for these stages; the widths below are this article's own choice.
            self.layer1 = self._make_layer(64, 128, 3)
            self.layer2 = self._make_layer(128, 256, 4, stride=2)
            self.layer3 = self._make_layer(256, 512, 6, stride=2)
            self.layer4 = self._make_layer(512, 512, 3, stride=2)
            self.fc = nn.Linear(512, num_classes)

        # Build one layer out of several residual blocks
        def _make_layer(self, in_channels, out_channels, block_num, stride=1):
            # The first block may change the channel count and the resolution, so its
            # shortcut projects x with a 1x1 convolution to match the left branch
            shortcut = nn.Sequential(
                nn.Conv2d(in_channels, out_channels, kernel_size=1, stride=stride),
                nn.BatchNorm2d(out_channels),
            )
            layers = []
            # Pass the same stride to the block so that both branches downsample together
            layers.append(ResidualBlock(in_channels, out_channels, stride, shortcut))
            # The remaining blocks keep the channel count, width and height unchanged
            for i in range(1, block_num):
                layers.append(ResidualBlock(out_channels, out_channels))
            return nn.Sequential(*layers)

        def forward(self, x):
            x = self.pre(x)
            x = self.layer1(x)
            x = self.layer2(x)
            x = self.layer3(x)
            x = self.layer4(x)
            # For a 224x224 input the feature map is 7x7 at this point. Adaptive pooling
            # reduces any spatial size to 1x1, whereas a fixed avg_pool2d(x, 7) only
            # gives 1x1 for a 7x7 feature map.
            x = F.adaptive_avg_pool2d(x, 1)
            x = x.view(x.size(0), -1)
            out = self.fc(x)
            return out

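The first two layers of ResNet are the same as in GoogLeNet: a 7x7 convolution with 64 output channels and stride 2, followed by a 3x3 max pooling layer with stride 2. The difference is that ResNet adds a batch normalization layer after every convolution.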
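As a quick check of these two layers, the sketch below rebuilds them on their own and traces a 224x224 input through them. The variable names `stem`, `conv_out` and `stem_out` are illustrative, and the 224x224 input size is an assumption taken from the usual ImageNet setting.

```python
import torch
import torch.nn as nn

# The first two layers on their own: 7x7 conv (stride 2) + BN + ReLU + 3x3 max pool (stride 2)
stem = nn.Sequential(
    nn.Conv2d(3, 64, kernel_size=7, stride=2, padding=3),
    nn.BatchNorm2d(64),
    nn.ReLU(),
    nn.MaxPool2d(kernel_size=3, stride=2, padding=1),
)
stem.eval()  # use BN running statistics; only shapes matter here

image = torch.randn(1, 3, 224, 224)
with torch.no_grad():
    conv_out = stem[0](image)  # after the 7x7 convolution: 224 -> 112
    stem_out = stem(image)     # after max pooling: 112 -> 56

print(conv_out.shape, stem_out.shape)
```

Each of the two stride-2 operations halves the resolution, so the residual layers start from a 64-channel 56x56 feature map.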

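The _make_layer method combines several residual blocks into one layer.

When a layer contains several residual blocks, only the first block has a shortcut module. The first ResidualBlock receives the stride, so its left branch may change the width and height. The shortcut on the right uses the same stride and the same number of output channels, so both branches produce tensors of the same shape and can be added directly. The remaining blocks receive no stride, so their left branch keeps the width and height of the input, and the shortcut can be None.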
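The shape argument can be worked through with the standard output-size formula for a convolution without dilation, floor((size + 2 * padding - kernel_size) / stride) + 1. The helper `conv_out_size` below is a hypothetical name introduced for this illustration, and the 56-pixel input is an assumed example.

```python
def conv_out_size(size, kernel_size, stride, padding):
    """Output width/height of a convolution without dilation."""
    return (size + 2 * padding - kernel_size) // stride + 1


# First block of a downsampling layer, for a 56-pixel-wide input:
# left branch = 3x3 conv (stride 2, padding 1) followed by 3x3 conv (stride 1, padding 1)
left_down = conv_out_size(conv_out_size(56, 3, 2, 1), 3, 1, 1)
# right branch = 1x1 conv (stride 2, no padding)
right_down = conv_out_size(56, 1, 2, 0)

# Later blocks: two 3x3 convs with stride 1 and padding 1, no shortcut module
left_same = conv_out_size(conv_out_size(56, 3, 1, 1), 3, 1, 1)

print(left_down, right_down, left_same)
```

Both branches of the first block end at width 28, so they can be added, while the later blocks leave the width at 56 and can add the input unchanged.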

Finally, a global average pooling layer is followed by a fully connected layer that produces the output.
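A small sketch of this last step, assuming the final feature map has 512 channels. It compares a fixed 7x7 average pool with adaptive pooling to a 1x1 output; the tensors `features` and `larger` are random stand-ins, not real network activations.

```python
import torch
import torch.nn as nn
from torch.nn import functional as F

fc = nn.Linear(512, 1000)

# Stand-in for the last feature map of a 224x224 input: 512 channels, 7x7
features = torch.randn(2, 512, 7, 7)
fixed = F.avg_pool2d(features, 7)              # fixed 7x7 window
adaptive = F.adaptive_avg_pool2d(features, 1)  # always pools down to 1x1
same = torch.allclose(fixed, adaptive, atol=1e-5)

# Flatten (N, 512, 1, 1) to (N, 512) and classify
logits = fc(torch.flatten(adaptive, 1))

# A larger feature map (from a larger input image) still pools to 1x1 adaptively
larger = torch.randn(2, 512, 10, 10)
pooled_larger = F.adaptive_avg_pool2d(larger, 1)

print(same, logits.shape, pooled_larger.shape)
```

On a 7x7 feature map the two pooling calls agree. Only the adaptive variant keeps the flattened size at 512 when the input resolution changes, which is what the fully connected layer expects.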

Printing the ResNet34 network structure

    ResNet(
      (pre): Sequential(
        (0): Conv2d(3, 64, kernel_size=(7, 7), stride=(2, 2), padding=(3, 3))
        (1): BatchNorm2d(64, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
        (2): ReLU()
        (3): MaxPool2d(kernel_size=3, stride=2, padding=1, dilation=1, ceil_mode=False)
      )
      (layer1): Sequential(
        (0): ResidualBlock(
          (left): Sequential(
            (0): Conv2d(64, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (2): ReLU(inplace=True)
            (3): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
          (right): Sequential(
            (0): Conv2d(64, 128, kernel_size=(1, 1), stride=(1, 1))
            (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (1): ResidualBlock(
          (left): Sequential(
            (0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (2): ReLU(inplace=True)
            (3): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (2): ResidualBlock(
          (left): Sequential(
            (0): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (2): ReLU(inplace=True)
            (3): Conv2d(128, 128, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(128, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
      )
      (layer2): Sequential(
        (0): ResidualBlock(
          (left): Sequential(
            (0): Conv2d(128, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
            (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (2): ReLU(inplace=True)
            (3): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
          (right): Sequential(
            (0): Conv2d(128, 256, kernel_size=(1, 1), stride=(2, 2))
            (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (1): ResidualBlock(
          (left): Sequential(
            (0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (2): ReLU(inplace=True)
            (3): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (2): ResidualBlock(
          (left): Sequential(
            (0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (2): ReLU(inplace=True)
            (3): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (3): ResidualBlock(
          (left): Sequential(
            (0): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (2): ReLU(inplace=True)
            (3): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(256, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
      )
      (layer3): Sequential(
        (0): ResidualBlock(
          (left): Sequential(
            (0): Conv2d(256, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
            (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (2): ReLU(inplace=True)
            (3): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
          (right): Sequential(
            (0): Conv2d(256, 512, kernel_size=(1, 1), stride=(2, 2))
            (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (1): ResidualBlock(
          (left): Sequential(
            (0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (2): ReLU(inplace=True)
            (3): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (2): ResidualBlock(
          (left): Sequential(
            (0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (2): ReLU(inplace=True)
            (3): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (3): ResidualBlock(
          (left): Sequential(
            (0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (2): ReLU(inplace=True)
            (3): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (4): ResidualBlock(
          (left): Sequential(
            (0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (2): ReLU(inplace=True)
            (3): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (5): ResidualBlock(
          (left): Sequential(
            (0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (2): ReLU(inplace=True)
            (3): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
      )
      (layer4): Sequential(
        (0): ResidualBlock(
          (left): Sequential(
            (0): Conv2d(512, 512, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
            (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (2): ReLU(inplace=True)
            (3): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
          (right): Sequential(
            (0): Conv2d(512, 512, kernel_size=(1, 1), stride=(2, 2))
            (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (1): ResidualBlock(
          (left): Sequential(
            (0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (2): ReLU(inplace=True)
            (3): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
        (2): ResidualBlock(
          (left): Sequential(
            (0): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (1): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
            (2): ReLU(inplace=True)
            (3): Conv2d(512, 512, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
            (4): BatchNorm2d(512, eps=1e-05, momentum=0.1, affine=True, track_running_stats=True)
          )
        )
      )
      (fc): Linear(in_features=512, out_features=1000, bias=True)
    )