Kubernetes cluster: building a highly available deployment (distributed haproxy + keepalived)

server1 172.25.10.1 harbor registry

server2 172.25.10.2 haproxy+keepalived

server3 172.25.10.3 haproxy+keepalived

server4 172.25.10.4 master:server

server5 172.25.10.5 master

server6 172.25.10.6 master

server7 172.25.10.7 worker node

3. Configure the haproxy load balancer

On server2 and server3:

yum install haproxy keepalived -y

haproxy configuration:

cd /etc/haproxy/

vim haproxy.cfg

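Note: when haproxy runs on the same host as a k8s master node, it cannot use port 6443, because the API server already listens on that port.

Here haproxy and the masters run on separate hosts, so this is not a problem.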

listen admin_status # optional: stats page for monitoring in a browser

bind *:80 # listen on port 80

mode http

stats uri /status # stats page URL: /status

frontend main *:6443 # port to listen on

mode tcp

default_backend apiserver

backend apiserver

balance roundrobin

mode tcp

server app1 172.25.10.4:6443 check # the backend master nodes

server app2 172.25.10.5:6443 check

server app3 172.25.10.6:6443 check

systemctl enable --now haproxy.service # start now and enable at boot

[root@server2 haproxy]# netstat -atnpl | grep 6443 # check the listening port

tcp 0 0 0.0.0.0:6443 0.0.0.0:* LISTEN 24278/haproxy
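The backend section needs one `server` line per master. Here is a minimal sketch of generating that section from a list of addresses, so the master list lives in one place. The helper name `render_backend` and the `appN` labels are illustrative assumptions, not part of haproxy.

```shell
#!/bin/bash
# Sketch: print a haproxy TCP backend for a list of API server addresses.
# render_backend is a hypothetical helper; app1..appN are arbitrary server labels.
render_backend() {
    local port="$1"; shift
    local i=1 ip
    echo "backend apiserver"
    echo "    balance roundrobin"
    echo "    mode tcp"
    for ip in "$@"; do
        echo "    server app${i} ${ip}:${port} check"
        i=$((i + 1))
    done
}

# Print the backend for the three masters used in this article.
render_backend 6443 172.25.10.4 172.25.10.5 172.25.10.6
```

After editing the real file, `haproxy -c -f /etc/haproxy/haproxy.cfg` checks the syntax without starting the service.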

vim haproxy.cfg (the edited contents are the listing above)

4. Configure keepalived for high availability

[root@server2 keepalived]# vim keepalived.conf

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost # notification recipient

}

notification_email_from keepalived@localhost # sender address

smtp_server 127.0.0.1 # local loopback address

smtp_connect_timeout 30

router_id LVS_DEVEL

vrrp_skip_check_adv_addr

#vrrp_strict

vrrp_garp_interval 0

vrrp_gna_interval 0

}

vrrp_instance VI_1 {

state MASTER # BACKUP on the standby, server3

interface eth0

virtual_router_id 51

priority 100 # 50 on the standby, server3

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

track_script {

check_haproxy

}

virtual_ipaddress {

172.25.10.100 # VIP

}

}

virtual_server 172.25.10.100 6443 {

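delay_loop 6 # health check interval in seconds

lb_algo rr # round robin

lb_kind DR # direct routing (DR) mode

#persistence_timeout 50 # persistence is disabled so that requests are balanced across the masters

protocol TCP # use TCP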

real_server 172.25.10.4 6443 {

weight 1

TCP_CHECK {

connect_timeout 3 # connection timeout in seconds

retry 3 # number of retries after a failure

delay_before_retry 3 # delay between retries in seconds

}

}

real_server 172.25.10.5 6443 {

weight 1

TCP_CHECK {

connect_timeout 3

retry 3

delay_before_retry 3

}

}

real_server 172.25.10.6 6443 {

weight 1

TCP_CHECK {

connect_timeout 3

retry 3

delay_before_retry 3

}

}

}
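To make the `TCP_CHECK` parameters concrete, the sketch below imitates the same idea in bash: try to open a TCP connection, wait, and retry a limited number of times. It is only a rough approximation of keepalived's health checker. The function name `tcp_check` and its argument order are assumptions made for illustration.

```shell
#!/bin/bash
# Sketch: approximate a TCP health check with a timeout, retries and a delay.
# tcp_check is a hypothetical helper; it is not how keepalived is implemented.
# Arguments: host port [connect_timeout] [retry] [delay_before_retry]
tcp_check() {
    local host="$1" port="$2"
    local connect_timeout="${3:-3}" retry="${4:-3}" delay="${5:-3}"
    local attempt=0
    # One initial attempt plus up to $retry retries.
    while [ "$attempt" -le "$retry" ]; do
        # bash opens a TCP connection when /dev/tcp/HOST/PORT is redirected.
        if timeout "$connect_timeout" bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null; then
            return 0
        fi
        attempt=$((attempt + 1))
        if [ "$attempt" -le "$retry" ]; then
            sleep "$delay"
        fi
    done
    return 1
}

# Example call (commented out because it needs the lab network):
# tcp_check 172.25.10.4 6443 3 3 3 && echo "server4 is up" || echo "server4 is down"
```

A real server that fails this kind of check is taken out of the virtual server's pool until it answers again.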

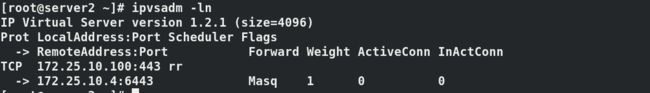

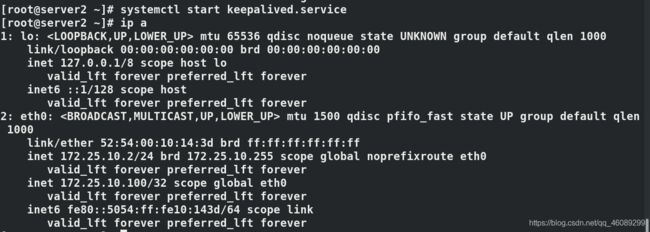

On server2 and server3: systemctl enable --now keepalived.service

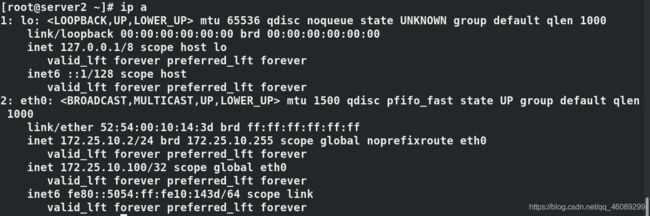

The VIP now appears on server2.

5. Configure the k8s cluster

5.1 Install docker on all nodes (server4/5/6/7)

yum install docker-ce -y

[root@server4 ~]# cd /etc/docker/

[root@server4 docker]# ls

daemon.json reg.westos.org # certificate for the Harbor registry

[root@server4 docker]# cat daemon.json # registry-mirrors points at the Harbor registry

{

"registry-mirrors": ["https://reg.westos.org"],

"exec-opts": ["native.cgroupdriver=systemd"],

"log-driver": "json-file",

"log-opts": {

"max-size": "100m"

},

"storage-driver": "overlay2",

"storage-opts": [

"overlay2.override_kernel_check=true"

]

}

[root@server4 ~]# systemctl enable --now docker

swapoff -a # turn off swap

[root@server4 docker]# vim /etc/fstab

[root@server4 docker]# cat /etc/fstab # the swap entry is commented out so swap stays off after a reboot

#/dev/mapper/rhel-swap swap swap defaults 0 0
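Instead of editing `/etc/fstab` by hand on every node, the swap entry can be commented out with `sed`. This is a minimal sketch, assuming GNU `sed` (for `-i`); the helper name `disable_swap_entries` is hypothetical. The demonstration runs against a scratch file, not the real fstab.

```shell
#!/bin/bash
# Sketch: comment out every active swap entry in an fstab-style file.
# disable_swap_entries is a hypothetical helper; pass the file to edit.
disable_swap_entries() {
    local file="$1"
    # Prefix '#' to non-comment lines whose third field (filesystem type) is "swap".
    sed -i -E '/^[^#[:space:]][^[:space:]]*[[:space:]]+[^[:space:]]+[[:space:]]+swap[[:space:]]/ s/^/#/' "$file"
}

# Demonstration on a scratch copy.
demo="$(mktemp)"
printf '%s\n' \
    '/dev/mapper/rhel-root / xfs defaults 0 0' \
    '/dev/mapper/rhel-swap swap swap defaults 0 0' > "$demo"
disable_swap_entries "$demo"
cat "$demo"
rm -f "$demo"
```

On a real node this would be run as root as `disable_swap_entries /etc/fstab`, after `swapoff -a`.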

[root@server4 ~]# cat /etc/hosts

172.25.10.1 server1 reg.westos.org

[root@server4 ~]# cat /etc/sysctl.d/k8s.conf

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

[root@server4 ~]# sysctl --system
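The two bridge settings can be written by a script so that every node gets the same file. This is a minimal sketch; the helper name `write_k8s_sysctl` is hypothetical, and the demonstration writes to a scratch file rather than `/etc/sysctl.d/k8s.conf`.

```shell
#!/bin/bash
# Sketch: write the bridge netfilter settings to a sysctl drop-in file.
# write_k8s_sysctl is a hypothetical helper; the target path is its argument.
write_k8s_sysctl() {
    local target="$1"
    cat > "$target" <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# Demonstration against a scratch file.
demo="$(mktemp)"
write_k8s_sysctl "$demo"
cat "$demo"
rm -f "$demo"
```

On a real node the target would be `/etc/sysctl.d/k8s.conf`, followed by `sysctl --system`. The `net.bridge.*` keys only exist while the `br_netfilter` kernel module is loaded.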

[root@server4 ~]# systemctl restart docker

5.2 Install k8s

On server4/5/6/7: yum install -y ipvsadm

Load the kernel modules (kube-proxy will use IPVS mode):

ip_vs_rr

ip_vs_wrr

ip_vs_sh

nf_conntrack_ipv4
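The modules listed above can be loaded in a loop. The sketch below defaults to a dry run that only prints the commands; the `DRY_RUN` variable and the function name `load_ipvs_modules` are assumptions made for this example.

```shell
#!/bin/bash
# Sketch: load the IPVS-related kernel modules named in this article.
# DRY_RUN=1 (the default here) prints the commands instead of running them.
DRY_RUN="${DRY_RUN:-1}"

load_ipvs_modules() {
    local mod
    for mod in ip_vs_rr ip_vs_wrr ip_vs_sh nf_conntrack_ipv4; do
        if [ "$DRY_RUN" = "1" ]; then
            echo "modprobe $mod"
        else
            modprobe "$mod"
        fi
    done
}

load_ipvs_modules
```

Run it as root with `DRY_RUN=0` to actually load the modules, then confirm with `lsmod | grep ip_vs`. On kernels 4.19 and later, `nf_conntrack_ipv4` was merged into `nf_conntrack`, so that name would need adjusting there.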

[root@server4 yum.repos.d]# cat k8s.repo

[Kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

gpgcheck=0

Pin the package versions so they match the images in the registry:

yum install -y kubeadm-1.18.3-0 kubelet-1.18.3-0 kubectl-1.18.3-0

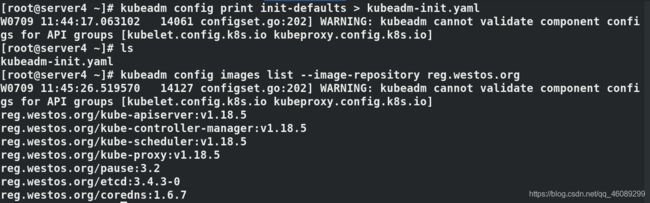

Generate the kubeadm-init.yaml file on server4:

kubeadm config print init-defaults > kubeadm-init.yaml

vim kubeadm-init.yaml # edit as follows

apiVersion: kubeadm.k8s.io/v1beta2

bootstrapTokens:

- groups:

  - system:bootstrappers:kubeadm:default-node-token

  token: abcdef.0123456789abcdef

  ttl: 24h0m0s

  usages:

  - signing

  - authentication

kind: InitConfiguration

localAPIEndpoint:

  advertiseAddress: 172.25.10.4 # IP of the current host

  bindPort: 6443 # API server port

nodeRegistration:

  criSocket: /var/run/dockershim.sock

  name: server4 # hostname

  taints:

  - effect: NoSchedule

    key: node-role.kubernetes.io/master

---

apiServer:

  timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta2

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controlPlaneEndpoint: "172.25.10.100:6443" # VIP and port of the highly available cluster

controllerManager: {}

dns:

  type: CoreDNS

etcd:

  local:

    dataDir: /var/lib/etcd

imageRepository: reg.westos.org/library # registry location

kind: ClusterConfiguration

kubernetesVersion: v1.18.3 # mind the version

networking:

  dnsDomain: cluster.local

  podSubnet: 10.244.0.0/16 # pod network

  serviceSubnet: 10.96.0.0/12 # service network

scheduler: {}

---

# Set the kube-proxy mode to ipvs (iptables is the default when unset); the ipvs kernel modules are loaded on each host

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: ipvs

List the required images:

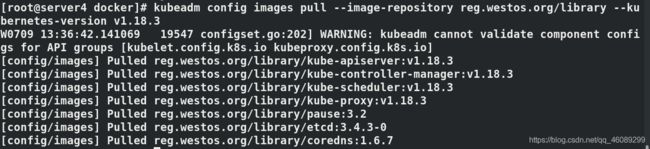

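Pull these images into the local Harbor registry beforehand.

Add the VIP on the master nodes

yum install -y arptables_jf # install on all master nodes

Add the VIP to server4 first. On the other hosts, add the VIP only after they have joined as additional masters and are visible as backends on the high-availability nodes.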

[root@server4 ~]# ip addr add 172.25.10.100/24 dev eth0 # temporary; not kept across reboots

[root@server4 ~]# arptables -A INPUT -d 172.25.10.100 -j DROP # drop incoming ARP requests for 172.25.10.100

[root@server4 ~]# arptables -A OUTPUT -s 172.25.10.100 -j mangle --mangle-ip-s 172.25.10.4 # rewrite the ARP source address 172.25.10.100 to 172.25.10.4

[root@server4 ~]# arptables-save > /etc/sysconfig/arptables # save the rules

[root@server4 ~]# cat /etc/sysconfig/arptables # view the saved rules

*filter

:INPUT ACCEPT

:OUTPUT ACCEPT

:FORWARD ACCEPT

-A INPUT -j DROP -d 172.25.10.100

-A OUTPUT -j mangle -s 172.25.10.100 --mangle-ip-s 172.25.10.4

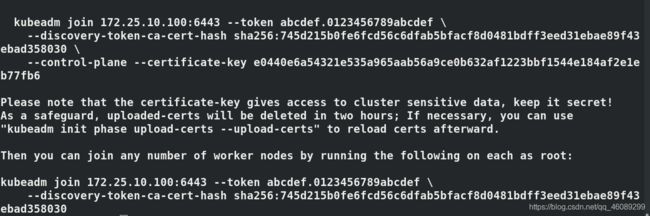

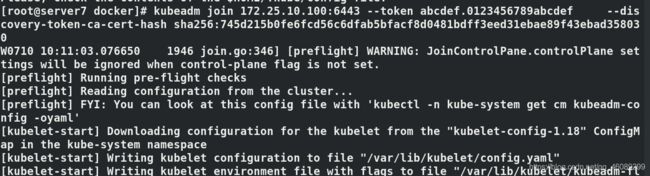

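Initialize the cluster

--upload-certs: uploads the control-plane certificates so that additional master nodes can fetch them when they join

[root@server4 ~]# kubeadm init --config kubeadm-init.yaml --upload-certs

In the output, the first join command adds a master.

The second join command adds a worker.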

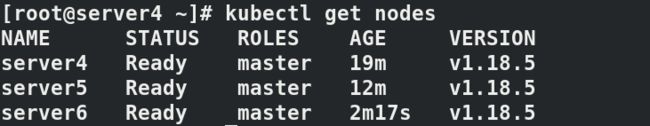
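The two join commands differ only in the control-plane flags. The sketch below assembles both forms to make the difference visible. The endpoint and token come from kubeadm-init.yaml in this article, while `<hash>` and `<certificate-key>` are placeholders: the real values are printed by `kubeadm init`. The helper name `join_cmd` is hypothetical.

```shell
#!/bin/bash
# Sketch: build the two kinds of kubeadm join command.
# CA_HASH and CERT_KEY are placeholders; copy the real values from the kubeadm init output.
ENDPOINT="172.25.10.100:6443"
TOKEN="abcdef.0123456789abcdef"
CA_HASH="sha256:<hash>"
CERT_KEY="<certificate-key>"

join_cmd() {
    local role="$1"
    local cmd="kubeadm join ${ENDPOINT} --token ${TOKEN} --discovery-token-ca-cert-hash ${CA_HASH}"
    # Only additional masters pass the control-plane flags.
    if [ "$role" = "master" ]; then
        cmd="${cmd} --control-plane --certificate-key ${CERT_KEY}"
    fi
    echo "$cmd"
}

join_cmd master
join_cmd worker
```

The function only prints the commands; run the printed line on the node that should join.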

server4 is now a master:

[root@server4 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

server4 Ready master 5m43s v1.18.5

After every initialization, the admin kubeconfig has to be copied again:

cp /etc/kubernetes/admin.conf ~/.kube/config

Repeat the same steps on server5 and server6:

[root@server5 ~]# ip addr add 172.25.10.100/24 dev eth0

[root@server5 ~]# arptables -A INPUT -d 172.25.10.100 -j DROP

[root@server5 ~]# arptables -A OUTPUT -s 172.25.10.100 -j mangle --mangle-ip-s 172.25.10.5

[root@server5 ~]# arptables-save > /etc/sysconfig/arptables

[root@server5 ~]# cat /etc/sysconfig/arptables

*filter

:INPUT ACCEPT

:OUTPUT ACCEPT

:FORWARD ACCEPT

-A INPUT -j DROP -d 172.25.10.100

-A OUTPUT -j mangle -s 172.25.10.100 --mangle-ip-s 172.25.10.5
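Each master repeats the same four commands, and only its own address changes. Here is a minimal sketch that prints the commands for a given host so they can be reviewed before running. The helper name `vip_commands` is an assumption, and the `/24` prefix and `eth0` default follow the commands used in this article.

```shell
#!/bin/bash
# Sketch: print the VIP and arptables commands for one real server.
# vip_commands is a hypothetical helper. Arguments: VIP, the host's own IP, [device].
vip_commands() {
    local vip="$1" rip="$2" dev="${3:-eth0}"
    echo "ip addr add ${vip}/24 dev ${dev}"
    echo "arptables -A INPUT -d ${vip} -j DROP"
    echo "arptables -A OUTPUT -s ${vip} -j mangle --mangle-ip-s ${rip}"
    echo "arptables-save > /etc/sysconfig/arptables"
}

# Commands for server6.
vip_commands 172.25.10.100 172.25.10.6
```

Printing instead of executing keeps the sketch harmless; on the target host, run the printed lines as root.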

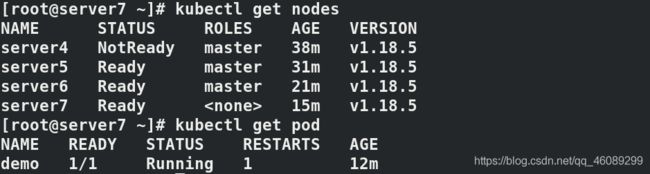

On server4, view the information for all masters:

[root@server4 ~]# kubectl -n kube-system describe cm kubeadm-config

Deploy the network plugin

vim kube-flannel.yml

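image: flannel:v0.12.0-amd64 # this image must exist in the registry

[root@server2 ~]# systemctl stop keepalived.service # stop the service

[root@server4 ~]# poweroff # shut down the current master node

On server3, server4 has been removed from the high-availability backends.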

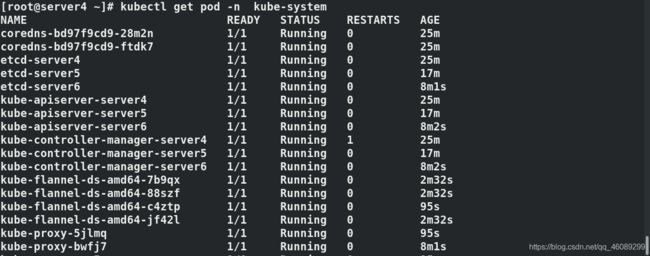

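The pod is still running.

Note that the cluster cannot afford to lose another master at this point; otherwise only a single master would remain. With the local (stacked) etcd configured here, one member out of three cannot form a majority, so at least three masters are needed for high availability.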
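The three-master minimum follows from majority voting in etcd, which kubeadm runs on each master in this setup (the `etcd: local` stanza). A short sketch of the arithmetic; the function names `quorum` and `tolerance` are just labels for this example.

```shell
#!/bin/bash
# Sketch: majority arithmetic for an N-member etcd cluster.
# quorum N: the smallest majority of N members.
quorum() { echo $(( $1 / 2 + 1 )); }
# tolerance N: how many members may fail while a majority still survives.
tolerance() { echo $(( $1 - ($1 / 2 + 1) )); }

for n in 1 2 3 5; do
    echo "masters=$n quorum=$(quorum "$n") tolerated_failures=$(tolerance "$n")"
done
```

Two masters tolerate no failure at all, so three is the smallest count that survives the loss of one.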

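After keepalived is started again on server2, server2 takes the VIP back, because its priority is higher than that of server3.

After server4 boots, the VIP has to be added again and the arptables service started.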

[root@server4 ~]# ip addr add 172.25.10.100/24 dev eth0

[root@server4 ~]# systemctl start arptables.service

[root@server4 ~]# kubectl get pod

NAME READY STATUS RESTARTS AGE

demo 1/1 Running 1 14m

[root@server4 ~]# kubectl get nodes

NAME STATUS ROLES AGE VERSION

server4 Ready master 41m v1.18.5

server5 Ready master 33m v1.18.5

server6 Ready master 23m v1.18.5

server7 Ready <none> 17m v1.18.5

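Supplement

When haproxy + keepalived run on the same host as a k8s master node, haproxy and the API server cannot both use port 6443.

Only the differing configuration is shown below; the k8s deployment and the docker installation are the same as in the distributed setup above.

On a shared host, the arptables rules are not needed.

vim /etc/keepalived/keepalived.conf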

global_defs {

notification_email {

root@localhost

}

notification_email_from keepalived@localhost

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_script check_haproxy {

script "/etc/keepalived/check_haproxy.sh"

interval 5

}

vrrp_instance VI_1 {

state MASTER # BACKUP on the standby

interface eth0

virtual_router_id 51

priority 100 # lower priority (50) on the standby

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

track_script { # the script to track

check_haproxy

}

virtual_ipaddress {

172.25.10.100

}

}


Create the check script:

cd /etc/keepalived/

vim check_haproxy.sh

#!/bin/bash

systemctl status haproxy &> /dev/null

if [ $? != 0 ];then

systemctl stop keepalived

fi

cat /etc/haproxy/haproxy.cfg

#---------------------------------------------------------------------

# Example configuration for a possible web application. See the

# full configuration options online.

#

# http://haproxy.1wt.eu/download/1.4/doc/configuration.txt

#

#---------------------------------------------------------------------

#---------------------------------------------------------------------

# Global settings

#---------------------------------------------------------------------

global

# to have these messages end up in /var/log/haproxy.log you will

# need to:

#

# 1) configure syslog to accept network log events. This is done

# by adding the '-r' option to the SYSLOGD_OPTIONS in

# /etc/sysconfig/syslog

#

# 2) configure local2 events to go to the /var/log/haproxy.log

# file. A line like the following can be added to

# /etc/sysconfig/syslog

#

# local2.* /var/log/haproxy.log

#

log 127.0.0.1 local2

chroot /var/lib/haproxy

pidfile /var/run/haproxy.pid

maxconn 4000

user haproxy

group haproxy

daemon

# turn on stats unix socket

stats socket /var/lib/haproxy/stats

#---------------------------------------------------------------------

# common defaults that all the 'listen' and 'backend' sections will

# use if not designated in their block

#---------------------------------------------------------------------

defaults

mode http

log global

option httplog

option dontlognull

option http-server-close

option forwardfor except 127.0.0.0/8

option redispatch

retries 3

timeout http-request 10s

timeout queue 1m

timeout connect 10s

timeout client 1m

timeout server 1m

timeout http-keep-alive 10s

timeout check 10s

maxconn 3000

#---------------------------------------------------------------------

# main frontend which proxys to the backends

#---------------------------------------------------------------------

frontend main *:8443 # on a shared host, port 6443 is already taken by the master's API server

mode tcp

default_backend apiserver

#---------------------------------------------------------------------

# round robin balancing between the various backends

#---------------------------------------------------------------------

backend apiserver

mode tcp

balance roundrobin

server app1 172.25.10.2:6443 check

server app2 172.25.10.3:6443 check

server app3 172.25.10.4:6443 check

vim kubeadm-init.yaml # edit as follows

apiVersion: kubeadm.k8s.io/v1beta2

bootstrapTokens:

- groups:

  - system:bootstrappers:kubeadm:default-node-token

  token: abcdef.0123456789abcdef

  ttl: 24h0m0s

  usages:

  - signing

  - authentication

kind: InitConfiguration

localAPIEndpoint:

  advertiseAddress: 172.25.10.4 # IP of the current host

  bindPort: 6443 # API server port

nodeRegistration:

  criSocket: /var/run/dockershim.sock

  name: server4 # hostname

  taints:

  - effect: NoSchedule

    key: node-role.kubernetes.io/master

---

apiServer:

  timeoutForControlPlane: 4m0s

apiVersion: kubeadm.k8s.io/v1beta2

certificatesDir: /etc/kubernetes/pki

clusterName: kubernetes

controlPlaneEndpoint: "172.25.10.100:8443" # VIP and port of the highly available cluster

controllerManager: {}

dns:

  type: CoreDNS

etcd:

  local:

    dataDir: /var/lib/etcd

imageRepository: reg.westos.org/library # registry location

kind: ClusterConfiguration

kubernetesVersion: v1.18.3 # mind the version

networking:

  dnsDomain: cluster.local

  podSubnet: 10.244.0.0/16 # pod network

  serviceSubnet: 10.96.0.0/12 # service network

scheduler: {}

---

# Set the kube-proxy mode to ipvs (iptables is the default when unset); the ipvs kernel modules are loaded on each host

apiVersion: kubeproxy.config.k8s.io/v1alpha1

kind: KubeProxyConfiguration

mode: ipvs