Installing and Deploying k8s on CentOS 8

1. Preparation before deployment

(1) Images needed for the installation (already prepared and packaged on CSDN; download: https://download.csdn.net/download/baidu_38432732/12376543)
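If you would rather build the archives yourself, the pull and save commands listed below can be driven from a single image list. This is a minimal bash sketch. It assumes a machine with Docker and access to k8s.gcr.io and quay.io. The `tar_name` naming scheme and the `DRY_RUN` switch are assumptions of this sketch, not the author's method. For example, flannel ends up as `flannel-0.12.0-amd64.tar` instead of `flannel-0.12.0.tar`.

```bash
#!/usr/bin/env bash
# Images for kubeadm v1.18.1 plus flannel, copied from the list in this guide.
IMAGES=(
  k8s.gcr.io/kube-apiserver:v1.18.1
  k8s.gcr.io/kube-controller-manager:v1.18.1
  k8s.gcr.io/kube-scheduler:v1.18.1
  k8s.gcr.io/kube-proxy:v1.18.1
  k8s.gcr.io/pause:3.2
  k8s.gcr.io/etcd:3.4.3-0
  k8s.gcr.io/coredns:1.6.7
  quay.io/coreos/flannel:v0.12.0-amd64
)

# Turn "registry/path/name:tag" into "name-tag.tar", dropping a leading "v" from the tag.
# Assumes every reference carries an explicit tag.
# e.g. k8s.gcr.io/kube-proxy:v1.18.1 -> kube-proxy-1.18.1.tar
tar_name() {
  local ref=$1
  local name=${ref##*/}   # strip registry and path -> "name:tag"
  local tag=${name##*:}   # text after the last ':'
  name=${name%:*}         # text before the last ':'
  tag=${tag#v}            # drop a leading 'v'
  printf '%s-%s.tar\n' "$name" "$tag"
}

# Pull and save every image into DIR (default: current directory).
# With DRY_RUN=1 the docker commands are only printed.
save_all() {
  local dir=${1:-.} img
  for img in "${IMAGES[@]}"; do
    if [[ ${DRY_RUN:-0} == 1 ]]; then
      echo "docker pull $img"
      echo "docker save -o $dir/$(tar_name "$img") $img"
    else
      docker pull "$img"
      docker save -o "$dir/$(tar_name "$img")" "$img"
    fi
  done
}
```

Usage: run `DRY_RUN=1 save_all ./images` to review the commands. Then run `save_all ./images` and copy the directory to the servers. If kubeadm is installed on the online machine, `kubeadm config images list --kubernetes-version v1.18.1` prints the control-plane images that version expects, which is a way to double-check the list.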

On a machine with good connectivity to overseas registries, pull the images in the required versions:

docker pull k8s.gcr.io/kube-apiserver:v1.18.1

docker pull k8s.gcr.io/kube-controller-manager:v1.18.1

docker pull k8s.gcr.io/kube-scheduler:v1.18.1

docker pull k8s.gcr.io/kube-proxy:v1.18.1

docker pull k8s.gcr.io/pause:3.2

docker pull k8s.gcr.io/etcd:3.4.3-0

docker pull k8s.gcr.io/coredns:1.6.7

docker pull quay.io/coreos/flannel:v0.12.0-amd64

Package the pulled images, then move them to the installation servers:

docker save k8s.gcr.io/kube-apiserver:v1.18.1 >apiserver-1.18.1.tar.gz

docker save k8s.gcr.io/etcd:3.4.3-0 > etcd-3.4.3-0.tar

docker save k8s.gcr.io/pause:3.2 > pause-3.2.tar

docker save k8s.gcr.io/kube-controller-manager:v1.18.1 > kube-controller-manager-1.18.1.tar

docker save k8s.gcr.io/kube-scheduler:v1.18.1 > kube-scheduler-1.18.1.tar

docker save k8s.gcr.io/kube-proxy:v1.18.1 > kube-proxy-1.18.1.tar

docker save k8s.gcr.io/coredns:1.6.7 >coredns-1.6.7.tar

docker save quay.io/coreos/flannel:v0.12.0-amd64 > flannel-0.12.0.tar

(2) Prepare three machines

| Hostname | IP address | Services |
|---|---|---|
| master | 192.168.0.155 | docker |
| node1 | 192.168.0.154 | docker |
| node2 | 192.168.0.153 | docker |

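(3) Set the hostname and configure the hosts file

Note: Kubernetes node names must be valid lowercase DNS-1123 names, so kubeadm rejects hostnames that contain underscores, such as k8s_master. The later steps of this guide run on hosts named master, node1 and node2, so use names like those.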

[root@localhost kubenet]# hostnamectl set-hostname k8s_master

[root@localhost kubenet]# hostname

k8s_master

[root@localhost kubenet]# su -

[root@k8s_master kubenet]# tail -3 /etc/hosts

192.168.0.155 k8s_master

192.168.0.154 k8s_node1

192.168.0.153 k8s_node2
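Because an underscore in a hostname only surfaces as an error at kubeadm time, it can help to validate the names while writing /etc/hosts. This is a minimal sketch. The function names and the "skip if already listed" behaviour are assumptions for illustration. The hostnames master, node1 and node2 come from the later sections of this guide.

```bash
# Node names must be lowercase RFC 1123 (DNS-1123 subdomain) names:
# a-z, 0-9, '-' and '.', starting and ending with an alphanumeric, at most 253 chars.
is_valid_node_name() {
  local re='^[a-z0-9]([-a-z0-9]*[a-z0-9])?(\.[a-z0-9]([-a-z0-9]*[a-z0-9])?)*$'
  (( ${#1} >= 1 && ${#1} <= 253 )) && [[ $1 =~ $re ]]
}

# Succeeds if NAME already appears as a hostname on a non-comment line of FILE.
host_entry_exists() {
  awk -v n="$1" '$1 ~ /^#/ {next} {for (i = 2; i <= NF; i++) if ($i == n) found = 1} END {exit !found}' "$2"
}

# Append "IP NAME" to FILE (default /etc/hosts) unless NAME is already listed.
add_host_entry() {
  local ip=$1 name=$2 file=${3:-/etc/hosts}
  if ! is_valid_node_name "$name"; then
    echo "invalid node name: $name" >&2
    return 1
  fi
  host_entry_exists "$name" "$file" && return 0
  printf '%s %s\n' "$ip" "$name" >> "$file"
}
```

Usage, as root on each machine: `add_host_entry 192.168.0.155 master`, `add_host_entry 192.168.0.154 node1` and `add_host_entry 192.168.0.153 node2`. Also run `hostnamectl set-hostname` with the matching name on each host. Running the entries twice does not duplicate them.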

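(4) Turn off swap (swapoff -a turns it off now; commenting out the swap line in /etc/fstab keeps it off after a reboot)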

[root@master ~]# swapoff -a

[root@master ~]# vim /etc/fstab

[root@master ~]# free -h

total used free shared buff/cache available

Mem: 2.7Gi 278Mi 1.6Gi 8.0Mi 812Mi 2.2Gi

Swap: 0B 0B 0B

[root@master ~]# tail -1 /etc/fstab

#/dev/mapper/cl-swap swap swap defaults 0 0
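The vim edit above just comments out the swap entry. The same edit can be done non-interactively. This is a sketch that assumes a standard whitespace-separated fstab where the third field is the filesystem type. It keeps a .bak copy.

```bash
# Comment out every active swap entry in an fstab-style file (default /etc/fstab).
# A swap entry is a non-comment line whose third field is "swap".
# Already-commented lines are left alone, so running it twice is harmless.
disable_swap_in_fstab() {
  local file=${1:-/etc/fstab}
  cp -p "$file" "$file.bak"
  awk '$1 !~ /^#/ && $3 == "swap" {print "#" $0; next} {print}' "$file.bak" > "$file"
}
```

Usage: `swapoff -a && disable_swap_in_fstab`, then check with `free -h` that the Swap row shows 0B.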

(5) Disable the firewall in a test environment (in production, add the corresponding firewall rules instead)
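For the production case mentioned in the heading, you would keep firewalld running and open the ports the cluster uses instead of stopping it. This sketch only prints firewall-cmd commands. The port list is an assumption based on the Kubernetes v1.18 "required ports" documentation (API server 6443, etcd 2379-2380, kubelet 10250, scheduler 10251, controller-manager 10252, NodePort range 30000-32767) plus flannel's VXLAN port 8472/udp. Verify it against the docs for your versions.

```bash
# Ports per role (see the lead-in for where this list comes from).
CONTROL_PLANE_PORTS=(6443/tcp 2379-2380/tcp 10250/tcp 10251/tcp 10252/tcp 8472/udp)
WORKER_PORTS=(10250/tcp 30000-32767/tcp 8472/udp)

# Print the firewall-cmd commands for a role ("master" or "node").
firewall_cmds() {
  local role=$1 port
  local -a ports
  case $role in
    master) ports=("${CONTROL_PLANE_PORTS[@]}") ;;
    node)   ports=("${WORKER_PORTS[@]}") ;;
    *) echo "usage: firewall_cmds master|node" >&2; return 1 ;;
  esac
  for port in "${ports[@]}"; do
    echo "firewall-cmd --permanent --add-port=$port"
  done
  echo "firewall-cmd --reload"
}
```

Usage: review `firewall_cmds master` on the master and `firewall_cmds node` on the workers, then pipe the output to `sh` to apply it.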

[root@master updates]# systemctl stop firewalld

[root@master updates]# systemctl disable firewalld

Removed /etc/systemd/system/multi-user.target.wants/firewalld.service.

Removed /etc/systemd/system/dbus-org.fedoraproject.FirewallD1.service.

(6) Disable SELinux

[root@master ~]# vim /etc/sysconfig/selinux

[root@master ~]# grep "^SELINUX" /etc/sysconfig/selinux

SELINUX=disabled

SELINUXTYPE=targeted

[root@master ~]# setenforce 0
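setenforce 0 switches the running system to permissive mode. The SELINUX= line in the config file takes effect at the next boot. On CentOS, /etc/sysconfig/selinux is a symlink to /etc/selinux/config. A plain `sed -i` on the symlink would replace the link with a regular file, so this sketch passes GNU sed's --follow-symlinks. The function name is hypothetical.

```bash
# Set SELINUX=<mode> in the SELinux config file (default /etc/selinux/config).
# Only the SELINUX= line changes; SELINUXTYPE= does not match the pattern.
set_selinux_mode() {
  local mode=$1 file=${2:-/etc/selinux/config}
  case $mode in enforcing|permissive|disabled) ;; *) echo "bad mode: $mode" >&2; return 1 ;; esac
  sed -i --follow-symlinks "s/^SELINUX=.*/SELINUX=$mode/" "$file"
}
```

Usage: `setenforce 0 && set_selinux_mode disabled`, then confirm with `grep "^SELINUX" /etc/sysconfig/selinux` as above.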

2. On the installation servers, import the required images and install the docker service; for details see https://blog.csdn.net/baidu_38432732/article/details/105315880

3. Enable IP forwarding and iptables bridging (on all three machines)
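The transcript below writes the bridge settings to /etc/sysctl.d/k8s.conf and appends ip_forward to /etc/sysctl.conf. The net.bridge.bridge-nf-call-* keys only exist once the br_netfilter kernel module is loaded. This sketch loads that module and makes it persistent via systemd's /etc/modules-load.d. Putting all three keys into one drop-in file is an assumption of this sketch, not what the author did.

```bash
# Write the sysctl keys from this step into one drop-in file
# (default /etc/sysctl.d/k8s.conf).
write_k8s_sysctl() {
  local file=${1:-/etc/sysctl.d/k8s.conf}
  cat > "$file" <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
net.bridge.bridge-nf-call-ip6tables = 1
net.ipv4.ip_forward = 1
EOF
}

# Full sequence, run as root on every machine.
apply_k8s_sysctl() {
  modprobe br_netfilter                              # the bridge-nf-call keys need this module
  echo br_netfilter > /etc/modules-load.d/k8s.conf   # load it again at boot
  write_k8s_sysctl
  sysctl --system                                    # reload /etc/sysctl.d/*.conf and /etc/sysctl.conf
}
```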

[root@k8s_master kubenet]# cat /etc/sysctl.d/k8s.conf # enable iptables bridging

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

[root@localhost kubenet]# echo net.ipv4.ip_forward = 1 >> /etc/sysctl.conf # enable IP forwarding

[root@localhost kubenet]# sysctl -p /etc/sysctl.d/k8s.conf # reload the settings

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

[root@localhost kubenet]# sysctl -p

net.ipv4.ip_forward = 1

4. Install and deploy k8s on the master node

1) Configure the yum repository for installing kubernetes (on all three machines)

[root@k8s_master kubenet]# cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

2) Check that the repository is usable

[root@k8s_master kubenet]# yum repolist

Repository AppStream is listed more than once in the configuration

Repository extras is listed more than once in the configuration

Repository PowerTools is listed more than once in the configuration

Repository centosplus is listed more than once in the configuration

Kubernetes 2.5 kB/s | 454 B 00:00

Kubernetes 19 kB/s | 1.8 kB 00:00

Importing GPG key 0xA7317B0F:

Userid : "Google Cloud Packages Automatic Signing Key "

Fingerprint: D0BC 747F D8CA F711 7500 D6FA 3746 C208 A731 7B0F

From : https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

Is this ok [y/N]: y

Importing GPG key 0xBA07F4FB:

Userid : "Google Cloud Packages Automatic Signing Key "

Fingerprint: 54A6 47F9 048D 5688 D7DA 2ABE 6A03 0B21 BA07 F4FB

From : https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

Is this ok [y/N]: y

Kubernetes 22 kB/s | 975 B 00:00

Importing GPG key 0x3E1BA8D5:

Userid : "Google Cloud Packages RPM Signing Key "

Fingerprint: 3749 E1BA 95A8 6CE0 5454 6ED2 F09C 394C 3E1B A8D5

From : https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

Is this ok [y/N]: ty

Is this ok [y/N]: y

Kubernetes 166 kB/s | 90 kB 00:00

repo id repo name status

AppStream CentOS-8 - AppStream 5,281

base CentOS-8 - Base - mirrors.aliyun.com 2,231

docker-ce-stable Docker CE Stable - x86_64 63

extras CentOS-8 - Extras - mirrors.aliyun.com 15

kubernetes Kubernetes

3) Build the local metadata cache (on all three machines)

[root@k8s_master ~]# yum makecache

Repository AppStream is listed more than once in the configuration

Repository extras is listed more than once in the configuration

Repository PowerTools is listed more than once in the configuration

Repository centosplus is listed more than once in the configuration

CentOS-8 - AppStream 6.9 kB/s | 4.3 kB 00:00

CentOS-8 - Base - mirrors.aliyun.com 91 kB/s | 3.8 kB 00:00

CentOS-8 - Extras - mirrors.aliyun.com 28 kB/s | 1.5 kB 00:00

Docker CE Stable - x86_64 3.9 kB/s | 3.5 kB 00:00

Kubernetes 4.1 kB/s | 454 B 00:00

Metadata cache created.

5. Install the services on all nodes

1) Install the following services on the master

[root@k8s_master ~]# yum -y install kubeadm kubelet kubectl

2) Install on node1 and node2

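[root@k8s_node1 ~]# yum -y install kubeadm kubelet

To install a specific version, name it explicitly, e.g. yum -y install kubeadm-1.18.1 kubelet-1.18.1. Without a version, yum installs the newest package in the repository. That is why kubectl get node later reports the kubelets as v1.18.2.

3) Enable kubelet at boot on all three hosts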

[root@k8s_master ~]# systemctl enable kubelet

Created symlink /etc/systemd/system/multi-user.target.wants/kubelet.service → /usr/lib/systemd/system/kubelet.service.

6. On the master, import the images prepared earlier

[root@k8s_master kubenet]# ls

apiserver-1.18.1.tar.gz etcd-3.4.3-0.tar.gz kube-proxy-1.18.1.tar.gz pause-3.2.tar.gz

coredns-1.6.7.tar.gz kube-controller-manager-1.18.1.tar.gz kube-scheduler-1.18.1.tar.gz v2.0.0-rc7.tar.gz

[root@k8s_master kubenet]# docker load < apiserver-1.18.1.tar.gz

58d9c9d2174e: Loading layer [==================================================>] 120.7MB/120.7MB

Loaded image: k8s.gcr.io/kube-apiserver:v1.18.1

[root@k8s_master kubenet]# docker load < etcd-3.4.3-0.tar.gz

ce04b89b7def: Loading layer [==================================================>] 224.9MB/224.9MB

1b2bc745b46f: Loading layer [==================================================>] 21.22MB/21.22MB

Loaded image: k8s.gcr.io/etcd:3.4.3-0

[root@k8s_master kubenet]# docker load < kube-proxy-1.18.1.tar.gz

2dc2f2423ad1: Loading layer [==================================================>] 5.168MB/5.168MB

ad9fb2411669: Loading layer [==================================================>] 4.608kB/4.608kB

597151d24476: Loading layer [==================================================>] 8.192kB/8.192kB

0d8d54147a3a: Loading layer [==================================================>] 8.704kB/8.704kB

310c81aa788d: Loading layer [==================================================>] 38.38MB/38.38MB

Loaded image: k8s.gcr.io/kube-proxy:v1.18.1

[root@k8s_master kubenet]# docker load < pause-3.2.tar.gz

Loaded image: k8s.gcr.io/pause:3.2

[root@k8s_master kubenet]# docker load < coredns-1.6.7.tar.gz

c965b38a6629: Loading layer [==================================================>] 43.58MB/43.58MB

Loaded image: k8s.gcr.io/coredns:1.6.7

[root@k8s_master kubenet]# docker load < kube-controller-manager-1.18.1.tar.gz

Loaded image: k8s.gcr.io/kube-controller-manager:v1.18.1

[root@k8s_master kubenet]# docker load < kube-scheduler-1.18.1.tar.gz

Loaded image: k8s.gcr.io/kube-scheduler:v1.18.1

[root@k8s_master kubenet]# docker load

Check the images that were just imported:

[root@k8s_master kubenet]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

k8s.gcr.io/kube-proxy v1.18.1 4e68534e24f6 13 days ago 117MB

k8s.gcr.io/kube-controller-manager v1.18.1 d1ccdd18e6ed 13 days ago 162MB

k8s.gcr.io/kube-apiserver v1.18.1 a595af0107f9 13 days ago 173MB

k8s.gcr.io/kube-scheduler v1.18.1 6c9320041a7b 13 days ago 95.3MB

k8s.gcr.io/pause 3.2 80d28bedfe5d 2 months ago 683kB

k8s.gcr.io/coredns 1.6.7 67da37a9a360 2 months ago 43.8MB

k8s.gcr.io/etcd 3.4.3-0 303ce5db0e90 5 months ago 288MB

quay.io/coreos/flannel v0.12.0-amd64 4e9f801d2217 5 weeks ago 52.8MB
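Loading the archives one by one and then reading docker images by eye works, but both steps can be scripted. This is a sketch that assumes the archives sit in one directory and uses the image list from the output above. The function names are hypothetical. docker load also accepts gzip-compressed archives, so .tar and .tar.gz files are handled the same way.

```bash
# Images expected on the master, copied from the docker images output above.
REQUIRED_IMAGES=(
  k8s.gcr.io/kube-apiserver:v1.18.1
  k8s.gcr.io/kube-controller-manager:v1.18.1
  k8s.gcr.io/kube-scheduler:v1.18.1
  k8s.gcr.io/kube-proxy:v1.18.1
  k8s.gcr.io/pause:3.2
  k8s.gcr.io/etcd:3.4.3-0
  k8s.gcr.io/coredns:1.6.7
  quay.io/coreos/flannel:v0.12.0-amd64
)

# Load every *.tar / *.tar.gz archive in DIR (default: current directory).
load_all() {
  local dir=${1:-.} f
  for f in "$dir"/*.tar "$dir"/*.tar.gz; do
    [[ -e $f ]] || continue   # the glob matched nothing
    docker load -i "$f"
  done
}

# Read "repository:tag" lines on stdin and print each required image that is absent.
# Returns 1 if anything is missing.
missing_images() {
  local have img rc=0
  have=$(cat)
  for img in "${REQUIRED_IMAGES[@]}"; do
    if ! grep -qxF -- "$img" <<< "$have"; then
      echo "$img"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `load_all . && docker images --format '{{.Repository}}:{{.Tag}}' | missing_images && echo "all images present"`. On the worker nodes the author only loaded kube-proxy, pause, coredns and flannel, so trim REQUIRED_IMAGES accordingly there.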

7. Initialize the kubernetes cluster

Run the following on the master. 10.244.0.0/16 matches the "Network" in flannel's default configuration. --ignore-preflight-errors=Swap only suppresses the swap check, which is redundant once swap is off as in (4).

[root@master ~]# kubeadm init --kubernetes-version=v1.18.1 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12 --ignore-preflight-errors=Swap

W0422 18:41:24.971483 14853 configset.go:202] WARNING: kubeadm cannot validate component configs for API groups [kubelet.config.k8s.io kubeproxy.config.k8s.io]

[init] Using Kubernetes version: v1.18.1

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING FileExisting-tc]: tc not found in system path

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Starting the kubelet

[certs] Using certificateDir folder "/etc/kubernetes/pki"

[certs] Using existing ca certificate authority

[certs] Using existing apiserver certificate and key on disk

[certs] Using existing apiserver-kubelet-client certificate and key on disk

[certs] Using existing front-proxy-ca certificate authority

[certs] Using existing front-proxy-client certificate and key on disk

[certs] Using existing etcd/ca certificate authority

[certs] Using existing etcd/server certificate and key on disk

[certs] Using existing etcd/peer certificate and key on disk

[certs] Using existing etcd/healthcheck-client certificate and key on disk

[certs] Using existing apiserver-etcd-client certificate and key on disk

[certs] Using the existing "sa" key

[kubeconfig] Using kubeconfig folder "/etc/kubernetes"

[kubeconfig] Using existing kubeconfig file: "/etc/kubernetes/admin.conf"

[kubeconfig] Using existing kubeconfig file: "/etc/kubernetes/kubelet.conf"

[kubeconfig] Using existing kubeconfig file: "/etc/kubernetes/controller-manager.conf"

[kubeconfig] Using existing kubeconfig file: "/etc/kubernetes/scheduler.conf"

[control-plane] Using manifest folder "/etc/kubernetes/manifests"

[control-plane] Creating static Pod manifest for "kube-apiserver"

[control-plane] Creating static Pod manifest for "kube-controller-manager"

W0422 18:41:26.045499 14853 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[control-plane] Creating static Pod manifest for "kube-scheduler"

W0422 18:41:26.046076 14853 manifests.go:225] the default kube-apiserver authorization-mode is "Node,RBAC"; using "Node,RBAC"

[etcd] Creating static Pod manifest for local etcd in "/etc/kubernetes/manifests"

[wait-control-plane] Waiting for the kubelet to boot up the control plane as static Pods from directory "/etc/kubernetes/manifests". This can take up to 4m0s

[apiclient] All control plane components are healthy after 12.003005 seconds

[upload-config] Storing the configuration used in ConfigMap "kubeadm-config" in the "kube-system" Namespace

[kubelet] Creating a ConfigMap "kubelet-config-1.18" in namespace kube-system with the configuration for the kubelets in the cluster

[upload-certs] Skipping phase. Please see --upload-certs

[mark-control-plane] Marking the node master as control-plane by adding the label "node-role.kubernetes.io/master=''"

[mark-control-plane] Marking the node master as control-plane by adding the taints [node-role.kubernetes.io/master:NoSchedule]

[bootstrap-token] Using token: ywhj33.7n34chv9k2ouitvk

[bootstrap-token] Configuring bootstrap tokens, cluster-info ConfigMap, RBAC Roles

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to get nodes

[bootstrap-token] configured RBAC rules to allow Node Bootstrap tokens to post CSRs in order for nodes to get long term certificate credentials

[bootstrap-token] configured RBAC rules to allow the csrapprover controller automatically approve CSRs from a Node Bootstrap Token

[bootstrap-token] configured RBAC rules to allow certificate rotation for all node client certificates in the cluster

[bootstrap-token] Creating the "cluster-info" ConfigMap in the "kube-public" namespace

[kubelet-finalize] Updating "/etc/kubernetes/kubelet.conf" to point to a rotatable kubelet client certificate and key

[addons] Applied essential addon: CoreDNS

[addons] Applied essential addon: kube-proxy

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 192.168.0.155:6443 --token nc5pfp.wrp5mutpwb4blufb --discovery-token-ca-cert-hash sha256:a675056664f0d65bda6ef36d48243b372b5d59e259aa599d34d3f9ef2077f3c2
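The init output above shows two preflight warnings. The IsDockerSystemdCheck warning means Docker uses the cgroupfs cgroup driver, while systemd is recommended (see https://kubernetes.io/docs/setup/cri/). The tc warning means the tc binary is missing. On CentOS 8, tc is packaged separately as iproute-tc.

This minimal sketch switches Docker to the systemd driver through /etc/docker/daemon.json. It assumes no daemon.json exists yet and refuses to overwrite one. It is best run on every machine before kubeadm init/join. On an already initialised node the kubelet's cgroup driver must match as well, and this sketch does not handle that.

```bash
# Write a daemon.json that selects the systemd cgroup driver
# (default path /etc/docker/daemon.json). An existing file is not touched,
# so other settings such as registry mirrors are not clobbered.
write_docker_daemon_json() {
  local file=${1:-/etc/docker/daemon.json}
  if [[ -e $file ]]; then
    echo "$file exists; add \"exec-opts\": [\"native.cgroupdriver=systemd\"] by hand" >&2
    return 1
  fi
  mkdir -p "$(dirname "$file")"
  cat > "$file" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}
```

Usage: `write_docker_daemon_json && systemctl restart docker && docker info | grep -i 'cgroup driver'`. The last command should report systemd.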

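1) Configure kubectl access

For the root user (this uses /etc/kubernetes/admin.conf, which kubeadm init creates on the master):

[root@node1 ~]# echo "export KUBECONFIG=/etc/kubernetes/admin.conf" >> /etc/profile

[root@node1 ~]# source /etc/profile

For a regular user: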

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

2) Import the prepared images on the other two nodes

[root@node1 ~]# docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

k8s.gcr.io/kube-proxy v1.18.1 4e68534e24f6 13 days ago 117MB

quay.io/coreos/flannel v0.12.0-amd64 4e9f801d2217 5 weeks ago 52.8MB

k8s.gcr.io/pause 3.2 80d28bedfe5d 2 months ago 683kB

k8s.gcr.io/coredns 1.6.7 67da37a9a360 2 months ago 43.8MB

3) Run the join command printed by kubeadm init on each of the other node hosts:

kubeadm join 192.168.0.155:6443 --token nc5pfp.wrp5mutpwb4blufb --discovery-token-ca-cert-hash sha256:a675056664f0d65bda6ef36d48243b372b5d59e259aa599d34d3f9ef2077f3c2

Output on node1:

[root@node1 ~]# kubeadm join 192.168.0.155:6443 --token nc5pfp.wrp5mutpwb4blufb --discovery-token-ca-cert-hash sha256:a675056664f0d65bda6ef36d48243b372b5d59e259aa599d34d3f9ef2077f3c2

W0422 19:08:45.598062 4205 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING FileExisting-tc]: tc not found in system path

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.18" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

Output on node2:

[root@node2 pods]# kubeadm join 192.168.0.155:6443 --token nc5pfp.wrp5mutpwb4blufb --discovery-token-ca-cert-hash sha256:a675056664f0d65bda6ef36d48243b372b5d59e259aa599d34d3f9ef2077f3c2

W0422 19:10:55.127994 17734 join.go:346] [preflight] WARNING: JoinControlPane.controlPlane settings will be ignored when control-plane flag is not set.

[preflight] Running pre-flight checks

[WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd". Please follow the guide at https://kubernetes.io/docs/setup/cri/

[WARNING FileExisting-tc]: tc not found in system path

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.18" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
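The bootstrap token in the join command expires after 24 hours by default, so a node added later needs a fresh one. On the master, kubeadm token create --print-join-command prints a complete new join command. The --discovery-token-ca-cert-hash value is the SHA-256 of the cluster CA's public key.

The helper below wraps the openssl pipeline from the kubeadm join documentation. It assumes the default CA path /etc/kubernetes/pki/ca.crt and an RSA CA key, which is kubeadm's default. The function name is hypothetical.

```bash
# Print the hex SHA-256 of the CA certificate's public key (DER SubjectPublicKeyInfo),
# i.e. the value that follows "sha256:" in --discovery-token-ca-cert-hash.
ca_cert_hash() {
  local ca=${1:-/etc/kubernetes/pki/ca.crt}
  openssl x509 -pubkey -noout -in "$ca" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex \
    | sed 's/^.* //'   # keep only the digest after "(stdin)= "
}
```

Usage, with a hypothetical TOKEN variable holding a valid token: `kubeadm join 192.168.0.155:6443 --token "$TOKEN" --discovery-token-ca-cert-hash "sha256:$(ca_cert_hash)"`.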

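4) Check the node status (a node stays NotReady until a pod network add-on is installed, which is done in step 8)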

[root@master updates]# kubectl get node

NAME STATUS ROLES AGE VERSION

master NotReady master 5m2s v1.18.2

5) Check the health of the control-plane components

[root@master ~]# kubectl get cs

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-0 Healthy {"health":"true"}

8. Add the flannel network add-on

[root@master ~]# wget https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

--2020-04-22 17:57:12-- https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 151.101.108.133

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|151.101.108.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 14366 (14K) [text/plain]

Saving to: 'kube-flannel.yml'

kube-flannel.yml 100%[============================================================================>] 14.03K 25.0KB/s in 0.6s

2020-04-22 17:57:15 (25.0 KB/s) - 'kube-flannel.yml' saved [14366/14366]

[root@master ~]# ls

kube-flannel.yml

[root@master ~]# kubectl apply -f kube-flannel.yml

podsecuritypolicy.policy/psp.flannel.unprivileged created

clusterrole.rbac.authorization.k8s.io/flannel created

clusterrolebinding.rbac.authorization.k8s.io/flannel created

serviceaccount/flannel created

configmap/kube-flannel-cfg created

daemonset.apps/kube-flannel-ds-amd64 created

daemonset.apps/kube-flannel-ds-arm64 created

daemonset.apps/kube-flannel-ds-arm created

daemonset.apps/kube-flannel-ds-ppc64le created

daemonset.apps/kube-flannel-ds-s390x created
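flannel's "Network" in net-conf.json must equal the --pod-network-cidr passed to kubeadm init, which is 10.244.0.0/16 in this guide. The wget URL points at the master branch, so a later download may differ from the v0.12.0 manifest shown below. A quick check before kubectl apply might look like this. It assumes the manifest keeps the "Network": "..." line format shown below, and the function name is hypothetical.

```bash
# Print the "Network" value found in a flannel manifest and succeed only if it
# equals the expected pod CIDR.
check_flannel_network() {
  local manifest=$1 cidr=$2 net
  net=$(sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' "$manifest" | head -n 1)
  echo "$net"
  [[ $net == "$cidr" ]]
}
```

Usage: `check_flannel_network kube-flannel.yml 10.244.0.0/16 && kubectl apply -f kube-flannel.yml`.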

The full content of kube-flannel.yml (cat kube-flannel.yml) is as follows:

---
apiVersion: policy/v1beta1
kind: PodSecurityPolicy
metadata:
  name: psp.flannel.unprivileged
  annotations:
    seccomp.security.alpha.kubernetes.io/allowedProfileNames: docker/default
    seccomp.security.alpha.kubernetes.io/defaultProfileName: docker/default
    apparmor.security.beta.kubernetes.io/allowedProfileNames: runtime/default
    apparmor.security.beta.kubernetes.io/defaultProfileName: runtime/default
spec:
  privileged: false
  volumes:
    - configMap
    - secret
    - emptyDir
    - hostPath
  allowedHostPaths:
    - pathPrefix: "/etc/cni/net.d"
    - pathPrefix: "/etc/kube-flannel"
    - pathPrefix: "/run/flannel"
  readOnlyRootFilesystem: false
  # Users and groups
  runAsUser:
    rule: RunAsAny
  supplementalGroups:
    rule: RunAsAny
  fsGroup:
    rule: RunAsAny
  # Privilege Escalation
  allowPrivilegeEscalation: false
  defaultAllowPrivilegeEscalation: false
  # Capabilities
  allowedCapabilities: ['NET_ADMIN']
  defaultAddCapabilities: []
  requiredDropCapabilities: []
  # Host namespaces
  hostPID: false
  hostIPC: false
  hostNetwork: true
  hostPorts:
  - min: 0
    max: 65535
  # SELinux
  seLinux:
    # SELinux is unused in CaaSP
    rule: 'RunAsAny'

---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
rules:
  - apiGroups: ['extensions']
    resources: ['podsecuritypolicies']
    verbs: ['use']
    resourceNames: ['psp.flannel.unprivileged']
  - apiGroups:
      - ""
    resources:
      - pods
    verbs:
      - get
  - apiGroups:
      - ""
    resources:
      - nodes
    verbs:
      - list
      - watch
  - apiGroups:
      - ""
    resources:
      - nodes/status
    verbs:
      - patch

---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1beta1
metadata:
  name: flannel
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: flannel
subjects:
- kind: ServiceAccount
  name: flannel
  namespace: kube-system
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: flannel
  namespace: kube-system

---
kind: ConfigMap
apiVersion: v1
metadata:
  name: kube-flannel-cfg
  namespace: kube-system
  labels:
    tier: node
    app: flannel
data:
  cni-conf.json: |
    {
      "name": "cbr0",
      "cniVersion": "0.3.1",
      "plugins": [
        {
          "type": "flannel",
          "delegate": {
            "hairpinMode": true,
            "isDefaultGateway": true
          }
        },
        {
          "type": "portmap",
          "capabilities": {
            "portMappings": true
          }
        }
      ]
    }
  net-conf.json: |
    {
      "Network": "10.244.0.0/16",
      "Backend": {
        "Type": "vxlan"
      }
    }

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-amd64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: kubernetes.io/arch
                    operator: In
                    values:
                      - amd64
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.12.0-amd64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.12.0-amd64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm64
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: kubernetes.io/arch
                    operator: In
                    values:
                      - arm64
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.12.0-arm64
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.12.0-arm64
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-arm
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: kubernetes.io/arch
                    operator: In
                    values:
                      - arm
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.12.0-arm
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.12.0-arm
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: kube-flannel-ds-ppc64le
  namespace: kube-system
  labels:
    tier: node
    app: flannel
spec:
  selector:
    matchLabels:
      app: flannel
  template:
    metadata:
      labels:
        tier: node
        app: flannel
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:
              - matchExpressions:
                  - key: kubernetes.io/os
                    operator: In
                    values:
                      - linux
                  - key: kubernetes.io/arch
                    operator: In
                    values:
                      - ppc64le
      hostNetwork: true
      tolerations:
      - operator: Exists
        effect: NoSchedule
      serviceAccountName: flannel
      initContainers:
      - name: install-cni
        image: quay.io/coreos/flannel:v0.12.0-ppc64le
        command:
        - cp
        args:
        - -f
        - /etc/kube-flannel/cni-conf.json
        - /etc/cni/net.d/10-flannel.conflist
        volumeMounts:
        - name: cni
          mountPath: /etc/cni/net.d
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      containers:
      - name: kube-flannel
        image: quay.io/coreos/flannel:v0.12.0-ppc64le
        command:
        - /opt/bin/flanneld
        args:
        - --ip-masq
        - --kube-subnet-mgr
        resources:
          requests:
            cpu: "100m"
            memory: "50Mi"
          limits:
            cpu: "100m"
            memory: "50Mi"
        securityContext:
          privileged: false
          capabilities:
            add: ["NET_ADMIN"]
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        volumeMounts:
        - name: run
          mountPath: /run/flannel
        - name: flannel-cfg
          mountPath: /etc/kube-flannel/
      volumes:
        - name: run
          hostPath:
            path: /run/flannel
        - name: cni
          hostPath:
            path: /etc/cni/net.d
        - name: flannel-cfg
          configMap:
            name: kube-flannel-cfg

---

apiVersion: apps/v1

kind: DaemonSet

metadata:

name: kube-flannel-ds-s390x

namespace: kube-system

labels:

tier: node

app: flannel

spec:

selector:

matchLabels:

app: flannel

template:

metadata:

labels:

tier: node

app: flannel

spec:

affinity:

nodeAffinity:

requiredDuringSchedulingIgnoredDuringExecution:

nodeSelectorTerms:

- matchExpressions:

- key: kubernetes.io/os

operator: In

values:

- linux

- key: kubernetes.io/arch

operator: In

values:

- s390x

hostNetwork: true

tolerations:

- operator: Exists

effect: NoSchedule

serviceAccountName: flannel

initContainers:

- name: install-cni

image: quay.io/coreos/flannel:v0.12.0-s390x

command:

- cp

args:

- -f

- /etc/kube-flannel/cni-conf.json

- /etc/cni/net.d/10-flannel.conflist

volumeMounts:

- name: cni

mountPath: /etc/cni/net.d

- name: flannel-cfg

mountPath: /etc/kube-flannel/

containers:

- name: kube-flannel

image: quay.io/coreos/flannel:v0.12.0-s390x

command:

- /opt/bin/flanneld

args:

- --ip-masq

- --kube-subnet-mgr

resources:

requests:

cpu: "100m"

memory: "50Mi"

limits:

cpu: "100m"

memory: "50Mi"

securityContext:

privileged: false

capabilities:

add: ["NET_ADMIN"]

env:

- name: POD_NAME

valueFrom:

fieldRef:

fieldPath: metadata.name

- name: POD_NAMESPACE

valueFrom:

fieldRef:

fieldPath: metadata.namespace

volumeMounts:

- name: run

mountPath: /run/flannel

- name: flannel-cfg

mountPath: /etc/kube-flannel/

volumes:

- name: run

hostPath:

path: /run/flannel

- name: cni

hostPath:

path: /etc/cni/net.d

- name: flannel-cfg

configMap:

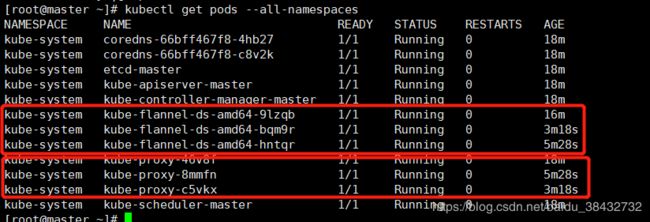

name: kube-flannel-cfg查看对应的pod是否启动并运行(结果显示对应的三个kube-proxy和flannel网络的pod在运行)
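The same check can be done from the shell instead of reading the pod list by eye. This filter assumes the default six-column output of kubectl get pods -A --no-headers (NAMESPACE NAME READY STATUS RESTARTS AGE). It treats a pod as fine when it is Completed, or Running with all containers ready. The function name is hypothetical.

```bash
# Read `kubectl get pods -A --no-headers` on stdin and print every pod that is
# not Completed and not Running with all containers ready (e.g. 0/1 Running).
# Returns 1 if any such pod was printed.
not_running_pods() {
  awk '
    {
      split($3, r, "/")   # READY column, e.g. "1/1"
      ok = ($4 == "Completed") || ($4 == "Running" && r[1] == r[2])
      if (!ok) { print $1 "/" $2 " " $3 " " $4; bad = 1 }
    }
    END { exit bad }
  '
}
```

Usage: `kubectl get pods -A --no-headers | not_running_pods && echo "all pods ready"`.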

Check the status of each node again:

[root@master ~]# kubectl get node

NAME STATUS ROLES AGE VERSION

master Ready master 16m v1.18.2

node1 Ready <none> 3m13s v1.18.2

node2 Ready <none> 63s v1.18.2

Below is an annotated reference of the fields in a Kubernetes Pod YAML configuration file:

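apiVersion: v1                # Required: API version, e.g. v1
kind: Pod                     # Required: Pod
metadata:                     # Required: metadata
  name: string                # Required: Pod name
  namespace: string           # Namespace of the Pod (defaults to "default" if omitted)
  labels:                     # Custom labels, as a key: value map
    key: value
  annotations:                # Custom annotations, as a key: value map
    key: value
spec:                         # Required: detailed definition of the Pod's containers
  containers:                 # Required: list of containers in the Pod
  - name: string              # Required: container name
    image: string             # Required: container image name
    imagePullPolicy: [Always | Never | IfNotPresent]  # Image pull policy. Always: try to pull the image every time; IfNotPresent: use the local image if present and pull only if missing; Never: use only a local image. Default: Always for the :latest tag (or no tag), otherwise IfNotPresent
    command: [string]         # Container startup command list; if omitted, the image's own entrypoint is used
    args: [string]            # Argument list for the startup command
    workingDir: string        # Working directory of the container
    volumeMounts:             # Volumes mounted into the container
    - name: string            # Name of a shared volume, which must be defined in the pod's volumes[] section
      mountPath: string       # Absolute mount path inside the container (should be under 512 characters)
      readOnly: boolean       # Whether the mount is read-only; default is read-write
    ports:                    # List of ports to expose
    - name: string            # Port name
      containerPort: int      # Port the container listens on
      hostPort: int           # Port to listen on on the host; optional and unset by default (with hostNetwork: true it defaults to containerPort)
                              # When hostPort is set, a second replica of the container cannot start on the same host
      protocol: string        # Port protocol, TCP or UDP; default TCP
    env:                      # Environment variables to set before the container starts
    - name: string            # Variable name
      value: string           # Variable value
    resources:                # Resource limits and requests
      limits:                 # Resource limits
        cpu: string           # CPU limit in cores (e.g. "500m" = 0.5 core), enforced through the CFS quota (like docker run --cpu-quota)
        memory: string        # Memory limit, in units such as Mi/Gi; used like docker run --memory
      requests:               # Resource requests
        cpu: string           # CPU request: the amount reserved at scheduling time, mapped to the cpu-shares weight (docker run --cpu-shares)
        memory: string        # Memory request: the amount reserved at scheduling time
    livenessProbe:            # Container health check; after several failed probes the container is restarted. Methods: exec, httpGet, tcpSocket; set only one per container
      exec:                   # Check by running a command inside the container
        command: [string]     # Command or script to run
      httpGet:                # Check with an HTTP GET; path and port are required
        path: string
        port: number
        host: string
        scheme: string
        httpHeaders:
        - name: string
          value: string
      tcpSocket:              # Check by opening a TCP socket
        port: number
      initialDelaySeconds: 0  # Seconds after the container starts before the first probe
      timeoutSeconds: 0       # Seconds to wait for a probe response; default 1
      periodSeconds: 0        # Probe interval in seconds; default 10
      successThreshold: 0
      failureThreshold: 0
    securityContext:
      privileged: false
  restartPolicy: [Always | Never | OnFailure]  # Pod restart policy. Always (default): kubelet restarts the container however it terminates; OnFailure: restart only on a non-zero exit code; Never: never restart
  nodeSelector: object        # Schedule the Pod only onto nodes carrying these labels, given as key: value
  imagePullSecrets:           # Names of the secrets used when pulling images
  - name: string
  hostNetwork: false          # Whether to use the host network; default false, true means the Pod uses the host's network
  volumes:                    # Shared volumes defined for this pod
  - name: string              # Volume name (there are many volume types)
    emptyDir: {}              # emptyDir volume: a temporary, initially empty directory with the same lifetime as the Pod
    hostPath:                 # hostPath volume: mounts a directory of the host the Pod runs on
      path: string            # Directory on the host to mount into the container
    secret:                   # secret volume: mounts a predefined Secret object into the container
      secretName: string
      items:
      - key: string
        path: string
    configMap:                # configMap volume: mounts a predefined ConfigMap object into the container
      name: string
      items:
      - key: string
        path: string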
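As a concrete, minimal instance of the template above, here is one nginx container with requests/limits and an HTTP liveness probe. The pod name demo-nginx, the image nginx:1.17 and all values are illustrative assumptions. The nodes must be able to pull the image, or it must be loaded like the other images. The function just writes the manifest to a file so it can be reviewed before applying.

```bash
# Write a minimal Pod manifest to FILE (default demo-pod.yaml).
write_demo_pod() {
  local file=${1:-demo-pod.yaml}
  cat > "$file" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: demo-nginx
  labels:
    app: demo-nginx
spec:
  restartPolicy: Always
  containers:
    - name: nginx
      image: nginx:1.17
      imagePullPolicy: IfNotPresent
      ports:
        - name: http
          containerPort: 80
          protocol: TCP
      resources:
        requests:
          cpu: "100m"
          memory: "64Mi"
        limits:
          cpu: "200m"
          memory: "128Mi"
      livenessProbe:
        httpGet:
          path: /
          port: 80
        initialDelaySeconds: 5
        periodSeconds: 10
EOF
}
```

Usage: `write_demo_pod && kubectl apply --dry-run=client -f demo-pod.yaml` to validate the manifest. Then run `kubectl apply -f demo-pod.yaml` and `kubectl get pod demo-nginx`.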