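[Kubernetes] Learning Pods (8): Pod Scheduling with Directed Scheduling and Affinity Scheduling

These are my notes from studying 《Kubernetes权威指南》 (Kubernetes: The Definitive Guide).

Study notes: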

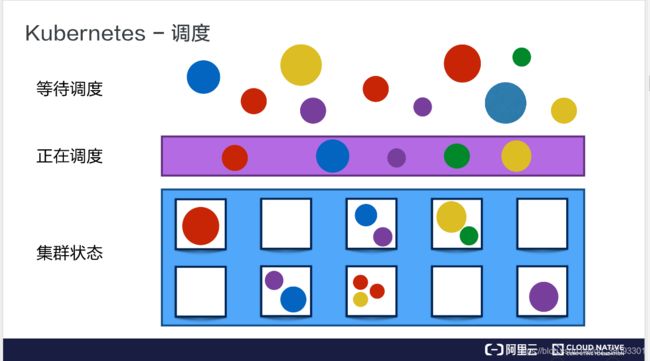

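Replica controllers such as RC rely on the system's automatic scheduling algorithm to deploy a group of Pods. Once the Pod is defined, the scheduler assigns it to a worker node based on the state of each node, following a load-balancing principle. In this mode a Pod replica may land on any available worker node.

The Alibaba Cloud University cloud native open course gives the following introduction to Deployment:

In some scenarios, however, the designer wants Pod replicas to be scheduled onto specific worker nodes, which calls for finer-grained scheduling policies that place Pods precisely.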

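NodeSelector is a directed scheduling policy. After labeling the worker nodes, you can use a node selector when creating the replica controller to restrict the set of nodes that Pods may be scheduled to. This is precise, but somewhat inflexible.

Affinity policies extend Pod scheduling further. As the word "affinity" suggests, the goal is a policy that is both finer-grained and more flexible in finding the most suitable node. It covers node affinity and Pod affinity, and has the following advantages:

- More expressive (not just a rigid "must match")

- Soft constraints are possible: rules can carry priorities, so the scheduler can fall back to the next best option

- It extends to affinity or anti-affinity between Pods, constraining placement by the labels of other Pods already running on a node

Node affinity is similar to NodeSelector and adds the first two advantages above. Pod affinity and anti-affinity constraints are expressed through Pod labels rather than node labels.

The use cases for these policies are easy to imagine. Below are running examples of both.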

I. NodeSelector: Directed Scheduling

1. Label the target Node with the kubectl label command

    kubectl label nodes <node-name> <label-key>=<label-value>

Label node xu.node1 with zone=north:

    # kubectl label nodes xu.node1 zone=north
    node/xu.node1 labeled

Describe xu.node1 to see the new label in its label list, alongside the predefined labels that K8s gives each Node:

    # kubectl describe node xu.node1
    Name:               xu.node1
    Roles:              <none>
    Labels:             beta.kubernetes.io/arch=amd64
                        beta.kubernetes.io/os=linux
                        kubernetes.io/arch=amd64
                        kubernetes.io/hostname=xu.node1
                        kubernetes.io/os=linux
                        zone=north
    ......

Create the configuration file redis-master-controller.yaml.

The book creates an RC here; these notes use a Deployment instead.

Note that the nodeSelector field sits under spec.template.spec, so it is part of the Pod definition (the Pod is, after all, the basic unit of scheduling).

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: redis-master
      labels:
        name: redis-master
    spec:
      replicas: 1
      selector:
        matchLabels:
          name: redis-master
      template:
        metadata:
          name: redis-master
          labels:
            name: redis-master
        spec:
          containers:
          - name: master
            image: kubeguide/redis-master
            ports:
            - containerPort: 6379
          nodeSelector:
            zone: north

Create the Deployment:

    # kubectl create -f redis-master-controller.yaml
    deployment.apps/redis-master created

The node selector now appears in the definition of the Pod created by the Deployment, and the Events show that the Pod was successfully scheduled to xu.node1:

    # kubectl describe pod redis-master-799d96bf47-nn6x2
    Name:           redis-master-799d96bf47-nn6x2
    Namespace:      default
    Priority:       0
    Node:           xu.node1/192.168.31.189
    ......
    Node-Selectors: zone=north
    ......
    Events:
      Type    Reason     Age        From               Message
      ----    ------     ----       ----               -------
      Normal  Scheduled  <unknown>  default-scheduler  Successfully assigned default/redis-master-799d96bf47-nn6x2 to xu.node1

The kubectl get pods -o wide command shows more detail for each Pod in the list, including the node it was scheduled to:

    # kubectl get pods -o wide
    NAME                            READY   STATUS    RESTARTS   AGE    IP          NODE       NOMINATED NODE   READINESS GATES
    redis-master-799d96bf47-nn6x2   1/1     Running   0          7m7s   10.44.0.3   xu.node1   <none>           <none>

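2. Commands for working with Node labels

(1) List the nodes that carry a given label:

    # kubectl get node -l "key=value"

    # kubectl get node -l "zone=north"
    NAME       STATUS   ROLES    AGE   VERSION
    xu.node1   Ready    <none>   10d   v1.16.3

(2) To delete a label, put the label key followed directly by a minus sign at the end of the command:

    # kubectl label nodes <node-name> <label-key>-

(3) To change the value of a label, add the --overwrite flag:

    # kubectl label nodes <node-name> <label-key>=<label-value> --overwrite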

Delete the existing Deployment, change the label on xu.node1 to zone=south, and deploy redis-master-controller.yaml again:

    # kubectl label nodes xu.node1 zone=south --overwrite
    node/xu.node1 labeled

The Pod stays in the Pending state:

    # kubectl get pods -o wide
    NAME                            READY   STATUS    RESTARTS   AGE   IP       NODE     NOMINATED NODE   READINESS GATES
    redis-master-799d96bf47-k92qz   0/1     Pending   0          67s   <none>   <none>   <none>           <none>

The Events of the Pod give the reason: no node matches the node selector.

    Events:
      Type     Reason            Age        From               Message
      ----     ------            ----       ----               -------
      Warning  FailedScheduling  <unknown>  default-scheduler  0/2 nodes are available: 2 node(s) didn't match node selector.
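
The Pending result follows from how nodeSelector is matched: every key/value pair in the selector has to appear in the node's labels. A minimal sketch of that check in Python, as a toy model only and not the actual kube-scheduler code. The node name `xu.master` and the function names are assumptions made up for illustration; only xu.node1 appears in these notes.

```python
# Toy model of nodeSelector filtering; this is not the real kube-scheduler code.

def matches_node_selector(node_labels, node_selector):
    """Return True if every key/value pair in node_selector is present in node_labels."""
    return all(node_labels.get(key) == value for key, value in node_selector.items())


def feasible_nodes(nodes, node_selector):
    """Return the names of the nodes whose labels satisfy the selector."""
    return [name for name, labels in nodes.items()
            if matches_node_selector(labels, node_selector)]


# Hypothetical two-node cluster; "xu.master" is an assumed name.
nodes = {
    "xu.master": {"kubernetes.io/os": "linux"},
    "xu.node1": {"kubernetes.io/os": "linux", "zone": "north"},
}
selector = {"zone": "north"}

print(feasible_nodes(nodes, selector))  # ['xu.node1']

# Relabel xu.node1 to zone=south: no node matches, so the Pod would stay Pending.
nodes["xu.node1"]["zone"] = "south"
print(feasible_nodes(nodes, selector))  # []
```

An empty result corresponds to the "didn't match node selector" message: the Pod is not rejected, it simply waits until some node carries the required label.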

Remove the custom label from xu.node1:

    # kubectl label node xu.node1 zone-
    node/xu.node1 labeled

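II. NodeAffinity: Node Affinity Scheduling

NodeAffinity is a newer scheduling policy intended to replace NodeSelector. It has two ways of expressing node affinity:

- RequiredDuringSchedulingIgnoredDuringExecution: a hard constraint that must be satisfied.

- PreferredDuringSchedulingIgnoredDuringExecution: a soft constraint; matching nodes are preferred according to weight.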
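
The difference between the two expressions can be pictured as two phases: the hard rule filters nodes, the soft rules only rank the survivors. Below is a minimal Python sketch of that idea. It is a toy model under my own assumptions (the real scheduler combines many more scoring factors), and the node names, label keys and the `schedule` function are hypothetical.

```python
# Toy two-phase model: filter by the hard rule, then rank by soft-rule weights.
# Not the real kube-scheduler, which combines many other scoring factors.

def schedule(nodes, required, preferred):
    """nodes: {name: labels}; required: predicate(labels) -> bool;
    preferred: list of (weight, predicate). Returns the chosen node name or None."""
    candidates = [name for name, labels in nodes.items() if required(labels)]
    if not candidates:
        return None  # no node passes the hard constraint: the Pod stays Pending

    def score(name):
        # Sum the weights of all soft rules that this node satisfies.
        return sum(weight for weight, pred in preferred if pred(nodes[name]))

    # max() returns the first best candidate, so ties resolve by insertion order here.
    return max(candidates, key=score)


# Hypothetical cluster and label keys, for illustration only.
nodes = {
    "node-a": {"arch": "amd64"},
    "node-b": {"arch": "amd64", "disk-type": "ssd"},
    "node-c": {"arch": "arm64", "disk-type": "ssd"},
}
required = lambda labels: labels.get("arch") == "amd64"
preferred = [(1, lambda labels: labels.get("disk-type") == "ssd")]

print(schedule(nodes, required, preferred))                          # node-b
print(schedule({"node-a": {"arch": "amd64"}}, required, preferred))  # node-a
print(schedule({"node-c": {"arch": "arm64"}}, required, preferred))  # None
```

The second call shows the "fall back to the next best option" behavior: with no SSD node available, a node that only meets the hard rule is still chosen.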

Below is a running example:

1. Create a Pod definition file with NodeAffinity

Create with-node-affinity.yaml as follows:

    apiVersion: v1
    kind: Pod
    metadata:
      name: with-node-affinity
    spec:
      affinity:
        nodeAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
            nodeSelectorTerms:   # hard constraint: match labels with nodeSelectorTerms.matchExpressions
            - matchExpressions:
              - key: beta.kubernetes.io/arch
                operator: In
                values:
                - amd64
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 1   # soft constraint: set a weight, then give the match expressions under preference
            preference:
              matchExpressions:
              - key: disk-type
                operator: In
                values:
                - ssd
      containers:
      - name: with-node-affinity
        image: nginx

2. Create the Pod and check how it was scheduled

Scheduling succeeds. Note that the NodeAffinity configuration does not appear in the describe output.

    # kubectl create -f with-node-affinity.yaml
    pod/with-node-affinity created

    Events:
      Type    Reason     Age        From               Message
      ----    ------     ----       ----               -------
      Normal  Scheduled  <unknown>  default-scheduler  Successfully assigned default/with-node-affinity to xu.node1

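Additional notes on NodeAffinity rules:

- If both nodeSelector and nodeAffinity are specified, both must be satisfied.

- If nodeAffinity contains multiple nodeSelectorTerms, satisfying any one of them is enough.

- If a single nodeSelectorTerm contains multiple matchExpressions, all of them must be satisfied.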
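
These three rules amount to "nodeSelector AND (term 1 OR term 2 OR ...)", where each term is the AND of its expressions. A small Python sketch of that evaluation follows. It is only an illustration of the rules as stated, not scheduler source code; the function names are made up, and only the operators In, NotIn, Exists and DoesNotExist are modeled.

```python
# Toy evaluation of the combination rules; not the real scheduler implementation.

def expr_matches(labels, expr):
    """Evaluate one matchExpressions entry (only four operators are modeled here)."""
    key, op, values = expr["key"], expr["operator"], expr.get("values", [])
    if op == "In":
        return key in labels and labels[key] in values
    if op == "NotIn":
        return key not in labels or labels[key] not in values
    if op == "Exists":
        return key in labels
    if op == "DoesNotExist":
        return key not in labels
    raise ValueError(f"operator not modeled in this sketch: {op}")


def term_matches(labels, term):
    """A single nodeSelectorTerm: ALL of its matchExpressions must hold."""
    return all(expr_matches(labels, e) for e in term["matchExpressions"])


def node_fits(labels, node_selector, node_selector_terms):
    """nodeSelector AND (term_1 OR term_2 OR ...)."""
    selector_ok = all(labels.get(k) == v for k, v in node_selector.items())
    affinity_ok = any(term_matches(labels, t) for t in node_selector_terms)
    return selector_ok and affinity_ok


labels = {"beta.kubernetes.io/arch": "amd64", "zone": "north"}
terms = [
    # Term 1 fails: the node has no disk-type label, and both expressions are required.
    {"matchExpressions": [
        {"key": "beta.kubernetes.io/arch", "operator": "In", "values": ["amd64"]},
        {"key": "disk-type", "operator": "In", "values": ["ssd"]},
    ]},
    # Term 2 holds, and one matching term is enough.
    {"matchExpressions": [
        {"key": "zone", "operator": "In", "values": ["north"]},
    ]},
]

print(node_fits(labels, {}, terms))                 # True
print(node_fits(labels, {"zone": "south"}, terms))  # False: nodeSelector must also match
print(node_fits(labels, {}, terms[:1]))             # False: the only term fails
```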

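III. PodAffinity: Pod Affinity and Anti-Affinity Scheduling

These policies decide placement based on the labels of Pods already running on the nodes.

The rule reads: if one or more Pods matching condition Y are running on a Node with label X, then this Pod should (or must not) run on that Node.

Label X here identifies the scope within the cluster used to group Nodes, such as a single node, a rack, or a zone. K8s expresses this through topologyKey.

topologyKey expresses the topology scope that a node belongs to. The topologyKey field must be defined; in a soft constraint, an empty value defaults to the combination of all scopes.

Values for topologyKey include:

- kubernetes.io/hostname

- failure-domain.beta.kubernetes.io/zone

- failure-domain.beta.kubernetes.io/region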
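
One way to picture topologyKey: nodes that share the same value for that label key form one domain, and a required Pod affinity rule accepts every node in a domain that already hosts a matching Pod. The Python sketch below models just that. It is a toy under stated assumptions: the node names and zone values are hypothetical, the label selector supports equality only, and the function is not part of any Kubernetes library.

```python
# Toy model of required pod affinity with a topologyKey; not the real scheduler code.

HOST = "kubernetes.io/hostname"
ZONE = "failure-domain.beta.kubernetes.io/zone"


def pod_affinity_nodes(nodes, running_pods, label_selector, topology_key):
    """Return nodes that share a topology domain with at least one matching Pod.

    nodes: {node_name: node_labels}
    running_pods: list of (node_name, pod_labels)
    label_selector: {key: value} pairs the Pod labels must contain (equality only)
    topology_key: node label key that defines the domain (host, zone, region, ...)
    """
    # Collect the topology domains that already host a matching Pod.
    domains = set()
    for node_name, pod_labels in running_pods:
        if all(pod_labels.get(k) == v for k, v in label_selector.items()):
            domain = nodes[node_name].get(topology_key)
            if domain is not None:
                domains.add(domain)
    # A node qualifies if it carries the topology label and its value is one of those domains.
    return [name for name, labels in nodes.items()
            if labels.get(topology_key) in domains]


# Hypothetical three-node cluster spread over two zones.
nodes = {
    "node1": {HOST: "node1", ZONE: "z1"},
    "node2": {HOST: "node2", ZONE: "z1"},
    "node3": {HOST: "node3", ZONE: "z2"},
}
pods = [("node1", {"security": "s1"})]

print(pod_affinity_nodes(nodes, pods, {"security": "s1"}, HOST))  # ['node1']
print(pod_affinity_nodes(nodes, pods, {"security": "s1"}, ZONE))  # ['node1', 'node2']
```

The same selector gives a wider or narrower set of candidate nodes depending only on the topologyKey, which is why the key has to be chosen deliberately.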

1. Create the reference Pod

Write the Pod configuration file pod-flag.yaml:

    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-flag
      labels:   # test labels for the reference Pod
        security: "s1"
        app: "nginx"
    spec:
      containers:
      - name: nginx
        image: nginx

Then create the Pod:

    # kubectl create -f pod-flag.yaml
    pod/pod-flag created

2. Testing Pod affinity scheduling

Define and create pod-affinity.

This Pod carries one Pod affinity rule.

The scheduling scope is the host (topologyKey: kubernetes.io/hostname).

Hard affinity constraint: the target node must already run a Pod whose labels satisfy security In {s1}.

    apiVersion: v1
    kind: Pod
    metadata:
      name: pod-affinity
    spec:
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: security
                operator: In
                values:
                - s1
            topologyKey: kubernetes.io/hostname
      containers:
      - name: with-pod-affinity
        image: kubernetes/pause

Create it:

    # kubectl create -f pod-affinity.yaml
    pod/pod-affinity created

List the Pods and check the details: pod-affinity has been scheduled to the same node as pod-flag.

    # kubectl get pods
    NAME           READY   STATUS    RESTARTS   AGE
    pod-affinity   1/1     Running   0          47s
    pod-flag       1/1     Running   0          6m54s

    Events:
      Type    Reason     Age        From               Message
      ----    ------     ----       ----               -------
      Normal  Scheduled  <unknown>  default-scheduler  Successfully assigned default/pod-affinity to xu.node1

3. Pod anti-affinity scheduling

Define and create anti-affinity.

This Pod has a hard constraint to be in the same zone as Pods labeled security=s1.

At the same time, its anti-affinity rule keeps it off any Node that runs a Pod labeled app=nginx.

Note the syntactic difference between affinity and anti-affinity: podAffinity versus podAntiAffinity.

    apiVersion: v1
    kind: Pod
    metadata:
      name: anti-affinity
    spec:
      affinity:
        podAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: security
                operator: In
                values:
                - s1
            topologyKey: failure-domain.beta.kubernetes.io/zone
        podAntiAffinity:
          requiredDuringSchedulingIgnoredDuringExecution:
          - labelSelector:
              matchExpressions:
              - key: app
                operator: In
                values:
                - nginx
            topologyKey: kubernetes.io/hostname
      containers:
      - name: anti-affinity
        image: kubernetes/pause

Create the Pod and list the Pods: anti-affinity stays in the Pending state.

    # kubectl get pods
    NAME            READY   STATUS    RESTARTS   AGE
    anti-affinity   0/1     Pending   0          7s
    pod-affinity    1/1     Running   0          58m
    pod-flag        1/1     Running   0          64m

The Events show that the scheduler found no Node that meets the requirements:

    Events:
      Type     Reason            Age        From               Message
      ----     ------            ----       ----               -------
      Warning  FailedScheduling  <unknown>  default-scheduler  0/2 nodes are available: 1 node(s) didn't match pod affinity rules, 1 node(s) didn't match pod affinity/anti-affinity, 1 node(s) had taints that the pod didn't tolerate.
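
To see how the two rules interact, here is a toy Python model that combines a required podAffinity with a required podAntiAffinity. It is a sketch under my own assumptions, not the scheduler's code and not a reconstruction of this exact cluster: node names and zone values are hypothetical, selectors are equality-only, and taints are ignored.

```python
# Toy model combining required podAffinity and podAntiAffinity; not the real scheduler.

HOST = "kubernetes.io/hostname"
ZONE = "failure-domain.beta.kubernetes.io/zone"


def domains_with_match(nodes, pods, selector, topology_key):
    """Topology domains (values of topology_key) hosting a Pod whose labels contain selector."""
    return {
        nodes[node].get(topology_key)
        for node, labels in pods
        if all(labels.get(k) == v for k, v in selector.items())
        and nodes[node].get(topology_key) is not None
    }


def feasible(nodes, pods, affinity, anti_affinity):
    """affinity / anti_affinity are (selector, topology_key) tuples."""
    aff_domains = domains_with_match(nodes, pods, *affinity)
    anti_domains = domains_with_match(nodes, pods, *anti_affinity)
    result = []
    for name, labels in nodes.items():
        in_affinity = labels.get(affinity[1]) in aff_domains
        in_anti = labels.get(anti_affinity[1]) in anti_domains
        # The node must be inside an affinity domain and outside every anti-affinity domain.
        if in_affinity and not in_anti:
            result.append(name)
    return result


# The reference Pod carries both labels, as pod-flag does above.
pods = [("node1", {"security": "s1", "app": "nginx"})]
affinity = ({"security": "s1"}, ZONE)
anti_affinity = ({"app": "nginx"}, HOST)

one_worker = {"node1": {HOST: "node1", ZONE: "z1"}}
two_workers = {"node1": {HOST: "node1", ZONE: "z1"},
               "node2": {HOST: "node2", ZONE: "z1"}}
no_zone_label = {"node1": {HOST: "node1"}, "node2": {HOST: "node2"}}

print(feasible(one_worker, pods, affinity, anti_affinity))     # []
print(feasible(two_workers, pods, affinity, anti_affinity))    # ['node2']
print(feasible(no_zone_label, pods, affinity, anti_affinity))  # []
```

In this model the Pod can only be placed when a second node exists in the same zone as the reference Pod, and when the nodes actually carry the zone label. With a single schedulable worker, or without zone labels, no node qualifies and the Pod remains Pending.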