Using Kubernetes PersistentVolume and PersistentVolumeClaim

Set up NFS on a dedicated machine

# Install nfs-utils

yum -y install nfs-utils

systemctl start nfs

systemctl enable nfs

# Create the directories to export

mkdir /data/volumes -pv

cd /data/volumes

mkdir v{1,2,3,4,5}

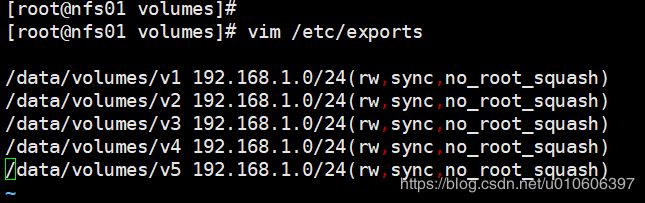

Configure the exported directories

vim /etc/exports

/data/volumes/v1 192.168.1.0/24(rw,sync,no_root_squash)

/data/volumes/v2 192.168.1.0/24(rw,sync,no_root_squash)

/data/volumes/v3 192.168.1.0/24(rw,sync,no_root_squash)

/data/volumes/v4 192.168.1.0/24(rw,sync,no_root_squash)

/data/volumes/v5 192.168.1.0/24(rw,sync,no_root_squash)
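The five export lines differ only in the directory number, so they can be generated rather than typed. A minimal sketch, assuming the same base path, subnet, and options as above; `gen_exports` is a hypothetical helper name, not part of nfs-utils:

```shell
#!/bin/sh
# Print one /etc/exports line per shared directory.
# Arguments (all optional): base directory, client subnet, number of directories.
gen_exports() {
    base=${1:-/data/volumes}
    subnet=${2:-192.168.1.0/24}
    count=${3:-5}
    i=1
    while [ "$i" -le "$count" ]; do
        printf '%s/v%s %s(rw,sync,no_root_squash)\n' "$base" "$i" "$subnet"
        i=$((i + 1))
    done
}

# Preview on stdout; append to /etc/exports only after reviewing the output:
#   gen_exports >> /etc/exports
gen_exports
```

Printing to stdout first keeps the script harmless to run; the redirect into /etc/exports is left as a manual step.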

# Re-export everything listed in /etc/exports

exportfs -arv

# List the directories this host exports

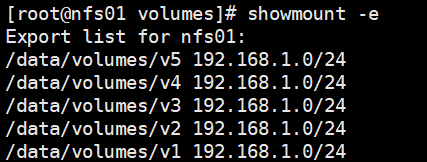

showmount -e

Install NFS on every Kubernetes node

yum -y install nfs-utils

systemctl start nfs

systemctl enable nfs

Check from a node that NFS works

mkdir /v1

# 192.168.1.190 is the IP of the NFS host

mount -t nfs 192.168.1.190:/data/volumes/v1 /v1

echo test v1 >> /v1/test.txt

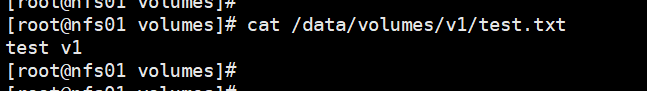

# On the NFS host

cat /data/volumes/v1/test.txt

The test.txt written on the node shows up on the NFS host, so the export works.

# Unmount the /v1 test directory on the node

umount /v1
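The manual write test above can be wrapped in a small helper for repeating it on each node. This is only a sketch: `check_rw` is a hypothetical name, and it only confirms that the given directory accepts a write and reads it back. It does not by itself prove the directory is an NFS mount.

```shell
#!/bin/sh
# Write a marker file into a directory, read it back, then clean up.
# Returns 0 and prints "writable: <dir>" on success, non-zero otherwise.
check_rw() {
    dir=$1
    marker="$dir/.rw-check.$$"
    if echo ok 2>/dev/null > "$marker" && [ "$(cat "$marker")" = ok ]; then
        rm -f "$marker"
        echo "writable: $dir"
    else
        echo "NOT writable: $dir" >&2
        return 1
    fi
}

# Usage on a node (as root), with the NFS host IP from this walkthrough:
#   mkdir -p /v1
#   mount -t nfs 192.168.1.190:/data/volumes/v1 /v1
#   check_rw /v1
#   umount /v1
```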

On the master node, create the PersistentVolumes, the PersistentVolumeClaim, and the Pod

vim pv.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv1
  labels:
    name: pv1
spec:
  nfs:
    # path: the exported directory on the NFS server
    path: /data/volumes/v1
    # server: IP of the NFS host
    server: 192.168.1.190
  # ReadWriteOnce: can be mounted read-write by a single node
  accessModes: ["ReadWriteOnce"]
  capacity:
    storage: 2Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv2
  labels:
    name: pv2
spec:
  nfs:
    path: /data/volumes/v2
    server: 192.168.1.190
  # ReadOnlyMany: can be mounted read-only by many nodes
  accessModes: ["ReadOnlyMany"]
  capacity:
    storage: 5Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv3
  labels:
    name: pv3
spec:
  nfs:
    path: /data/volumes/v3
    server: 192.168.1.190
  # ReadWriteMany: can be mounted read-write by many nodes
  accessModes: ["ReadWriteMany", "ReadWriteOnce"]
  capacity:
    storage: 10Gi
---
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv4
  labels:
    name: pv4
spec:
  nfs:
    path: /data/volumes/v4
    server: 192.168.1.190
  accessModes: ["ReadWriteMany", "ReadWriteOnce"]
  capacity:
    storage: 10Gi

vim pod-pvc.yaml

apiVersion: v1
# A claim for persistent storage
kind: PersistentVolumeClaim
metadata:
  name: pvc-myapp
  namespace: default
spec:
  accessModes: ["ReadWriteMany"]
  resources:
    requests:
      storage: 1Gi
---
apiVersion: v1
kind: Pod
metadata:
  name: pod-myapp
  namespace: default
spec:
  volumes:
  - name: html
    # Use the pvc-myapp claim
    persistentVolumeClaim:
      claimName: pvc-myapp
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
    volumeMounts:
    - name: html
      mountPath: /usr/share/nginx/html

Deploy

kubectl apply -f pv.yaml

kubectl apply -f pod-pvc.yaml

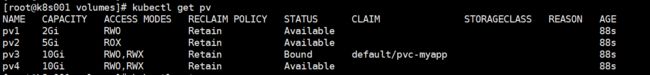

List the PVs

kubectl get pv

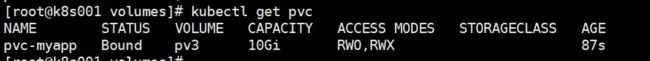

List the PVCs

kubectl get pvc

The claim was bound to pv3, so create /data/volumes/v3/index.html on the NFS host

echo "pv3 home page" >> /data/volumes/v3/index.html
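Why pv3 and not pv1 or pv2? The claim asks for ReadWriteMany and 1Gi, and a PV only qualifies if its access modes include the requested one and its capacity is at least the requested size. The sketch below is a simplified model of that filter over the four PVs from pv.yaml, not the actual Kubernetes binder, which also takes things like storageClassName and label selectors into account. `pv_table` and `matching_pvs` are hypothetical helper names.

```shell
#!/bin/sh
# Candidate PVs from pv.yaml: name, capacity in Gi, comma-separated access modes.
pv_table() {
    cat <<'EOF'
pv1 2 ReadWriteOnce
pv2 5 ReadOnlyMany
pv3 10 ReadWriteMany,ReadWriteOnce
pv4 10 ReadWriteMany,ReadWriteOnce
EOF
}

# Print the PVs that offer the requested access mode
# and have at least the requested capacity (in Gi).
matching_pvs() {
    want_mode=$1
    want_gi=$2
    pv_table | awk -v mode="$want_mode" -v gi="$want_gi" '
        index("," $3 ",", "," mode ",") > 0 && $2 >= gi { print $1 }'
}

# The request made by pvc-myapp: prints pv3 and pv4, one per line.
matching_pvs ReadWriteMany 1
```

Both pv3 and pv4 pass the filter with identical capacity, so either could have been chosen; in this run the cluster picked pv3.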

Check the pod

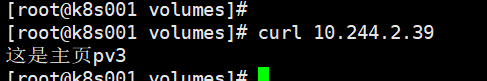

kubectl get pods -o wide

curl 10.244.2.39

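The response is the page written on the NFS host: /usr/share/nginx/html/ in the pod is backed by /data/volumes/v3 on the NFS server.

Next, change files under /usr/share/nginx/html/ inside the pod and check that the changes appear in /data/volumes/v3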

kubectl exec -it pod-myapp -- /bin/sh

cd /usr/share/nginx/html

echo "appended line" >> index.html

echo "new file" >> new.txt

On the NFS host, look in /data/volumes/v3 to confirm that both changes are there.

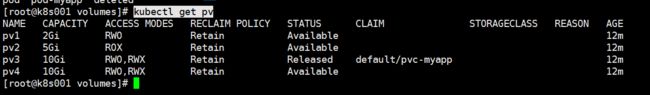

Delete the pod and the PVC

kubectl delete -f pod-pvc.yaml

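pv3 is now in the Released state.

Released: the PVC has been unbound from the PV, but the volume has not been reclaimed yet.

Manually created PVs default to the Retain reclaim policy, so after the PVC is deleted, the PV it was bound to keeps its old claim reference and cannot be bound by a new PVC.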
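The phase and the reclaim policy can also be read directly instead of picking them out of the `kubectl get pv` table. A small sketch, assuming kubectl is already configured for the cluster; `pv_phase` and `pv_reclaim_policy` are hypothetical wrapper names:

```shell
#!/bin/sh
# Print the lifecycle phase of a PV (for example Available, Bound, or Released).
pv_phase() {
    kubectl get pv "$1" -o jsonpath='{.status.phase}'
}

# Print the reclaim policy that applies once the PV's claim is deleted.
pv_reclaim_policy() {
    kubectl get pv "$1" -o jsonpath='{.spec.persistentVolumeReclaimPolicy}'
}

# Usage (on the master node):
#   pv_phase pv3
#   pv_reclaim_policy pv3
```

Note that jsonpath output carries no trailing newline, so add an `echo` after each call when using these interactively.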

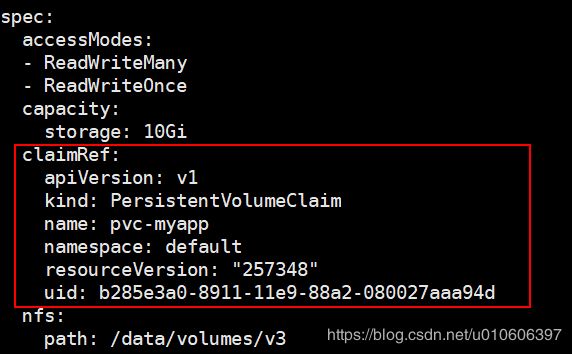

To make pv3 usable again, edit its definition

kubectl edit pv pv3

Delete the spec.claimRef section (the part in the red box) and save.

The PV goes back to Available.
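The same edit can be done without opening an editor by sending a JSON patch that removes spec.claimRef. A minimal sketch, assuming kubectl is configured for the cluster; `release_pv` is a hypothetical wrapper name:

```shell
#!/bin/sh
# Remove the stale claim reference from a Released PV so it can be bound again.
# Has the same effect as deleting spec.claimRef by hand in `kubectl edit pv <name>`.
release_pv() {
    pv_name=$1
    kubectl patch pv "$pv_name" --type json \
        -p '[{"op": "remove", "path": "/spec/claimRef"}]'
}

# Usage (on the master node):
#   release_pv pv3
#   kubectl get pv pv3
```

Clearing the claim reference does not touch the data: whatever the previous pod wrote to /data/volumes/v3 is still there and will be visible to the next claim that binds pv3.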