Backing Up and Restoring Elasticsearch with Snapshots

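To back up your cluster, you can use the snapshot API. It takes the current state and data of your cluster and saves them to a shared repository. The backup process is "smart": your first snapshot is a complete copy of the data, but every later snapshot stores only the difference between the existing snapshots and the new data. As you snapshot your data from time to time, the backup is added to and deleted from incrementally. Later backups are therefore quite fast, because they transfer only a small amount of data.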

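To use this feature, you must first create a repository to hold the data. Several repository types are available:

- Shared file system, such as a NAS

- Amazon S3

- HDFS (Hadoop Distributed File System)

- Azure Cloud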

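1 Configure NFS

Machine resources are limited here, so one of the ES servers doubles as the NFS server. Ideally, a dedicated backup server would act as the NFS server, with all ES servers as NFS clients.

| IP | Role |
| --- | --- |
| 192.168.1.202 | NFS server |
| 192.168.1.203 | NFS client |
| 192.168.1.204 | NFS client |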

1.1 Install NFS

Run the following on all three servers.

1.1.1 Check whether the NFS services are installed

rpm -qa | grep nfs

rpm -qa | grep rpcbind

If the packages are not installed, install them with:

yum install nfs-utils rpcbind

1.1.2 Enable the services at boot

On CentOS 6, enable the services at boot with:

chkconfig nfs on

chkconfig rpcbind on

On CentOS 7, enable the services at boot with:

systemctl enable rpcbind.service

systemctl enable nfs-server.service

1.1.3 Pin NFS to fixed ports and add firewall rules

vi /etc/sysconfig/nfs

Add the following:

RQUOTAD_PORT=30001
LOCKD_TCPPORT=30002
LOCKD_UDPPORT=30002
MOUNTD_PORT=30003
STATD_PORT=30004

# Open the firewall ports

# On the NFS clients, open ports 111, 2049, and 30001-30004 to the NFS server 192.168.1.202

sudo firewall-cmd --permanent --add-rich-rule='rule family="ipv4" source address="192.168.1.202" port protocol="tcp" port="111" accept'
sudo firewall-cmd --permanent --add-rich-rule='rule family="ipv4" source address="192.168.1.202" port protocol="udp" port="111" accept'
sudo firewall-cmd --permanent --add-rich-rule='rule family="ipv4" source address="192.168.1.202" port protocol="tcp" port="2049" accept'
sudo firewall-cmd --permanent --add-rich-rule='rule family="ipv4" source address="192.168.1.202" port protocol="udp" port="2049" accept'
sudo firewall-cmd --permanent --add-rich-rule='rule family="ipv4" source address="192.168.1.202" port protocol="tcp" port="30001" accept'
sudo firewall-cmd --permanent --add-rich-rule='rule family="ipv4" source address="192.168.1.202" port protocol="tcp" port="30002" accept'
sudo firewall-cmd --permanent --add-rich-rule='rule family="ipv4" source address="192.168.1.202" port protocol="tcp" port="30003" accept'
sudo firewall-cmd --permanent --add-rich-rule='rule family="ipv4" source address="192.168.1.202" port protocol="tcp" port="30004" accept'
sudo firewall-cmd --permanent --add-rich-rule='rule family="ipv4" source address="192.168.1.202" port protocol="udp" port="30001" accept'
sudo firewall-cmd --permanent --add-rich-rule='rule family="ipv4" source address="192.168.1.202" port protocol="udp" port="30002" accept'
sudo firewall-cmd --permanent --add-rich-rule='rule family="ipv4" source address="192.168.1.202" port protocol="udp" port="30003" accept'
sudo firewall-cmd --permanent --add-rich-rule='rule family="ipv4" source address="192.168.1.202" port protocol="udp" port="30004" accept'

# Reload the firewall so the permanent rules take effect

sudo firewall-cmd --reload
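The twelve rules above differ only in port and protocol, so they could be generated from a list. The following is a minimal sketch under that assumption; the names `NFS_SERVER`, `PORTS`, and `print_nfs_rules` are illustrative and not part of the original setup. It prints the commands rather than running them, so they can be reviewed first.

```shell
# Sketch: generate the rich rules from a port list instead of typing each one.
# NFS_SERVER and PORTS mirror the values used in this article.
NFS_SERVER="192.168.1.202"
PORTS="111 2049 30001 30002 30003 30004"

# Print one firewall-cmd invocation per port/protocol pair, then the reload.
# Nothing is executed here; the output is plain text for review.
print_nfs_rules() {
  for port in $PORTS; do
    for proto in tcp udp; do
      printf '%s\n' "firewall-cmd --permanent --add-rich-rule='rule family=\"ipv4\" source address=\"$NFS_SERVER\" port protocol=\"$proto\" port=\"$port\" accept'"
    done
  done
  printf '%s\n' "firewall-cmd --reload"
}

print_nfs_rules
```

Once the printed commands look right, one way to apply them would be to pipe the output to a root shell, for example `print_nfs_rules | sudo sh`.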

# Start the NFS services

On CentOS 6, run:

service rpcbind start

service nfs start

On CentOS 7, run:

systemctl start rpcbind.service

systemctl start nfs-server.service

# Check the NFS ports

[root@pc1 backup]# rpcinfo -p

   program vers proto   port  service
    100000    4   tcp    111  portmapper
    100000    3   tcp    111  portmapper
    100000    2   tcp    111  portmapper
    100000    4   udp    111  portmapper
    100000    3   udp    111  portmapper
    100000    2   udp    111  portmapper
    100005    1   udp  30003  mountd
    100005    1   tcp  30003  mountd
    100005    2   udp  30003  mountd
    100005    2   tcp  30003  mountd
    100005    3   udp  30003  mountd
    100005    3   tcp  30003  mountd
    100003    2   tcp   2049  nfs
    100003    3   tcp   2049  nfs
    100003    4   tcp   2049  nfs
    100227    2   tcp   2049  nfs_acl
    100227    3   tcp   2049  nfs_acl
    100003    2   udp   2049  nfs
    100003    3   udp   2049  nfs
    100003    4   udp   2049  nfs
    100227    2   udp   2049  nfs_acl
    100227    3   udp   2049  nfs_acl
    100021    1   udp  30002  nlockmgr
    100021    3   udp  30002  nlockmgr
    100021    4   udp  30002  nlockmgr
    100021    1   tcp  30002  nlockmgr
    100021    3   tcp  30002  nlockmgr
    100021    4   tcp  30002  nlockmgr

1.1.4 Create the shared directory

[root@pc1 ElasticSearch]# pwd

/data/ElasticSearch

[root@pc1 ElasticSearch]# mkdir backup

chown -R EsUser:EsUser backup

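1.2 Configure the NFS server

Run on 192.168.1.202:

vi /etc/exports

Add:

/data/ElasticSearch/backup 192.168.1.203(rw)
/data/ElasticSearch/backup 192.168.1.204(rw)

# Reload the export table so the change takes effect immediately

exportfs -a

# List the directories available for mounting

showmount -e 192.168.1.202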

1.3 Configure the NFS clients

Run on 192.168.1.203 and 192.168.1.204:

# List the directories available for mounting

[root@pc3 ElasticSearch]# showmount -e 192.168.1.202

Export list for 192.168.1.202:

/data/ElasticSearch/backup 192.168.1.204,192.168.1.203

# Mount the share

mount -t nfs 192.168.1.202:/data/ElasticSearch/backup /data/ElasticSearch/backup

# Mount automatically at boot

vi /etc/fstab

Add the following line:

192.168.1.202:/data/ElasticSearch/backup /data/ElasticSearch/backup nfs defaults 0 0
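Editing /etc/fstab by hand on every client makes it easy to add the same line twice. As a rough sketch, the entry could instead be appended only when it is missing. The helper name `add_fstab_entry` and the scratch file path are assumptions for illustration; the sketch writes to a scratch file by default so it can be tried without touching the real /etc/fstab.

```shell
# Sketch: append an fstab entry only if the exact line is not already present.
# FSTAB_FILE defaults to a scratch file (an assumption for safe experimentation);
# point it at /etc/fstab, as root, only after reviewing the result.
FSTAB_FILE="${FSTAB_FILE:-/tmp/fstab.example}"
ENTRY="192.168.1.202:/data/ElasticSearch/backup /data/ElasticSearch/backup nfs defaults 0 0"

add_fstab_entry() {
  # $1 = fstab file, $2 = full entry line
  touch "$1"
  if grep -qxF "$2" "$1"; then
    # -x matches whole lines, -F treats the entry as a fixed string
    echo "entry already present"
  else
    printf '%s\n' "$2" >> "$1"
    echo "entry added"
  fi
}

add_fstab_entry "$FSTAB_FILE" "$ENTRY"
```

After the real file has been updated, `mount -a` can be used to check that the new entry mounts cleanly before the next reboot.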

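1.4 Update the ES configuration

Once the shared directory is in place, update the ES configuration and restart ES for the change to take effect.

# Add the following setting to elasticsearch.yml to set the backup repository path

path.repo: ["/data/ElasticSearch/backup"]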

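2 Create snapshots

2.1 Register a shared file system repository

PUT _snapshot/my_backup
{
  "type": "fs",
  "settings": {
    "location": "/data/ElasticSearch/backup"
  }
}

We give the repository a name; in this example it is my_backup.

We specify that the repository type is a shared file system.

Finally, we provide a mounted device as the destination.

Note: the shared file system path must be accessible from every node in the cluster.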

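Example using curl (note that the host and repository location in this example differ from the values used above):

curl -H "Content-Type: application/json" -XPUT "http://10.236.9.20:9200/_snapshot/my_backup" -d '{"type":"fs","settings":{"location":"/k8s/backup"}}'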

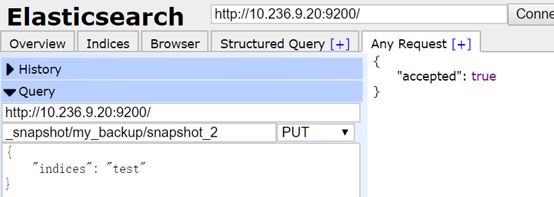
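Because the repository path has to be reachable from every node, it can be worth asking Elasticsearch to check this after registration. The sketch below builds a request to the repository verify endpoint (`POST _snapshot/<repository>/_verify`). `ES_URL`, `REPO`, and `verify_url` are illustrative names, and the localhost address is an assumption; the command is only printed, not sent.

```shell
# Sketch: build the "verify repository" request for the repository registered above.
# ES_URL and REPO are assumptions; adjust them to your cluster.
ES_URL="${ES_URL:-http://localhost:9200}"
REPO="${REPO:-my_backup}"

verify_url() {
  printf '%s/_snapshot/%s/_verify\n' "$ES_URL" "$REPO"
}

# Show the request; remove the leading "echo" to actually send it.
echo curl -s -XPOST "$(verify_url)"
```

If a node cannot write to the shared path, the verify call is expected to report an error instead of a node list, which usually points at the NFS mount or its permissions on that node.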

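2.2 Snapshot specific indices

Syntax:

Back up all indices:

curl -XPUT "http://localhost:9200/_snapshot/my_backup/snapshot_1?wait_for_completion=true"

Back up selected indices:

PUT _snapshot/my_backup/snapshot_2
{
  "indices": "index_1,index_2"
}

This snapshot command backs up only index_1 and index_2.

Example of taking a backup with elasticsearch-head: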

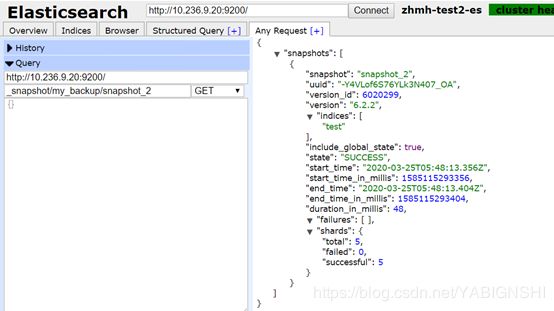
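For scheduled backups, the request for a partial snapshot could be assembled by a small script. The following is a sketch only: the date-based snapshot name is an illustrative convention, `snapshot_body` is a hypothetical helper, and the `ignore_unavailable` and `include_global_state` options are optional additions that the original example does not use.

```shell
# Sketch: build the URL and body for a snapshot of selected indices.
# ES_URL is an assumption; REPO matches the repository registered earlier.
ES_URL="${ES_URL:-http://localhost:9200}"
REPO="my_backup"
SNAPSHOT="snapshot_$(date +%Y%m%d)"   # e.g. snapshot_20240131 (naming is illustrative)

snapshot_body() {
  # Join all arguments with commas: index_1 index_2 -> index_1,index_2
  # The IFS change happens in a subshell, so it does not leak.
  joined="$(IFS=','; printf '%s' "$*")"
  printf '{"indices":"%s","ignore_unavailable":true,"include_global_state":false}\n' "$joined"
}

# Print the request that would be sent.
echo "PUT $ES_URL/_snapshot/$REPO/$SNAPSHOT?wait_for_completion=true"
snapshot_body index_1 index_2
```

The printed body can be passed to curl with `-d`, together with the `Content-Type: application/json` header shown in the earlier curl example.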

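2.3 Query snapshots

# View a single snapshot

GET _snapshot/my_backup/snapshot_2

The same query can be run from elasticsearch-head.

To get a complete list of all snapshots in a repository, replace the snapshot name with the _all placeholder:

GET _snapshot/my_backup/_all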

2.4 Monitor snapshot progress

GET _snapshot/my_backup/snapshot_2/_status

A snapshot that is still running shows IN_PROGRESS as its state.
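When a snapshot is started without wait_for_completion, a script may need to wait for it to finish. The sketch below polls the _status endpoint and stops once the snapshot is no longer running. All function names and `ES_URL` are assumptions for illustration. Depending on the endpoint and Elasticsearch version, a running snapshot may be reported as IN_PROGRESS or STARTED, so the sketch treats both (and INIT) as "still running".

```shell
# Sketch: poll the _status endpoint until the snapshot stops running.
ES_URL="${ES_URL:-http://localhost:9200}"

snapshot_state() {
  # Reads a _status response on stdin and prints the value of its "state" field.
  # A plain-text match keeps the sketch dependency-free; a JSON tool such as jq
  # would be more robust. Per-shard entries use "stage", so they do not match.
  tr -d '\n' | sed -n 's/.*"state"[[:space:]]*:[[:space:]]*"\([A-Z_]*\)".*/\1/p'
}

is_running() {
  # Assumption: these values mean the snapshot has not finished yet.
  case "$1" in
    IN_PROGRESS|STARTED|INIT) return 0 ;;
    *) return 1 ;;
  esac
}

wait_for_snapshot() {
  # $1 = repository, $2 = snapshot name
  while :; do
    state="$(curl -s "$ES_URL/_snapshot/$1/$2/_status" | snapshot_state)"
    echo "state: ${state:-unknown}"
    is_running "$state" || break
    sleep 5
  done
}

# Example (requires a reachable cluster): wait_for_snapshot my_backup snapshot_2
```

The loop also stops when the state cannot be read (for example, if the cluster is unreachable), so the caller should still check the final state printed before relying on the backup.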

Sample output of the _status request for a completed snapshot:

{
  "snapshots": [
    {
      "snapshot": "snapshot_2",
      "repository": "my_backup",
      "uuid": "-Y4VLof6S76YLk3N407_OA",
      "state": "SUCCESS",
      "include_global_state": true,
      "shards_stats": {
        "initializing": 0,
        "started": 0,
        "finalizing": 0,
        "done": 5,
        "failed": 0,
        "total": 5
      },
      "stats": {
        "number_of_files": 4,
        "processed_files": 4,
        "total_size_in_bytes": 4130,
        "processed_size_in_bytes": 4130,
        "start_time_in_millis": 1585115293376,
        "time_in_millis": 18
      },
      "indices": {
        "test": {
          "shards_stats": {
            "initializing": 0,
            "started": 0,
            "finalizing": 0,
            "done": 5,
            "failed": 0,
            "total": 5
          },
          "stats": {
            "number_of_files": 4,
            "processed_files": 4,
            "total_size_in_bytes": 4130,
            "processed_size_in_bytes": 4130,
            "start_time_in_millis": 1585115293376,
            "time_in_millis": 18
          },
          "shards": {
            "0": {
              "stage": "DONE",
              "stats": {
                "number_of_files": 0,
                "processed_files": 0,
                "total_size_in_bytes": 0,
                "processed_size_in_bytes": 0,
                "start_time_in_millis": 1585115293378,
                "time_in_millis": 3
              }
            },
            "1": {
              "stage": "DONE",
              "stats": {
                "number_of_files": 0,
                "processed_files": 0,
                "total_size_in_bytes": 0,
                "processed_size_in_bytes": 0,
                "start_time_in_millis": 1585115293379,
                "time_in_millis": 2
              }
            },
            "2": {
              "stage": "DONE",
              "stats": {
                "number_of_files": 4,
                "processed_files": 4,
                "total_size_in_bytes": 4130,
                "processed_size_in_bytes": 4130,
                "start_time_in_millis": 1585115293380,
                "time_in_millis": 14
              }
            },
            "3": {
              "stage": "DONE",
              "stats": {
                "number_of_files": 0,
                "processed_files": 0,
                "total_size_in_bytes": 0,
                "processed_size_in_bytes": 0,
                "start_time_in_millis": 1585115293376,
                "time_in_millis": 7
              }
            },
            "4": {
              "stage": "DONE",
              "stats": {
                "number_of_files": 0,
                "processed_files": 0,
                "total_size_in_bytes": 0,
                "processed_size_in_bytes": 0,
                "start_time_in_millis": 1585115293376,
                "time_in_millis": 7
              }
            }
          }
        }
      }
    }
  ]
}

3 Restore from a snapshot

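Just append _restore to the ID of the snapshot you want to restore into the cluster:

POST _snapshot/my_backup/snapshot_2/_restore

By default, every index stored in the snapshot is restored. If the snapshot contains five indices, all five are restored into the cluster. As with the snapshot API, you can also choose which specific indices to restore.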

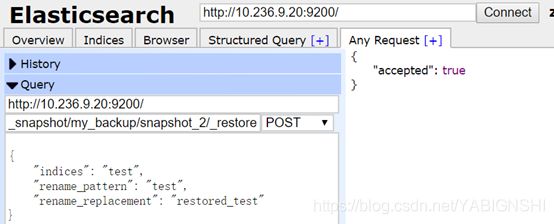

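Additional options let you rename indices. They match index names against a pattern and give each match a new name during the restore. This is useful when you want to restore old data to verify its contents, or to process it in some other way, without replacing existing data. Let's restore a single index from a snapshot and give it a replacement name:

POST _snapshot/my_backup/snapshot_1/_restore
{
  "indices": "index_1",
  "rename_pattern": "index_(.+)",
  "rename_replacement": "restored_index_$1"
}

Restore only the index_1 index and ignore the other indices in the snapshot.

Find the indices being restored whose names match the given pattern.

Then rename them according to the replacement pattern.

This restores index_1 into your cluster, renamed to restored_index_1.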

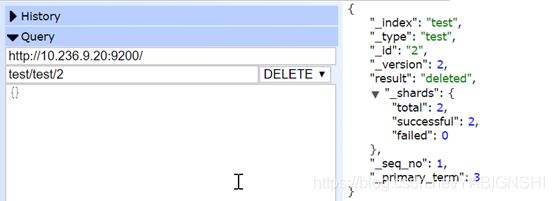
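To see what a rename pattern would do before running a restore, the substitution can be approximated locally. This is only an analogy: Elasticsearch applies the pattern as a Java regular expression with `$1` for the captured group, while `sed -E` uses `\1`. The helper name `preview_rename` is hypothetical.

```shell
# Sketch: preview the effect of
#   "rename_pattern": "index_(.+)", "rename_replacement": "restored_index_$1"
# using sed. Java regex ($1) and sed -E (\1) differ in syntax, so treat this
# as an approximation for simple patterns only.
preview_rename() {
  # $1 = index name as stored in the snapshot
  printf '%s\n' "$1" | sed -E 's/index_(.+)/restored_index_\1/'
}

preview_rename index_1   # prints restored_index_1
preview_rename logs      # no match, so the name is printed unchanged
```

A name that does not match the pattern is left as it is, which is why a restore with a rename pattern can still collide with an existing index if the pattern is too narrow.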

试验:

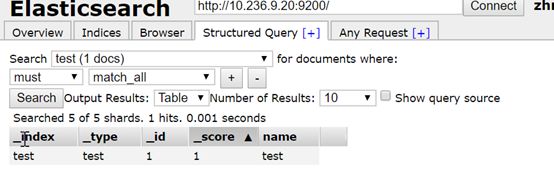

删除一条数据:

恢复的时候报错:

{
  "error": {
    "root_cause": [
      {
        "type": "snapshot_restore_exception",
        "reason": "[my_backup:snapshot_2/-Y4VLof6S76YLk3N407_OA] cannot restore index [test] because an open index with same name already exists in the cluster. Either close or delete the existing index or restore the index under a different name by providing a rename pattern and replacement name"
      }
    ],
    "type": "snapshot_restore_exception",
    "reason": "[my_backup:snapshot_2/-Y4VLof6S76YLk3N407_OA] cannot restore index [test] because an open index with same name already exists in the cluster. Either close or delete the existing index or restore the index under a different name by providing a rename pattern and replacement name"
  },
  "status": 500
}

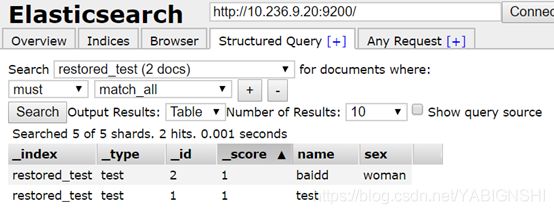

解决办法:恢复成一个新的索引(现有索引会保留)

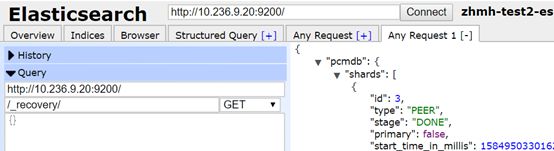

#监控恢复进度

根据需要将restored_test里的要恢复的数据插入到test索引里。
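One possible way to copy the missing documents back is the reindex API (`POST _reindex`). The sketch below builds a request body that copies from restored_test into test while creating only documents that do not already exist there (`op_type: create` with `conflicts: proceed`). This is an assumed approach, not the method the original experiment used, and `reindex_body` is a hypothetical helper.

```shell
# Sketch: build a _reindex body that copies documents from the restored index
# back into the live index without overwriting documents that still exist.
ES_URL="${ES_URL:-http://localhost:9200}"   # assumption: adjust to your cluster

reindex_body() {
  # $1 = source index, $2 = destination index
  # op_type=create only adds documents missing from the destination;
  # conflicts=proceed skips the ones that are already there instead of aborting.
  printf '{"conflicts":"proceed","source":{"index":"%s"},"dest":{"index":"%s","op_type":"create"}}\n' "$1" "$2"
}

# Print the request that would be sent.
echo "POST $ES_URL/_reindex"
reindex_body restored_test test
```

To restore only specific documents rather than everything that is missing, a `query` can be added under `source`; the restored_test index can be deleted once the data has been checked.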

Backup reference:

https://www.elastic.co/guide/cn/elasticsearch/guide/current/backing-up-your-cluster.html

Restore reference:

https://www.elastic.co/guide/cn/elasticsearch/guide/current/_restoring_from_a_snapshot.html#_restoring_from_a_snapshot