Spark Real-Time Project, Day 4: Real-Time Stream Splitting at the ODS Layer (Routing Data to Different Topics by Table)

Writing the Code

Continue adding code to the existing spark-gmall-dw-realtime project.

Add MyKafkaSinkUtil

In scala\com\atguigu\gmall\realtime\utils\MyKafkaSinkUtil.scala:

```scala
package com.atguigu.gmall.realtime.utils

import java.util.Properties

import org.apache.kafka.clients.producer.{KafkaProducer, ProducerRecord}

object MyKafkaSinkUtil {

  // Broker list read from config.properties via the project's PropertiesUtil
  private val properties: Properties = PropertiesUtil.load("config.properties")
  val broker_list: String = properties.getProperty("kafka.broker.list")

  // One producer per JVM (driver or executor), created lazily on first use
  var kafkaProducer: KafkaProducer[String, String] = null

  def createKafkaProducer: KafkaProducer[String, String] = {
    val properties = new Properties
    properties.put("bootstrap.servers", broker_list)
    properties.put("key.serializer", "org.apache.kafka.common.serialization.StringSerializer")
    properties.put("value.serializer", "org.apache.kafka.common.serialization.StringSerializer")
    // Idempotent producer: the producer's internal retries do not write duplicates
    // (the key is "enable.idempotence"; a misspelled key is silently ignored)
    properties.put("enable.idempotence", (true: java.lang.Boolean))
    var producer: KafkaProducer[String, String] = null
    try {
      producer = new KafkaProducer[String, String](properties)
    } catch {
      case e: Exception => e.printStackTrace()
    }
    producer
  }

  // Synchronized so that concurrent tasks in one executor JVM create only one producer
  private def getProducer: KafkaProducer[String, String] = synchronized {
    if (kafkaProducer == null) kafkaProducer = createKafkaProducer
    kafkaProducer
  }

  // The two main send methods
  // Without a key: the producer chooses the partition
  def send(topic: String, msg: String): Unit = {
    getProducer.send(new ProducerRecord[String, String](topic, msg))
  }

  // With a key: records with the same key go to the same partition
  def send(topic: String, key: String, msg: String): Unit = {
    getProducer.send(new ProducerRecord[String, String](topic, key, msg))
  }
}
```
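The two send overloads differ only in whether a key is attached. The keyed overload matters here because Kafka's default partitioner picks the partition from a hash of the key, so every change to the same row (same id) lands in the same partition and keeps its relative order. The dependency-free sketch below illustrates that property. `KeyedPartitioning`, `partitionFor` and `assign` are hypothetical names, and `String.hashCode` is only a stand-in for the murmur2 hash the real default partitioner applies to the serialized key bytes, so the concrete partition numbers will not match a real cluster.

```scala
// Hypothetical illustration of key-based partitioning; NOT Kafka's actual partitioner.
object KeyedPartitioning {
  // Stand-in hash: Kafka's default partitioner uses murmur2 over the key bytes instead.
  def partitionFor(key: String, numPartitions: Int): Int = {
    require(numPartitions > 0, "numPartitions must be positive")
    Math.floorMod(key.hashCode, numPartitions)
  }

  // Group (key, value) sends by target partition; groupBy keeps the original order
  // inside each group, mirroring per-partition ordering in Kafka.
  def assign(records: Seq[(String, String)], numPartitions: Int): Map[Int, Seq[(String, String)]] =
    records.groupBy { case (key, _) => partitionFor(key, numPartitions) }
}
```

For example, an insert and a later update of row "7" are assigned to the same partition, in the order they were sent, which is why BaseDBCanalApp passes the row id as the key.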

Add BaseDBCanalApp

In scala\com\atguigu\gmall\realtime\app\ods\BaseDBCanalApp:

```scala
package com.atguigu.gmall.realtime.app.ods

import com.alibaba.fastjson.{JSON, JSONArray, JSONObject}
import com.atguigu.gmall.realtime.utils.{MyKafkaSinkUtil, MyKafkaUtil, OffsetManagerUtil}
import org.apache.kafka.clients.consumer.ConsumerRecord
import org.apache.kafka.common.TopicPartition
import org.apache.spark.SparkConf
import org.apache.spark.streaming.dstream.{DStream, InputDStream}
import org.apache.spark.streaming.kafka010.{HasOffsetRanges, OffsetRange}
import org.apache.spark.streaming.{Seconds, StreamingContext}

object BaseDBCanalApp {

  def main(args: Array[String]): Unit = {
    val sparkConf: SparkConf = new SparkConf().setMaster("local[*]").setAppName("ods_base_db_canal_app")
    val ssc = new StreamingContext(sparkConf, Seconds(5))

    val topic = "ODS_DB_GMALL_C"
    val groupId = "base_db_canal_group"

    // If an offset for this group was read from Redis, resume consuming Kafka from it;
    // otherwise fall back to the consumer's default starting position (the latest data)
    val offset: Map[TopicPartition, Long] = OffsetManagerUtil.getOffset(groupId, topic)
    var inputDstream: InputDStream[ConsumerRecord[String, String]] = null
    if (offset != null && offset.nonEmpty) {
      inputDstream = MyKafkaUtil.getKafkaStream(topic, ssc, offset, groupId)
    } else {
      inputDstream = MyKafkaUtil.getKafkaStream(topic, ssc, groupId)
    }

    // Capture each batch's offset ranges (the transform function runs on the driver once per batch)
    var offsetRanges: Array[OffsetRange] = null
    val inputGetOffsetDstream: DStream[ConsumerRecord[String, String]] = inputDstream.transform { rdd =>
      offsetRanges = rdd.asInstanceOf[HasOffsetRanges].offsetRanges
      rdd
    }

    // Parse each Canal message into a JSON object
    val dbJsonObjDstream: DStream[JSONObject] = inputGetOffsetDstream.map { record =>
      JSON.parseObject(record.value())
    }

    // Core logic: split every changed row by table and send it to that table's topic
    dbJsonObjDstream.foreachRDD { rdd =>
      rdd.foreachPartition { jsonObjItr =>
        for (jsonObj <- jsonObjItr) {
          val dataArr: JSONArray = jsonObj.getJSONArray("data")
          // DDL messages from Canal carry no row data, so "data" can be null
          if (dataArr != null) {
            val sinkTopic = "ODS_T_" + jsonObj.getString("table").toUpperCase
            for (i <- 0 until dataArr.size()) {
              val dataJsonObj: JSONObject = dataArr.getJSONObject(i)
              val id: String = dataJsonObj.getString("id")
              // println(sinkTopic + ":" + dataJsonObj.toJSONString)
              // Send to Kafka, keyed by id so all changes to one row stay in one partition
              MyKafkaSinkUtil.send(sinkTopic, id, dataJsonObj.toJSONString)
            }
          }
        }
      }
      // Save offsets only after every partition of this batch has been processed
      OffsetManagerUtil.saveOffset(groupId, topic, offsetRanges)
    }

    ssc.start()
    ssc.awaitTermination()
  }
}
```
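BaseDBCanalApp saves offsets only after foreachPartition has finished, which gives at-least-once delivery: if a batch fails before saveOffset, it is read again on restart, so some rows may be sent twice. One caveat is that KafkaProducer.send is asynchronous, so records can still sit in the producer's buffer when the offsets are written; calling the producer's flush() at the end of each partition would be one way to close that gap. The dependency-free simulation below sketches that ordering. `BufferedSink`, `OffsetStore` and `AtLeastOnce.processBatch` are hypothetical stand-ins for MyKafkaSinkUtil, OffsetManagerUtil and the foreachRDD body, not real APIs, and the failure model is deliberately simplified.

```scala
import scala.collection.mutable

// Hypothetical producer stand-in: send() only buffers, flush() "delivers" the buffer.
class BufferedSink {
  private val buffer = mutable.ArrayBuffer.empty[(String, String)]
  val delivered = mutable.ArrayBuffer.empty[(String, String)]
  var failOnFlush = false

  def send(topic: String, value: String): Unit = buffer += (topic -> value)

  def flush(): Unit = {
    if (failOnFlush) throw new RuntimeException("simulated broker failure")
    delivered ++= buffer
    buffer.clear()
  }
}

// Hypothetical stand-in for the offset store (Redis in the original project).
class OffsetStore {
  var committed: Long = 0L
}

object AtLeastOnce {
  // Send records [committed, untilOffset) and commit untilOffset only after a successful flush.
  def processBatch(source: IndexedSeq[(String, String)], untilOffset: Long,
                   sink: BufferedSink, store: OffsetStore): Unit = {
    val from = store.committed.toInt
    for (i <- from until untilOffset.toInt) {
      val (topic, value) = source(i)
      sink.send(topic, value)
    }
    sink.flush()                  // throws if delivery fails, so the commit below is skipped
    store.committed = untilOffset // reached only when every record of the batch was delivered
  }
}
```

If the first attempt fails at flush(), the committed offset stays at 0 and the retried batch re-sends the same records; in this simulation the two records end up delivered twice. That duplication is the expected cost of at-least-once processing, which is one reason downstream consumers benefit from handling rows idempotently by id.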

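Change Kafka's default partition count and distribute the configuration to all brokers (note: broker.id must remain different on each broker)

In /opt/module/kafka_2.11-0.11.0.2/config/server.properties, set num.partitions (the default number of partitions for automatically created topics).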

Alternatively, change the partition count of the existing topic (Kafka only allows increasing it):

bin/kafka-topics.sh --zookeeper hadoop102:2181 --alter --topic ODS_DB_GMALL_C --partitions 12
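Increasing the partition count does not move existing data, and since a keyed record's partition is computed from the key's hash modulo the partition count, rows with the same id written before and after the change may end up in different partitions. Per-key ordering therefore only holds on each side of the change. The sketch below illustrates this with hypothetical helpers `partitionFor` and `movedKeys`; it uses `String.hashCode` as a stand-in for Kafka's murmur2 hash, so the exact partitions differ from a real cluster, but the remapping effect is the same in kind.

```scala
object PartitionGrowth {
  // Stand-in for keyed partitioning (the real default partitioner hashes key bytes with murmur2).
  def partitionFor(key: String, numPartitions: Int): Int =
    Math.floorMod(key.hashCode, numPartitions)

  // Keys whose target partition differs between the old and the new partition count.
  def movedKeys(keys: Seq[String], oldCount: Int, newCount: Int): Seq[String] =
    keys.filter(k => partitionFor(k, oldCount) != partitionFor(k, newCount))
}
```

For instance, `PartitionGrowth.movedKeys((1 to 100).map(_.toString), 4, 12)` contains "4" (hash 52: partition 0 of 4, partition 4 of 12) but not "1" (hash 49: partition 1 in both cases).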

Check the partitions:

bin/kafka-topics.sh --zookeeper hadoop102:2181 --describe --topic ODS_DB_GMALL_C

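Delete the topic (on Kafka 0.11 this only takes effect when delete.topic.enable=true is set in server.properties; otherwise the topic is only marked for deletion):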

bin/kafka-topics.sh --zookeeper hadoop102:2181 --delete --topic ODS_DB_GMALL_C

Running the Program

Start a consumer

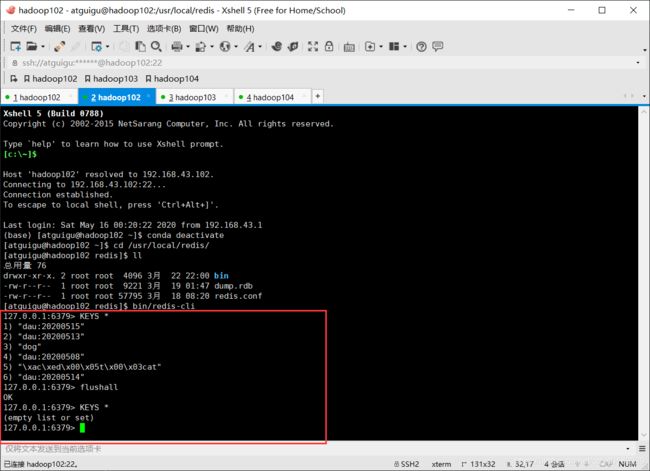

Clear the cache

Check the running processes

Test with data changes

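Splitting by Topic

Canal tracks changes across the entire database and sends every data change to a single topic. To simplify downstream processing, these records should be split by table and routed to a separate topic for each table.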
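The routing rule BaseDBCanalApp applies can be stated as: for every row in a Canal message's data array, send the row to "ODS_T_" plus the upper-cased table name, keyed by the row's id. The sketch below expresses that rule as a pure function so it can be tested without Spark, Kafka or fastjson. `CanalMessage`, `RoutedRecord` and `CanalRouter.route` are hypothetical names, rows are simplified to `Map[String, String]` instead of JSON objects, and a DDL message is assumed to arrive with no data (`None`), in which case nothing is routed.

```scala
// Simplified, hypothetical model of a Canal message: only the fields the router reads.
final case class CanalMessage(table: String, data: Option[Seq[Map[String, String]]])

// One outgoing record: target topic, key (the row id) and the row itself.
final case class RoutedRecord(topic: String, key: String, row: Map[String, String])

object CanalRouter {
  val TopicPrefix = "ODS_T_"

  // Fan out every row of one message to its per-table topic, keyed by the row id.
  def route(msg: CanalMessage): Seq[RoutedRecord] = {
    val topic = TopicPrefix + msg.table.toUpperCase
    msg.data.getOrElse(Seq.empty[Map[String, String]]).map { row =>
      // A row without an id gets a null key, as fastjson's getString("id") would return
      RoutedRecord(topic, row.get("id").orNull, row)
    }
  }
}
```

Keeping the rule in a pure function like this is one possible design choice: the Spark job would then only loop over `route(...)` results and call the sink, and the routing itself can be unit-tested in isolation.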