Elasticsearch and Kafka message capacity configuration: Result window is too large, from + size must be less than or equal

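By default, Elasticsearch lets a search reach at most 10000 results: from + size may not exceed index.max_result_window, which defaults to 10000.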

[2020-07-17T01:36:15,908][WARN ][r.suppressed ] path: /metaobject/objType/_search, params: {size=100000000, index=metaobject, from=0, type=objType}

org.elasticsearch.action.search.SearchPhaseExecutionException: all shards failed

at org.elasticsearch.action.search.AbstractSearchAsyncAction.onPhaseFailure(AbstractSearchAsyncAction.java:272) ~[elasticsearch-5.6.4.jar:5.6.4]

at org.elasticsearch.action.search.AbstractSearchAsyncAction.executeNextPhase(AbstractSearchAsyncAction.java:130) ~[elasticsearch-5.6.4.jar:5.6.4]

at org.elasticsearch.action.search.AbstractSearchAsyncAction.onPhaseDone(AbstractSearchAsyncAction.java:241) ~[elasticsearch-5.6.4.jar:5.6.4]

at org.elasticsearch.action.search.InitialSearchPhase.onShardFailure(InitialSearchPhase.java:107) ~[elasticsearch-5.6.4.jar:5.6.4]

at org.elasticsearch.action.search.InitialSearchPhase.access$100(InitialSearchPhase.java:49) ~[elasticsearch-5.6.4.jar:5.6.4]

at org.elasticsearch.action.search.InitialSearchPhase$2.lambda$onFailure$1(InitialSearchPhase.java:217) ~[elasticsearch-5.6.4.jar:5.6.4]

at org.elasticsearch.action.search.InitialSearchPhase.maybeFork(InitialSearchPhase.java:171) [elasticsearch-5.6.4.jar:5.6.4]

at org.elasticsearch.action.search.InitialSearchPhase.access$000(InitialSearchPhase.java:49) [elasticsearch-5.6.4.jar:5.6.4]

at org.elasticsearch.action.search.InitialSearchPhase$2.onFailure(InitialSearchPhase.java:217) [elasticsearch-5.6.4.jar:5.6.4]

at org.elasticsearch.action.ActionListenerResponseHandler.handleException(ActionListenerResponseHandler.java:51) [elasticsearch-5.6.4.jar:5.6.4]

at org.elasticsearch.transport.TransportService$ContextRestoreResponseHandler.handleException(TransportService.java:1077) [elasticsearch-5.6.4.jar:5.6.4]

at org.elasticsearch.transport.TransportService$DirectResponseChannel.processException(TransportService.java:1181) [elasticsearch-5.6.4.jar:5.6.4]

at org.elasticsearch.transport.TransportService$DirectResponseChannel.sendResponse(TransportService.java:1159) [elasticsearch-5.6.4.jar:5.6.4]

at org.elasticsearch.transport.TransportService$7.onFailure(TransportService.java:665) [elasticsearch-5.6.4.jar:5.6.4]

at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingAbstractRunnable.onFailure(ThreadContext.java:623) [elasticsearch-5.6.4.jar:5.6.4]

at org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:39) [elasticsearch-5.6.4.jar:5.6.4]

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149) [?:1.8.0_151]

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624) [?:1.8.0_151]

at java.lang.Thread.run(Thread.java:748) [?:1.8.0_151]

Caused by: org.elasticsearch.search.query.QueryPhaseExecutionException: Result window is too large, from + size must be less than or equal to: [10000] but was [100000000]. See the scroll api for a more efficient way to request large data sets. This limit can be set by changing the [index.max_result_window] index level setting.

at org.elasticsearch.search.DefaultSearchContext.preProcess(DefaultSearchContext.java:203) ~[elasticsearch-5.6.4.jar:5.6.4]

at org.elasticsearch.search.query.QueryPhase.preProcess(QueryPhase.java:95) ~[elasticsearch-5.6.4.jar:5.6.4]

at org.elasticsearch.search.SearchService.createContext(SearchService.java:497) ~[elasticsearch-5.6.4.jar:5.6.4]

at org.elasticsearch.search.SearchService.createAndPutContext(SearchService.java:461) ~[elasticsearch-5.6.4.jar:5.6.4]

at org.elasticsearch.search.SearchService.executeQueryPhase(SearchService.java:257) ~[elasticsearch-5.6.4.jar:5.6.4]

at org.elasticsearch.action.search.SearchTransportService$6.messageReceived(SearchTransportService.java:343) ~[elasticsearch-5.6.4.jar:5.6.4]

at org.elasticsearch.action.search.SearchTransportService$6.messageReceived(SearchTransportService.java:340) ~[elasticsearch-5.6.4.jar:5.6.4]

at org.elasticsearch.transport.RequestHandlerRegistry.processMessageReceived(RequestHandlerRegistry.java:69) ~[elasticsearch-5.6.4.jar:5.6.4]

at org.elasticsearch.transport.TransportService$7.doRun(TransportService.java:654) ~[elasticsearch-5.6.4.jar:5.6.4]

at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingAbstractRunnable.doRun(ThreadContext.java:638) ~[elasticsearch-5.6.4.jar:5.6.4]

at org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:37) ~[elasticsearch-5.6.4.jar:5.6.4]

... 3 more
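The rule behind this error is simple arithmetic. As a rough sketch (the helper name is made up for illustration and is not Elasticsearch's own code), the request in the log fails because from + size is larger than the default index.max_result_window of 10000:

```python
DEFAULT_MAX_RESULT_WINDOW = 10000  # default value of index.max_result_window


def fits_result_window(from_, size, max_result_window=DEFAULT_MAX_RESULT_WINDOW):
    """Return True if a search with these paging values would be accepted.

    Hypothetical helper that mirrors the rule stated in the error message:
    from + size must be less than or equal to max_result_window.
    """
    return from_ + size <= max_result_window


# The request from the log above: from=0, size=100000000
print(fits_result_window(0, 100000000))  # False
# A page that still fits inside the default window
print(fits_result_window(9900, 100))     # True
```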

Solution:

Change this setting to whatever upper limit you need (I set it straight to 2 billion, which should be enough). Run the following in Kibana:

PUT policy_document/_settings
{
  "index": {
    "max_result_window": 2000000000
  }
}

The same request as a command that can be run directly in bash:

root@test:~# curl -XPUT "http://localhost:9200/policy_document/_settings" -H 'Content-Type: application/json' -d'
> {
> "index":{
> "max_result_window":1000000
> }
> }'
{"acknowledged":true}
root@test:~#

The change also shows up in the Elasticsearch log:

docker logs -f elasticsearch

[2020-07-17T01:53:34,759][INFO ][o.e.c.s.IndexScopedSettings] [govZ15g] updating [index.max_result_window] from [10000] to [2000000000]

[2020-07-17T01:53:34,760][INFO ][o.e.c.s.IndexScopedSettings] [govZ15g] updating [index.max_rescore_window] from [10000] to [2000000000]

[2020-07-17T01:53:34,760][INFO ][o.e.c.s.IndexScopedSettings] [govZ15g] updating [index.max_result_window] from [10000] to [2000000000]

[2020-07-17T01:53:34,760][INFO ][o.e.c.s.IndexScopedSettings] [govZ15g] updating [index.max_rescore_window] from [10000] to [2000000000]

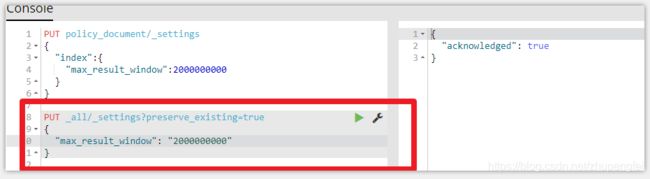

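The method above did not work for me. It seemed to affect only indices created afterwards, not existing ones. (A PUT to an index's _settings does update an existing index, so a likelier explanation may be that the request above targeted policy_document, while the failing search in the log was against metaobject.)

I then tried the method below, and it worked.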

PUT _all/_settings?preserve_existing=true
{
  "max_result_window": "2000000000"
}

Converted to a curl command:

curl -XPUT "http://elasticsearch:9200/_all/_settings?preserve_existing=true" -H 'Content-Type: application/json' -d'
{
"max_result_window": "2000000000"
}'
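To see which indices actually picked up the new value, the settings can be read back with GET <index>/_settings. The following is a minimal sketch, not part of the original steps: the function names and the default base URL are assumptions, and the sample response is hand-written to show the shape of the reply rather than copied from a real cluster.

```python
import json
import urllib.request


def result_windows(settings_response):
    """Map index name -> max_result_window from a GET _settings response.

    Indices that do not set the value explicitly are reported as None,
    which means they still use the default of 10000.
    """
    windows = {}
    for index_name, body in settings_response.items():
        index_settings = body.get("settings", {}).get("index", {})
        value = index_settings.get("max_result_window")
        windows[index_name] = int(value) if value is not None else None
    return windows


def fetch_result_windows(base_url="http://localhost:9200", index="_all"):
    """Fetch the settings over HTTP (base_url and index are assumptions)."""
    url = "{}/{}/_settings".format(base_url, index)
    with urllib.request.urlopen(url) as response:
        return result_windows(json.loads(response.read().decode("utf-8")))


# Hand-written sample in the shape Elasticsearch returns (illustrative only)
sample = {
    "policy_document": {"settings": {"index": {"max_result_window": "2000000000"}}},
    "metaobject": {"settings": {"index": {"number_of_shards": "5"}}},
}
print(result_windows(sample))
```

An index that reports None here was never updated, which would explain a search against it still failing with the 10000 limit.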

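Unfortunately, although the steps above did make the error message go away, the approach still failed in the end.

A single request for 100,000 records pushed the server's CPU to its limit, and it stopped responding.

So I changed the setting back.

The sensible solution is to enforce a limit in the application: do not allow requests for that many records, and fetch the data page by page instead.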
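For exports that really do need every document, the error message itself points to the scroll API. Here is a minimal sketch of that approach, assuming a plain HTTP client from the Python standard library; the function names, the page size of 1000, and the one-minute keep-alive are illustrative choices, not values from the original setup. Each request returns only one small batch, so no single search has to produce the whole result set.

```python
import json
import urllib.request


def http_post_json(url, body):
    """POST a JSON body and return the decoded JSON response."""
    request = urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read().decode("utf-8"))


def scroll_all(base_url, index, query, page_size=1000, keep_alive="1m",
               post=http_post_json):
    """Yield every hit for `query`, fetching `page_size` hits per request.

    `post` is injectable so the paging logic can be exercised without a
    running cluster.
    """
    # The first request opens the scroll context and returns the first batch
    page = post(
        "{}/{}/_search?scroll={}".format(base_url, index, keep_alive),
        {"size": page_size, "query": query},
    )
    while page["hits"]["hits"]:
        for hit in page["hits"]["hits"]:
            yield hit
        # Ask for the next batch using the scroll id from the last response
        page = post(
            "{}/_search/scroll".format(base_url),
            {"scroll": keep_alive, "scroll_id": page["_scroll_id"]},
        )


# Usage (assumed host; index name taken from the log at the top):
# for hit in scroll_all("http://localhost:9200", "metaobject", {"match_all": {}}):
#     print(hit["_id"])
```

The sketch leaves out error handling and does not clear the scroll context when it finishes; the context simply expires after the keep-alive period.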