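Dynamically Maintaining an IP Proxy Pool with Redis and Flask -- System Walkthrough & Code Analysis

This article analyzes the design and source code of the dynamic proxy pool myProxyPool (GitHub). The complete source is available for everyone to study and discuss, and corrections or suggestions for the project are very welcome.

Environments, modules, and services used in this article:

- Python3, macOS (local machine), Linux (Alibaba Cloud server running Ubuntu), Redis (proxy queue), Flask (API)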

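Contents

- 0 Demo
- 1 Prerequisites
  - 1.1 Project structure
  - 1.2 Redis service
    - 1.2.1 Starting the Redis service
    - 1.2.2 Configuration file
- 2 System requirements and module relationships
  - 2.1 What a dynamic proxy pool needs to do
  - 2.2 Relationships between the modules
  - 2.3 What the four main modules do
    - a. The Redis queue's "robotic arm" -- db.py
    - b. The user's "window" for valid IPs -- api.py
    - c. The "excavator" that fetches untested IPs in bulk -- getter.py
    - d. The "keeper" of the dynamic balance -- scheduler.py
- 3 Code walkthrough
  - 3.1 The Redis queue's "robotic arm" -- db.py
  - 3.2 The user's "window" for valid IPs -- api.py
  - 3.3 The "excavator" that fetches untested IPs in bulk -- getter.py
  - 3.4 The "keeper" of the dynamic balance -- scheduler.py
  - 3.5 The exception module and the settings.py configuration file
  - 3.6 Project entry point -- run.py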

0 Demo

- Connect remotely to the Alibaba Cloud server and run run.py in the myProxyPool directory, as shown in the figure:

1 前提

1.1 项目结构

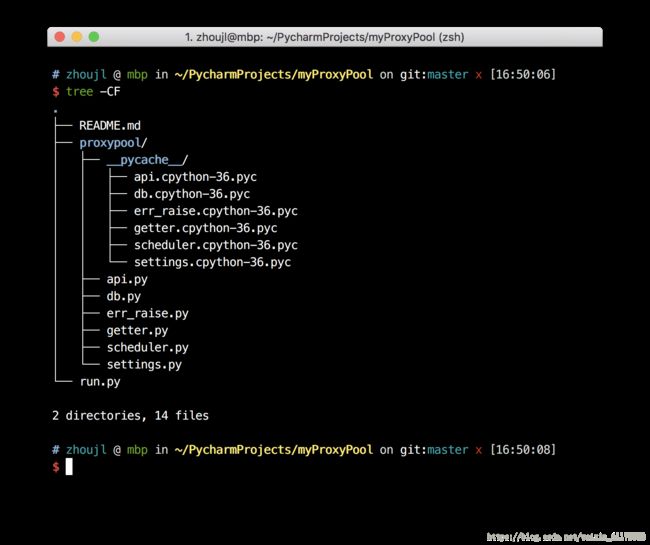

- 通过

tree -CF命令查看目录文件结构

- 由图可见,除了

__pycache__目录(内含缓存文件),项目的主要文件放在proxypool目录中,共包含六个模块,分别为:- api.py

- db.py

- err_raise.py

- getter.py

- scheduler.py

- settings.py

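1.2 Redis service

1.2.1 Starting the Redis service

    # Step 1: install the Redis service
    sudo apt-get install redis-server

    # Step 2: edit the configuration file redis.conf
    sudo vim /etc/redis/redis.conf

    # Step 3: start the Redis service with that configuration file
    redis-server /etc/redis/redis.conf

    # Step 4: check that the Redis process is running
    ps aux | grep "redis"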

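1.2.2 Configuration file

- Run as a daemon, so that the Redis service runs in the background:

    ################################ GENERAL #####################################

    # By default Redis does not run as a daemon. Use 'yes' if you need it.
    # Note that Redis will write a pid file in /var/run/redis.pid when daemonized.
    daemonize yes

- Do not bind to an IP address, so that any host can connect. The bind line stays commented out. On Redis 3.2 and later, protected mode still rejects remote clients unless a password is set (requirepass) or protected mode is turned off, so set a password before exposing the port:

    # By default Redis listens for connections from all the network interfaces
    # available on the server. It is possible to listen to just one or multiple
    # interfaces using the "bind" configuration directive, followed by one or
    # more IP addresses.
    #
    # Examples:
    #
    # bind 127.0.0.1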

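2 System requirements and module relationships

2.1 What a dynamic proxy pool needs to do

- Proxies available online fall into two groups, paid and free:
  - Paid providers expose API endpoints that return higher-quality, usable IPs, but the number of IPs and the speed at which you get them are tied closely to how much you pay.
  - Free proxies come from free-proxy listing sites. Eight or nine out of ten addresses scraped straight from such sites are dead or already expired, so they are hard to use directly.
- The proxy pool described here crawls proxies from several free-proxy sites and stores them in Redis. A Scheduler runs multiple processes that continuously monitor both the number and the quality of the IPs in the pool, so the pool keeps a certain number of IPs that actually work. A Flask API makes valid IPs available on demand and lets you check the pool size in real time.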

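2.2 Relationships between the modules

Of the six modules listed earlier, settings.py and err_raise.py only hold the connection settings for each service and the exception definitions. They are not functional modules, so they are left out of the discussion below; the complete code is in the GitHub repository. The remaining four modules (scheduler.py/db.py/getter.py/api.py), together with the Redis and Flask services, make up the dynamically updated IP proxy pool, as shown in the structure diagram: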

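2.3 What the four main modules do

a. The Redis queue's "robotic arm" -- db.py

- db.py sits at the center of the system and is tightly connected to every other module. It defines a single class, RedisClient, which provides a few general-purpose methods for operating on the Redis queue (put()/get_for_test()/pop_for_use() and others). The other modules instantiate it as a conn object that acts as the database's "robotic arm".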
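The queue discipline behind those three methods can be illustrated without a Redis server. The sketch below is a toy in-memory model, not the project's code: `InMemoryProxyQueue` is a hypothetical stand-in built on `collections.deque`, and it raises `LookupError` where the real class uses its own exception. It only shows which end of the queue each operation touches.

```python
from collections import deque


class InMemoryProxyQueue:
    """Toy stand-in for the Redis list; mirrors the method names described above."""

    def __init__(self):
        self._items = deque()

    @property
    def list_len(self):
        return len(self._items)

    def put(self, proxy):
        # Like RPUSH: fresh or re-validated proxies join the right end.
        self._items.append(proxy)

    def get_for_test(self, num=1):
        # Like LRANGE 0..num-1 followed by LTRIM: the oldest entries leave from the left.
        count = min(num, len(self._items))
        return [self._items.popleft() for _ in range(count)]

    def pop_for_use(self):
        # Like RPOP: hand out the most recently validated proxy.
        if not self._items:
            raise LookupError('pool is empty')
        return self._items.pop()


queue = InMemoryProxyQueue()
for proxy in ('1.1.1.1:80', '2.2.2.2:8080', '3.3.3.3:3128'):
    queue.put(proxy)

oldest = queue.get_for_test(2)   # the two oldest entries leave from the left
newest = queue.pop_for_use()     # the newest entry leaves from the right
```

Old entries are re-tested from the left while users are served from the right, so a user always receives the proxy that was validated most recently.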

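b. The user's "window" for valid IPs -- api.py

- api.py sits between db.py and the Flask interface: it is the Python code that defines the Flask service. Usable proxies are exposed through the URLs this service serves, so users can read and use them easily. The module is simple, has one clear job, and defines no new class, so its code is covered in detail later.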

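c. The "excavator" that fetches untested IPs in bulk -- getter.py

- The class diagram for getter.py shows the relationship between the two classes defined in the module. ProxyMetaclass is the metaclass of FreeProxyGetter, and its only job is to generate two attributes dynamically, both related to the methods that crawl free IPs (the code is analyzed in detail later). In practice, FreeProxyGetter is the only functional class in the module. It defines a group of crawl methods whose names start with crawl_, and its main method get_raw_proxies() is called once per crawl method to collect a batch of raw_proxies, that is, proxies that have not yet been checked for validity. Each raw proxy that passes the ValidityTester check in scheduler.py (described below) is then appended to the Redis queue through RedisClient().put().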

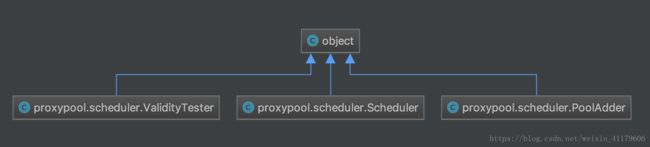
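To make the metaclass idea concrete, here is a minimal sketch of the same pattern. The names `CrawlRegistryMeta`, `DemoGetter`, `crawl_site_a`, and `crawl_site_b` are invented for illustration and the "crawlers" just yield hard-coded strings; only the attribute names `__CrawlFunc__` and `__CrawlFuncCount__` follow the article.

```python
class CrawlRegistryMeta(type):
    """Collects the names of all methods whose name starts with 'crawl_'."""

    def __new__(mcs, name, bases, attrs):
        crawl_names = [key for key in attrs if key.startswith('crawl_')]
        attrs['__CrawlFunc__'] = crawl_names
        attrs['__CrawlFuncCount__'] = len(crawl_names)
        return super().__new__(mcs, name, bases, attrs)


class DemoGetter(metaclass=CrawlRegistryMeta):
    def crawl_site_a(self):
        yield '1.1.1.1:80'

    def crawl_site_b(self):
        yield '2.2.2.2:8080'

    def helper(self):
        return 'not a crawler'

    def get_raw_proxies(self, callback):
        # Look the crawl method up by name and drain its generator.
        return list(getattr(self, callback)())


getter = DemoGetter()
collected = []
for name in getter.__CrawlFunc__:
    collected.extend(getter.get_raw_proxies(name))
```

With this pattern, supporting a new proxy site only requires adding another `crawl_` method; the registry picks it up when the class is created.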

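d. The "keeper" of the dynamic balance -- scheduler.py

- ValidityTester: the IP validity tester class, instantiated as the **tester object**.
- PoolAdder: the class that maintains the pool size, instantiated as the **adder object**.
- Scheduler: the scheduler class. It has two static methods (built around tester and adder respectively) and a run() method that starts two parallel processes, one maintaining validity and the other maintaining the pool size.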
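The tester checks many proxies concurrently rather than one after another. The sketch below shows that concurrency pattern with asyncio only. It is an illustration under assumptions: `fake_check` is a made-up stand-in for a real HTTP request through the proxy, and its "only port 80 works" rule is arbitrary.

```python
import asyncio


async def fake_check(proxy):
    """Hypothetical stand-in for a real HTTP request sent through the proxy."""
    await asyncio.sleep(0.01)          # simulate network latency
    return proxy.endswith(':80')       # arbitrary rule: only port 80 "works"


async def filter_valid(proxies, check=fake_check):
    # Start all checks at once; gather() returns the results in input order.
    results = await asyncio.gather(*(check(p) for p in proxies))
    return [p for p, ok in zip(proxies, results) if ok]


candidates = ['1.1.1.1:80', '2.2.2.2:8080', '3.3.3.3:80']
valid = asyncio.run(filter_valid(candidates))
```

Because the checks overlap, the whole batch takes roughly as long as the slowest single check, which matters when most free proxies simply time out.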

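3 Code walkthrough

3.1 The Redis queue's "robotic arm" -- db.py

    import redis

    from proxypool.err_raise import PoolEmptyError
    from proxypool.settings import REDIS__LIST_NAME, REDIS_HOST, REDIS_PASSWORD, REDIS_PORT


    class RedisClient(object):
        """
        Redis connection class: connects to Redis and manipulates the proxy list stored there.
        """

        def __init__(self, host=REDIS_HOST, port=REDIS_PORT):
            """
            Connect to the Redis database.
            :param host: database IP address
            :param port: database port
            """
            if REDIS_PASSWORD:
                self._db = redis.Redis(host=host, port=port, password=REDIS_PASSWORD)
            else:
                self._db = redis.Redis(host=host, port=port)

        @property
        def list_len(self):
            """
            Get the queue length.
            :return: the length of the queue
            """
            return self._db.llen(REDIS__LIST_NAME)

        def flush(self):
            """
            Empty the queue.
            """
            # delete() removes only the proxy list; the original flushall()
            # wiped every key in every database on the server.
            self._db.delete(REDIS__LIST_NAME)

        def get_for_test(self, num=1):
            """
            Take num proxies from the left end of the Redis queue for validity testing.
            :param num: number of proxies to take at once, 1 by default
            :return: a list of the proxies taken (bytes)
            """
            # Run LRANGE and LTRIM in one MULTI/EXEC transaction so that no other
            # client can modify the list between the read and the trim.
            pipe = self._db.pipeline()
            pipe.lrange(REDIS__LIST_NAME, 0, num - 1)
            pipe.ltrim(REDIS__LIST_NAME, num, -1)
            proxies_to_get, _ = pipe.execute()
            return proxies_to_get

        def put(self, proxy):
            """
            Append one proxy to the right end of the Redis queue.
            :param proxy: the proxy to insert (bytes or str)
            """
            self._db.rpush(REDIS__LIST_NAME, proxy)

        def pop_for_use(self):
            """
            Take one usable proxy from the right end of the Redis queue.
            """
            # rpop() returns None on an empty list instead of raising,
            # so the empty case has to be detected explicitly.
            proxy = self._db.rpop(REDIS__LIST_NAME)
            if proxy is None:
                raise PoolEmptyError
            return proxy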

3.2 The user's "window" for valid IPs -- api.py

    from flask import Flask, g

    from proxypool.db import RedisClient
    from proxypool.settings import FLASK_HOST, FLASK_PORT

    # __all__ = ['app']

    app = Flask(__name__)


    def get_conn():
        """
        Open a Redis connection, or return the existing one.
        :return: a RedisClient stored on Flask's application-context object g
        """
        if not hasattr(g, 'redis_client'):
            g.redis_client = RedisClient()
        return g.redis_client


    welcome_page = """
    Welcome to zhoujl's Proxy Pool ~ ^_^ ~
    """


    @app.route('/')
    def index():
        """
        Welcome page.
        """
        return welcome_page


    @app.route('/get')
    def get_proxy():
        """
        Return one proxy, popped from the right end of the queue.
        """
        conn = get_conn()
        return conn.pop_for_use()


    @app.route('/count')
    def get_counts():
        """
        Return the length of the proxy queue.
        """
        conn = get_conn()
        return str(conn.list_len)
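On the consumer side, a crawler would typically call `/get` and plug the result into its HTTP library. The following is a minimal sketch under assumptions: the helper names `fetch_proxy` and `as_requests_proxies` and the `opener` parameter are invented here (the `opener` hook exists only so the function can be exercised without a running server), and the base URL is whatever address the Flask service is reachable at.

```python
from urllib.request import urlopen


def fetch_proxy(base_url, opener=urlopen):
    """Ask the pool's /get endpoint for one proxy and return it as 'host:port'."""
    with opener(base_url.rstrip('/') + '/get', timeout=5) as resp:
        return resp.read().decode('utf-8').strip()


def as_requests_proxies(proxy):
    """Build the mapping that the requests library accepts via its `proxies` argument."""
    return {'http': 'http://' + proxy, 'https': 'http://' + proxy}
```

A caller could then write something like `requests.get(url, proxies=as_requests_proxies(fetch_proxy('http://<server>:5000')))`, where `<server>` is the host running the pool.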

3.3 The "excavator" that fetches untested IPs in bulk -- getter.py

    import requests
    from bs4 import BeautifulSoup
    from bs4.element import Tag

    from proxypool.settings import HEADERS


    class ProxyMetaclass(type):
        """
        Metaclass that adds two attributes to FreeProxyGetter:
        __CrawlFunc__: list of the names of the crawl methods
        __CrawlFuncCount__: number of crawl methods, i.e. the length of that list
        """

        def __new__(cls, name, bases, attrs):
            count = 0
            attrs['__CrawlFunc__'] = []
            for key, value in attrs.items():
                # Register every method whose name starts with crawl_
                if key.startswith('crawl_'):
                    attrs['__CrawlFunc__'].append(key)
                    count += 1
            attrs['__CrawlFuncCount__'] = count
            return type.__new__(cls, name, bases, attrs)


    class FreeProxyGetter(object, metaclass=ProxyMetaclass):

        def get_raw_proxies(self, callback):
            proxies = []
            print('Callback: {}'.format(callback))
            # getattr() looks the crawl method up by name without resorting to eval()
            for proxy in getattr(self, callback)():
                print('Getting {} from {}'.format(proxy, callback))
                proxies.append(proxy)
            return proxies

        def crawl_xicidaili(self):
            base_url = 'http://www.xicidaili.com/wt/{page}'
            # range(1, 2) crawls page 1 only; widen the range to crawl more pages
            for page in range(1, 2):
                resp = requests.get(url=base_url.format(page=page), headers=HEADERS)
                if resp.status_code != 200:
                    print('Error status code: {}'.format(resp.status_code))
                else:
                    soup = BeautifulSoup(resp.text, 'lxml')
                    ip_list = soup.find(id='ip_list')
                    if ip_list is None:
                        # Page layout changed or the request was blocked
                        continue
                    for child in ip_list.children:
                        # Skip the whitespace strings between table rows
                        if isinstance(child, Tag):
                            ip_host = child.contents[3].string
                            ip_port = child.contents[5].string
                            ip = '{host}:{port}'.format(host=ip_host, port=ip_port)
                            # The header row does not start with a digit
                            if ip[0].isdigit():
                                yield ip

        # Other methods for raw proxies...
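The crawler above accepts any scraped string that starts with a digit. If a stricter filter is wanted before spending a network round trip on testing, a format check along the following lines is one option. This is a sketch, not part of the project: `is_plausible_proxy` is a hypothetical helper, and it assumes proxies are written as `IPv4:port`.

```python
import ipaddress


def is_plausible_proxy(candidate):
    """Return True if candidate looks like 'IPv4:port' with a port in 1-65535."""
    host, sep, port = candidate.partition(':')
    if not sep or not port.isdecimal():
        return False
    try:
        ipaddress.IPv4Address(host)   # raises ValueError for malformed addresses
    except ValueError:
        return False
    return 1 <= int(port) <= 65535
```

A check like this only rejects malformed strings; whether the proxy actually works is still decided by the validity tester.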

3.4 The "keeper" of the dynamic balance -- scheduler.py

    import asyncio
    import time
    from multiprocessing import Process

    import aiohttp
    from aiohttp import ClientConnectionError as ProxyConnectionError, ServerDisconnectedError, ClientResponseError, \
        ClientConnectorError

    from proxypool.db import RedisClient
    from proxypool.err_raise import ResourceDepletionError
    from proxypool.getter import FreeProxyGetter
    from proxypool.settings import TEST_API, GET_PROXIES_TIMEOUT
    from proxypool.settings import VALID_CHECK_CYCLE, POOL_LEN_CHECK_CYCLE
    from proxypool.settings import POOL_LOWER_THRESHOLD, POOL_UPPER_THRESHOLD


    class ValidityTester(object):
        test_api = TEST_API

        def __init__(self):
            self._raw_proxies = None
            self._valid_proxies = []

        def set_raw_proxies(self, proxies):
            self._raw_proxies = proxies
            self._conn = RedisClient()

        async def test_single_proxies(self, proxy):
            """
            Test one proxy (taken from self._raw_proxies); if it passes, push it onto the Redis queue.
            :param proxy: the proxy to test
            :return:
            """
            if isinstance(proxy, bytes):
                proxy = proxy.decode('utf8')
            # Session-level failures surface as ServerDisconnectedError,
            # ClientConnectorError, ClientResponseError and similar exceptions
            try:
                async with aiohttp.ClientSession() as session:
                    # The session is open; now check the proxy itself.
                    # A dead proxy raises a connection error, a timeout, or ValueError.
                    try:
                        async with session.get(url=self.test_api, proxy='http://{}'.format(proxy),
                                               timeout=aiohttp.ClientTimeout(total=GET_PROXIES_TIMEOUT)) as response:
                            if response.status == 200:
                                self._conn.put(proxy)
                                print('Valid proxy: {}'.format(proxy))
                    # aiohttp reports timeouts as asyncio.TimeoutError, which is
                    # the built-in TimeoutError only from Python 3.11 on
                    except (ProxyConnectionError, asyncio.TimeoutError, TimeoutError, ValueError):
                        print('Invalid proxy: {}'.format(proxy))
            except (ServerDisconnectedError, ClientConnectorError, ClientResponseError) as s:
                print(s)

        def test(self):
            """
            Test the validity of all raw proxies.
            """
            print('ValidityTester is working...')
            if not self._raw_proxies:
                return  # nothing to test

            async def test_all():
                # gather() runs all checks concurrently; asyncio.wait() no longer
                # accepts bare coroutines on Python 3.11+
                await asyncio.gather(*(self.test_single_proxies(proxy) for proxy in self._raw_proxies))

            asyncio.run(test_all())


    class PoolAdder(object):

        def __init__(self, upper_threshold):
            self._upper_threshold = upper_threshold
            self._conn = RedisClient()
            self._tester = ValidityTester()
            self._crawler = FreeProxyGetter()

        def over_upper_threshold(self):
            """
            Check whether the pool has reached its upper limit.
            """
            return self._conn.list_len >= self._upper_threshold

        def add_to_pool(self):
            print('PoolAdder is working...')
            raw_proxies_count = 0
            while not self.over_upper_threshold():
                for callback_label in range(self._crawler.__CrawlFuncCount__):
                    callback = self._crawler.__CrawlFunc__[callback_label]
                    raw_proxies = self._crawler.get_raw_proxies(callback=callback)
                    self._tester.set_raw_proxies(raw_proxies)
                    self._tester.test()
                    raw_proxies_count += len(raw_proxies)
                    if self.over_upper_threshold():
                        print('IPs are enough, waiting to be used')
                        break
                # No source returned anything: the free sites are exhausted
                if raw_proxies_count == 0:
                    raise ResourceDepletionError


    class Scheduler(object):

        @staticmethod
        def test_proxies(cycle=VALID_CHECK_CYCLE):
            """
            Re-check the left (older) half of the proxy queue: invalid proxies are
            dropped, valid ones are pushed back onto the right end.
            :param cycle: check interval in seconds
            """
            conn = RedisClient()
            tester = ValidityTester()
            while True:
                print('testing & refreshing ips...')
                count = int(0.5 * conn.list_len)
                if count == 0:
                    print('0 ip, waiting for adding...')
                    time.sleep(cycle)
                    continue
                raw_proxies = conn.get_for_test(count)
                tester.set_raw_proxies(raw_proxies)
                tester.test()
                time.sleep(cycle)

        @staticmethod
        def check_pool(lower_threshold=POOL_LOWER_THRESHOLD,
                       upper_threshold=POOL_UPPER_THRESHOLD,
                       cycle=POOL_LEN_CHECK_CYCLE):
            conn = RedisClient()
            adder = PoolAdder(upper_threshold)
            while True:
                # Refill only when the pool drops below the lower threshold
                if conn.list_len < lower_threshold:
                    adder.add_to_pool()
                time.sleep(cycle)

        def run(self):
            print('IP scheduler is running...')
            valid_process = Process(target=Scheduler.test_proxies)
            check_process = Process(target=Scheduler.check_pool)
            valid_process.start()
            check_process.start()
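One pass of the validity loop can be simulated in memory to see how the queue rotates. The sketch below is only a model of that behavior: `refresh_oldest_half` is a hypothetical helper, a `deque` stands in for the Redis list, and `is_valid` replaces the real network test.

```python
from collections import deque


def refresh_oldest_half(pool, is_valid):
    """Re-test the older (left) half of the pool; survivors rejoin on the right."""
    count = len(pool) // 2
    batch = [pool.popleft() for _ in range(count)]
    survivors = [proxy for proxy in batch if is_valid(proxy)]
    pool.extend(survivors)
    return len(batch) - len(survivors)   # number of proxies dropped


pool = deque(['a:80', 'b:80', 'c:80', 'd:80', 'e:80'])
dead = {'b:80'}
dropped = refresh_oldest_half(pool, lambda proxy: proxy not in dead)
```

After the pass, the surviving proxy `a:80` sits at the right end as the freshest entry, and the untested `c:80` has moved to the front of the line for the next cycle.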

3.5 The exception module and the settings.py configuration file

- Custom exceptions

    class PoolEmptyError(Exception):

        def __init__(self):
            # super() needs parentheses; the original super.__init__(self) raised a TypeError
            super().__init__()

        def __str__(self):
            return repr('Pool is EMPTY!')


    class ResourceDepletionError(Exception):

        def __init__(self):
            super().__init__()

        def __str__(self):
            return repr('The proxy source is exhausted, please add new websites for more ip.')

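- Configuration file settings.py

    # Redis service
    REDIS_HOST = '*.*.*.*'  # host address of the Redis service
    REDIS_PORT = 6379
    REDIS_PASSWORD = ''  # fill in the password if there is one; otherwise leave empty
    REDIS__LIST_NAME = "proxies"  # queue name, can be customized

    # Flask service
    FLASK_HOST = '0.0.0.0'  # '0.0.0.0' lets other hosts reach the API by address
    FLASK_PORT = 5000
    DEBUG = True

    # HTTP request headers
    User_Agent = ''  # put your own User-Agent here
    HEADERS = {
        'User-Agent': User_Agent,
    }

    # URL used to test proxies
    TEST_API = 'http://www.baidu.com'

    # Timeout for a proxy test (s)
    GET_PROXIES_TIMEOUT = 5

    # Interval between proxy validity checks (s)
    VALID_CHECK_CYCLE = 60

    # Interval between pool size checks (s)
    POOL_LEN_CHECK_CYCLE = 20

    # Upper limit on the number of IPs in the pool
    POOL_UPPER_THRESHOLD = 150

    # Lower limit on the number of IPs in the pool
    POOL_LOWER_THRESHOLD = 30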
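The two thresholds work as a hysteresis band: crawling starts only when the pool falls below the lower limit and then continues until the upper limit is reached. The sketch below traces that rule over a series of observed pool sizes. `refill_states` is a hypothetical function written for this illustration; the defaults 30 and 150 are the values from the settings shown above.

```python
def refill_states(sizes, lower=30, upper=150):
    """For each observed pool size, report whether the adder would be crawling."""
    refilling = False
    states = []
    for size in sizes:
        if not refilling and size < lower:
            refilling = True       # pool ran low: start crawling
        elif refilling and size >= upper:
            refilling = False      # pool is full again: stop crawling
        states.append(refilling)
    return states


states = refill_states([100, 29, 80, 149, 150, 100, 30])
```

The gap between the two limits keeps the crawler from being switched on and off repeatedly as the pool hovers around a single threshold.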

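3.6 Project entry point -- run.py

    from proxypool.api import app
    from proxypool.scheduler import Scheduler
    from proxypool.settings import FLASK_HOST, FLASK_PORT, DEBUG


    def main():
        s = Scheduler()
        s.run()
        # By default Flask only accepts connections from the local machine;
        # for remote access, host and port must be passed to app.run() explicitly.
        # use_reloader=False keeps debug mode from re-executing this script in a
        # reloader child process, which would start a second pair of scheduler processes.
        app.run(host=FLASK_HOST, port=FLASK_PORT, debug=DEBUG, use_reloader=False)


    if __name__ == '__main__':
        main()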