Prerequisite: a quick introduction to `minimize`

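from scipy.optimize import minimize
import numpy as np

def fun(args):
    # Build the objective f(x) = a / x + x for a given constant a
    a = args
    v = lambda x: a / x[0] + x[0]  # minimize passes x as a 1-D array
    return v

if __name__ == "__main__":
    args = 1                # the constant a; note that (1) is just the int 1, not a tuple
    x0 = np.asarray([2.0])  # initial guess for x
    res = minimize(fun(args), x0, method='SLSQP')
    print(res.fun)      # minimum value found
    print(res.success)  # whether the optimizer reports convergence
    print(res.x)        # the x that attains the minimum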
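To know what `res.fun` and `res.x` should approach, the minimum of this objective can be worked out by hand. This assumes x > 0: the objective is undefined at x = 0 and the call above passes no bounds, so it relies on SLSQP staying on the positive side when starting from x0 = 2.

$$
f(x) = \frac{a}{x} + x,\qquad f'(x) = 1 - \frac{a}{x^{2}} = 0 \;\Rightarrow\; x^{*} = \sqrt{a}\ (x>0),\qquad f(x^{*}) = 2\sqrt{a},\qquad f''(x) = \frac{2a}{x^{3}} > 0
$$

With a = 1 this gives x* = 1 and f(x*) = 2, and the positive second derivative confirms it is a minimum.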

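# TensorFlow 1.x API (tf.placeholder, tf.layers, tf.Session); the
# tensorflow.examples.tutorials MNIST helper is not shipped with TensorFlow 2.x
import tensorflow as tf
import numpy as np
import matplotlib.pyplot as plt
%matplotlib inline
# (Jupyter/IPython magic above; drop it in a plain .py script)
from tensorflow.examples.tutorials.mnist import input_data

# Load MNIST (downloaded into MNIST_data/ if missing); the labels are not used by the GAN
mnist = input_data.read_data_sets('MNIST_data', one_hot=True)

# Real images: flattened 28x28 = 784 pixel values in [0, 1]
real_img = tf.placeholder(tf.float32, [None, 784], name='real_img')
# Generator input: a 100-dimensional noise vector
noise_img = tf.placeholder(tf.float32, [None, 100], name='noise_img')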
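The placeholders expect each image as a flat 784-vector and each noise sample as a 100-vector. The sketch below uses a random array as a hypothetical stand-in for a real MNIST digit to show the flatten/reshape round trip and the noise batch shape that the training loop feeds in later.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-in for one MNIST image: 28x28 grayscale values in [0, 1)
image = rng.random((28, 28)).astype(np.float32)

# Flattening row by row gives the 784-dim vector fed to real_img
flat = image.reshape(-1)
print(flat.shape)  # (784,)

# A noise batch like the one fed to noise_img: uniform in [-1, 1)
batch_size, noise_size = 32, 100
batch_noise = rng.uniform(-1, 1, size=(batch_size, noise_size))
print(batch_noise.shape)  # (32, 100)

# reshape((28, 28)) undoes the flattening exactly, which plot_images relies on
print(np.array_equal(flat.reshape((28, 28)), image))  # True
```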

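def generator(noise_img, hidden_units, out_dim, reuse=False):
    # Variables live under the "generator" scope so they can be selected by name later
    with tf.variable_scope("generator", reuse=reuse):
        # Hidden layer: fully connected + ReLU
        hidden1 = tf.layers.dense(noise_img, hidden_units)
        hidden1 = tf.nn.relu(hidden1)
        # Output layer: one unit per pixel; sigmoid maps to (0, 1) like the MNIST pixels
        logits = tf.layers.dense(hidden1, out_dim)
        outputs = tf.nn.sigmoid(logits)
        return logits, outputs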
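As a rough illustration of what `generator` computes, here is a NumPy forward pass with the same layer structure (dense, ReLU, dense, sigmoid). The weights are hypothetical small random values; `tf.layers.dense` uses its own default initializer and learns the weights during training, so only the shapes and the output range carry over.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def generator_forward(z, w1, b1, w2, b2):
    """Same layer structure as generator(): dense -> ReLU -> dense -> sigmoid."""
    hidden = relu(z @ w1 + b1)
    logits = hidden @ w2 + b2
    return logits, sigmoid(logits)

rng = np.random.default_rng(0)
noise_size, hidden_units, img_size = 100, 128, 784
# Hypothetical random weights, not trained values
w1 = rng.normal(0, 0.05, (noise_size, hidden_units)); b1 = np.zeros(hidden_units)
w2 = rng.normal(0, 0.05, (hidden_units, img_size)); b2 = np.zeros(img_size)

z = rng.uniform(-1, 1, size=(25, noise_size))
logits, outputs = generator_forward(z, w1, b1, w2, b2)
print(outputs.shape)  # (25, 784)
```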

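def discriminator(img, hidden_units, reuse=False):
    # In WGAN this network acts as a "critic": it outputs an unbounded real-valued
    # score, so unlike a standard GAN discriminator there is no sigmoid at the end
    with tf.variable_scope("discriminator", reuse=reuse):
        hidden1 = tf.layers.dense(img, hidden_units)
        hidden1 = tf.nn.relu(hidden1)
        outputs = tf.layers.dense(hidden1, 1)
        return outputs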
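Because the last layer has no sigmoid, the critic's score is not confined to (0, 1). A small NumPy sketch with the same structure, using hypothetical random weights and zero biases, makes this concrete: with zero biases the ReLU network is positively homogeneous, so scaling the input by 10 scales the score by 10.

```python
import numpy as np

def critic_forward(x, w1, b1, w2, b2):
    """Same structure as discriminator(): dense -> ReLU -> dense(1), no sigmoid."""
    hidden = np.maximum(x @ w1 + b1, 0.0)
    return hidden @ w2 + b2  # shape (batch, 1), any real value

rng = np.random.default_rng(1)
img_size, hidden_units = 784, 128
# Hypothetical random weights with zero biases
w1 = rng.normal(0, 0.05, (img_size, hidden_units)); b1 = np.zeros(hidden_units)
w2 = rng.normal(0, 0.05, (hidden_units, 1)); b2 = np.zeros(1)

x = rng.random((4, img_size))
scores = critic_forward(x, w1, b1, w2, b2)
print(scores.shape)  # (4, 1)

# Scaling the input scales the score: nothing caps it at 1
scaled = critic_forward(10 * x, w1, b1, w2, b2)
print(np.allclose(scaled, 10 * scores))  # True
```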

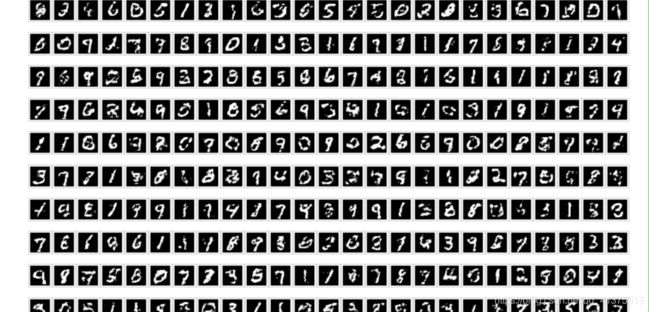

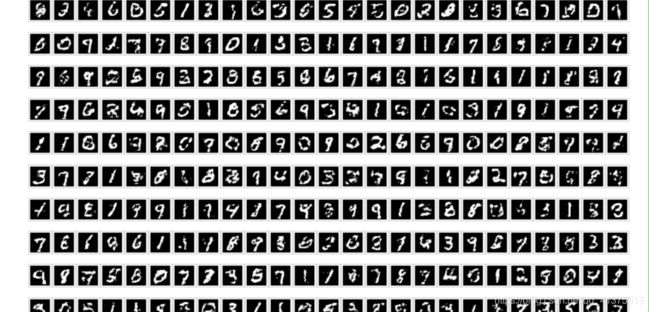

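def plot_images(samples):
    # Show the generated samples side by side in a single row
    fig, axes = plt.subplots(nrows=1, ncols=25, sharex=True, sharey=True, figsize=(50, 2))
    for img, ax in zip(samples, axes):
        # Each sample is a flat 784-vector; reshape it back to 28x28 for display
        ax.imshow(img.reshape((28, 28)), cmap='Greys_r')
        ax.get_xaxis().set_visible(False)
        ax.get_yaxis().set_visible(False)
    fig.tight_layout(pad=0)
    plt.show()  # display now instead of piling up open figures inside the training loop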
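One row of 25 tiles is very wide. A possible alternative layout, sketched here with a hypothetical helper `tile_images` (not part of the original code), packs the flat samples into a single square grid image with NumPy reshapes and a transpose.

```python
import numpy as np

def tile_images(samples, rows, cols, size=28):
    """Arrange rows * cols flat samples into one (rows*size, cols*size) image."""
    imgs = np.asarray(samples).reshape(rows, cols, size, size)
    # (rows, cols, size, size) -> (rows, size, cols, size) -> 2-D grid
    return imgs.transpose(0, 2, 1, 3).reshape(rows * size, cols * size)

samples = np.random.default_rng(0).random((25, 784))
grid = tile_images(samples, rows=5, cols=5)
print(grid.shape)  # (140, 140)
# The top-left tile is the first sample
print(np.array_equal(grid[:28, :28], samples[0].reshape(28, 28)))  # True
```

The resulting `grid` could then be shown with a single `plt.imshow(grid, cmap='Greys_r')` call.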

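img_size = 784         # 28 * 28 flattened MNIST image
noise_size = 100       # dimension of the generator's input noise
hidden_units = 128     # width of the single hidden layer in both networks
learning_rate = 0.0001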

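# The generator maps noise to images
g_logits, g_outputs = generator(noise_img, hidden_units, img_size)

# The critic scores real and generated images with shared weights (reuse=True)
d_real = discriminator(real_img, hidden_units)
d_fake = discriminator(g_outputs, hidden_units, reuse=True)

# WGAN losses: the critic wants E[D(real)] - E[D(fake)] to be large;
# the generator wants its samples to score high, i.e. it minimizes -E[D(fake)]
d_loss = tf.reduce_mean(d_real) - tf.reduce_mean(d_fake)
g_loss = -tf.reduce_mean(d_fake)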
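These two losses are batch estimates of the WGAN objective, in which the critic approximates the Kantorovich-Rubinstein dual form of the Wasserstein-1 distance, $W(P_r, P_g) = \sup_{\|f\|_L \le 1} \mathbb{E}_{x\sim P_r}[f(x)] - \mathbb{E}_{x\sim P_g}[f(x)]$. A minimal NumPy mirror of the two expressions, applied to hypothetical critic scores, shows the arithmetic.

```python
import numpy as np

def wgan_losses(d_real, d_fake):
    """Mirror of d_loss and g_loss above, computed on critic scores."""
    d_loss = np.mean(d_real) - np.mean(d_fake)  # the critic maximizes this
    g_loss = -np.mean(d_fake)                   # the generator minimizes this
    return d_loss, g_loss

# Hypothetical critic scores for 2 real and 2 generated images
d_real = np.array([1.0, 3.0])   # mean 2.0
d_fake = np.array([0.5, 1.5])   # mean 1.0
d_loss, g_loss = wgan_losses(d_real, d_fake)
print(d_loss, g_loss)  # 1.0 -1.0
```

If the generator improved so that its samples also averaged a score of 2.0, `d_loss` would drop to 0.0: the critic could no longer separate the two batches by mean score.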

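# Split trainable variables by scope name so each optimizer only updates its own network
train_vars = tf.trainable_variables()
g_vars = [var for var in train_vars if var.name.startswith("generator")]
d_vars = [var for var in train_vars if var.name.startswith("discriminator")]

# RMSProp, as in the original WGAN paper; minimizing -d_loss maximizes d_loss for the critic
d_train_opt = tf.train.RMSPropOptimizer(learning_rate).minimize(-d_loss, var_list=d_vars)
g_train_opt = tf.train.RMSPropOptimizer(learning_rate).minimize(g_loss, var_list=g_vars)

# Weight clipping: force every critic parameter into [-0.01, 0.01], a crude way to
# keep the critic (approximately) Lipschitz
clip_d = [p.assign(tf.clip_by_value(p, -0.01, 0.01)) for p in d_vars]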
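What `clip_d` does to each critic parameter can be sketched with `np.clip` on a hypothetical weight vector. For the simplest case of a linear score f(x) = w · x, clipping every weight to [-c, c] bounds how fast the score can change, |f(x) - f(y)| ≤ c · ‖x - y‖₁, which is the kind of Lipschitz control WGAN needs. The multi-layer critic above gets a looser bound of the same flavor.

```python
import numpy as np

clip = 0.01
rng = np.random.default_rng(0)
# Hypothetical critic weights after an optimizer step, some outside the box
w = rng.normal(0, 0.02, size=784)
w_clipped = np.clip(w, -clip, clip)  # same effect as tf.clip_by_value + assign

print(np.abs(w_clipped).max() <= clip)  # True
# Weights already inside the box are left unchanged
inside = np.abs(w) <= clip
print(np.array_equal(w_clipped[inside], w[inside]))  # True

# For a linear score f(x) = w . x: |f(x) - f(y)| <= clip * ||x - y||_1
x, y = rng.random(784), rng.random(784)
print(abs(w_clipped @ (x - y)) <= clip * np.abs(x - y).sum())  # True
```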

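batch_size = 32
n_sample = 25   # number of images shown by plot_images
samples = []    # most recently generated samples
losses = []     # history of (critic loss, generator loss)
# Only the generator's variables are saved; that is all that is needed to sample later
saver = tf.train.Saver(var_list=g_vars)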

import os
os.makedirs('./checkpoints', exist_ok=True)  # Saver.save needs the parent directory to exist

with tf.Session() as sess:
    sess.run(tf.global_variables_initializer())
    for it in range(1000000):
        # Train the critic 5 times per generator update (n_critic = 5)
        for _ in range(5):
            batch_images, _ = mnist.train.next_batch(batch_size)
            batch_noise = np.random.uniform(-1, 1, size=(batch_size, noise_size))
            sess.run(d_train_opt, feed_dict={real_img: batch_images, noise_img: batch_noise})
            # Clip after the update has been applied; fetching both in one sess.run
            # call does not guarantee that order
            sess.run(clip_d)
        # One generator update
        sess.run(g_train_opt, feed_dict={noise_img: batch_noise})

        if it % 10000 == 0:
            sample_noise = np.random.uniform(-1, 1, size=(n_sample, noise_size))
            # Reuse g_outputs; calling generator() here would add new ops to the graph each time
            samples = sess.run(g_outputs, feed_dict={noise_img: sample_noise})
            plot_images(samples)

            train_loss_d = sess.run(d_loss,
                                    feed_dict={real_img: batch_images,
                                               noise_img: batch_noise})
            train_loss_g = sess.run(g_loss,
                                    feed_dict={noise_img: batch_noise})
            print("Iteration {}/{}...".format(it, 1000000),
                  "Discriminator Loss: {:.4f}...".format(train_loss_d),
                  "Generator Loss: {:.4f}".format(train_loss_g))
            losses.append((train_loss_d, train_loss_g))

    saver.save(sess, './checkpoints/generator.ckpt')
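The training schedule above (5 critic updates per generator update, weight clipping after every critic step, RMSProp for both players) can be seen end to end on a toy problem that runs in a second. This is a deliberately simplified stand-in, not the MNIST model: the "real data" is N(3, 1), the generator is G(z) = z + θ, the critic is linear D(x) = w · x, gradients are derived by hand, and `rmsprop_step` is a hypothetical hand-written RMSProp helper. The learning rate 0.05 is an assumption chosen so the toy converges quickly.

```python
import numpy as np

def rmsprop_step(param, grad, sq, lr, decay=0.9, eps=1e-8):
    """One RMSProp update; returns the new parameter and squared-gradient average."""
    sq = decay * sq + (1 - decay) * grad ** 2
    return param - lr * grad / (np.sqrt(sq) + eps), sq

rng = np.random.default_rng(0)
real_mean = 3.0       # toy real data: samples from N(3, 1)
theta, w = 0.0, 0.0   # generator G(z) = z + theta, critic D(x) = w * x
sq_theta, sq_w = 0.0, 0.0
clip, lr, n_critic, batch = 0.01, 0.05, 5, 64

for it in range(2000):
    for _ in range(n_critic):
        x_real = rng.normal(real_mean, 1.0, batch)
        x_fake = rng.normal(0.0, 1.0, batch) + theta
        # d_loss = mean(D(real)) - mean(D(fake)); this is the gradient of -d_loss w.r.t. w
        grad_w = -(x_real.mean() - x_fake.mean())
        w, sq_w = rmsprop_step(w, grad_w, sq_w, lr)
        w = np.clip(w, -clip, clip)  # weight clipping after the update
    # g_loss = -mean(D(G(z))) = -w * mean(z + theta), so d g_loss / d theta = -w
    theta, sq_theta = rmsprop_step(theta, -w, sq_theta, lr)

print(theta)  # should end up near the real mean 3.0
```

Even though the critic can only ever output w · x with |w| ≤ 0.01, the sign of w tells the generator which way to move, which is all the mean-matching toy needs.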