【DL笔记】Tutorial on Variational AutoEncoder——中英文对照(更新中)

Abstract

In just three years, Variational Autoencoders (VAEs) have emerged as one of the most popular approaches to unsupervised learning of complicated distributions. VAEs are appealing because they are built on top of standard function approximators (neural networks), and can be trained with stochastic gradient descent. VAEs have already shown promise in generating many kinds of complicated data, including handwritten digits [1, 2], faces [1, 3, 4], house numbers [5, 6], CIFAR images [6], physical models of scenes [4], segmentation [7], and predicting the future from static images [8]. This tutorial introduces the intuitions behind VAEs, explains the mathematics behind them, and describes some empirical behavior. No prior knowledge of variational Bayesian methods is assumed.

Keywords: variational autoencoders, unsupervised learning, structured prediction, neural networks

摘要

近三年来,变分自编码(VAE)作为一种无监督学习复杂分布的方法受到人们关注,VAE因其基于标准函数近似(神经网络)而吸引人,并且可以通过随机梯度下降进行训练。VAE已经在许多生成复杂数据包括手写字体[1,2]、人脸图像[1,3,4]、住宅编码[5,6]、CIFAR图像[6]、物理模型场景[4]、分割[7]以及预测静态图像[8]上显现优势。本教程介绍VAE背后的灵感和数学解释,以及一些实证。没有变分贝叶斯方法假设的先验知识。

关键字:变分自编码,无监督学习,结构预测,神经网络

1 Introduction

“Generative modeling” is a broad area of machine learning which deals with models of distributions P(X), defined over datapoints X in some potentially high-dimensional space X . For instance, images are a popular kind of data for which we might create generative models. Each “datapoint” (image) has thousands or millions of dimensions (pixels), and the generative model’s job is to somehow capture the dependencies between pixels, e.g., that nearby pixels have similar color, and are organized into objects. Exactly what it means to “capture” these dependencies depends on exactly what we want to do with the model. One straightforward kind of generative model simply allows us to compute P(X) numerically. In the case of images, X values which look like real images should get high probability, whereas images that look like random noise should get low probability. However, models like this are not necessarily useful: knowing that one image is unlikely does not help us synthesize one that is likely.

1 介绍

生成模型是机器学习中在一些潜在的高维空间 χ \chi χ 中定义在数据点 X X X 上处理模型分布 P ( X ) P(X) P(X) 的一个广泛领域,例如,图像可能就是一种用于创建生成模型的数据。每一个数据点(图片)都有成千上万维(像素),生成模型的工作就是捕捉像素之间的相关性,例如相邻的像素具有相似的颜色,组成实体。实际上,捕捉这些相关性依赖于我们想用模型做什么。一种简单的模型允许对 P ( X ) P(X) P(X) 进行数值表示计算。在图像的情况下,看起来像真实图像的X值应该具有高概率,而看起来像随机噪声的图像应该具有较低概率。然而,像这样的模型并不一定有用:已知一个不太可能的图像不会帮助我们合成一个可能的图像。

Instead, one often cares about producing more examples that are like those already in a database, but not exactly the same. We could start with a database of raw images and synthesize new, unseen images. We might take in a database of 3D models of something like plants and produce more of them to fill a forest in a video game. We could take handwritten text and try to produce more handwritten text. Tools like this might actually be useful for graphic designers. We can formalize this setup by saying that we get examples X distributed according to some unknown distribution P g t ( X ) P_{gt}(X) Pgt(X), and our goal is to learn a model P which we can sample from, such that P is as similar as possible to P g t P_{gt} Pgt.

相反,人们更会去关心去产生那些已经在数据库中,但又与数据库中的图像不是完全一样的例子,我们可以从原始图像数据库开始,合成新的、没见过的图像。我们可以在一个视频游戏中填充一个从3D模型数据库中获取的像植物一样的作为森林;我们可以采取手写文本,并尝试产生更多的手写文本,像这样的工具实际上对图形设计者来说是有用的。我们可以形式化地设置通过未知分布的 P g t ( X ) P_{gt}(X) Pgt(X) 来生成已知 X X X 的分布,目标是学习一个可以从中取样的模型 P P P ,使 P P P 与 P g t P_{gt} Pgt 尽可能相似。

Training this type of model has been a long-standing problem in the machine learning community, and classically, most approaches have had one of three serious drawbacks. First, they might require strong assumptions about the structure in the data. Second, they might make severe approximations, leading to suboptimal models. Or third, they might rely on computation- ally expensive inference procedures like Markov Chain Monte Carlo. More recently, some works have made tremendous progress in training neural networks as powerful function approximators through backpropagation [9]. These advances have given rise to promising frameworks which can use backpropagation-based function approximators to build generative models.

训练这种模型一直以来都是机器学习社区一个长期存在的问题,并且,大多数方法都存在一下三种严重缺陷之一:首先,可能需要对数据中的结构进行强假设。第二,可能会作出严重的近似,导致次优模型。第三,可能依赖于计算昂贵的推理过程,如马尔可夫链蒙特卡罗方法。近年来,一些研究成果在神经网络的训练中取得了巨大的进展,通过反向传播作为强大的函数逼近器[9]。这些进展已经产生了有前途的框架,可以使用基于反向传播的函数逼近器来生成生成模型。

One of the most popular such frameworks is the Variational Autoencoder [1, 3], the subject of this tutorial. The assumptions of this model are weak, and training is fast via backpropagation. VAEs do make an approximation, but the error introduced by this approximation is arguably small given high-capacity models. These characteristics have contributed to a quick rise in their popularity.

其中一种受欢迎的模型就是变分自编码器[1,3],这篇教程的主角。该模型进行弱假设,训练通过反向传播迅速进行。VAE确实做了近似,但是由这种近似引入的误差对于高容量模型来说是小的,这些特点促成了它的迅速普及。

This tutorial is intended to be an informal introduction to VAEs, and not a formal scientific paper about them. It is aimed at people who might have uses for generative models, but might not have a strong background in the variatonal Bayesian methods and “minimum description length” coding models on which VAEs are based. This tutorial began its life as a presentation for computer vision reading groups at UC Berkeley and Carnegie Mellon, and hence has a bias toward a vision audience. Suggestions for improvement are appreciated.

本教程旨在对VAEs进行非正式的介绍,而不是正式的关于它的科学论文。它的目的是帮助那些可能使用生成模型,但没有强大的背景在变量贝叶斯方法的人,其中的VAE是基于“最小描述长度”编码模型。本教程开始在UC伯克利和卡耐基梅隆的计算机视觉阅读组的演示,并因此偏向一个视觉观众。欢迎改进建议。

1.1 Preliminaries: Latent Variable Models

When training a generative model, the more complicated the dependencies between the dimensions, the more difficult the models are to train. Take, for example, the problem of generating images of handwritten characters. Say for simplicity that we only care about modeling the digits 0-9. If the left half of the character contains the left half of a 5, then the right half cannot contain the left half of a 0, or the character will very clearly not look like any real digit. Intuitively, it helps if the model first decides which character to generate before it assigns a value to any specific pixel. This kind of decision is formally called a latent variable. That is, before our model draws anything, it first randomly samples a digit value z from the set [0, …, 9], and then makes sure all the strokes match that character. z is called ‘latent’ because given just a character produced by the model, we don’t necessarily know which settings of the latent variables generated the character. We would need to infer it using something like computer vision.

1.1 初步研究:隐变量模型

当训练生成模型时,维度之间的依赖关系越复杂,模型就越难训练。例如,产生手写字符图像的问题。简单地说,我们只关心数字0到9的建模。如果一个字符的左半边包含了数字5的左半边,那么右半边不会包含0的左半边,或者这个图片很清晰看起来不是一个数字。直观地说,这有助于模型首先决定在将一个值分配给任何特定像素之前生成哪个字符,这种决策方法成为隐变量。也就是说,在我们的模型画出任何东西之前,它首先随机地从集合0~9中采样一个数字值 z z z ,然后确保所有的笔画与之匹配, z z z 被称为“隐含“的原因在于给定的只是一个由模型产生的字符,我们没必要知道设置那个隐变量产生字符,只需要使用像计算机视觉这样的技术来推断它。

Before we can say that our model is representative of our dataset, we need to make sure that for every datapoint X X X in the dataset, there is one (or many) settings of the latent variables which causes the model to generate something very similar to X X X. Formally, say we have a vector of latent variables z z z in a high-dimensional space Z Z Z which we can easily sample according to some probability density function (PDF) P ( z ) P(z) P(z) defined over Z Z Z. Then, say we have a family of deterministic functions f ( z ; θ ) f (z; θ) f(z;θ), parameterized by a vector θ θ θ in some space Θ Θ Θ, where f : Z × Θ → X f :Z×Θ→X f:Z×Θ→X. f f f is deterministic, but if z z z is random and θ θ θ is fixed, then f ( z ; θ ) f(z; θ) f(z;θ) is a random variable in the space X X X . We wish to optimize θ θ θ such that we can sample z z z from P ( z ) P(z) P(z) and, with high probability, f ( z ; θ ) f(z; θ) f(z;θ) will be like the X X X’s in our dataset.

在模型可以表示数据集之前,我们需要确保数据集中的每个点 X X X 都会有一个或多个隐变量的设置,这样可以使模型生成更像 X X X 的分布,形式上说,我们在高维空间Z中有一个潜变量Z向量,我们可以根据Z上定义的一些概率密度函数(PDF) P ( z ) P(z) P(z) ,很容易地对其进行采样。然后,假设我们有一个确定函数 f ( z ; θ ) f (z; θ) f(z;θ) ,在某个空间 Θ Θ Θ中用向量 θ θ θ参数化,其中 f : Z × Θ → X f :Z×Θ→X f:Z×Θ→X , f f f 使确定的,但是如果 z z z 随机并且 θ θ θ 固定,那么 f ( z ; θ ) f(z; θ) f(z;θ) 就是 X X X 空间中的随机变量。我们希望优化 θ θ θ 使我们从 P ( z ) P(z) P(z) 中采样得到的 z z z , 将像数据集 X X X 中的 f ( z ; θ ) f(z; θ) f(z;θ) 一样具有更高的可能性。

To make this notion precise mathematically, we are aiming maximize the probability of each X X X in the training set under the entire generative process, according to:

(1) P ( X ) = ∫ P ( X ∣ z ; θ ) P ( z ) d z P(X)=\int P(X|z;\theta)P(z)dz \tag{1} P(X)=∫P(X∣z;θ)P(z)dz(1)

为了使这一概念在数学上精确,我们的目标是在整个生成过程中最大化训练集中的每个X的概率,根据如下:

(1) P ( X ) = ∫ P ( X ∣ z ; θ ) P ( z ) d z P(X)=\int P(X|z;\theta)P(z)dz \tag{1} P(X)=∫P(X∣z;θ)P(z)dz(1)

Here, f ( z ; θ ) f(z; θ) f(z;θ) has been replaced by a distribution P ( X ∣ z ; θ ) P(X|z; θ) P(X∣z;θ), which allows us to make the dependence of X X X on z explicit by using the law of total probability. The intuition behind this framework—called “maximum likelihood”— is that if the model is likely to produce training set samples, then it is also likely to produce similar samples, and unlikely to produce dissimilar ones. In VAEs, the choice of this output distribution is often Gaussian, i.e., P ( X ∣ z ; θ ) = N ( X ∣ f ( z ; θ ) , σ 2 ∗ I ) P(X|z;θ) = N(X|f(z;θ),σ^2 ∗ I) P(X∣z;θ)=N(X∣f(z;θ),σ2∗I). That is, it has mean f ( z ; θ ) f(z;θ) f(z;θ) and covariance equal to the identity matrix I I I times some scalar σ σ σ (which is a hyperparameter). This replacement is necessary to formalize the intuition that some z z z needs to result in samples that are merely like X X X. In general, and particularly early in training, our model will not produce outputs that are identical to any particular X X X. By having a Gaussian distribution, we can use gradient descent (or any other optimization technique) to increase P ( X ) P(X) P(X) by making f ( z ; θ ) f(z; θ) f(z;θ) approach X X X for some z z z, i.e., gradually making the training data more likely under the generative model. This wouldn’t be possible if P ( X ∣ z ) P(X|z) P(X∣z) was a Dirac delta function, as it would be if we used X = f ( z ; θ ) X = f (z; θ) X=f(z;θ) deterministically! Note that the output distribution is not required to be Gaussian: for instance, if X X X is binary, then P ( X ∣ z ) P(X|z) P(X∣z) might be a Bernoulli parameterized by f ( z ; θ ) f (z; θ) f(z;θ). The important property is simply that P ( X ∣ z ) P(X|z) P(X∣z) can be computed, and is continuous in θ θ θ. From here onward, we will omit θ from f ( z ; θ ) f(z; θ) f(z;θ) to avoid clutter.

这里, f ( z ; θ ) f(z; θ) f(z;θ) 已被一个分布 P ( X ∣ z ; θ ) P(X|z; θ) P(X∣z;θ) 所代替,这使得我们可以利用全概率公式来证明 X X X 在 Z Z Z 显式上的依赖性。这个框架的直觉称为“最大似然”——如果模型很可能产生训练集样本,那么它也很可能产生相似的样本,不太可能产生不同的样本。在VAE中,通常选择的输出分布为高斯分布,如: P ( X ∣ z ; θ ) = N ( X ∣ f ( z ; θ ) , σ 2 ∗ I ) P(X|z;θ) = N(X|f(z;θ),σ^2 ∗ I) P(X∣z;θ)=N(X∣f(z;θ),σ2∗I) 。也就是说,这意味着 f ( z ; θ ) f(z;θ) f(z;θ) 和协方差等于同一矩阵 I I I 乘以某个标量 σ σ σ(这是一个超参数)。这种必要的替换形式化了一些 Z Z Z 需要产生的样本,这些样本一般只与 X X X 相似,特别是在训练早期,我们的模型将不会产生与任何特定 x x x 相同的输出。通过使 f ( z ; θ ) f(z;θ) f(z;θ) 接近 z z z 的方法来增加 p ( x ) p(x) p(x) 的 p ( x ) p(x) p(x) ,即在生成模型下逐渐使训练数据变得更可能。如果 P ( X ∣ z ) P(X|z) P(X∣z) 是Dirac delta函数则不可能实现,就像用 X = f ( z ; θ ) X=f(z;\theta) X=f(z;θ) 确定一样。注意,输出分布不需要是高斯分布的,例如,如果x是二分类,那么 p ( x z ) p(x z) p(xz) 可能是由 f ( z ; θ ) f(z;\theta) f(z;θ) 参数化的伯努利。一个重要的性质是 P ( X ∣ z ) P(X|z) P(X∣z) 可以计算,并且在 θ \theta θ 中是连续的。从这里开始,我们将从 f ( z ; θ ) f(z;\theta) f(z;θ) 中省略 θ \theta θ ,以避免杂波。

2 Variational Autoencoders

The mathematical basis of VAEs actually has relatively little to do with classical autoencoders, e.g. sparse autoencoders [10, 11] or denoising autoencoders [12, 13]. VAEs approximately maximize Equation 1, according to the model shown in Figure 1. They are called “autoencoders” only because the final training objective that derives from this setup does have an encoder and a decoder, and resembles a traditional autoencoder. Unlike sparse autoencoders, there are generally no tuning parameters analogous to the sparsity penalties. And unlike sparse and denoising autoencoders, we can sample directly from P ( X ) P(X) P(X) (without performing Markov Chain Monte Carlo, as in [14]).

2 变分自编码

实际上,VAE的数学基础与经典的自编码器如稀疏自编码器、降噪自编码器等关联较少,如图1所示,VAE最大化方程1。他们都叫做自编码器仅仅是因为最终训练目标派生出像自编码器那样的编码器、解码器的结构。与稀疏自动编码器不同,通常没有类似于稀疏惩罚的调优参数,不像稀疏自编码器和去噪自编码器,我们可以直接从 P ( X ) P(X) P(X) 采样(不执行马尔可夫链蒙特卡罗[14])。

To solve Equation 1, there are two problems that VAEs must deal with: how to define the latent variables z z z (i.e., decide what information they represent), and how to deal with the integral over z z z. VAEs give a definite answer to both.

为了解方程1,VAE有两个问题需要解决:如何定义隐变量 z z z (如:决定这些隐变量所表达的信息);如何处理 z z z 的积分。VAE给出了确切答案。

First, how do we choose the latent variables z z z such that we capture latent information? Returning to our digits example, the ‘latent’ decisions that the model needs to make before it begins painting the digit are actually rather complicated. It needs to choose not just the digit, but the angle that the digit is drawn, the stroke width, and also abstract stylistic properties. Worse, these properties may be correlated: a more angled digit may result if one writes faster, which also might tend to result in a thinner stroke. Ideally, we want to avoid deciding by hand what information each dimension of z z z encodes (although we may want to specify it by hand for some dimensions [4]). We also want to avoid explicitly describing the dependencies—i.e., the latent structure—between the dimensions of z z z. VAEs take an unusual approach to dealing with this problem: they assume that there is no simple interpretation of the dimensions of z, and instead assert that samples of z can be drawn from a simple distribution, i.e., N ( 0 , I ) N (0, I) N(0,I), where I is the identity matrix. How is this possible? The key is to notice that any distribution in d dimensions can be generated by taking a set of d variables that are normally distributed and mapping them through a sufficiently complicated function1. For example, say we wanted to construct a 2D random variable whose values lie on a ring. If z z z is 2D and normally distributed, g ( z ) = z 10 + z ∣ ∣ z ∣ ∣ g(z) = \frac{z}{10} + \frac{z}{||z||} g(z)=10z+∣∣z∣∣z is roughly ring-shaped, as shown in Figure 2. Hence, provided powerful function approximators, we can simply learn a function which maps our independent, normally-distributed z z z values to whatever latent variables might be needed for the model, and then map those latent variables to X X X. In fact, recall that P ( X ∣ z ; θ ) = N ( X ∣ f ( z ; θ ) , σ 2 ∗ I ) P(X|z; θ) = N (X| f (z; θ), σ^2 ∗ I) P(X∣z;θ)=N(X∣f(z;θ),σ2∗I). If f ( z ; θ ) f(z; θ) f(z;θ) is a multi-layer neural network, then we can imagine the network using its first few layers to map the normally distributed z z z’s to the latent values (like digit identity, stroke weight, angle, etc.) with exactly the right statistics. Then it can use later layers to map those latent values to a fully-rendered digit. In general, we don’t need to worry about ensuring that the latent structure exists. If such latent structure helps the model accurately reproduce (i.e. maximize the likelihood of) the training set, then the network will learn that structure at some layer.

首先,如何选择因变量 z z z来捕捉因含信息?回到我们手写数字的例子中,这个

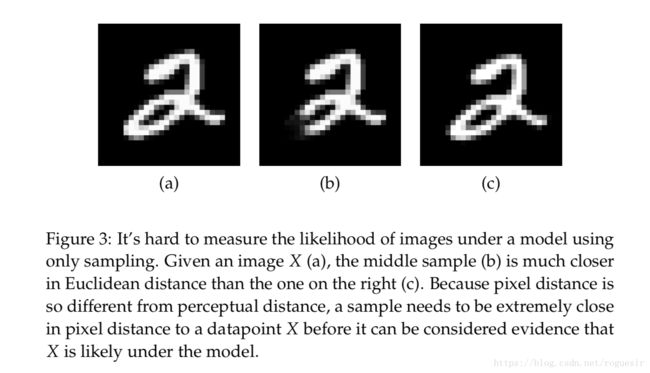

Now all that remains is to maximize Equation 1, where P ( z ) = N ( z ∣ 0 , I ) P(z) = N (z|0, I) P(z)=N(z∣0,I). As is common in machine learning, if we can find a computable formula for P ( X ) P(X) P(X), and we can take the gradient of that formula, then we can optimize the model using stochastic gradient ascent. It is actually conceptually straightforward to compute P ( X ) P(X) P(X) approximately: we first sample a large number of z z z values z 1 , ⋯ , z n {z_1, \cdots, z_n} z1,⋯,zn, and compute P ( X ) ≈ 1 n ∑ i P ( X ∣ z i ) P(X) ≈ \frac{1}{n} ∑_i P(X|z_i) P(X)≈n1∑iP(X∣zi). The problem here is that in high dimensional spaces, n n n might need to be extremely large before we have an accurate estimate of P ( X ) P(X) P(X). To see why, consider our example of handwritten digits. Say that our digit datapoints are stored in pixel space, in 28x28 images as shown in Figure 3. Since P ( X ∣ z ) P(X|z) P(X∣z) is an isotropic Gaussian, the negative log probability of X X X is proportional squared Euclidean distance between f ( z ) f(z) f(z) and X X X. Say that Figure 3(a) is the target (X) for which we are trying to find P ( X ) P(X) P(X). A model which produces the image shown in Figure 3(b) is probably a bad model, since this digit is not much like a 2. Hence, we should set the σ σ σ hyperparameter of our Gaussian distribution such that this kind of erroroneous digit does not contribute to P ( X ) P(X) P(X). On the other hand, a model which produces Figure 3© (identical to X X X but shifted down and to the right by half a pixel) might be a good model. We would hope that this sample would contribute to P ( X ) P(X) P(X). Unfortunately, however, we can’t have it both ways: the squared distance between X X X and Figure 3© is .2693 (assuming pixels range between 0 and 1), but between X X X and Figure 3(b) it is just .0387. The lesson here is that in order to reject samples like Figure 3(b), we need to set σ σ σ very small, such that the model needs to generate something significantly more like X X X than Figure 3©! Even if our model is an accurate generator of digits, we would likely need to sample many thousands of digits before we produce a 2 that is sufficiently similar to the one in Figure 3(a). We might solve this problem by using a better similarity metric, but in practice these are difficult to engineer in complex domains like vision, and they’re difficult to train without labels that indicate which datapoints are similar to each other. Instead, VAEs alter the sampling procedure to make it faster, without changing the similarity metric.

2.1 Setting up the objective

Is there a shortcut we can take when using sampling to compute Equation 1? In practice, for most z, P(X|z) will be nearly zero, and hence contribute almost nothing to our estimate of P(X). The key idea behind the variational autoencoder is to attempt to sample values of z that are likely to have produced X, and compute P(X) just from those. This means that we need a new function Q(z|X) which can take a value of X and give us a distribution over z values that are likely to produce X. Hopefully the space of z values that are likely under Q will be much smaller than the space of all z’s that are likely under the prior P(z). This lets us, for example, compute Ez∼QP(X|z) relatively easily. However, if z is sampled from an arbitrary distribution with PDF Q(z), which is not N (0, I), then how does that help us optimize P(X)? The first thing we need to do is relate Ez∼QP(X|z) and P(X). We’ll see where Q comes from later.

The relationship between Ez∼QP(X|z) and P(X) is one of the corner- stones of variational Bayesian methods. We begin with the definition of Kullback-Leibler divergence (KL divergence or D) between P(z|X) and Q(z), for some arbitrary Q (which may or may not depend on X):

(2) D [ Q ( z ) ∣ ∣ P ( z ∣ X ) ] = E z − Q [ l o g Q ( z ) − l o g P ( z ∣ X ) ] D[Q(z)||P(z|X)]=E_{z-Q}[logQ(z)-logP(z|X)] \tag{2} D[Q(z)∣∣P(z∣X)]=Ez−Q[logQ(z)−logP(z∣X)](2)

We can get both P(X) and P(X|z) into this equation by applying Bayes rule

to P(z|X):

(3) D [ Q ( z ) ∣ ∣ P ( z ∣ X ) ] = E z − Q [ l o g Q ( z ) − l o g P ( X ∣ z ) − l o g P ( z ) ] + l o g P ( X ) D[Q(z)||P(z|X)]=E_{z-Q}[logQ(z)-logP(X|z)-logP(z)]+logP(X) \tag{3} D[Q(z)∣∣P(z∣X)]=Ez−Q[logQ(z)−logP(X∣z)−logP(z)]+logP(X)(3)

Here, log P(X) comes out of the expectation because it does not depend on z. Negating both sides, rearranging, and contracting part of Ez∼Q into a KL-divergence terms yields:

(4) l o g P ( X ) − D [ Q ( z ) ∣ ∣ P ( z ∣ X ) ] = E z − Q [ l o g P ( X ∣ z ) ] − D [ Q ( z ) ∣ ∣ P ( z ) ] logP(X)-D[Q(z)||P(z|X)]=E_{z-Q}[logP(X|z)]-D[Q(z)||P(z)] \tag{4} logP(X)−D[Q(z)∣∣P(z∣X)]=Ez−Q[logP(X∣z)]−D[Q(z)∣∣P(z)](4)

Note that X is fixed, and Q can be any distribution, not just a distribution which does a good job mapping X to the z’s that can produce X. Since we’re interested in inferring P(X), it makes sense to construct a Q which does depend on X, and in particular, one which makes D [Q(z)∥|P(z|X)] small:

(4) l o g P ( X ) − D [ Q ( z ∣ X ) ∣ ∣ P ( z ∣ X ) ] = E z − Q [ l o g P ( X ∣ z ) ] − D [ Q ( z ∣ X ) ∣ ∣ P ( z ) ] logP(X)-D[Q(z|X)||P(z|X)]=E_{z-Q}[logP(X|z)]-D[Q(z|X)||P(z)] \tag{4} logP(X)−D[Q(z∣X)∣∣P(z∣X)]=Ez−Q[logP(X∣z)]−D[Q(z∣X)∣∣P(z)](4)

This equation serves is the core of the variational autoencoder, and it’s worth spending some time thinking about what it says2. In two sentences, the left hand side has the quantity we want to maximize: log P(X) (plus an error term, which makes Q produce z’s that can reproduce a given X; this term will become small if Q is high-capacity). The right hand side is something we can optimize via stochastic gradient descent given the right choice of Q (although it may not be obvious yet how). Note that the framework—in particular, the right hand side of Equation 5—has suddenly taken a form which looks like an autoencoder, since Q is “encoding” X into z, and P is “decoding” it to reconstruct X. We’ll explore this connection in more detail later.

Now for a bit more detail on Equatinon 5. Starting with the left hand side,

we are maximizing log P(X) while simultaneously minimizing D [Q(z|X)∥|P(z|X)]. P(z|X) is not something we can compute analytically: it describes the values of z that are likely to give rise to a sample like X under our model in Figure 1. However, the second term on the left is pulling Q(z|x) to match P(z|X). Assuming we use an arbitrarily high-capacity model for Q(z|x),

then Q(z|x) will hopefully actually match P(z|X), in which case this KL- divergence term will be zero, and we will be directly optimizing log P(X).

As an added bonus, we have made the intractable P(z|X) tractable: we can

just use Q(z|x) to compute it.

2.2 Optimizing the objective

So how can we perform stochastic gradient descent on the right hand side of Equation 5? First we need to be a bit more specific about the form that Q(z|X) will take. The usual choice is to say that Q(z|X) = N (z|μ(X; θ), Σ(X; θ)), where μ and Σ are arbitrary deterministic functions with parameters θ that can be learned from data (we will omit θ in later equations). In practice, μ and Σ are again implemented via neural networks, and Σ is constrained to be a diagonal matrix. The advantages of this choice are computational, as they make it clear how to compute the right hand side. The last term—D [Q(z|X)∥P(z)]—is now a KL-divergence between two multivariate Gaussian distributions, which can be computed in closed form as: