Introduction to the Python requests library

Web Crawling and Information Extraction with Python

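1. HTTP: Hypertext Transfer Protocol

(1) HTTP is a stateless, application-layer protocol based on the request/response model.

(2) HTTP uses URLs to identify and locate network resources. A URL has the format http://host[:port][path], where:

- host: a valid Internet host domain name or IP address

- port: the port number; defaults to 80 if omitted

- path: the path of the requested resource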

(3) Example HTTP URLs: http://www.bit.edu.cn and http://220.181.111.188/duty

(4) Understanding HTTP URLs: a URL is the Internet path for accessing a resource over HTTP, and each URL corresponds to one data resource.
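The host/port/path breakdown of a URL can be checked with Python's standard-library urllib.parse.urlsplit(). This is a minimal sketch: the helper name describe_url() is hypothetical, and the fallback to port 80 applies the "defaults to 80" rule for http:// URLs (it would be wrong for https://).

```python
from urllib.parse import urlsplit

DEFAULT_HTTP_PORT = 80  # default port for http:// URLs


def describe_url(url):
    """Hypothetical helper: return (host, port, path) for an http:// URL."""
    parts = urlsplit(url)
    # urlsplit() reports port=None when the URL omits it, so fall back to 80
    port = parts.port if parts.port is not None else DEFAULT_HTTP_PORT
    # An empty path means the root resource "/"
    path = parts.path or "/"
    return parts.hostname, port, path


for example in ("http://www.bit.edu.cn", "http://220.181.111.188/duty"):
    print(describe_url(example))
# ('www.bit.edu.cn', 80, '/')
# ('220.181.111.188', 80, '/duty')
```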

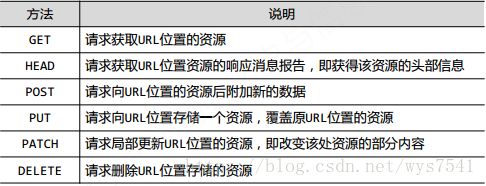

(5) Operations HTTP performs on resources: GET, HEAD, POST, PUT, PATCH, DELETE

2. Methods and objects

(1) requests.get(url, params=None, **kwargs)

- url: the URL of the page to fetch

- params: extra parameters to add to the URL, as a dict or bytes; optional

- **kwargs: 12 optional arguments that control the request
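One way to see what params does, without sending anything, is to build the request with requests.Request(...).prepare() and inspect the URL; requests.get() goes through the same preparation step before it sends. A minimal sketch, assuming only that requests is installed; http://python123.io/ws is reused from the examples in section 3, the parameter values are made up, and no network access happens.

```python
import requests

# Build (but do not send) a GET request with query parameters
kv = {"key1": "value1", "key2": ["a", "b"]}  # a list value repeats the key
prepared = requests.Request("GET", "http://python123.io/ws", params=kv).prepare()

print(prepared.method)  # GET
print(prepared.url)     # http://python123.io/ws?key1=value1&key2=a&key2=b

# params are appended to a query string the URL already has
merged = requests.Request("GET", "http://python123.io/ws?page=1",
                          params={"wd": "Python"}).prepare()
print(merged.url)       # http://python123.io/ws?page=1&wd=Python
```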

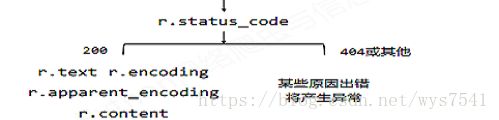

(2) Attributes of the Response object

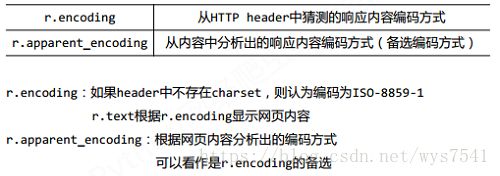

(3) Encoding of the Response object

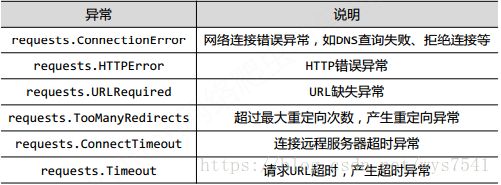
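The Response attributes and the encoding behaviour can be observed without an outside site by starting a throwaway HTTP server on 127.0.0.1 with Python's standard http.server module. In this sketch the handler name HelloHandler and the page content are made up. The server deliberately sends Content-Type: text/html with no charset, so requests takes r.encoding to be ISO-8859-1 and decodes the UTF-8 body wrongly until r.encoding is changed. A Session with trust_env = False is used only so proxy environment variables cannot divert the local request.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

import requests

PAGE = "<p>你好, requests</p>".encode("utf-8")  # UTF-8 bytes containing Chinese text


class HelloHandler(BaseHTTPRequestHandler):
    """Hypothetical handler: every GET receives PAGE with status 200."""

    def do_GET(self):
        self.send_response(200)
        # No charset parameter on purpose, so requests must fall back to a default
        self.send_header("Content-Type", "text/html")
        self.send_header("Content-Length", str(len(PAGE)))
        self.end_headers()
        self.wfile.write(PAGE)

    def log_message(self, *args):
        pass  # silence the default per-request logging


server = HTTPServer(("127.0.0.1", 0), HelloHandler)  # port 0 = any free port
threading.Thread(target=server.serve_forever, daemon=True).start()

s = requests.Session()
s.trust_env = False  # ignore proxy environment variables for this local test
r = s.get(f"http://127.0.0.1:{server.server_port}/", timeout=5)

print(r.status_code)              # 200
print(r.headers["Content-Type"])  # text/html
header_encoding = r.encoding      # taken from the headers
print(header_encoding)            # ISO-8859-1 (text/* without a charset)
garbled = r.text                  # UTF-8 bytes decoded as ISO-8859-1
print(r.apparent_encoding)        # guessed by analysing r.content
r.encoding = "utf-8"              # tell requests the real encoding
print(r.text)                     # <p>你好, requests</p>
print(r.content == PAGE)          # True: content holds the raw bytes

s.close()
server.shutdown()
server.server_close()
```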

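(4) Exceptions raised by the requests library

(5) Exceptions raised through the Response object: r.raise_for_status() raises requests.HTTPError if the status code is 4xx or 5xx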
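The sketch below builds requests.Response objects by hand, purely to show which status codes make raise_for_status() raise, and checks that the common exception types (ConnectionError, HTTPError, URLRequired, TooManyRedirects, Timeout) all derive from requests.RequestException, so one except clause can catch them. Constructing a Response directly, the helper check_status() and the URL http://example.com/hypothetical are illustrative shortcuts; in real code the Response comes from requests.get() and its siblings.

```python
import requests


def check_status(status_code):
    """Hypothetical helper: report whether raise_for_status() raises for a code."""
    r = requests.Response()        # an empty Response, built by hand
    r.status_code = status_code
    r.url = "http://example.com/hypothetical"
    try:
        r.raise_for_status()       # raises HTTPError only for 4xx and 5xx codes
        return "ok"
    except requests.HTTPError as exc:
        return f"HTTPError: {exc}"


for code in (200, 302, 404, 503):
    print(code, check_status(code))

# The common exception types share one base class
for exc_type in (requests.ConnectionError, requests.HTTPError,
                 requests.URLRequired, requests.TooManyRedirects, requests.Timeout):
    print(exc_type.__name__, issubclass(exc_type, requests.RequestException))
```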

3. The 7 main methods of the requests library

For example, requests.head() fetches only the response headers, and requests.post() sends a dictionary that is automatically encoded as form data (httpbin echoes it back under "form"):

    >>> r = requests.head('http://httpbin.org/get')
    >>> r.headers
    {'Content-Length': '238', 'Access-Control-Allow-Origin': '*',
     'Access-Control-Allow-Credentials': 'true', 'Content-Type': 'application/json',
     'Server': 'nginx', 'Connection': 'keep-alive',
     'Date': 'Sat, 18 Feb 2017 12:07:44 GMT'}
    >>> payload = {'key1': 'value1', 'key2': 'value2'}
    >>> r = requests.post('http://httpbin.org/post', data=payload)
    >>> print(r.text)
    { ...
      "form": {
        "key2": "value2",
        "key1": "value1"
      },
    }

(1) requests.request(method, url, **kwargs)

- method: the request method, one of 7: GET, HEAD, POST, PUT, PATCH, DELETE, OPTIONS

  - r = requests.request('GET', url, **kwargs)

  - r = requests.request('HEAD', url, **kwargs)

  - r = requests.request('POST', url, **kwargs)

  - r = requests.request('PUT', url, **kwargs)

  - r = requests.request('PATCH', url, **kwargs)

  - r = requests.request('DELETE', url, **kwargs)

  - r = requests.request('OPTIONS', url, **kwargs)

- url: the URL of the page to fetch

- **kwargs: 13 optional arguments that control the request

1. params: a dict or byte sequence, added to the URL as query parameters

       >>> kv = {'key1': 'value1', 'key2': 'value2'}
       >>> r = requests.request('GET', 'http://python123.io/ws', params=kv)
       >>> print(r.url)
       http://python123.io/ws?key1=value1&key2=value2

2. data: a dict, byte sequence, or file object, sent as the body of the Request

       >>> kv = {'key1': 'value1', 'key2': 'value2'}
       >>> r = requests.request('POST', 'http://python123.io/ws', data=kv)
       >>> body = 'main body content'
       >>> r = requests.request('POST', 'http://python123.io/ws', data=body)

3. json: JSON-format data, sent as the body of the Request

       >>> kv = {'key1': 'value1'}
       >>> r = requests.request('POST', 'http://python123.io/ws', json=kv)

4. headers: a dict of custom HTTP headers

       >>> hd = {'user-agent': 'Chrome/10'}
       >>> r = requests.request('POST', 'http://python123.io/ws', headers=hd)

5. cookies: a dict or CookieJar, the cookies to send with the Request

6. auth: a tuple, enabling HTTP authentication

7. files: a dict, for uploading files

       >>> fs = {'file': open('data.xls', 'rb')}
       >>> r = requests.request('POST', 'http://python123.io/ws', files=fs)

8. timeout: the timeout, in seconds

       >>> r = requests.request('GET', 'http://www.baidu.com', timeout=10)

9. proxies: a dict of proxy servers to use; a proxy URL may include login credentials

       >>> pxs = {'http': 'http://user:pass@10.10.10.1:1234',
       ...        'https': 'https://10.10.10.1:4321'}
       >>> r = requests.request('GET', 'http://www.baidu.com', proxies=pxs)

10. allow_redirects: True/False, default True; whether to follow redirects (requests.head() defaults it to False)

11. stream: True/False, default False; when False the response body is downloaded immediately, when True it is fetched only as it is read (e.g. with r.iter_content())

12. verify: True/False, default True; whether to verify the server's SSL certificate

13. cert: path to a local SSL client certificate file
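Several of these arguments change what is actually sent, and requests.Request(...).prepare() makes that visible offline: the sketch below prepares requests against the same http://python123.io/ws URL without sending them and prints the resulting headers and body. The credentials ("user", "pass"), the cookie session=abc and the in-memory file bytes are placeholders; the (filename, bytes) tuple in files stands in for the open('data.xls', 'rb') file object above.

```python
import json

import requests

URL = "http://python123.io/ws"  # same URL as the examples above; nothing is sent

# data=dict: form-encoded body
form = requests.Request("POST", URL, data={"key1": "value1", "key2": "value2"}).prepare()
print(form.headers["Content-Type"])  # application/x-www-form-urlencoded
print(form.body)                     # key1=value1&key2=value2

# json=dict: JSON body
as_json = requests.Request("POST", URL, json={"key1": "value1"}).prepare()
print(as_json.headers["Content-Type"])  # application/json
print(json.loads(as_json.body))         # {'key1': 'value1'}

# headers, auth and cookies all end up as request headers
custom = requests.Request(
    "GET", URL,
    headers={"user-agent": "Chrome/10"},
    auth=("user", "pass"),       # placeholder credentials -> HTTP Basic auth
    cookies={"session": "abc"},  # placeholder cookie
).prepare()
print(custom.headers["User-Agent"])     # Chrome/10 (header names are case-insensitive)
print(custom.headers["Authorization"])  # Basic dXNlcjpwYXNz
print(custom.headers["Cookie"])         # session=abc

# files=dict: multipart/form-data upload; (filename, bytes) replaces a real open file
upload = requests.Request("POST", URL,
                          files={"file": ("data.xls", b"placeholder bytes")}).prepare()
print(upload.headers["Content-Type"].split(";")[0])  # multipart/form-data
print(b'filename="data.xls"' in upload.body)         # True
```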

(2) requests.get(url, params=None, **kwargs)

(3) requests.head(url, **kwargs)

(4) requests.post(url, data=None, json=None, **kwargs)

(5) requests.put(url, data=None, **kwargs)

(6) requests.patch(url, data=None, **kwargs)

(7) requests.delete(url, **kwargs)
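To see the methods on the wire without relying on httpbin.org, the sketch below runs a small echo server from Python's http.server module on 127.0.0.1 and calls each method against it. The EchoHandler class and its reply format ("<METHOD> <number of body bytes received>") are invented for this illustration. The calls go through a requests.Session, whose get/head/post/put/patch/delete/request methods take the same arguments as the module-level functions; trust_env = False only keeps proxy environment variables from diverting the local requests.

```python
import threading
from http.server import BaseHTTPRequestHandler, HTTPServer

import requests


class EchoHandler(BaseHTTPRequestHandler):
    """Hypothetical handler: replies '<METHOD> <body length>' for every method."""

    def _reply(self):
        length = int(self.headers.get("Content-Length", 0))
        received = self.rfile.read(length)  # consume the request body, if any
        body = f"{self.command} {len(received)}".encode()
        self.send_response(200)
        self.send_header("Content-Type", "text/plain")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        if self.command != "HEAD":  # a HEAD response carries headers only
            self.wfile.write(body)

    do_GET = do_HEAD = do_POST = do_PUT = do_PATCH = do_DELETE = do_OPTIONS = _reply

    def log_message(self, *args):
        pass  # silence the default per-request logging


server = HTTPServer(("127.0.0.1", 0), EchoHandler)
threading.Thread(target=server.serve_forever, daemon=True).start()
url = f"http://127.0.0.1:{server.server_port}/"

s = requests.Session()
s.trust_env = False  # ignore proxy environment variables for this local test

results = {
    "GET": s.get(url, timeout=5).text,
    "HEAD": s.head(url, timeout=5).text,
    "POST": s.post(url, data={"key1": "value1"}, timeout=5).text,  # body: key1=value1
    "PUT": s.put(url, data="new", timeout=5).text,
    "PATCH": s.patch(url, data="ab", timeout=5).text,
    "DELETE": s.delete(url, timeout=5).text,
    "OPTIONS": s.request("OPTIONS", url, timeout=5).text,
}
for method, text in results.items():
    print(method, repr(text))

s.close()
server.shutdown()
server.server_close()
```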

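4. The Robots protocol

- Full name: Robots Exclusion Standard

- Purpose: lets a website tell web crawlers which pages may be crawled and which may not

- Form: a robots.txt file in the website's root directory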
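Python's standard library includes urllib.robotparser, which applies these rules on a crawler's behalf. The robots.txt text below is a made-up example, parsed from a string so the sketch runs offline; against a real site one would instead call set_url() with the site's /robots.txt address and then read().

```python
from urllib.robotparser import RobotFileParser

# A made-up robots.txt, for illustration only
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/

User-agent: BadBot
Disallow: /
"""

rp = RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())  # parse rules from a list of lines (no network)

print(rp.can_fetch("MyCrawler", "https://example.com/index.html"))  # True
print(rp.can_fetch("MyCrawler", "https://example.com/private/a"))   # False
print(rp.can_fetch("BadBot", "https://example.com/index.html"))     # False
```

A polite crawler would call can_fetch() with its own user-agent string before each request and skip URLs for which it returns False.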

5. Application examples

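(1) Scraping a JD.com product page

    # jingdong.py
    import requests

    url = "https://item.jd.com/2967929.html"
    try:
        r = requests.get(url)
        r.raise_for_status()              # raise HTTPError on a 4xx/5xx status
        r.encoding = r.apparent_encoding  # decode with the encoding guessed from the content
        print(r.text[:1000])              # show the first 1000 characters
    except requests.RequestException:     # catch network and HTTP errors only
        print("Fetch failed")

(2) Scraping an Amazon product page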

    # amazon.py
    import requests

    url = "https://www.amazon.cn/gp/product/B01M8L5Z3Y"
    try:
        kv = {'user-agent': 'Mozilla/5.0'}  # replace the default python-requests User-Agent
        r = requests.get(url, headers=kv)
        r.raise_for_status()
        r.encoding = r.apparent_encoding
        print(r.text[:3000])
    except requests.RequestException:
        print("Fetch failed")

(3) Submitting a search keyword to Baidu/360

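    # baiduKdSearch.py
    import requests

    keyword = "Python"
    try:
        kv = {'wd': keyword}  # Baidu reads the search keyword from the wd parameter
        r = requests.get("http://www.baidu.com/s", params=kv)
        print(r.request.url)  # the full URL that was actually sent
        r.raise_for_status()
        print(len(r.text))
    except requests.RequestException:
        print("Fetch failed")

(4) Downloading and saving an image from the web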

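    # getImg.py
    import os
    import requests

    url = "http://jwc.jxnu.edu.cn/images/new/banner3.jpg"
    root = "F:\\learningsoftware\\PyCharm\\spiderTests\\"
    path = root + url.split('/')[-1]  # storage path: root folder + file name from the URL
    print(root + "\n" + path)
    try:
        os.makedirs(root, exist_ok=True)  # create the folder (and parents) if missing
        if not os.path.exists(path):
            r = requests.get(url)
            r.raise_for_status()
            with open(path, 'wb') as f:   # binary mode, since r.content is bytes
                f.write(r.content)        # the with-block closes the file
            print("File saved")
        else:
            print("File already exists")
    except (requests.RequestException, OSError):
        print("Fetch failed")

(5) Automatically looking up the location of an IP address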

    # ipSearch.py
    import requests

    url = 'http://m.ip138.com/ip.asp?ip='
    try:
        r = requests.get(url + '100.100.100.100')  # the IP to look up goes in the ip= parameter
        r.raise_for_status()
        r.encoding = r.apparent_encoding
        print(r.text[-500:])                      # show the last 500 characters
    except requests.RequestException:
        print("Fetch failed")