Setting Up Oracle RAC 19c with GNS, and Basic Maintenance


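- I. Server preparation

- 1.1 Network address plan

- 1.2 Storage plan

- 1.3 Operating system installation

- 1.3.1 Install oracleasm

- 1.3.2 Install the required packages

- 1.3.3 Create users and groups

- 1.3.4 Create the software installation directories

- 1.3.5 Configure environment variables

- 1.4 Modify operating system parameters

- 1.4.1 Disable the firewall

- 1.4.2 Disable SELinux

- 1.4.3 Disable Transparent HugePages

- 1.4.4 Configure /etc/security/limits.conf

- 1.4.5 Configure /etc/sysctl.conf

- 1.4.6 Disable ZEROCONF

- 1.4.7 Modify the chronyd configuration

- 1.4.8 Modify /etc/resolv.conf

- 1.4.9 Modify /etc/hosts

- 1.4.10 Modify /etc/iscsi/initiatorname.iscsi

- 1.5 Cloning the nodes

- 1.6 DNS/GNS server configuration

- 1.6.1 Install DNS

- 1.6.2 Configure DNS

- 1.6.2.1 Modify named.conf

- 1.6.2.2 Configure the forward and reverse zones

- 1.6.2.3 Configure the forward zone file

- 1.6.2.4 Configure the reverse zone files

- 1.6.2.5 Verify DNS

- 1.7 DHCP server configuration

- 1.8 chrony server configuration

- 1.9 iSCSI server configuration

- 1.10 ASM disk group configuration

- II. Grid installation

- 2.1 Software download

- 2.2 Unzip and install

- 2.3 Remaining installation steps

- III. Basic database usage

- 3.1 Startup and shutdown

- 3.2 Creating and dropping tablespaces

- 3.3 Creating a PDB

- 3.4 tnsnames.ora configuration

- 3.5 Importing a DMP file into a PDB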

Author: 阿福 (A Fu), Gansu Provincial Education Examination Authority, u9085@163.com

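I. Server preparation

This setup uses three cloud servers (rac01, rac02, rac03) as compute nodes and one cloud server (DNS SERVER) as the DNS, GNS, DHCP, chrony (time synchronization) and iSCSI server. All servers run Oracle Linux 7.6 and each has 24 GB of RAM and a 500 GB disk.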

1.1 Network address plan

| Host name | Public IP (eth0) | Private IP (eth1) | DNS | GNS |
|---|---|---|---|---|
| rac01.hecf.cn | 192.168.5.150 | 192.168.4.150 | 192.168.5.149 | 192.168.5.148 |
| rac02.hecf.cn | 192.168.5.151 | 192.168.4.151 | 192.168.5.149 | 192.168.5.148 |
| rac03.hecf.cn | 192.168.5.152 | 192.168.4.152 | 192.168.5.149 | 192.168.5.148 |

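SCAN IPs are assigned automatically through GNS.

For the roles of the different IP addresses, see https://www.cnblogs.com/zmlctt/p/3755985.html

Each node needs two network cards and three IP addresses. The virtual IP, also called the service IP, can "float" to another node when its own node or network card fails, so the service keeps running.

In an Oracle RAC environment every node has several IP addresses: public, private and VIP. What is the difference between them, and where is each one used? Here is how an English-language answer puts it:

- private IP address is used only for internal clustering processing (Cache Fusion)

The private IP carries the heartbeat and synchronization traffic between the nodes; users can ignore it entirely. Simply put, it is the private network the servers use to keep their data in sync.

- VIP is used by database applications to enable fail over when one cluster node fails

The virtual IP is used by client applications and supports failover: when one node goes down, another one takes over automatically and clients do not notice. This is one of the reasons to use RAC; the other, in my view, is load balancing.

- public IP address is the normal IP address typically used by DBA and SA to manage storage, system and database.

The public IP is mainly used by administrators to make sure they are working on the right machine; I prefer to call it the real IP.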

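1.2 Storage plan

Because this installation runs on cloud servers, which cannot use FC storage directly, the FC storage is first attached to the DNS SERVER and then exported with targetcli as iSCSI storage for the RAC nodes. The FC array already provides RAID, so each ASM disk group in this plan gets a single partition and External redundancy is selected during installation, with no further ASM mirroring. The sizes are:

OCR 500 GB, MGMT 100 GB, DATA 2 TB, FRA 50 GB

If you want ASM itself to provide redundancy, the number of disks required depends on the redundancy level, and the disks within each group should be the same size; see the Oracle documentation.

In a production environment you can allocate the disks for each group directly on the storage array and attach them to the servers.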

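1.3 Operating system installation

Download the operating system from Oracle's website, attach the ISO to the cloud server's virtual CD drive and install it, choosing "Server with GUI". Install one server first; the others are cloned from it.

During installation make the swap partition larger than 16 GB and leave at least 250 GB for the root filesystem; everything else can stay at the defaults.

After installation, first configure a network interface with Internet access (eth3) and an address for it, used to update the OS and install software.

Update the system: yum -y update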

1.3.1 Install oracleasm

yum -y install oracleasm-support

yum -y install kmod-oracleasm

1.3.2 Install the required packages

yum -y install bc gcc gcc-c++ binutils make gdb cmake glibc ksh elfutils-libelf elfutils-libelf-devel fontconfig-devel glibc-devel

yum -y install libaio libaio-devel libXrender libXrender-devel libX11 libXau sysstat libXi libXtst libgcc librdmacm-devel libstdc++ libstdc++-devel libxcb

yum -y install net-tools nfs-utils compat-libcap1 compat-libstdc++-33 smartmontools targetcli python python-configshell python-rtslib python-six unixODBC unixODBC-devel

1.3.3 Create users and groups

/usr/sbin/groupadd -g 501 oinstall

/usr/sbin/groupadd -g 502 dba

/usr/sbin/groupadd -g 503 oper

/usr/sbin/groupadd -g 504 asmadmin

/usr/sbin/groupadd -g 505 asmdba

/usr/sbin/groupadd -g 506 asmoper

/usr/sbin/useradd -u 501 -g oinstall -G asmadmin,asmdba,asmoper grid

/usr/sbin/useradd -u 502 -g oinstall -G dba,asmdba oracle

passwd oracle

passwd grid

1.3.4 Create the software installation directories

mkdir -p /oracle/app/grid

mkdir -p /oracle/app/19.2.0/grid

mkdir -p /oracle/app/oracle

mkdir -p /oracle/app/oracle/product/19.2.0/db_1

mkdir -p /oracle/app/oraInventory

chown -R grid:oinstall /oracle/app

chown -R oracle:oinstall /oracle/app/oracle

chmod -R 775 /oracle/app

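1.3.5 Configure environment variables

All three nodes need these environment variables. I configured one node and then cloned it, which makes adjusting the other nodes afterwards much simpler.

Environment variables for the oracle user: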

#su - oracle

#vi ~/.bash_profile

PS1="[`whoami`@`hostname`:"'$PWD]$'

export PS1

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_BASE=/oracle/app/oracle

export ORACLE_HOME=$ORACLE_BASE/product/19.2.0/db_1

export ORACLE_SID=orcl1 # instance SID on this node; use orcl2 and orcl3 on the other two nodes

export PATH=/usr/sbin:$PATH

export PATH=$ORACLE_HOME/bin:$PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

export NLS_LANG=AMERICAN_AMERICA.AL32UTF8

export LC_ALL=en_US.UTF-8

#alias sqlplus="rlwrap sqlplus"

#alias rman="rlwrap rman"

THREADS_FLAG=native; export THREADS_FLAG

if [ $USER = "oracle" ] || [ $USER = "grid" ]; then

if [ $SHELL = "/bin/ksh" ]; then

ulimit -p 16384

ulimit -n 65536

else

ulimit -u 16384 -n 65536

fi

umask 022

fi

Environment variables for the grid user:

#su - grid

#vi ~/.bash_profile

PS1="[`whoami`@`hostname`:"'$PWD]$'

export PS1

umask 022

export TMP=/tmp

export TMPDIR=$TMP

export ORACLE_BASE=/oracle/app/grid

export ORACLE_HOME=/oracle/app/19.2.0/grid

export ORACLE_SID=+ASM1 # ASM instance SID on this node; use +ASM2 and +ASM3 on the other two nodes

export PATH=/usr/sbin:$PATH

export PATH=$ORACLE_HOME/bin:$PATH

export LD_LIBRARY_PATH=$ORACLE_HOME/lib:/lib:/usr/lib

export CLASSPATH=$ORACLE_HOME/JRE:$ORACLE_HOME/jlib:$ORACLE_HOME/rdbms/jlib

#alias sqlplus="rlwrap sqlplus" # rlwrap is not among the packages installed above; enable this only after installing it

THREADS_FLAG=native; export THREADS_FLAG

if [ $USER = "oracle" ] || [ $USER = "grid" ]; then

if [ $SHELL = "/bin/ksh" ]; then

ulimit -p 16384

ulimit -n 65536

else

ulimit -u 16384 -n 65536

fi

umask 022

fi
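Because the profiles are cloned from one node, the only per-node difference is the instance number in ORACLE_SID. A minimal POSIX sh sketch that derives it from the host name, assuming host names of the form rac<NN>[.domain] as in the address plan; the function names node_number and sid_for_host are made up for this example.

```shell
#!/bin/sh
# Sketch: derive the per-node instance number from the host name so that a
# cloned ~/.bash_profile needs no manual edits.
# Assumption: host names end in the node number (rac01, rac02.hecf.cn, ...).

# node_number HOST -> node number without leading zeros (rac02.hecf.cn -> 2)
node_number() {
  local short digits
  short=${1%%.*}               # drop the domain: rac02.hecf.cn -> rac02
  digits=${short##*[!0-9]}     # keep the trailing digits: rac02 -> 02
  [ -n "$digits" ] || return 1 # no trailing digits: not a RAC node name
  expr "$digits" + 0           # "02" -> 2 (expr reads it as decimal)
}

# sid_for_host HOST PREFIX -> PREFIX followed by the node number
# (rac02.hecf.cn orcl -> orcl2, rac03 +ASM -> +ASM3)
sid_for_host() {
  local n
  n=$(node_number "$1") || return 1
  echo "${2}${n}"
}
```

With the two functions defined earlier in the profile, the oracle user's line could read export ORACLE_SID=$(sid_for_host "$(hostname)" orcl) and the grid user's export ORACLE_SID=$(sid_for_host "$(hostname)" +ASM). This only holds while instance numbers follow node numbers, which is worth confirming with srvctl status database -d orcl after the database is created.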

1.4 Modify operating system parameters

1.4.1 Disable the firewall

#systemctl stop firewalld

#systemctl disable firewalld

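1.4.2 Disable SELinux

#vi /etc/selinux/config

Change SELINUX=enforcing to SELINUX=disabled. This takes effect after a reboot; until then, setenforce 0 switches SELinux to permissive mode immediately.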
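The same edit can be scripted with sed, which is handy when preparing the template node before cloning. A sketch; disable_selinux_config is a hypothetical helper name, and the file argument defaults to /etc/selinux/config.

```shell
#!/bin/sh
# disable_selinux_config [FILE]: set SELINUX=disabled in FILE (default
# /etc/selinux/config). Only the line starting with "SELINUX=" is changed;
# "SELINUXTYPE=" does not match because "=" must follow "SELINUX" directly.
disable_selinux_config() {
  local cfg=${1:-/etc/selinux/config}
  [ -w "$cfg" ] || { echo "cannot write $cfg" >&2; return 1; }
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$cfg"
}
```

Run as root, disable_selinux_config && setenforce 0 applies both steps; getenforce then reports Permissive until the next reboot and Disabled afterwards.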

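1.4.3 Disable Transparent HugePages

(1) Check the transparent_hugepage status

#cat /sys/kernel/mm/transparent_hugepage/enabled

always madvise [never] (the value in brackets is the active mode; [never] means THP is disabled)

(2) Disable transparent_hugepage at boot

#vi /etc/rc.local # edit rc.local and add the following lines

if test -f /sys/kernel/mm/transparent_hugepage/enabled; then

echo never > /sys/kernel/mm/transparent_hugepage/enabled

fi

On Oracle Linux 7 the sysfs path is /sys/kernel/mm/transparent_hugepage/; the redhat_transparent_hugepage directory only exists on RHEL/Oracle Linux 6 kernels. Also, /etc/rc.local only runs at boot when it is executable: #chmod +x /etc/rc.d/rc.local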
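To check every node after the reboot without reading the bracket by eye, the active mode can be extracted from the status line. A sketch, assuming the Oracle Linux 7 sysfs path; thp_mode is an illustrative name.

```shell
#!/bin/sh
# thp_mode [FILE]: print the active THP mode, i.e. the word inside [brackets].
# The default FILE is the Oracle Linux 7 location.
thp_mode() {
  local f=${1:-/sys/kernel/mm/transparent_hugepage/enabled}
  [ -r "$f" ] || return 1
  # "always madvise [never]" -> "never"
  sed -n 's/.*\[\([a-z]*\)].*/\1/p' "$f"
}
```

On each node, [ "$(thp_mode)" = never ] && echo "THP disabled" should print the message once the rc.local change is active.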

1.4.4 Configure /etc/security/limits.conf

#vi /etc/security/limits.conf

grid soft nproc 16384

grid hard nproc 65536

grid soft nofile 32768

grid hard nofile 65536

grid soft stack 32768

grid hard stack 65536

grid soft memlock -1

grid hard memlock -1

oracle soft nproc 16384

oracle hard nproc 65536

oracle soft nofile 32768

oracle hard nofile 65536

oracle soft stack 32768

oracle hard stack 65536

oracle soft memlock -1

oracle hard memlock -1

#vi /etc/pam.d/login

session required pam_limits.so
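The grid and oracle blocks differ only in the user name, so the 16 lines can be generated instead of typed. A sketch that prints them with the values listed above; rac_limits is an illustrative helper, and its output is meant to be reviewed before being appended to /etc/security/limits.conf.

```shell
#!/bin/sh
# rac_limits USER...: print the limits.conf entries listed above for each user
# (soft/hard pairs for nproc, nofile, stack and memlock).
rac_limits() {
  local u
  for u in "$@"; do
    printf '%s soft nproc 16384\n%s hard nproc 65536\n' "$u" "$u"
    printf '%s soft nofile 32768\n%s hard nofile 65536\n' "$u" "$u"
    printf '%s soft stack 32768\n%s hard stack 65536\n' "$u" "$u"
    printf '%s soft memlock -1\n%s hard memlock -1\n' "$u" "$u"
  done
}
```

As root, rac_limits grid oracle >> /etc/security/limits.conf adds the entries once; after logging in again as grid, ulimit -Hn should report 65536.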

1.4.5 Configure /etc/sysctl.conf

# vi /etc/sysctl.conf

fs.aio-max-nr = 4194304

fs.file-max = 6815744

kernel.shmall = 4194304

kernel.shmmax = 16106127360

kernel.shmmni = 4096

kernel.sem = 250 32000 100 128

kernel.panic_on_oops = 1

kernel.panic = 10

net.ipv4.ip_local_port_range = 9000 65500

net.core.rmem_default = 262144

net.core.rmem_max = 4194304

net.core.wmem_default = 262144

net.core.wmem_max = 4194304

net.ipv4.tcp_moderate_rcvbuf=1

net.ipv4.tcp_rmem = 4096 87380 4194304

net.ipv4.tcp_wmem = 4096 16384 4194304

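# ens34/ens35/ens36 are the interface names on the author's nodes; replace them with your own (check with ip addr). For private interconnect interfaces Oracle's guidance is rp_filter = 0 (disabled) or 2 (loose).

net.ipv4.conf.ens36.rp_filter = 2

net.ipv4.conf.ens35.rp_filter = 2

net.ipv4.conf.ens34.rp_filter = 1

vm.vfs_cache_pressure=200

vm.swappiness=10

vm.min_free_kbytes=102400

Load the new settings without rebooting:

#sysctl -p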
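After loading the file, it is worth confirming that the running kernel actually reports the intended values, since a typo in a key name is easy to miss. A sketch; check_sysctl and the SYSCTL_ROOT override are names made up for this example, and SYSCTL_ROOT exists only so the function can be tried against a scratch directory instead of /proc/sys.

```shell
#!/bin/sh
# check_sysctl KEY EXPECTED: compare the running kernel value of KEY with EXPECTED.
# SYSCTL_ROOT defaults to /proc/sys.
check_sysctl() {
  local root=${SYSCTL_ROOT:-/proc/sys} path actual want
  path="$root/$(echo "$1" | tr . /)"    # kernel.sem -> /proc/sys/kernel/sem
  [ -r "$path" ] || { echo "$1: not found"; return 2; }
  # /proc separates multi-value keys (kernel.sem, tcp_rmem) with tabs, so
  # squeeze all blanks to single spaces before comparing
  actual=$(tr -s ' \t' ' ' < "$path")
  want=$(echo "$2" | tr -s ' \t' ' ')
  if [ "$actual" = "$want" ]; then
    echo "$1: ok"
  else
    echo "$1: $actual (expected $want)"
    return 1
  fi
}
```

For example, check_sysctl kernel.sem "250 32000 100 128" and check_sysctl kernel.shmmax 16106127360 should both print ok on every node.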

1.4.6 Disable ZEROCONF

#vi /etc/sysconfig/network

NOZEROCONF=yes

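1.4.7 Modify the chronyd configuration

chrony keeps the clocks of all the servers synchronized.

#vi /etc/chrony.conf

Comment out the following four lines and add one line for the local time server: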

#server 0.pool.ntp.org iburst

#server 1.pool.ntp.org iburst

#server 2.pool.ntp.org iburst

#server 3.pool.ntp.org iburst

server 192.168.5.149 iburst
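The edit can also be made with sed, so that re-running it on a cloned node changes nothing. A sketch; point_chrony_to is an illustrative name, and it also handles "pool" lines in case a node's chrony.conf uses that directive instead of "server".

```shell
#!/bin/sh
# point_chrony_to SERVER [FILE]: comment out every active "server"/"pool" line
# in FILE (default /etc/chrony.conf) except "server SERVER iburst", and append
# that line if it is missing, so running it twice changes nothing.
point_chrony_to() {
  local srv=$1 cfg=${2:-/etc/chrony.conf} line
  line="server $srv iburst"
  sed -i -E "/^${line}\$/!s/^(server|pool)[[:space:]]/#&/" "$cfg"
  grep -qxF "$line" "$cfg" || echo "$line" >> "$cfg"
}
```

After point_chrony_to 192.168.5.149, restart the daemon with systemctl restart chronyd; chronyc sources should then list 192.168.5.149, marked with * once it is the selected source.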

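1.4.8 Modify /etc/resolv.conf

This file is rewritten automatically whenever the network configuration changes, and such changes cause problems during the software installation, so make it read-only (immutable).

#vi /etc/resolv.conf

search hecf.cn

nameserver 192.168.5.149

nameserver 192.168.5.148

#chattr +i /etc/resolv.conf

About the second nameserver (the GNS address): without it, the Grid installation checks report a subdomain delegation error; with it, they report a resolv.conf consistency error. I never figured out why, ignored the warning, and the installation worked fine. To edit the file again later, first remove the flag with chattr -i /etc/resolv.conf.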

1.4.9 Modify /etc/hosts

#vi /etc/hosts

127.0.0.1 localhost localhost.localdomain localhost4 localhost4.localdomain4

::1 localhost localhost.localdomain localhost6 localhost6.localdomain6

192.168.5.150 rac01.hecf.cn rac01

192.168.5.151 rac02.hecf.cn rac02

192.168.5.152 rac03.hecf.cn rac03

192.168.5.148 gns.hecf.cn gns

192.168.4.150 rac01priv.hecf.cn rac01priv

192.168.4.151 rac02priv.hecf.cn rac02priv

192.168.4.152 rac03priv.hecf.cn rac03priv
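The node entries follow a fixed pattern (public 192.168.5.15x, private 192.168.4.15x), so they can be generated, for example when adding a node later. A sketch assuming that numbering scheme; rac_hosts is an illustrative helper, and the localhost and gns lines are still written by hand.

```shell
#!/bin/sh
# rac_hosts N DOMAIN PUBLIC_NET PRIVATE_NET FIRST: print /etc/hosts lines for
# nodes rac01..racNN with public addresses PUBLIC_NET.FIRST upwards, then the
# racNNpriv names with private addresses PRIVATE_NET.FIRST upwards.
rac_hosts() {
  local n=$1 domain=$2 first=$5 net ip_net suffix i name
  for net in "$3:" "$4:priv"; do        # "network:name-suffix" pairs
    ip_net=${net%%:*}
    suffix=${net#*:}
    i=1
    while [ "$i" -le "$n" ]; do
      name=$(printf 'rac%02d%s' "$i" "$suffix")
      printf '%s.%d %s.%s %s\n' "$ip_net" $((first + i - 1)) "$name" "$domain" "$name"
      i=$((i + 1))
    done
  done
}
```

rac_hosts 3 hecf.cn 192.168.5 192.168.4 150 reproduces the six node lines above; with 4 instead of 3 it also emits the rac04 entries that the DNS zone file reserves.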

1.4.10 Modify /etc/iscsi/initiatorname.iscsi

Edit the file as needed:

#vi /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.1988-12.com.oracle:295aaeabd01

I suggest making the last two digits match the server number.
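Following that suggestion, each clone's initiator name is the template name with the node number substituted at the end. A sketch assuming exactly that convention; initiator_for_node is a hypothetical helper.

```shell
#!/bin/sh
# initiator_for_node BASE_IQN N: replace the last two characters of BASE_IQN
# with the two-digit node number N.
initiator_for_node() {
  local n
  n=$(expr "$2" + 0) || return 1   # "02" or "2" -> 2 (avoids printf octal parsing)
  printf '%s%02d\n' "${1%??}" "$n"
}
```

On rac02, for example, as root: echo "InitiatorName=$(initiator_for_node iqn.1988-12.com.oracle:295aaeabd01 2)" > /etc/iscsi/initiatorname.iscsi, followed by systemctl restart iscsid so the daemon picks up the new name.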

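1.5 Cloning the nodes

At this point the node configuration is essentially complete. Clone this node twice (ideally make one extra clone as well: if an installation goes wrong, you can use it as a template and start over). After cloning, change the following on each clone, then reboot all three servers:

(1) IP addresses; changing them in the graphical interface is convenient

(2) Host name: hostnamectl set-hostname rac02.hecf.cn or hostnamectl set-hostname rac03.hecf.cn

(3) Environment variables (ORACLE_SID)

(4) The iSCSI initiator name, which is needed later when configuring the iSCSI server

#vi /etc/iscsi/initiatorname.iscsi

Give the names a consistent pattern, but they must not be identical.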

1.6 DNS/GNS server configuration

The DNS configuration in this part is based on https://www.cndba.cn/marvinn/article/2766

1.6.1 Install DNS

yum install bind-libs bind bind-utils

rpm -qa | grep "^bind"

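1.6.2 Configure DNS

1.6.2.1 Modify named.conf

After installation, BIND's main configuration file is /etc/named.conf, the zone declarations are in /etc/named.rfc1912.zones, and the zone data files are under /var/named/. The /etc/named.conf used here: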

//

// named.conf

//

// Provided by Red Hat bind package to configure the ISC BIND named(8) DNS

// server as a caching only nameserver (as a localhost DNS resolver only).

//

// See /usr/share/doc/bind*/sample/ for example named configuration files.

//

// See the BIND Administrator's Reference Manual (ARM) for details about the

// configuration located in /usr/share/doc/bind-{version}/Bv9ARM.html

options {

// listen-on port 53 { 127.0.0.1; };

// listen-on-v6 port 53 { ::1; };

directory "/var/named";

dump-file "/var/named/data/cache_dump.db";

statistics-file "/var/named/data/named_stats.txt";

memstatistics-file "/var/named/data/named_mem_stats.txt";

recursing-file "/var/named/data/named.recursing";

secroots-file "/var/named/data/named.secroots";

// allow-query { localhost; };

/*

- If you are building an AUTHORITATIVE DNS server, do NOT enable recursion.

- If you are building a RECURSIVE (caching) DNS server, you need to enable

recursion.

- If your recursive DNS server has a public IP address, you MUST enable access

control to limit queries to your legitimate users. Failing to do so will

cause your server to become part of large scale DNS amplification

attacks. Implementing BCP38 within your network would greatly

reduce such attack surface

*/

recursion yes;

dnssec-enable yes;

dnssec-validation yes;

dnssec-lookaside auto;

/* Path to ISC DLV key */

bindkeys-file "/etc/named.root.key";

managed-keys-directory "/var/named/dynamic";

pid-file "/run/named/named.pid";

session-keyfile "/run/named/session.key";

};

logging {

channel default_debug {

file "data/named.run";

severity dynamic;

};

};

zone "." IN {

type hint;

// file "named.ca";

file "/dev/null";

};

include "/etc/named.rfc1912.zones";

include "/etc/named.root.key";

1.6.2.2 Configure the forward and reverse zones

Edit /etc/named.rfc1912.zones and add the forward and reverse zone definitions:

// named.rfc1912.zones:

//

// Provided by Red Hat caching-nameserver package

//

// ISC BIND named zone configuration for zones recommended by

// RFC 1912 section 4.1 : localhost TLDs and address zones

// and http://www.ietf.org/internet-drafts/draft-ietf-dnsop-default-local-zones-02.txt

// (c)2007 R W Franks

//

// See /usr/share/doc/bind*/sample/ for example named configuration files.

//

zone "localhost.localdomain" IN {

type master;

file "named.localhost";

allow-update { none; };

};

zone "localhost" IN {

type master;

file "named.localhost";

allow-update { none; };

};

zone "1.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.0.ip6.arpa" IN {

type master;

file "named.loopback";

allow-update { none; };

};

zone "1.0.0.127.in-addr.arpa" IN {

type master;

file "named.loopback";

allow-update { none; };

};

zone "0.in-addr.arpa" IN {

type master;

file "named.empty";

allow-update { none; };

};

zone "hecf.cn" IN { // the zone name is your domain (hecf.cn here)

type master;

file "hecf.cn.zone"; // the zone file name can be chosen freely

allow-update { none; };

};

zone "5.168.192.in-addr.arpa" IN { // reverse zone: the network octets reversed, followed by in-addr.arpa

type master;

file "5.168.192.local"; // the zone file name can be chosen freely

allow-update { none; };

};

zone "4.168.192.in-addr.arpa" IN { // reverse zone for the private network 192.168.4.0/24

type master;

file "4.168.192.local";

allow-update { none; };

};

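1.6.2.3 Configure the forward zone file

The named.conf above sets the zone directory to /var/named, so create the forward zone file in that directory, using the file name declared in the zone definition.

#touch /var/named/hecf.cn.zone

#chgrp named /var/named/hecf.cn.zone

#vi /var/named/hecf.cn.zone

Add the following content: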

$TTL 3D

@ IN SOA dns.hecf.cn. root.hecf.cn. (

42 ; serial (d.adams)

3H ; refresh

15M ; retry

1W ; expiry

1D) ; minimum

IN NS dns.hecf.cn.

dns IN A 192.168.5.149

gns IN A 192.168.5.148

rac01 IN A 192.168.5.150

rac02 IN A 192.168.5.151

rac03 IN A 192.168.5.152

rac04 IN A 192.168.5.153

rac01priv IN A 192.168.4.150

rac02priv IN A 192.168.4.151

rac03priv IN A 192.168.4.152

rac04priv IN A 192.168.4.153

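rac IN NS gns

; The line above is the key to whether GNS-based SCAN resolution works at all. It delegates the subdomain rac.hecf.cn to the name server gns, whose address is 192.168.5.148 (the gns A record above).

; I spent more than a week on this: several tutorials leave it out, and the installation fails without it.

; This is the key to a GNS-based RAC installation; what finally solved it for me was this post: http://blog.sina.com.cn/s/blog_701a48e70102w6gv.html

$ORIGIN hecf.cn.

@ IN NS gns.hecf.cn.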

1.6.2.4 Configure the reverse zone files

Create the reverse zone files in /var/named. Their names are again the ones declared in the zone definitions: 5.168.192.local and 4.168.192.local.

[root@server ~]# touch /var/named/5.168.192.local

[root@server ~]# chgrp named /var/named/5.168.192.local

[root@server ~]# vi /var/named/5.168.192.local

Add the following content:

$TTL 3D

@ IN SOA dns.hecf.cn. root.hecf.cn. (

1997022700 ; Serial

28800 ; Refresh

14400 ; Retry

3600000 ; Expire

86400) ; Minimum

IN NS dns.hecf.cn.

149 IN PTR dns.hecf.cn.

148 IN PTR gns.hecf.cn.

150 IN PTR rac01.hecf.cn.

151 IN PTR rac02.hecf.cn.

152 IN PTR rac03.hecf.cn.

153 IN PTR rac04.hecf.cn.

[root@server ~]# touch /var/named/4.168.192.local

[root@server ~]# chgrp named /var/named/4.168.192.local

[root@server ~]# vi /var/named/4.168.192.local

Add the following content:

$TTL 3D

@ IN SOA dns.hecf.cn. root.hecf.cn. (

1997022700 ; Serial

28800 ; Refresh

14400 ; Retry

3600000 ; Expire

86400) ; Minimum

IN NS dns.hecf.cn.

149 IN PTR dns.hecf.cn.

148 IN PTR gns.hecf.cn.

150 IN PTR rac01priv.hecf.cn.

151 IN PTR rac02priv.hecf.cn.

152 IN PTR rac03priv.hecf.cn.

153 IN PTR rac04priv.hecf.cn.
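The reverse zone name and each PTR line follow mechanically from the IP address, which is where copy-and-paste mistakes tend to creep in. A sketch for /24 networks like the two used here; reverse_zone and ptr_record are illustrative names.

```shell
#!/bin/sh
# reverse_zone IP: name of the /24 reverse zone that holds IP
# (192.168.5.150 -> 5.168.192.in-addr.arpa).
reverse_zone() {
  local o1 o2 o3 rest
  o1=${1%%.*}; rest=${1#*.}
  o2=${rest%%.*}; rest=${rest#*.}
  o3=${rest%%.*}
  echo "$o3.$o2.$o1.in-addr.arpa"
}

# ptr_record IP FQDN: PTR line for that zone file, with exactly one trailing
# dot on the name (192.168.5.150 rac01.hecf.cn -> "150 IN PTR rac01.hecf.cn.").
ptr_record() {
  echo "${1##*.} IN PTR ${2%.}."
}
```

Before starting named, the files can be validated with the checkers that ship with BIND, e.g. named-checkconf, named-checkzone hecf.cn /var/named/hecf.cn.zone and named-checkzone 5.168.192.in-addr.arpa /var/named/5.168.192.local; afterwards dig -x 192.168.5.150 @192.168.5.149 should return rac01.hecf.cn.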

1.6.2.5 Verify DNS

#systemctl start named

#systemctl enable named

#nslookup rac01.hecf.cn

Server: 192.168.5.149

Address: 192.168.5.149#53

Name: rac01.hecf.cn

Address: 192.168.5.150

#nslookup rac02.hecf.cn

Server: 192.168.5.149

Address: 192.168.5.149#53

Name: rac02.hecf.cn

Address: 192.168.5.151

#nslookup rac03.hecf.cn

Server: 192.168.5.149

Address: 192.168.5.149#53

Name: rac03.hecf.cn

Address: 192.168.5.152

1.7 DHCP server configuration

# cat /etc/dhcp/dhcpd.conf

#

# DHCP Server Configuration file.

# see /usr/share/doc/dhcp*/dhcpd.conf.example

# see dhcpd.conf(5) man page

#

ddns-update-style interim;

ignore client-updates;

subnet 192.168.5.0 netmask 255.255.255.0 {

# --- default gateway

# option routers 192.168.5.1;

option subnet-mask 255.255.255.0;

option nis-domain "hecf.cn";

option domain-name "hecf.cn";

option domain-name-servers 192.168.5.149, 192.168.5.148;

option time-offset 28800; # UTC+8 (China Standard Time)

option ip-forwarding off;

range dynamic-bootp 192.168.5.240 192.168.5.249;

default-lease-time 21600;

max-lease-time 43200;

}

Start the DHCP server and enable it at boot:

#systemctl start dhcpd

#systemctl enable dhcpd
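With GNS, the node VIPs and SCAN VIPs are leased from this DHCP range, so the range has to be large enough. A rough sizing sketch, assuming one VIP per node plus three SCAN VIPs (the GNS address 192.168.5.148 itself is static) and a range inside a single /24; the helper names are illustrative.

```shell
#!/bin/sh
# dhcp_range_size FIRST LAST: number of addresses in an inclusive range inside one /24
dhcp_range_size() {
  echo $(( ${2##*.} - ${1##*.} + 1 ))
}

# gns_dhcp_needed NODES [SCAN_VIPS]: one VIP per node plus the SCAN VIPs (default 3)
gns_dhcp_needed() {
  echo $(( $1 + ${2:-3} ))
}
```

For this setup the range 192.168.5.240 to 192.168.5.249 gives 10 addresses against 6 needed, leaving room for a fourth node. Once the cluster is running, nslookup scan.rac.hecf.cn should return addresses from this range.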

1.8 chrony server configuration

#cat /etc/chrony.conf

# Use public servers from the pool.ntp.org project.

# Please consider joining the pool (http://www.pool.ntp.org/join.html).

server 0.pool.ntp.org iburst

server 1.pool.ntp.org iburst

server 2.pool.ntp.org iburst

server 3.pool.ntp.org iburst

server 192.168.5.149 iburst

#just add this line here

# Record the rate at which the system clock gains/losses time.

driftfile /var/lib/chrony/drift

# Allow the system clock to be stepped in the first three updates

# if its offset is larger than 1 second.

makestep 1.0 3

# Enable kernel synchronization of the real-time clock (RTC).

rtcsync

# Enable hardware timestamping on all interfaces that support it.

#hwtimestamp *

# Increase the minimum number of selectable sources required to adjust

# the system clock.

#minsources 2

# Allow NTP client access from local network.

allow 192.168.0.0/16

#this line sets which client addresses may synchronize their time from this server

#allow 192.168.4.0/24

# Serve time even if not synchronized to a time source.

#local stratum 10

# Specify file containing keys for NTP authentication.

#keyfile /etc/chrony.keys

# Specify directory for log files.

logdir /var/log/chrony

# Select which information is logged.

#log measurements statistics tracking

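1.9 iSCSI server configuration

The following post describes the configuration in detail:

https://blog.csdn.net/gd0306/article/details/83715038

When creating the access control lists (ACLs), use the initiator names configured earlier on the nodes:

acls create iqn.1988-12.com.oracle:295aaeabd01 (this is the name configured in 1.4.10)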
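With three nodes there is one ACL per initiator name. A sketch that prints the ACL commands for nodes 01..N, assuming the naming scheme from 1.4.10 (last two characters = node number) and that the lines are entered in targetcli at the target's tpg1 level; the target IQN itself is not given in the original and is left out here.

```shell
#!/bin/sh
# acl_commands BASE_IQN N: print one "acls create" command per node 1..N,
# replacing the last two characters of BASE_IQN with the node number.
acl_commands() {
  local prefix=${1%??} n=$2 i=1
  while [ "$i" -le "$n" ]; do
    printf 'acls create %s%02d\n' "$prefix" "$i"
    i=$((i + 1))
  done
}
```

On each node the LUN is then attached with the standard open-iscsi commands, for example iscsiadm -m discovery -t sendtargets -p 192.168.5.149 followed by iscsiadm -m node --login; lsblk should then show the additional disk.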

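1.10 ASM disk group configuration

Once the iSCSI server is configured, attach the shared disk to every node; each node then sees one additional disk, for example /dev/sda.

On node 1, partition that disk. fdisk is enough for small disks; for large disks, convert to a GPT label and partition with parted (an MBR partition table cannot address more than 2 TiB, which this disk exceeds), or use the graphical partitioning tool in the OS. The partition sizes are OCR 500 GB, MGMT 100 GB, DATA 2 TB and FRA 50 GB, giving the four partitions /dev/sda1, /dev/sda2, /dev/sda3 and /dev/sda4.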
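For the GPT route, the four partitions can be laid out back to back in a single parted call. A sketch that only prints the parted commands (sizes in GiB from the plan above, with 2 TB taken as 2048 GiB); parted_plan is an illustrative helper, and /dev/sda is the author's device name, so confirm the shared disk with lsblk first, because mklabel wipes the existing partition table.

```shell
#!/bin/sh
# parted_plan NAME:SIZE_GIB ...: print parted script commands that create a GPT
# label and one partition per argument, placed back to back from 1 MiB
# (the first partition is therefore 1 MiB short of its nominal size).
parted_plan() {
  local start=1MiB end=0 spec name size
  echo "mklabel gpt"
  for spec in "$@"; do
    name=${spec%%:*}
    size=${spec#*:}
    end=$((end + size))
    echo "mkpart $name $start ${end}GiB"
    start=${end}GiB
  done
}
```

On node 1, parted -s /dev/sda $(parted_plan ocr:500 mgmt:100 data:2048 fra:50) passes the printed words to parted as one script (the unquoted substitution is split into separate arguments); on the other nodes, partprobe /dev/sda makes the new partitions visible.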

Next, create the ASM disks. There are two methods, udev and oracleasm; the oracleasm method is described below.

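Configure and load the ASMLib driver on every node:

#oracleasm configure -i

Enter grid as the default user and asmadmin as the default group, then answer yes to the remaining prompts.

#oracleasm init

Then create the disks on node 1 only:

#oracleasm createdisk asmdisk1 /dev/sda1

#oracleasm createdisk asmdisk2 /dev/sda2

#oracleasm createdisk asmdisk3 /dev/sda3

#oracleasm createdisk asmdisk4 /dev/sda4

#oracleasm scandisks

If no errors occur, four files appear in /dev/oracleasm/disks/, one for each ASM disk. On the other nodes, running oracleasm scandisks is enough for the ASM disks to appear.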

$oracleasm listdisks

ASMDISK1

ASMDISK2

ASMDISK3

ASMDISK4

If the output looks like this, with one line for each disk created above, ASM has been set up successfully.
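To run the same check on every node without comparing lists by eye, the listdisks output can be tested against the expected labels. A sketch; missing_asm_disks is an illustrative name, and the labels are the upper-case names oracleasm reports for the disks created above.

```shell
#!/bin/sh
# missing_asm_disks LABEL...: read "oracleasm listdisks" output on stdin and
# print every expected LABEL that is not listed; exit status 1 if any is missing.
missing_asm_disks() {
  local listed want rc=0
  listed=$(cat)
  for want in "$@"; do
    printf '%s\n' "$listed" | grep -qxF "$want" || { echo "$want"; rc=1; }
  done
  return $rc
}
```

On each node: oracleasm listdisks | missing_asm_disks ASMDISK1 ASMDISK2 ASMDISK3 ASMDISK4 && echo "all ASM disks visible".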

II. Grid installation

2.1 Software download

Download the two files LINUX.X64_193000_db_home.zip and LINUX.X64_193000_grid_home.zip from Oracle's website:

https://www.oracle.com/database/technologies/oracle19c-linux-downloads.html

2.2 Unzip and install

#su - grid

$cp your_download_dir/LINUX.X64_193000_grid_home.zip /oracle/app/19.2.0/grid/

$cd /oracle/app/19.2.0/grid

$unzip LINUX.X64_193000_grid_home.zip

Then log in to the graphical desktop as the grid user and run:

cd /oracle/app/19.2.0/grid

./gridSetup.sh

and the long-awaited installer appears.

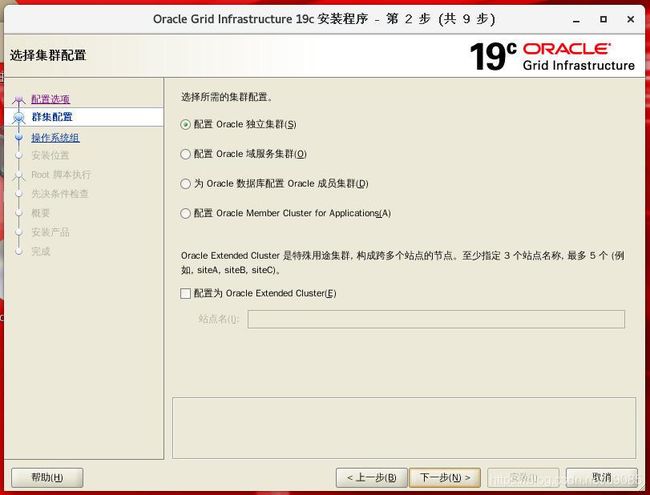

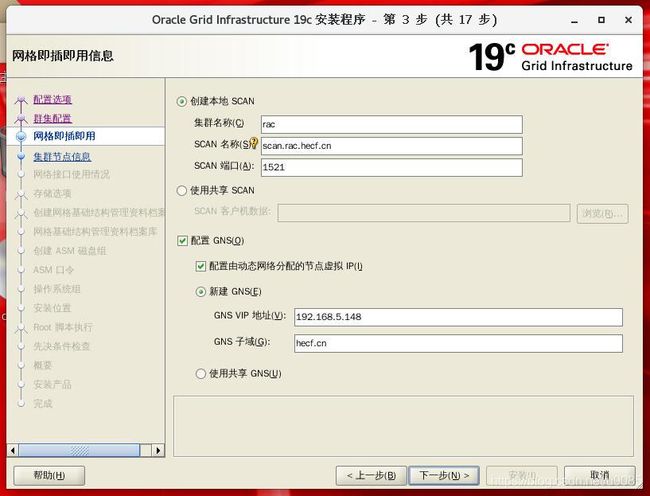

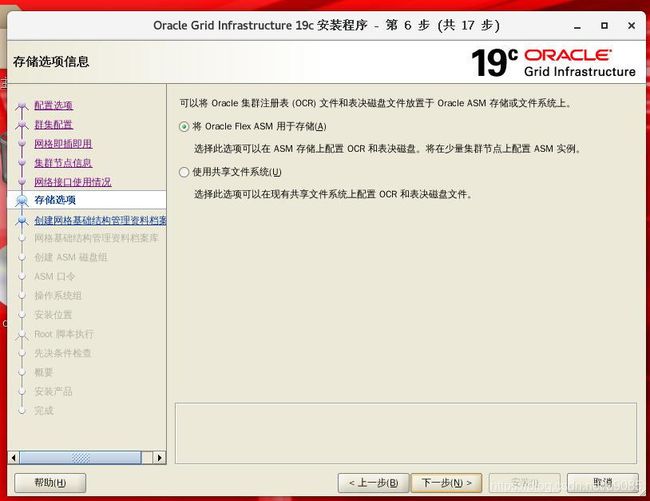

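This step is both tricky and critical. Be careful, because a mistake here can leave the cluster unstable after installation. The cluster name must be the one set up on the DNS server (the delegated subdomain rac, i.e. rac.hecf.cn), and the SCAN name is the SCAN prefix plus the cluster name plus the domain name, for example scan.rac.hecf.cn.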

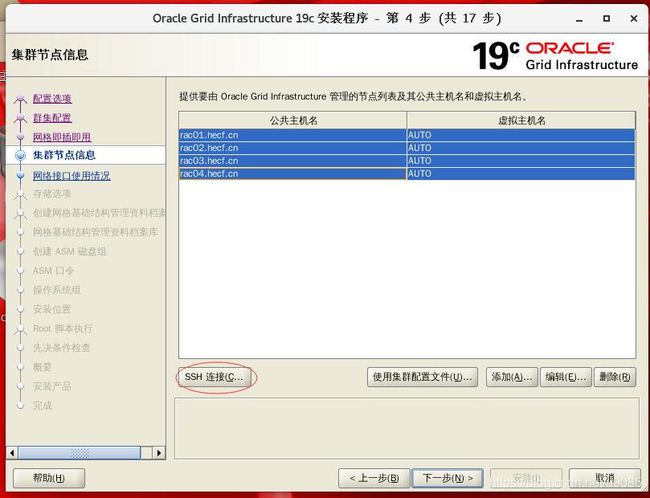

Click Add; the following dialog appears:

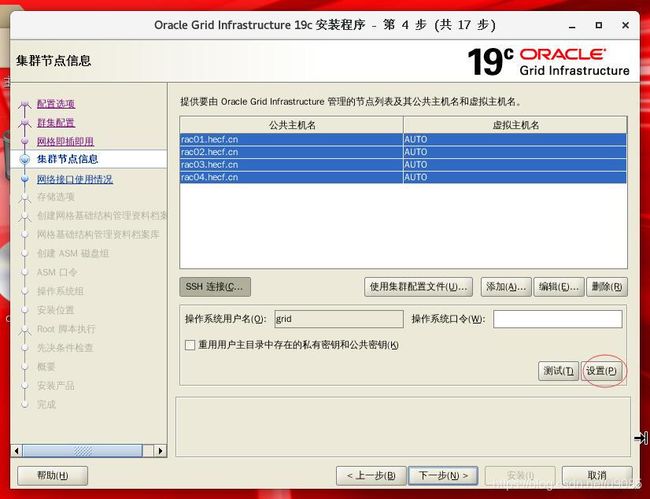

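After you confirm, the host is added to the list. Then click SSH connectivity, enter the password and set up SSH user equivalence between the nodes.

Remember to change the disk group name from DATA to OCR; I use DATA later for the database files. Because the disks already sit on RAID-protected storage, no extra redundancy is needed here, so I chose External. Click Change Discovery Path to find the ASM disks created earlier (for ASMLib disks the discovery path is typically /dev/oracleasm/disks/*) and select the ones you need. The machine I took these screenshots on has no ASM configured, so there are no screenshots of the remaining steps; just click through them, none of them is difficult.

I borrowed a screenshot for the next step: here you must choose to run the root scripts automatically and enter the root password, otherwise finishing the installation by hand is exhausting.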

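2.3 Remaining installation steps

For the rest (installing the database software, creating disk groups with ASMCA and creating the database with DBCA), see the following very detailed post:

https://blog.csdn.net/lihuarongaini/article/details/100077955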

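III. Basic database usage

3.1 Startup and shutdown

Starting, stopping and checking the cluster (as root):

/oracle/app/19.2.0/grid/bin/crsctl start cluster -all

/oracle/app/19.2.0/grid/bin/crsctl stop cluster -all

/oracle/app/19.2.0/grid/bin/crsctl check cluster -all

Starting and stopping the database instances (as root):

/oracle/app/19.2.0/grid/bin/srvctl start database -d orcl

/oracle/app/19.2.0/grid/bin/srvctl stop database -d orcl

Starting and stopping the listener (as grid), on all nodes or on a single node:

srvctl stop listener

srvctl start listener

srvctl start listener -n rac01

srvctl stop listener -n rac01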

3.2 Creating and dropping tablespaces

#su - oracle

#sqlplus /nolog

sql>conn / as sysdba

sql>CREATE TEMPORARY TABLESPACE tk_TEMP

TEMPFILE '+DATA/ORCL/DATAFILE/tk_TEMP.DBF'

SIZE 32M

AUTOEXTEND ON

NEXT 32M MAXSIZE UNLIMITED

EXTENT MANAGEMENT LOCAL;

sql>CREATE TABLESPACE tk_DATA

LOGGING

DATAFILE '+DATA/ORCL/DATAFILE/tk_DATA.DBF'

SIZE 32M

AUTOEXTEND ON

NEXT 32M MAXSIZE UNLIMITED

EXTENT MANAGEMENT LOCAL;

sql>drop tablespace tk_DATA including contents and datafiles cascade constraints;

3.3 Creating a PDB

sql>CREATE PLUGGABLE DATABASE pdba

ADMIN USER admin_user IDENTIFIED BY password ROLES=(dba)

DEFAULT TABLESPACE tk_DATA;

-- Switch the current session to the PDB

sql>alter session set container=pdba;

-- Open or close the PDB on all RAC instances

sql>alter pluggable database pdba open instances=ALL;

sql>alter pluggable database pdba close instances=ALL;

-- Drop the PDB (it must be closed first)

sql>drop pluggable database pdba including datafiles;

-- Give a user quota on the tablespace (run inside the PDB)

sql>ALTER USER username QUOTA UNLIMITED ON tk_DATA;

3.4 tnsnames.ora configuration

cat /oracle/app/oracle/product/19.2.0/db_1/network/admin/tnsnames.ora

# tnsnames.ora Network Configuration File: /oracle/app/oracle/product/19.2.0/db_1/network/admin/tnsnames.ora

# Generated by Oracle configuration tools.

ORCL =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = scan.rac.hecf.cn)(PORT = 1521))

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = orcl.hecf.cn)

)

)

PDBTK =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = scan.rac.hecf.cn)(PORT = 1521))

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = pdba.hecf.cn)

)

)

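3.5 Importing a DMP file into a PDB

impdp username/password@PDBTK remap_tablespace=old_tablespace:new_tablespace remap_schema=old_user:new_user FULL=Y transform=oid:n directory=DATA_PUMP_DIR dumpfile=file_name

PDBTK is the tnsnames.ora alias above that points to the PDB service pdba.hecf.cn. The dump file must be in the operating-system directory that DATA_PUMP_DIR refers to in that PDB; because a SCAN connection can land on any instance, keep it on storage that every node can see, or on the node you connect to.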