HBase API Operations

1. Environment Setup

1) Create a new Maven project and add the dependencies to pom.xml

<dependencies>
    <dependency>
        <groupId>org.apache.hbase</groupId>
        <artifactId>hbase-server</artifactId>
        <version>2.2.3</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hbase</groupId>
        <artifactId>hbase-client</artifactId>
        <version>2.2.3</version>
    </dependency>
    <dependency>
        <groupId>jdk.tools</groupId>
        <artifactId>jdk.tools</artifactId>
        <version>1.8</version>
        <scope>system</scope>
        <!-- Machine-specific: point this at the tools.jar of your local JDK 8 -->
        <systemPath>C:/Program Files/Java/jdk1.8.0_231/lib/tools.jar</systemPath>
    </dependency>
</dependencies>

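2) Copy conf/hbase-site.xml from the HBase installation into the project (for example into src/main/resources, so that it is on the classpath when HBaseConfiguration.create() runs)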

3) Configure log4j.properties

Create a file named log4j.properties in the resources directory with the following content:

log4j.rootLogger=INFO, stdout
log4j.appender.stdout=org.apache.log4j.ConsoleAppender
log4j.appender.stdout.layout=org.apache.log4j.PatternLayout
log4j.appender.stdout.layout.ConversionPattern=%d %p [%c] - %m%n
# The "logfile" appender below is only active if it is also listed in rootLogger,
# e.g. log4j.rootLogger=INFO, stdout, logfile
log4j.appender.logfile=org.apache.log4j.FileAppender
log4j.appender.logfile.File=target/spring.log
log4j.appender.logfile.layout=org.apache.log4j.PatternLayout
log4j.appender.logfile.layout.ConversionPattern=%d %p [%c] - %m%n

4) Add the hostname-to-IP mappings of the HBase cluster nodes to the hosts file

This is required when the client runs on Windows, as in this setup.

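The hosts file is located in:

C:\Windows\System32\drivers\etc\

Example:

Because my HBase cluster is built with Docker, all nodes share the same host IP when accessed from the public network: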

111.222.33.44 cluster-hdfs-master
111.222.33.44 cluster-hdfs-slave1
111.222.33.44 cluster-hdfs-slave2
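After editing the hosts file, it can help to confirm that the JVM actually resolves the cluster hostnames before involving HBase at all. Below is a minimal sketch that uses only `java.net.InetAddress`; the class `HostsCheck` and its method `resolveAll` are hypothetical helpers for illustration, and the hostnames are the ones from the example above.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;
import java.util.Arrays;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper for illustration; not part of HBase.
public class HostsCheck {

    // Resolves each hostname through the JVM resolver, which normally honors the
    // OS hosts file. Unresolvable names are mapped to null.
    static Map<String, String> resolveAll(List<String> hostnames) {
        Map<String, String> result = new LinkedHashMap<>();
        for (String host : hostnames) {
            try {
                result.put(host, InetAddress.getByName(host).getHostAddress());
            } catch (UnknownHostException e) {
                result.put(host, null);
            }
        }
        return result;
    }

    public static void main(String[] args) {
        List<String> hosts = Arrays.asList(
                "cluster-hdfs-master", "cluster-hdfs-slave1", "cluster-hdfs-slave2");
        for (Map.Entry<String, String> e : resolveAll(hosts).entrySet()) {
            String ip = e.getValue();
            System.out.println(e.getKey() + " -> "
                    + (ip == null ? "UNRESOLVED, check the hosts file" : ip));
        }
    }
}
```

If the hosts entries above are in effect, all three names should resolve to 111.222.33.44.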

2. HBase API

1) Create a connection and obtain the Admin object

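Pay particular attention here: the Java client runs locally (Windows 10), while the HBase cluster is deployed on Tencent Cloud (CentOS 7). With three separate machines this would be much simpler, but here the three nodes are Docker containers on a single host. Inside the host there is no problem: each container has its own internal IP, so all of them can use the same ports without interfering with each other when the services start. From the public network, however, there is only one cloud host IP.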

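Therefore, each node's hbase-site.xml needs its own RegionServer port settings, and each of those ports must be mapped to the same port on the host. (Don't forget to open these ports in the cloud security group as well.)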

#1 hbase-site.xml on master

<property>
    <name>hbase.regionserver.port</name>
    <value>16020</value>
    <description>The port the HBase RegionServer binds to.</description>
</property>
<property>
    <name>hbase.regionserver.info.port</name>
    <value>16030</value>
    <description>The port for the HBase RegionServer web UI
    Set to -1 if you do not want the RegionServer UI to run.
    </description>
</property>

#2 hbase-site.xml on slave1

<property>
    <name>hbase.regionserver.port</name>
    <value>16021</value>
    <description>The port the HBase RegionServer binds to.</description>
</property>
<property>
    <name>hbase.regionserver.info.port</name>
    <value>16031</value>
    <description>The port for the HBase RegionServer web UI
    Set to -1 if you do not want the RegionServer UI to run.
    </description>
</property>

#3 hbase-site.xml on slave2

<property>
    <name>hbase.regionserver.port</name>
    <value>16022</value>
    <description>The port the HBase RegionServer binds to.</description>
</property>
<property>
    <name>hbase.regionserver.info.port</name>
    <value>16032</value>
    <description>The port for the HBase RegionServer web UI
    Set to -1 if you do not want the RegionServer UI to run.
    </description>
</property>

#4 Ports to publish when starting the Docker containers:

# NameNode 50070, HDFS 9000, ZooKeeper client (zkCli) 2181
# HBase: hbase.master.port 16000
# HBase Master web UI 16010
# RegionServer 16020
# RegionServer web UI 16030
sudo docker run -p 9000:9000 -p 50070:50070 -p 2181:2181 -p 16000:16000 -p 16010:16010 -p 16020:16020 -p 16030:16030 --name master --privileged -id -h cluster-hdfs-master \
    centos:hbase /usr/sbin/init

# ResourceManager 8088, ZooKeeper client 2181 (published as 2182)
# RegionServer 16021
# RegionServer web UI 16031
sudo docker run -p 8088:8088 -p 2182:2181 -p 16021:16021 -p 16031:16031 --name slave1 --privileged -d -h cluster-hdfs-slave1 \
    centos:hbase /usr/sbin/init

# Secondary NameNode 50090, ZooKeeper client 2181 (published as 2183)
# RegionServer 16022
# RegionServer web UI 16032
sudo docker run -p 50090:50090 -p 2183:2181 -p 16022:16022 -p 16032:16032 --name slave2 --privileged -d -h cluster-hdfs-slave2 \
    centos:hbase /usr/sbin/init

As a result, from the public network the RegionServers of the three nodes are reachable at:

111.222.33.44:16020
111.222.33.44:16021
111.222.33.44:16022

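The host IP is the same, but the services in different containers are reached through different ports. This is necessary because of how a Java client connects to HBase through the API. Using the configuration from hbase-default.xml, hbase-site.xml and so on, the client: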


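- first queries ZooKeeper to obtain the cluster's node information (Master, RegionServers), including which RegionServer hosts the hbase:meta table;

- then connects to the RegionServers directly.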

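The client reaches each RegionServer on hbase.regionserver.port, which defaults to 16020. If hbase-site.xml does not give each container its own port, nothing conflicts on the host side, because each container listens on its own internal IP:16020. On the Java side, however, the configured hostnames differ but all resolve to the same host IP, so every RegionServer address becomes 111.222.33.44:16020. That single host port can be published by only one container, so when the client tries to reach every RegionServer at the same IP:PORT, all of them except the one behind that port (the master) are unreachable, and the connection to HBase fails.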

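A typical error looks like the following. Note that the call addressed to cluster-hdfs-slave2,16020 is answered by cluster-hdfs-master,16020, because both names resolve to the same public IP:port: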

...hostname=cluster-hdfs-slave2,16020,1583323917195, seqNum=-1, see https://s.apache.org/timeout
[org.apache.hadoop.hbase.client.RpcRetryingCallerImpl] - Call exception, tries=10, retries=16,
started=41146 ms ago, cancelled=false, msg=org.apache.hadoop.hbase.NotServingRegionException:
hbase:meta,,1 is not online on cluster-hdfs-master,16020,1583323922141
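The failure described above comes down to simple bookkeeping: the client ends up with one `ip:port` endpoint per RegionServer, and RegionServers that share an endpoint cannot all be reached. Below is a minimal, JDK-only sketch of that check; `EndpointCheck` and `findCollisions` are hypothetical names, and the IP and ports are the example values from this article.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical helper for illustration; not part of HBase.
public class EndpointCheck {

    /**
     * @param hostToIp   hostname -> IP, as the client's hosts file resolves it
     * @param hostToPort hostname -> hbase.regionserver.port configured on that node
     * @return "ip:port" endpoints shared by two or more RegionServers, with their hostnames
     */
    static Map<String, List<String>> findCollisions(Map<String, String> hostToIp,
                                                    Map<String, Integer> hostToPort) {
        Map<String, List<String>> byEndpoint = new LinkedHashMap<>();
        for (Map.Entry<String, Integer> e : hostToPort.entrySet()) {
            String endpoint = hostToIp.get(e.getKey()) + ":" + e.getValue();
            byEndpoint.computeIfAbsent(endpoint, k -> new ArrayList<>()).add(e.getKey());
        }
        // Keep only endpoints that more than one RegionServer maps to
        byEndpoint.values().removeIf(hosts -> hosts.size() < 2);
        return byEndpoint;
    }

    public static void main(String[] args) {
        String[] hosts = {"cluster-hdfs-master", "cluster-hdfs-slave1", "cluster-hdfs-slave2"};
        Map<String, String> hostToIp = new LinkedHashMap<>();
        Map<String, Integer> defaultPorts = new LinkedHashMap<>();
        Map<String, Integer> distinctPorts = new LinkedHashMap<>();
        for (int i = 0; i < hosts.length; i++) {
            hostToIp.put(hosts[i], "111.222.33.44"); // every container behind one public IP
            defaultPorts.put(hosts[i], 16020);        // default hbase.regionserver.port
            distinctPorts.put(hosts[i], 16020 + i);   // 16020 / 16021 / 16022 as configured above
        }
        System.out.println("default ports:  " + findCollisions(hostToIp, defaultPorts));
        System.out.println("distinct ports: " + findCollisions(hostToIp, distinctPorts));
    }
}
```

With the default port, all three RegionServers collapse onto 111.222.33.44:16020; with 16020/16021/16022 the collision map is empty. Inside the host, where each container has its own internal IP, the same default port does not collide either.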

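The HBaseAPI class holds the shared Configuration, Connection and Admin; the methods in steps 2) to 10) are added to it.

package priv.shuu.hbase;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.hbase.Cell;
import org.apache.hadoop.hbase.CellUtil;
import org.apache.hadoop.hbase.HBaseConfiguration;
import org.apache.hadoop.hbase.TableName;
import org.apache.hadoop.hbase.client.Admin;
import org.apache.hadoop.hbase.client.ColumnFamilyDescriptor;
import org.apache.hadoop.hbase.client.ColumnFamilyDescriptorBuilder;
import org.apache.hadoop.hbase.client.Connection;
import org.apache.hadoop.hbase.client.ConnectionFactory;
import org.apache.hadoop.hbase.client.Delete;
import org.apache.hadoop.hbase.client.Get;
import org.apache.hadoop.hbase.client.Put;
import org.apache.hadoop.hbase.client.Result;
import org.apache.hadoop.hbase.client.ResultScanner;
import org.apache.hadoop.hbase.client.Scan;
import org.apache.hadoop.hbase.client.Table;
import org.apache.hadoop.hbase.client.TableDescriptor;
import org.apache.hadoop.hbase.client.TableDescriptorBuilder;
import org.apache.hadoop.hbase.util.Bytes;

import java.io.IOException;

/**
 * 1. Create the Configuration (HBaseConfiguration.create() loads hbase-site.xml from the classpath)
 * 2. Create the Connection
 * 3. Obtain the Admin object
 */
public class HBaseAPI {

    private static Configuration conf;
    private static Admin admin;
    private static Connection conn;

    static {
        conf = HBaseConfiguration.create();
        try {
            // Connection is heavyweight and thread-safe: create it once and share it
            conn = ConnectionFactory.createConnection(conf);
            // Use the Admin interface; casting to the internal HBaseAdmin class is unnecessary
            admin = conn.getAdmin();
        } catch (IOException e) {
            // Fail fast instead of leaving conn/admin null
            throw new ExceptionInInitializerError(e);
        }
    }

    // Call once when done to release the cluster connection
    public static void close() throws IOException {
        admin.close();
        conn.close();
    }

    // The methods from steps 2) to 10) go here
}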

2) Check whether a table exists

public static boolean isTableExist(String name) throws IOException {
    TableName tableName = TableName.valueOf(name);
    return admin.tableExists(tableName);
}

3) Create a table

// Usage: createTable("Shop", "category");
public static void createTable(String name, String columnFamily) throws IOException {
    if (!isTableExist(name)) {
        // Describe one column family, then a table that contains it
        ColumnFamilyDescriptor columnFamilyDescriptor =
                ColumnFamilyDescriptorBuilder.newBuilder(Bytes.toBytes(columnFamily)).build();
        TableDescriptor tableDescriptor = TableDescriptorBuilder.newBuilder(TableName.valueOf(name))
                .setColumnFamily(columnFamilyDescriptor)
                .build();
        admin.createTable(tableDescriptor);
    } else {
        System.out.println("Table " + name + " already exists");
    }
}

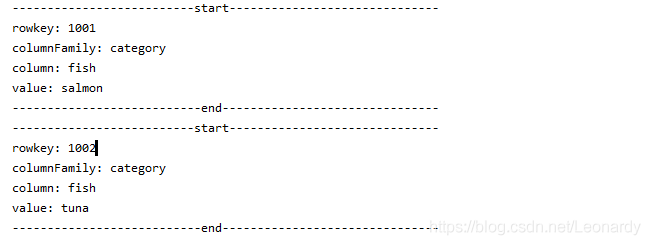

4) Insert data into a table

// Usage:
// addRowData("Shop", "1001", "category", "fish", "salmon");
// addRowData("Shop", "1002", "category", "fish", "tuna");
public static void addRowData(String name, String rowkey, String columnFamily, String column, String value) throws IOException {
    // Table is lightweight and not thread-safe: get one per use and close it afterwards
    try (Table table = conn.getTable(TableName.valueOf(name))) {
        Put put = new Put(Bytes.toBytes(rowkey));
        // addColumn(family, qualifier, value) sets one cell in the row
        put.addColumn(Bytes.toBytes(columnFamily), Bytes.toBytes(column), Bytes.toBytes(value));
        table.put(put);
    }
    System.out.println("Data added successfully");
}

5) Scan all rows of a table

// Usage: getAll("Shop");
public static void getAll(String name) throws IOException {
    Scan scan = new Scan(); // no start/stop row: full-table scan
    // Close both the Table and the ResultScanner when done
    try (Table table = conn.getTable(TableName.valueOf(name));
         ResultScanner scanner = table.getScanner(scan)) {
        // One Result per row; one Cell per family:qualifier value in that row
        for (Result result : scanner) {
            for (Cell cell : result.rawCells()) {
                System.out.println("--------------------------start------------------------------");
                System.out.println("rowkey: " + Bytes.toString(CellUtil.cloneRow(cell)));
                System.out.println("columnFamily: " + Bytes.toString(CellUtil.cloneFamily(cell)));
                System.out.println("column: " + Bytes.toString(CellUtil.cloneQualifier(cell)));
                System.out.println("value: " + Bytes.toString(CellUtil.cloneValue(cell)));
                System.out.println("---------------------------end-------------------------------");
            }
        }
    }
}

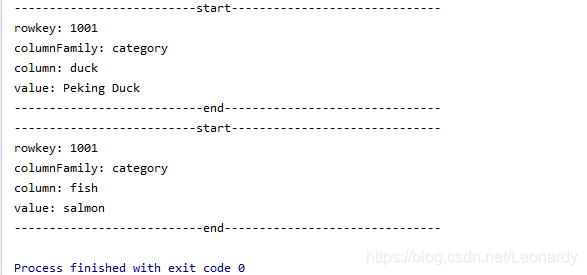
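HBase keeps rows sorted by rowkey, comparing the raw bytes lexicographically (unsigned), and a full `Scan` returns rows in that order, so 1001 comes back before 1002. The same rule means numeric rowkeys that are not zero-padded do not sort numerically. The JDK-only sketch below reproduces the comparison rule; it is an illustration, not HBase code, and `RowkeyOrder`, `BYTES_ORDER` and `scanOrder` are hypothetical names.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.TreeMap;

// Hypothetical illustration of rowkey ordering; not part of HBase.
public class RowkeyOrder {

    // Unsigned, lexicographic comparison of byte arrays (the order HBase sorts rowkeys in)
    static final Comparator<byte[]> BYTES_ORDER = (a, b) -> {
        int n = Math.min(a.length, b.length);
        for (int i = 0; i < n; i++) {
            int cmp = Integer.compare(a[i] & 0xff, b[i] & 0xff);
            if (cmp != 0) {
                return cmp;
            }
        }
        return Integer.compare(a.length, b.length); // a proper prefix sorts first
    };

    // Returns the rowkeys in the order a full-table scan would return them
    static List<String> scanOrder(String... rowkeys) {
        TreeMap<byte[], String> rows = new TreeMap<>(BYTES_ORDER);
        for (String key : rowkeys) {
            // HBase's Bytes.toBytes(String) encodes as UTF-8; do the same here
            rows.put(key.getBytes(StandardCharsets.UTF_8), key);
        }
        return new ArrayList<>(rows.values());
    }

    public static void main(String[] args) {
        System.out.println(scanOrder("1002", "1001", "999", "10010"));
    }
}
```

Here `scanOrder("1002", "1001", "999", "10010")` yields `[1001, 10010, 1002, 999]`: "10010" sorts between "1001" and "1002", and "999" comes last because its first byte is larger. Fixed-width, zero-padded numeric rowkeys avoid this surprise.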

6) Get a single row

// Usage: get("Shop", "1002");
public static void get(String name, String rowkey) throws IOException {
    try (Table table = conn.getTable(TableName.valueOf(name))) {
        // A Get without addColumn/addFamily returns all columns of the row
        Get get = new Get(Bytes.toBytes(rowkey));
        Result result = table.get(get);
        // rawCells() may be null for an empty Result, so check first
        if (result.isEmpty()) {
            System.out.println("Row " + rowkey + " not found");
            return;
        }
        for (Cell cell : result.rawCells()) {
            System.out.println("--------------------------start------------------------------");
            System.out.println("rowkey: " + Bytes.toString(CellUtil.cloneRow(cell)));
            System.out.println("columnFamily: " + Bytes.toString(CellUtil.cloneFamily(cell)));
            System.out.println("column: " + Bytes.toString(CellUtil.cloneQualifier(cell)));
            System.out.println("value: " + Bytes.toString(CellUtil.cloneValue(cell)));
            System.out.println("---------------------------end-------------------------------");
        }
    }
}

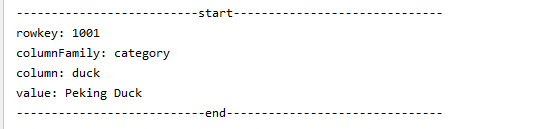

7) Get a specific "family:qualifier" column of a row

// Usage:
// addRowData("Shop", "1001", "category", "duck", "Peking Duck");
// getByCol("Shop", "1001", "category", "duck");
public static void getByCol(String name, String rowKey, String columnFamily, String column) throws IOException {
    try (Table table = conn.getTable(TableName.valueOf(name))) {
        Get get = new Get(Bytes.toBytes(rowKey));
        // Restrict the Get to a single family:qualifier
        get.addColumn(Bytes.toBytes(columnFamily), Bytes.toBytes(column));
        Result result = table.get(get);
        // rawCells() may be null for an empty Result, so check first
        if (result.isEmpty()) {
            System.out.println("No data for " + rowKey + " " + columnFamily + ":" + column);
            return;
        }
        for (Cell cell : result.rawCells()) {
            System.out.println("--------------------------start------------------------------");
            System.out.println("rowkey: " + Bytes.toString(CellUtil.cloneRow(cell)));
            System.out.println("columnFamily: " + Bytes.toString(CellUtil.cloneFamily(cell)));
            System.out.println("column: " + Bytes.toString(CellUtil.cloneQualifier(cell)));
            System.out.println("value: " + Bytes.toString(CellUtil.cloneValue(cell)));
            System.out.println("---------------------------end-------------------------------");
        }
    }
}
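Steps 4) to 7) all work on the same logical structure: a table behaves like a sorted map from rowkey to column family to qualifier to value (HBase also keeps timestamped versions per cell, which are left out here). The toy model below is not the HBase client; it only mirrors what `get` (whole row) and `getByCol` (one column) return after the three `addRowData` calls from the usage comments. `ShopModel` and its methods are hypothetical names.

```java
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory model for illustration; not the HBase client API.
public class ShopModel {

    // rowkey -> column family -> qualifier -> value (versions/timestamps omitted)
    private final TreeMap<String, TreeMap<String, TreeMap<String, String>>> rows = new TreeMap<>();

    // Like addRowData(): creates the row and family on demand, then sets one cell
    void put(String row, String family, String qualifier, String value) {
        rows.computeIfAbsent(row, r -> new TreeMap<>())
            .computeIfAbsent(family, f -> new TreeMap<>())
            .put(qualifier, value);
    }

    // Like get(): every "family:qualifier" cell of one row
    Map<String, String> getRow(String row) {
        Map<String, String> cells = new TreeMap<>();
        rows.getOrDefault(row, new TreeMap<>()).forEach((family, columns) ->
                columns.forEach((qualifier, value) -> cells.put(family + ":" + qualifier, value)));
        return cells;
    }

    // Like getByCol(): only the requested column, or an empty map if it is absent
    Map<String, String> getColumn(String row, String family, String qualifier) {
        Map<String, String> cells = new TreeMap<>();
        String value = rows.getOrDefault(row, new TreeMap<>())
                .getOrDefault(family, new TreeMap<>())
                .get(qualifier);
        if (value != null) {
            cells.put(family + ":" + qualifier, value);
        }
        return cells;
    }

    public static void main(String[] args) {
        ShopModel shop = new ShopModel();
        shop.put("1001", "category", "fish", "salmon");
        shop.put("1002", "category", "fish", "tuna");
        shop.put("1001", "category", "duck", "Peking Duck");
        System.out.println(shop.getRow("1001"));                        // both columns of row 1001
        System.out.println(shop.getColumn("1001", "category", "duck")); // only category:duck
    }
}
```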

8) Delete a row

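// Usage: deleteRow("Shop", "1002"); then getAll("Shop"); to verify
public static void deleteRow(String name, String rowKey) throws IOException {
    try (Table table = conn.getTable(TableName.valueOf(name))) {
        // A Delete with only a rowkey removes the whole row
        Delete delete = new Delete(Bytes.toBytes(rowKey));
        // To delete a single "family:qualifier" instead, narrow it down, e.g.:
        // delete.addColumn(Bytes.toBytes("category"), Bytes.toBytes("fish"));  // latest version only
        // delete.addColumns(Bytes.toBytes("category"), Bytes.toBytes("fish")); // all versions
        // Several rows can be deleted in one call with table.delete(List<Delete> deletes)
        table.delete(delete);
    }
    System.out.println("Row deleted successfully");
}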

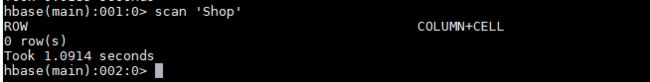

9) Truncate a table (delete all of its data)

// Usage: addRowData("Shop", "1003", "category", "duck", "Black Duck"); then truncate("Shop");
public static void truncate(String name) throws IOException {
    TableName tableName = TableName.valueOf(name);
    // The table must be disabled first, otherwise:
    // Exception in thread "main" org.apache.hadoop.hbase.TableNotDisabledException:
    // org.apache.hadoop.hbase.TableNotDisabledException: Shop
    if (admin.isTableEnabled(tableName)) {
        admin.disableTable(tableName);
    }
    // Second argument (preserveSplits = true) keeps the existing region split points
    admin.truncateTable(tableName, true);
    System.out.println("Table truncated successfully");
}

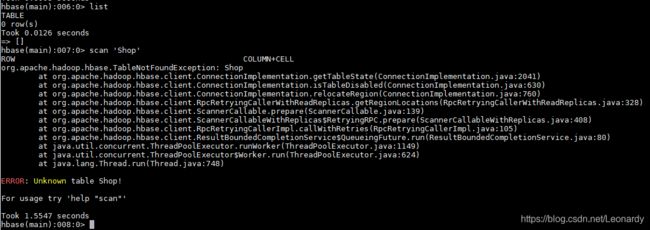

10) Drop a table

// Usage: dropTable("Shop");
public static void dropTable(String name) throws IOException {
    TableName tableName = TableName.valueOf(name);
    // The table must be disabled first, otherwise:
    // Exception in thread "main" org.apache.hadoop.hbase.TableNotDisabledException:
    // org.apache.hadoop.hbase.TableNotDisabledException: Shop
    if (admin.isTableEnabled(tableName)) {
        admin.disableTable(tableName);
    }
    admin.deleteTable(tableName);
    System.out.println("Table dropped successfully");
}