分片查询(sharding-jdbc)以及常见问题解决

新公司使用了自动分库分表的插件(sharding-jdbc),由于有多个数据源,所以结合了durid框架,作为数据库链接管理框架。

Sharding jdbc

Sharding-JDBC是一个开源的分布式数据库中间件,它无需额外部署和依赖,完全兼容JDBC和各种ORM框架。Sharding-JDBC作为面向开发的微服务云原生基础类库,完整的实现了分库分表、读写分离和分布式主键功能,并初步实现了柔性事务。

研究了一天具体的运行的流程,自己实现了个小demo

项目用的是springboot 2.0+ 、mybaties 、durid

项目地址:https://github.com/zz790609619/LeetCodeRecord.git

一、准备工作

由于是分库分表,所以新建三个库user_1,user_2,user_3,在各个数据库分别插入30个表

user_pay_order_0 ----> user_pay_order_29

建表语句如下:

CREATE TABLE IF NOT EXISTS user_pay_order (order_id INT NOT NULL, user_id INT NOT NULL, status VARCHAR(50), PRIMARY KEY (order_id))

二、项目配置

项目的基本配置(pom.xml)

<project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<modelVersion>4.0.0modelVersion>

<parent>

<groupId>org.springframework.bootgroupId>

<artifactId>spring-boot-starter-parentartifactId>

<version>2.1.7.RELEASEversion>

<relativePath/>

parent>

<groupId>com.examplegroupId>

<artifactId>demoartifactId>

<version>0.0.1-SNAPSHOTversion>

<packaging>warpackaging>

<name>demoname>

<description>Demo project for Spring Bootdescription>

<properties>

<java.version>1.8java.version>

properties>

<dependencies>

<dependency>

<groupId>org.springframework.bootgroupId>

<artifactId>spring-boot-starter-webartifactId>

dependency>

<dependency>

<groupId>org.springframework.bootgroupId>

<artifactId>spring-boot-starter-testartifactId>

<scope>testscope>

dependency>

<dependency>

<groupId>com.alibabagroupId>

<artifactId>fastjsonartifactId>

<version>1.2.47version>

dependency>

<dependency>

<groupId>com.alibabagroupId>

<artifactId>druidartifactId>

<version>1.1.14version>

dependency>

<dependency>

<groupId>org.apache.shardingspheregroupId>

<artifactId>sharding-jdbc-spring-boot-starterartifactId>

<version>4.0.0-RC1version>

dependency>

<dependency>

<groupId>org.springframeworkgroupId>

<artifactId>spring-webartifactId>

<version>5.1.6.RELEASEversion>

dependency>

<dependency>

<groupId>org.mybatis.spring.bootgroupId>

<artifactId>mybatis-spring-boot-starterartifactId>

<version>2.0.0version>

dependency>

<dependency>

<groupId>org.springframework.bootgroupId>

<artifactId>spring-boot-starter-jta-atomikosartifactId>

dependency>

<dependency>

<groupId>mysqlgroupId>

<artifactId>mysql-connector-javaartifactId>

<version>8.0.11version>

dependency>

dependencies>

<build>

<plugins>

<plugin>

<groupId>org.springframework.bootgroupId>

<artifactId>spring-boot-maven-pluginartifactId>

plugin>

plugins>

build>

project>

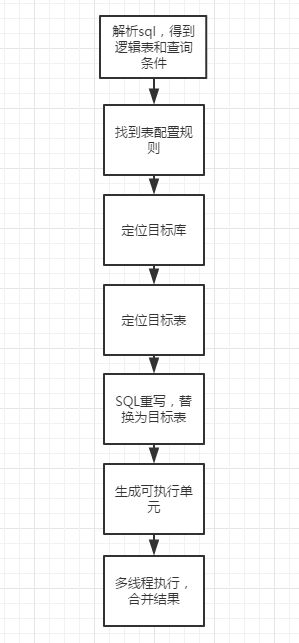

三、流程

四、具体实现代码

1.配置多数据源的参数(application.yml)

server:

port: 8090 #端口

spring:

datasource: #主数据源

type: com.alibaba.druid.pool.DruidDataSource

driverClassName: com.mysql.cj.jdbc.Driver

url: jdbc:mysql://127.0.0.1:3306/game?useSSL=false&characterEncoding=utf8&serverTimezone=Asia/Shanghai

username: root

password: 123456

initialSize: 5

minIdle: 1

maxActive: 50

maxWait: 60000

timeBetweenEvictionRunsMillis: 60000

minEvictableIdleTimeMillis: 300000

filters: stat,wall

user: #分库后每个库的数据源

datasource:

ds0:

type: com.alibaba.druid.pool.DruidDataSource

driverClassName: com.mysql.cj.jdbc.Driver

url: jdbc:mysql://127.0.0.1:3306/user_0?useSSL=false&characterEncoding=utf8&serverTimezone=Asia/Shanghai

username: root

password: 123456

ds1:

type: com.alibaba.druid.pool.DruidDataSource

driverClassName: com.mysql.cj.jdbc.Driver

url: jdbc:mysql://127.0.0.1:3306/user_1?useSSL=false&characterEncoding=utf8&serverTimezone=Asia/Shanghai

username: root

password: 123456

ds2:

type: com.alibaba.druid.pool.DruidDataSource

driverClassName: com.mysql.cj.jdbc.Driver

url: jdbc:mysql://127.0.0.1:3306/user_2?useSSL=false&characterEncoding=utf8&serverTimezone=Asia/Shanghai

username: root

password: 123456

mybatis:

mapper-locations: classpath:mapper/*.xml

2.主数据源配置(没有分库分表的)

注意点:

@Primary 必须配置 不然会报错

@MapperScan 扫描的mapper都将使用当前数据源

/**

* @Author ww

* @Date 2020-04-22

*/

@Configuration

@MapperScan(basePackages = {"com.example.demo.data.mapper.main"}, sqlSessionFactoryRef = "apiMainSqlSessionFactory")

public class MainDataSourceConfig {

//@Primary 标识主数据源

@Bean(name = "dataSource")

@Primary

public DataSource apiMainDataSource() {

//druid数据库连接配置

DruidXADataSource druidDataSource = new DruidXADataSource();

druidDataSource.setUrl(url);

druidDataSource.setUsername(userName);

druidDataSource.setPassword(password);

druidDataSource.setDriverClassName(driverClassName);

druidDataSource.setInitialSize(initialSize);

druidDataSource.setMaxActive(maxActive);

druidDataSource.setMinIdle(minIdle);

druidDataSource.setMaxWait(maxWait);

druidDataSource.setTimeBetweenEvictionRunsMillis(timeBetweenEvictionRunsMillis);

druidDataSource.setMinEvictableIdleTimeMillis(minEvictableIdleTimeMillis);

try {

druidDataSource.setFilters(filters);

} catch (SQLException e) {

e.printStackTrace();

}

//分布式数据源配置

AtomikosDataSourceBean sourceBean = new AtomikosDataSourceBean();

sourceBean.setXaDataSource(druidDataSource);

sourceBean.setMaxPoolSize(maxActive);

sourceBean.setUniqueResourceName("main0");

return sourceBean;

}

//@Qualifier("dataSource") 特指当前上面分布式数据源

@Bean(name = "apiMainSqlSessionFactory")

public SqlSessionFactory sqlSessionFactoryBean(@Qualifier("dataSource") DataSource dataSource) throws Exception {

SqlSessionFactoryBean sqlSessionFactoryBean = new SqlSessionFactoryBean();

sqlSessionFactoryBean.setDataSource(dataSource);

PathMatchingResourcePatternResolver resolver = new PathMatchingResourcePatternResolver();

sqlSessionFactoryBean.setMapperLocations(resolver

.getResources("classpath:mapper/*.xml"));

//SqlMonitorInterceptor 拦截sql查询语句 替换参数

sqlSessionFactoryBean.setPlugins(new Interceptor[]{new SqlMonitorInterceptor()});

return sqlSessionFactoryBean.getObject();

}

//@Value 获取刚刚yml配置文件中主数据源的参数

@Value("${spring.datasource.type}")

private String type;

@Value("${spring.datasource.driverClassName}")

private String driverClassName;

@Value("${spring.datasource.url}")

private String url;

@Value("${spring.datasource.username}")

private String userName;

@Value("${spring.datasource.password}")

private String password;

@Value("${spring.datasource.initialSize}")

private Integer initialSize;

@Value("${spring.datasource.maxActive}")

private Integer maxActive;

@Value("${spring.datasource.minIdle}")

private Integer minIdle;

@Value("${spring.datasource.maxWait}")

private Long maxWait;

@Value("${spring.datasource.timeBetweenEvictionRunsMillis}")

private Long timeBetweenEvictionRunsMillis;

@Value("${spring.datasource.minEvictableIdleTimeMillis}")

private Long minEvictableIdleTimeMillis;

@Value("${spring.datasource.filters}")

private String filters;

}

SqlMonitorInterceptor 拦截器

package com.example.demo.config.plugin;//

@Intercepts({@Signature(

args = {MappedStatement.class, Object.class},

method = "update",

type = Executor.class

), @Signature(

args = {MappedStatement.class, Object.class, RowBounds.class, ResultHandler.class},

method = "query",

type = Executor.class

), @Signature(

args = {MappedStatement.class, Object.class, RowBounds.class, ResultHandler.class, CacheKey.class, BoundSql.class},

method = "query",

type = Executor.class

)})

public class SqlMonitorInterceptor implements Interceptor {

public SqlMonitorInterceptor() {

}

/**

* 将责任链的内的sql,替换参数 查询sql

**/

public Object intercept(Invocation invocation) throws Throwable {

String classname = "";

String method = "";

String sql = "";

String sql_param = "";

long duration = -1L;

long beginTime = System.currentTimeMillis();

try {

MappedStatement mappedStatement = (MappedStatement)invocation.getArgs()[0];

String[] strArr = mappedStatement.getId().split("\\.");

classname = strArr[strArr.length - 2];

method = strArr[strArr.length - 1];

Object parameter = null;

if (invocation.getArgs().length > 1) {

parameter = invocation.getArgs()[1];

}

BoundSql boundSql = mappedStatement.getBoundSql(parameter);

sql = boundSql.getSql();

sql_param = JSON.toJSONString(parameter);

} catch (Exception var14) {

var14.printStackTrace();

}

Object returnObj = invocation.proceed();

long endTime = System.currentTimeMillis();

duration = endTime - beginTime;

return returnObj;

}

public String showSql(Configuration configuration, BoundSql boundSql) {

Object parameterObject = boundSql.getParameterObject();

List<ParameterMapping> parameterMappings = boundSql.getParameterMappings();

String sql = boundSql.getSql().replaceAll("[\\s]+", " ");

if (parameterMappings.size() > 0 && parameterObject != null) {

TypeHandlerRegistry typeHandlerRegistry = configuration.getTypeHandlerRegistry();

if (typeHandlerRegistry.hasTypeHandler(parameterObject.getClass())) {

sql = sql.replaceFirst("\\?", this.getParameterValue(parameterObject));

} else {

MetaObject metaObject = configuration.newMetaObject(parameterObject);

Iterator var8 = parameterMappings.iterator();

while(var8.hasNext()) {

ParameterMapping parameterMapping = (ParameterMapping)var8.next();

String propertyName = parameterMapping.getProperty();

Object obj;

if (metaObject.hasGetter(propertyName)) {

obj = metaObject.getValue(propertyName);

sql = sql.replaceFirst("\\?", this.getParameterValue(obj));

} else if (boundSql.hasAdditionalParameter(propertyName)) {

obj = boundSql.getAdditionalParameter(propertyName);

sql = sql.replaceFirst("\\?", this.getParameterValue(obj));

}

}

}

}

return sql;

}

private String getParameterValue(Object obj) {

String value = null;

if (obj instanceof String) {

value = "'" + obj.toString() + "'";

} else if (obj instanceof Date) {

DateFormat formatter = DateFormat.getDateTimeInstance(2, 2, Locale.CHINA);

value = "'" + formatter.format(new Date()) + "'";

} else if (obj != null) {

value = obj.toString();

} else {

value = "";

}

return value;

}

public Object plugin(Object target) {

return target instanceof Executor ? Plugin.wrap(target, this) : target;

}

public void setProperties(Properties properties) {

}

}

3.分库分表数据源配置

配置三个数据源的信息

package com.example.demo.config;

import com.alibaba.druid.pool.xa.DruidXADataSource;

import org.springframework.beans.factory.annotation.Value;

import org.springframework.boot.jta.atomikos.AtomikosDataSourceBean;

import org.springframework.context.annotation.Bean;

import org.springframework.context.annotation.Configuration;

import javax.sql.DataSource;

import java.sql.SQLException;

/**

* @Author ww

* @Date 2020-04-22

*/

@Configuration

public class UserDataSourceConfig {

@Bean(name = "shardingdsDataSource")

public DataSource shardingDataSource() {

AtomikosDataSourceBean sourceBean = new AtomikosDataSourceBean();

DruidXADataSource druidDataSource = new DruidXADataSource();

druidDataSource.setUrl(url);

druidDataSource.setUsername(userName);

druidDataSource.setPassword(password);

druidDataSource.setDriverClassName(driverClassName);

druidDataSource.setInitialSize(initialSize);

druidDataSource.setMaxActive(maxActive);

druidDataSource.setMinIdle(minIdle);

druidDataSource.setMaxWait(maxWait);

druidDataSource.setTimeBetweenEvictionRunsMillis(timeBetweenEvictionRunsMillis);

druidDataSource.setMinEvictableIdleTimeMillis(minEvictableIdleTimeMillis);

try {

druidDataSource.setFilters(filters);

} catch (SQLException e) {

e.printStackTrace();

}

sourceBean.setXaDataSource(druidDataSource);

sourceBean.setUniqueResourceName("ds0");

sourceBean.setMaxPoolSize(maxActive);

return sourceBean;

}

@Bean(name = "shardingOneDataSource")

public DataSource shardingOneDataSource() {

AtomikosDataSourceBean sourceBean = new AtomikosDataSourceBean();

DruidXADataSource druidDataSource = new DruidXADataSource();

druidDataSource.setUrl(urlOne);

druidDataSource.setUsername(userNameOne);

druidDataSource.setPassword(passwordOne);

druidDataSource.setDriverClassName(driverClassNameOne);

druidDataSource.setInitialSize(initialSize);

druidDataSource.setMaxActive(maxActive);

druidDataSource.setMinIdle(minIdle);

druidDataSource.setMaxWait(maxWait);

druidDataSource.setTimeBetweenEvictionRunsMillis(timeBetweenEvictionRunsMillis);

druidDataSource.setMinEvictableIdleTimeMillis(minEvictableIdleTimeMillis);

try {

druidDataSource.setFilters(filters);

} catch (SQLException e) {

e.printStackTrace();

}

sourceBean.setXaDataSource(druidDataSource);

sourceBean.setMaxPoolSize(maxActive);

sourceBean.setUniqueResourceName("ds1");

return sourceBean;

}

@Bean(name = "shardingTwoDataSource")

public DataSource shardingTwoDataSource() {

AtomikosDataSourceBean sourceBean = new AtomikosDataSourceBean();

DruidXADataSource druidDataSource = new DruidXADataSource();

druidDataSource.setUrl(urlTwo);

druidDataSource.setUsername(userNameTwo);

druidDataSource.setPassword(passwordTwo);

druidDataSource.setDriverClassName(driverClassNameTwo);

druidDataSource.setInitialSize(initialSize);

druidDataSource.setMaxActive(maxActive);

druidDataSource.setMinIdle(minIdle);

druidDataSource.setMaxWait(maxWait);

druidDataSource.setTimeBetweenEvictionRunsMillis(timeBetweenEvictionRunsMillis);

druidDataSource.setMinEvictableIdleTimeMillis(minEvictableIdleTimeMillis);

try {

druidDataSource.setFilters(filters);

} catch (SQLException e) {

e.printStackTrace();

}

sourceBean.setXaDataSource(druidDataSource);

sourceBean.setMaxPoolSize(maxActive);

sourceBean.setUniqueResourceName("ds2");

return sourceBean;

}

//三个分库的数据源配置

@Value("${spring.user.datasource.ds0.type}")

private String type;

@Value("${spring.user.datasource.ds0.driver-class-name}")

private String driverClassName;

@Value("${spring.user.datasource.ds0.url}")

private String url;

@Value("${spring.user.datasource.ds0.username}")

private String userName;

@Value("${spring.user.datasource.ds0.password}")

private String password;

@Value("${spring.user.datasource.ds1.type}")

private String typeOne;

@Value("${spring.user.datasource.ds1.driver-class-name}")

private String driverClassNameOne;

@Value("${spring.user.datasource.ds1.url}")

private String urlOne;

@Value("${spring.user.datasource.ds1.username}")

private String userNameOne;

@Value("${spring.user.datasource.ds1.password}")

private String passwordOne;

@Value("${spring.user.datasource.ds2.type}")

private String typeTwo;

@Value("${spring.user.datasource.ds2.driver-class-name}")

private String driverClassNameTwo;

@Value("${spring.user.datasource.ds2.url}")

private String urlTwo;

@Value("${spring.user.datasource.ds2.username}")

private String userNameTwo;

@Value("${spring.user.datasource.ds2.password}")

private String passwordTwo;

@Value("${spring.datasource.initialSize}")

private Integer initialSize;

@Value("${spring.datasource.maxActive}")

private Integer maxActive;

@Value("${spring.datasource.minIdle}")

private Integer minIdle;

@Value("${spring.datasource.maxWait}")

private Long maxWait;

@Value("${spring.datasource.timeBetweenEvictionRunsMillis}")

private Long timeBetweenEvictionRunsMillis;

@Value("${spring.datasource.minEvictableIdleTimeMillis}")

private Long minEvictableIdleTimeMillis;

@Value("${spring.datasource.filters}")

private String filters;

}

将三个数据源装载在分片数据源工厂里

/**

* @Author ww

* @Date 2020-04-22

*/

@Component

public class ShardingDataSourceConfig {

//最后返回的由 分片数据工厂ShardingDataSourceFactory 生产的DataSource

private DataSource shardingDataSource;

//前面配置的三个分库的数据源

@Resource(name = "shardingdsDataSource")

private DataSource shardingdsDataSource;

@Resource(name = "shardingOneDataSource")

private DataSource shardingOneDataSource;

@Resource(name = "shardingTwoDataSource")

private DataSource shardingTwoDataSource;

//在当前类被依赖注入(@autowired)后执行的方法。

@PostConstruct

public void init() throws SQLException {

//将三个数据源放在map中

Map<String ,DataSource> dataSourceMap = new HashMap<>();

dataSourceMap.put("ds0",shardingdsDataSource);

dataSourceMap.put("ds1",shardingOneDataSource);

dataSourceMap.put("ds2",shardingTwoDataSource);

//

/**

* 新建分片规则配置类 参数任意选

* public final class ShardingRuleConfiguration {

* //默认数据源名称

* private String defaultDataSourceName;

* //表规则配置

* private Collection tableRuleConfigs = new LinkedList<>();

* //相同表分片规则的组,如果表分片规则相同,则可以放在一个组里。

* private Collection bindingTableGroups = new LinkedList<>();

* //默认数据库的分片算法配置

* private ShardingStrategyConfiguration defaultDatabaseShardingStrategyConfig;

* //默认表的分片算法配置

* private ShardingStrategyConfiguration defaultTableShardingStrategyConfig;

* //默认键的生成工具类

* private KeyGenerator defaultKeyGenerator;

* //主备配置信息

* private Collection masterSlaveRuleConfigs = new LinkedList<>();

* }

*/

ShardingRuleConfiguration shardingRuleConfiguration = new ShardingRuleConfiguration();

//相同表分片规则的组,如果表分片规则相同,则可以放在一个组里

shardingRuleConfiguration.getBindingTableGroups().addAll(Arrays.asList(

"user_pay_order"

));

//表规则配置

List<TableRuleConfiguration> tableRuleConfigurationList = new ArrayList<>();

/**

* //逻辑表名

* private String logicTable;

* //真实的数据节点名称

* private String actualDataNodes;

* //数据库分片算法配置

* private ShardingStrategyConfiguration databaseShardingStrategyConfig;

* //表分片算法配置

* private ShardingStrategyConfiguration tableShardingStrategyConfig;

* //自动生成键的名称

* private String keyGeneratorColumnName;

* //自动生成键的工具类

* private KeyGenerator keyGenerator;

*

* private String logicIndex;

*/

// param1 : 逻辑表名, param2 : 真实存在的节点,由数据源 + 表明组成, ds${0..1} 代表 数据库选择 ds 后缀为 0 - 2 之间,user_pay_order_ 代表数据表 user_pay_order_ 后缀 0 - 1 之间

TableRuleConfiguration tableRuleConfiguration = new TableRuleConfiguration("user_pay_order","ds${0..2}.user_pay_order_${0..29}");

//表分片算法配置

tableRuleConfiguration.setTableShardingStrategyConfig(new StandardShardingStrategyConfiguration("user_pay_id",new ShardingAlgorithmLong()));

//数据库分片算法配置

tableRuleConfiguration.setDatabaseShardingStrategyConfig(new StandardShardingStrategyConfiguration("user_pay_id",new UserPayOrderDataSourceAlgo()));

tableRuleConfigurationList.add(tableRuleConfiguration);

// 配置分片规则

shardingRuleConfiguration.getTableRuleConfigs().addAll(tableRuleConfigurationList);

shardingDataSource = ShardingDataSourceFactory.createDataSource(dataSourceMap,shardingRuleConfiguration,new Properties());

}

public DataSource getDataSource() {

return shardingDataSource;

}

}

4.分片算法

精确分片算法

对应PreciseShardingAlgorithm,用于处理使用单一键作为分片键的=与IN进行分片的场景。需要配合StandardShardingStrategy使用。

范围分片算法

对应RangeShardingAlgorithm,用于处理使用单一键作为分片键的BETWEEN AND进行分片的场景。需要配合StandardShardingStrategy使用。

复合分片算法

对应ComplexKeysShardingAlgorithm,用于处理使用多键作为分片键进行分片的场景,包含多个分片键的逻辑较复杂,需要应用开发者自行处理其中的复杂度。需要配合ComplexShardingStrategy使用。

Hint分片算法

对应HintShardingAlgorithm,用于处理使用Hint行分片的场景。需要配合HintShardingStrategy使用。

分库算法UserPayOrderDataSourceAlgo 使用的是精确分片算法

/**

* @Author ww

* @Date 2020-04-22

*/

public class UserPayOrderDataSourceAlgo implements PreciseShardingAlgorithm<Long> {

@Override

public String doSharding(Collection<String> availableTargetNames, PreciseShardingValue<Long> shardingValue) {

int postfix = (int)((shardingValue.getValue() / 30) % availableTargetNames.size());

for (String dataSource : availableTargetNames) {

if (dataSource.endsWith(String.valueOf(postfix))) {

return dataSource;

}

}

throw new IllegalArgumentException();

}

}

分表算法 ShardingAlgorithmLong

/**

* @Author ww

* @Date 2020-04-22

*/

public class ShardingAlgorithmLong implements PreciseShardingAlgorithm<Long> {

@Override

public String doSharding(Collection<String> collection, PreciseShardingValue<Long> preciseShardingValue) {

String postfix = "" +(preciseShardingValue.getValue() % collection.size());

for (String tableName : collection){

if(tableName.endsWith(postfix)){

return tableName;

}

}

throw new IllegalArgumentException("没有匹配到id:"+preciseShardingValue.getValue());

}

}

5.将前面的数据源 适配到对应的mapper分类中,使用该mapper中自动会去用到分片查询

@Configuration

@MapperScan(basePackages = {"com.example.demo.data.mapper.user"}, sqlSessionFactoryRef = "sqlSessionFactory")

public class ShardingMybatisConfig {

@Autowired

private ShardingDataSourceConfig dataSourceConfig;

@Bean(name = "sqlSessionFactory")

public SqlSessionFactory sqlSessionFactory(@Qualifier("dataSource") DataSource dataSource) throws Exception {

SqlSessionFactoryBean sqlSessionFactoryBean = new SqlSessionFactoryBean();

sqlSessionFactoryBean.setDataSource(dataSourceConfig.getDataSource());

PathMatchingResourcePatternResolver resolver = new PathMatchingResourcePatternResolver();

sqlSessionFactoryBean.setMapperLocations(resolver

.getResources("classpath:mapper/*.xml"));

sqlSessionFactoryBean.setPlugins(new Interceptor[]{new UserPayOrderSqlInterceptor()});

return sqlSessionFactoryBean.getObject();

}

}

6.sql重写 责任链模式 sqlSession增加一个plugn过滤

Mybatis采用责任链模式,通过动态代理组织多个拦截器(插件),通过这些拦截器可以改变Mybatis的默认行为(诸如SQL重写之类的)

/**

* 这是个临时的补救类,主要解决UserPayOrder根据主键进行查询的问题(分片查询) 没有也可以

*/

@Intercepts({@Signature(

args = {MappedStatement.class, Object.class},

method = "update",

type = Executor.class

), @Signature(

args = {MappedStatement.class, Object.class, RowBounds.class, ResultHandler.class},

method = "query",

type = Executor.class

), @Signature(

args = {MappedStatement.class, Object.class, RowBounds.class, ResultHandler.class, CacheKey.class, BoundSql.class},

method = "query",

type = Executor.class

)})

public class UserPayOrderSqlInterceptor implements Interceptor {

//Object intercept(Invocation invocation)是实现拦截逻辑的地方,内部要通过invocation.proceed()显式地推进责任链前进,也就是调用下一个拦截器拦截目标方法

public Object intercept(Invocation invocation) throws Throwable {

String classname = "";

String method = "";

String sql = "";

String sql_param = "";

long duration = -1L;

long beginTime = System.currentTimeMillis();

try {

MappedStatement mappedStatement = (MappedStatement)invocation.getArgs()[0];

String[] strArr = mappedStatement.getId().split("\\.");

classname = strArr[strArr.length - 2];

method = strArr[strArr.length - 1];

Object parameter = null;

if (invocation.getArgs().length > 1) {

parameter = invocation.getArgs()[1];

}

BoundSql boundSql = mappedStatement.getBoundSql(parameter);

sql = boundSql.getSql();

sql_param = JSON.toJSONString(parameter);

if(classname.equals("UserPayOrderMapper") && method.equals("selectByPrimaryKey")){

String user_pay_id=parameter.toString().split("_")[1];

sql=sql+" and user_pay_id="+user_pay_id;

System.out.println("newsql: "+sql);

modify(boundSql,"sql",sql);

}

} catch (Exception var14) {

var14.printStackTrace();

}

Object returnObj = invocation.proceed();

long endTime = System.currentTimeMillis();

duration = endTime - beginTime;

return returnObj;

}

public String showSql(Configuration configuration, BoundSql boundSql) {

Object parameterObject = boundSql.getParameterObject();

List<ParameterMapping> parameterMappings = boundSql.getParameterMappings();

String sql = boundSql.getSql().replaceAll("[\\s]+", " ");

if (parameterMappings.size() > 0 && parameterObject != null) {

TypeHandlerRegistry typeHandlerRegistry = configuration.getTypeHandlerRegistry();

if (typeHandlerRegistry.hasTypeHandler(parameterObject.getClass())) {

sql = sql.replaceFirst("\\?", this.getParameterValue(parameterObject));

} else {

MetaObject metaObject = configuration.newMetaObject(parameterObject);

Iterator var8 = parameterMappings.iterator();

while(var8.hasNext()) {

ParameterMapping parameterMapping = (ParameterMapping)var8.next();

String propertyName = parameterMapping.getProperty();

Object obj;

if (metaObject.hasGetter(propertyName)) {

obj = metaObject.getValue(propertyName);

sql = sql.replaceFirst("\\?", this.getParameterValue(obj));

} else if (boundSql.hasAdditionalParameter(propertyName)) {

obj = boundSql.getAdditionalParameter(propertyName);

sql = sql.replaceFirst("\\?", this.getParameterValue(obj));

}

}

}

}

return sql;

}

private static void modify(Object object, String fieldName, Object newFieldValue){

try {

Field field = object.getClass().getDeclaredField(fieldName);

Field modifiersField = Field.class.getDeclaredField("modifiers");

modifiersField.setAccessible(true);

modifiersField.setInt(field, field.getModifiers() & Modifier.FINAL);

if(!field.isAccessible()) {

field.setAccessible(true);

}

field.set(object, newFieldValue);

} catch (Exception e) {

e.printStackTrace();

}

}

private String getParameterValue(Object obj) {

String value = null;

if (obj instanceof String) {

value = "'" + obj.toString() + "'";

} else if (obj instanceof Date) {

DateFormat formatter = DateFormat.getDateTimeInstance(2, 2, Locale.CHINA);

value = "'" + formatter.format(new Date()) + "'";

} else if (obj != null) {

value = obj.toString();

} else {

value = "";

}

return value;

}

//Object plugin(Object target) 就是用当前这个拦截器生成对目标target的代理,实际是通过Plugin.wrap(target,this) 来完成的,把目标target和拦截器this传给了包装函数

public Object plugin(Object target) {

return target instanceof Executor ? Plugin.wrap(target, this) : target;

}

//setProperties(Properties properties)用于设置额外的参数,参数配置在拦截器的Properties节点里

public void setProperties(Properties properties) {

}

}

7.项目结构以及代码

@RestController

public class UserController{

@Autowired

private UserPayOrderService userPayOrderService;

/**

* 根据订单号获取商户id

* @param userOrderId 订单id

* @return

*/

@PostMapping("/userOrderMerchantId")

public String userOrderMerchantId(@RequestParam("userOrderId") String userOrderId){

return userPayOrderService.userOrderMerchantId(userOrderId);

}

@PostMapping("/submit")

public String submit(@RequestBody SubmitUserPayOrderRequest userPayOrderRequest){

return userPayOrderService.submit(userPayOrderRequest);

}

}

UserPayOrderService .java

@Service

public class UserPayOrderService {

@Autowired

private UserPayOrderMapper userPayOrderDao;

/**

* 根据订单号获取商户id

*

* @param userOrderId

* @return

*/

public String userOrderMerchantId(String userOrderId) {

UserPayOrder upo = userPayOrderDao.selectByEntity(new UserPayOrder()

.setId(userOrderId));

return JSON.toJSONString(upo);

}

/**

* 提交订单

*

* @param userPayOrderRequest

* @return

* @throws Exception

*/

@Transactional(rollbackFor = Exception.class)

public String submit(SubmitUserPayOrderRequest userPayOrderRequest) {

Date createTime = new Date();

userPayOrderDao.insertSelective(new UserPayOrder()

.setId(userPayOrderRequest.getId())

.setMerchantId(userPayOrderRequest.getMerchantId())

.setUserPayId(userPayOrderRequest.getUserPayId())

.setAmountOfConsumption(userPayOrderRequest.getAmountOfConsumption())

.setPayAbleAmount(userPayOrderRequest.getPayAblAmount())

.setUserId(userPayOrderRequest.getUserId())

.setDiscountAmount(userPayOrderRequest.getDiscountAmount())

.setFreeAmount(userPayOrderRequest.getFreeAmount())

.setStatus(userPayOrderRequest.getStatus())

.setChannelId(userPayOrderRequest.getChannelId())

.setEnjoyKingAmount(userPayOrderRequest.getEnjoyKingAmount())

.setPayType(userPayOrderRequest.getPayType())

.setScanType(userPayOrderRequest.getScanType())

.setCreateTime(createTime));

return "xx";

}

}

UserPayOrderMapper.java

/**

* @Author ww

* @Date 2020-04-22

*/

@Mapper

public interface UserPayOrderMapper extends BaseDao<UserPayOrder,String> {

//自行扩展

int updateByIdAndPayUserId(UserPayOrder userPayOrder);

Integer queryStatus(@Param("id") String id,

@Param("userPayId") Long userPayId);

UserPayOrder selectByPayTime(UserPayOrder userPayOrder);

}

<mapper namespace="com.example.demo.data.mapper.user.UserPayOrderMapper">

<resultMap id="BaseResultMap" type="com.example.demo.entity.UserPayOrder">

<id column="id" jdbcType="VARCHAR" property="id" />

<result column="merchant_id" jdbcType="BIGINT" property="merchantId" />

<result column="user_pay_id" jdbcType="BIGINT" property="userPayId" />

<result column="amount_of_consumption" jdbcType="DECIMAL" property="amountOfConsumption" />

<result column="pay_able_amount" jdbcType="DECIMAL" property="payAbleAmount" />

<result column="merchant_receive_amount" jdbcType="DECIMAL" property="merchantReceiveAmount" />

<result column="pay_type" jdbcType="INTEGER" property="payType" />

<result column="pay_time" jdbcType="TIMESTAMP" property="payTime" />

<result column="user_id" jdbcType="BIGINT" property="userId" />

<result column="discount_amount" jdbcType="DECIMAL" property="discountAmount" />

<result column="free_amount" jdbcType="DECIMAL" property="freeAmount" />

<result column="status" jdbcType="INTEGER" property="status" />

<result column="channel_id" jdbcType="INTEGER" property="channelId" />

<result column="enjoy_king_amount" jdbcType="DECIMAL" property="enjoyKingAmount" />

<result column="create_time" jdbcType="TIMESTAMP" property="createTime" />

<result column="update_time" jdbcType="TIMESTAMP" property="updateTime" />

<result column="enjoy_issue_king_amount" jdbcType="DECIMAL" property="enjoyIssueKingAmount" />

<result column="enjoy_receive_king_amount" jdbcType="DECIMAL" property="enjoyReceiveKingAmount" />

resultMap>

mapper>

注意点

- 多数据源情况下,要有主数据源@Primary

- mapper文件地址要和ShardingMybatisConfig配置文件中@MapperScan路径一致,最好分库的数据源的mapper都在一个路径下,该路径下的mapper都是用ShardingDataSource的数据库链接,查询时候自带分片查询

- 插入和查询的时候会根据自定义算法