MapReduce常见算法

2016年4月6日18:28:29

MapReduce常见算法

作者:数据分析玩家

对于MapReduce,常见的算法有单词计数、数据去重、排序、TopK、选择、投影、分组、多表链接、单表关联。本文将具体阐述两个算法:数据去重与TopK。

为了让大家看的更清楚,现在将所用数据grade.txt数据列出:

HeBei 568

HeBei 313

HeBei 608

HeBei 458

HeBei 157

HeBei 629

HeBei 594

HeBei 305

HeBei 168

HeBei 116

ShanDong 405

ShanDong 667

ShanDong 289

ShanDong 463

ShanDong 59

ShanDong 695

ShanDong 74

ShanDong 547

ShanDong 115

ShanDong 534

ShanXi 74

ShanXi 254

ShanXi 270

ShanXi 30

ShanXi 130

ShanXi 149

ShanXi 417

ShanXi 486

ShanXi 156

ShanXi 608

JiangSu 628

JiangSu 567

JiangSu 681

JiangSu 289

JiangSu 314

JiangSu 147

JiangSu 389

JiangSu 491

JiangSu 353

JiangSu 162

HeNan 202

HeNan 161

HeNan 121

HeNan 450

HeNan 603

HeNan 144

HeNan 250

HeNan 521

HeNan 86

HeNan 404先将源代码列出供大家参考:

package Top;

import java.io.IOException;

import java.net.URI;

import java.net.URISyntaxException;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FSDataInputStream;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IOUtils;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

//本程序的目的是通过MapReduce进行数据的去重,同时求取topone

public class Top0

{

public static String path1 = "hdfs://hadoop:9000/grade.txt";

public static String path2 = "hdfs://hadoop:9000/gradedir";

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException, URISyntaxException

{

FileSystem fileSystem = FileSystem.get(new URI(path1),new Configuration());

if(fileSystem.exists(new Path(path2)))

{

fileSystem.delete(new Path(path2), true);

}

Job job = new Job(new Configuration());

//编写MapReduce程序的驱动

FileInputFormat.setInputPaths(job, new Path(path1));

job.setMapperClass(MyMapper.class);

job.setReducerClass(MyReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(LongWritable.class);

FileOutputFormat.setOutputPath(job, new Path(path2));

//提交任务

job.waitForCompletion(true);

FSDataInputStream fr = fileSystem.open(new Path("hdfs://hadoop:9000/gradedir/part-r-00000"));

IOUtils.copyBytes(fr, System.out, 1024, true);

}

public static class MyMapper extends Mapper

{

protected void map(LongWritable k1, Text v1,Context context)throws IOException, InterruptedException

{

String[] splited = v1.toString().split("\t");

String province = splited[0];

String grade = splited[1];

context.write(new Text(province),new LongWritable(Long.parseLong(grade)));

}

}

public static class MyReducer extends Reducer

{

protected void reduce(Text k2, Iterable v2s,Context context)throws IOException, InterruptedException

{

long topgrade = Long.MIN_VALUE;

for (LongWritable v2 : v2s)

{

if(v2.get()>topgrade)

{

topgrade = v2.get();

}

}

context.write(k2,new LongWritable(topgrade));

}

}

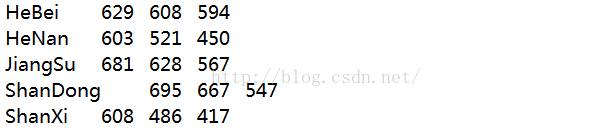

} MapReduce程序运行之后显示的结果为:

接下来讲述第二个业务:求取每个省份分数的前三个最大值,这个业务涉及到了数据的去重与topk.

先将源代码列出供大家参看:

package Top;

import java.io.IOException;

import java.net.URI;

import java.net.URISyntaxException;

import java.util.ArrayList;

import java.util.Collections;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.fs.FSDataInputStream;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.IOUtils;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

public class Top3

{

public static String path1 = "hdfs://hadoop:9000/grade.txt";

public static String path2 = "hdfs://hadoop:9000/gradedir2";

public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException, URISyntaxException

{

FileSystem fileSystem = FileSystem.get(new URI(path1),new Configuration());

if(fileSystem.exists(new Path(path2)))

{

fileSystem.delete(new Path(path2), true);

}

Job job = new Job(new Configuration());

//编写MapReduce程序的驱动

FileInputFormat.setInputPaths(job, new Path(path1));

job.setMapperClass(MyMapper.class);

job.setReducerClass(MyReducer.class);

job.setOutputKeyClass(Text.class);

job.setOutputValueClass(LongWritable.class);

FileOutputFormat.setOutputPath(job, new Path(path2));

//提交任务

job.waitForCompletion(true);

FSDataInputStream fr = fileSystem.open(new Path("hdfs://hadoop:9000/gradedir2/part-r-00000"));

IOUtils.copyBytes(fr, System.out, 1024, true);

}

public static class MyMapper extends Mapper

{

protected void map(LongWritable k1, Text v1,Context context)throws IOException, InterruptedException

{

String[] splited = v1.toString().split("\t");

String province = splited[0];

String grade = splited[1];

context.write(new Text(province),new LongWritable(Long.parseLong(grade)));

}

}

public static class MyReducer extends Reducer

{

protected void reduce(Text k2, Iterable v2s,Context context)throws IOException, InterruptedException

{

ArrayList arr = new ArrayList();

for (LongWritable v2 : v2s)

{

arr.add(v2.get());

}

Collections.sort(arr);

Collections.reverse(arr);

context.write(k2,new Text(arr.get(0)+"\t"+arr.get(1)+"\t"+arr.get(2)+""));

}

}

} MapReduce程序运行之后显示的结果为:

对于MapReduce更重要的是可以灵活运用,尤其是shuffle阶段是MapReduce自动运行的,我们往往可以在里面做很多的文章。对于上面的两个程序,如有问题可以给我留言.

2016年4月6日18:52:15