RocketMQ: From Getting Started to Giving Up

Preface

RocketMQ is a distributed messaging and streaming platform. This article covers how to install, configure, and use it.

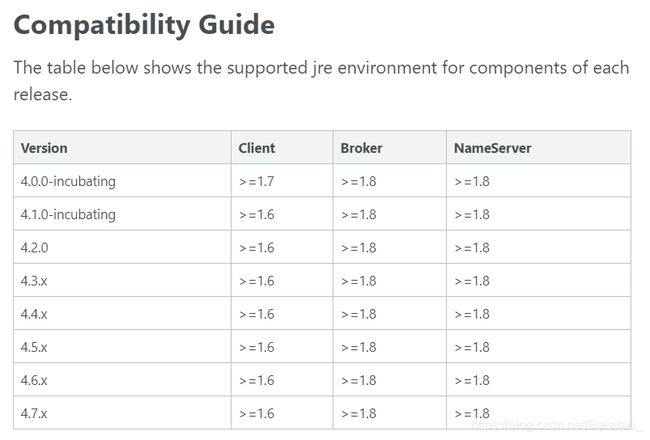

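Environment

Each RocketMQ release requires a specific JDK version; the requirements are listed on the official release page (http://rocketmq.apache.org/dowloading/releases/). This article uses RocketMQ 4.6.1.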

Concepts

Roles

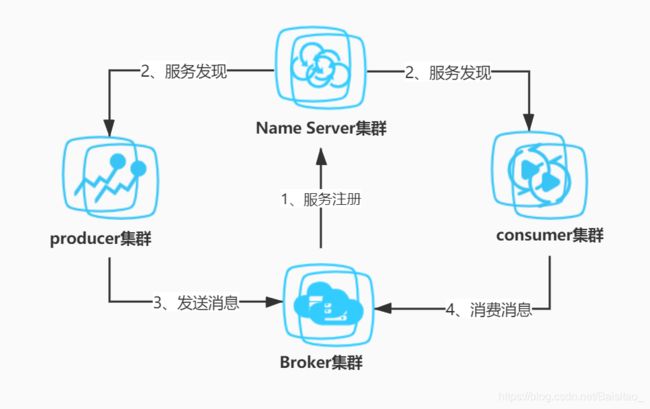

The roles in RocketMQ fall roughly into four kinds: NameServer, Broker, Producer, and Consumer.

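Broker

The Broker is the core of RocketMQ:

- It receives messages from Producers and delivers them to Consumers

- It registers its own information with the Name Server

- It is RocketMQ's store-and-forward server

- On startup, each Broker walks the Name Server list, opens a long-lived connection to every Name Server, registers its information, and then re-reports periodically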

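When Brokers are deployed as a cluster:

- For high availability, Brokers can be arranged as Master/Slave: the Master serves reads and writes, the Slave is read-only, and the Master replicates written data to the Slave

- One Master can have several Slaves, but each Slave belongs to exactly one Master

- A Master and its Slaves share the same BrokerName and use different BrokerIds; BrokerId 0 means Master, any non-zero value means Slave

- Load can be spread across machines by deploying multiple Master Brokers

- Every Broker keeps a long-lived connection to every node of the Name Server cluster and periodically registers its Topic information with all of them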

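Producer

- The message producer

- Opens a long-lived connection to one (randomly chosen) node of the Name Server cluster and fetches the Topic's routing information: which Queues the Topic has and which Brokers host them

- Then opens long-lived connections to the Master Brokers that serve the Topic and sends them heartbeats periodically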

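Consumer

- The message consumer

- Fetches the Topic's routing information from the Name Server cluster and connects to the corresponding Brokers to consume

- Both the Master and the Slave can serve reads, so the Consumer connects to both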

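Name Server

- Built on Netty, it provides routing management, service registration, and service discovery; Name Server nodes are stateless

- The Name Server is the service-discovery hub: Brokers report their status to it periodically, and a Broker that stops reporting for longer than the timeout is removed from the list. Producers and Consumers in turn pull Topic routing information from it periodically to locate the Brokers

- Multiple Name Servers can be deployed; Brokers then report to all of them at the same time, which keeps the service highly available

- Name Server nodes do not communicate with each other, and there is no master/standby relationship among them

- The Name Server keeps its data in memory; Broker and Topic information is not persisted by default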
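A small in-memory registry can illustrate the timeout-based removal described above. This is a hypothetical sketch, not RocketMQ's Name Server code. The class name `BrokerRegistrySketch`, its methods, and the 120 000 ms timeout in the usage example are assumptions chosen for illustration. The current time is passed in explicitly so the behavior is easy to trace.

```java
import java.util.*;
import java.util.concurrent.ConcurrentHashMap;

/**
 * Hypothetical sketch of a Name Server style registry: Brokers report
 * heartbeats, and a periodic scan drops Brokers whose last heartbeat is
 * older than the timeout. Everything lives in memory; nothing is persisted.
 */
class BrokerRegistrySketch {
    private final long timeoutMillis;
    // brokerAddr -> time of its last heartbeat
    private final Map<String, Long> lastHeartbeat = new ConcurrentHashMap<>();

    BrokerRegistrySketch(long timeoutMillis) {
        this.timeoutMillis = timeoutMillis;
    }

    /** Called whenever a Broker registers or re-reports itself. */
    void heartbeat(String brokerAddr, long nowMillis) {
        lastHeartbeat.put(brokerAddr, nowMillis);
    }

    /** Removes expired Brokers and returns their addresses, sorted. */
    List<String> scanExpired(long nowMillis) {
        List<String> removed = new ArrayList<>();
        for (Map.Entry<String, Long> e : lastHeartbeat.entrySet()) {
            if (nowMillis - e.getValue() > timeoutMillis) {
                removed.add(e.getKey());
            }
        }
        removed.forEach(lastHeartbeat::remove);
        Collections.sort(removed);
        return removed;
    }

    /** Brokers that are still considered alive. */
    Set<String> liveBrokers() {
        return new TreeSet<>(lastHeartbeat.keySet());
    }
}
```

In this usage, `192.168.1.102:10911` stops reporting and is dropped by the scan at 130 000 ms. `192.168.1.101:10911` reported again at 100 000 ms, so it survives. A restart would empty the map until Brokers report again, which matches the non-persistent storage described above.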

Installation

```shell
# Download
wget https://archive.apache.org/dist/rocketmq/4.6.1/rocketmq-all-4.6.1-bin-release.zip

# Unzip
unzip rocketmq-all-4.6.1-bin-release.zip
```

Go into the bin directory of the extracted RocketMQ package:

```shell
[root@localhost rocketmq-all-4.6.1-bin-release]# cd bin/
[root@localhost bin]# ll
total 108
-rwxr-xr-x 1 root root 1654 Nov 28 2019 cachedog.sh
-rwxr-xr-x 1 root root  845 Nov 28 2019 cleancache.sh
-rwxr-xr-x 1 root root 1116 Nov 28 2019 cleancache.v1.sh
drwxr-xr-x 2 root root   25 Nov 28 2019 dledger
-rwxr-xr-x 1 root root 1398 Nov 28 2019 mqadmin
-rwxr-xr-x 1 root root 1029 Nov 28 2019 mqadmin.cmd
-rwxr-xr-x 1 root root 1394 Nov 28 2019 mqbroker
-rwxr-xr-x 1 root root 1084 Nov 28 2019 mqbroker.cmd
-rwxr-xr-x 1 root root 1373 Nov 28 2019 mqbroker.numanode0
-rwxr-xr-x 1 root root 1373 Nov 28 2019 mqbroker.numanode1
-rwxr-xr-x 1 root root 1373 Nov 28 2019 mqbroker.numanode2
-rwxr-xr-x 1 root root 1373 Nov 28 2019 mqbroker.numanode3
-rwxr-xr-x 1 root root 1396 Nov 28 2019 mqnamesrv
-rwxr-xr-x 1 root root 1088 Nov 28 2019 mqnamesrv.cmd
-rwxr-xr-x 1 root root 1571 Nov 28 2019 mqshutdown
-rwxr-xr-x 1 root root 1398 Nov 28 2019 mqshutdown.cmd
-rwxr-xr-x 1 root root 2222 Nov 28 2019 os.sh
-rwxr-xr-x 1 root root 1148 Nov 28 2019 play.cmd
-rwxr-xr-x 1 root root 1008 Nov 28 2019 play.sh
-rwxr-xr-x 1 root root  772 Nov 28 2019 README.md
-rwxr-xr-x 1 root root 2206 Nov 28 2019 runbroker.cmd
-rwxr-xr-x 1 root root 3713 Dec 24 2019 runbroker.sh
-rwxr-xr-x 1 root root 1816 Nov 28 2019 runserver.cmd
-rwxr-xr-x 1 root root 3397 Dec 24 2019 runserver.sh
-rwxr-xr-x 1 root root 1156 Nov 28 2019 setcache.sh
-rwxr-xr-x 1 root root 1408 Nov 28 2019 startfsrv.sh
-rwxr-xr-x 1 root root 1634 Dec 24 2019 tools.cmd
-rwxr-xr-x 1 root root 1901 Dec 24 2019 tools.sh
```

The two scripts to start are mqnamesrv and mqbroker.

Start mqnamesrv first. It prints the following log:

```shell
[root@localhost bin]# ./mqnamesrv
Java HotSpot(TM) 64-Bit Server VM warning: Using the DefNew young collector with the CMS collector is deprecated and will likely be removed in a future release
Java HotSpot(TM) 64-Bit Server VM warning: UseCMSCompactAtFullCollection is deprecated and will likely be removed in a future release.
Java HotSpot(TM) 64-Bit Server VM warning: INFO: os::commit_memory(0x00000006c0000000, 2147483648, 0) failed; error='Cannot allocate memory' (errno=12)
#
# There is insufficient memory for the Java Runtime Environment to continue.
# Native memory allocation (mmap) failed to map 2147483648 bytes for committing reserved memory.
# An error report file with more information is saved as:
# /root/rocketmq-all-4.6.1-bin-release/bin/hs_err_pid2154.log
```

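The service failed to start. The first warning says that combining the DefNew young-generation collector with the CMS collector is deprecated and will likely be removed in a future release. The second warning says that the CMS option UseCMSCompactAtFullCollection is deprecated as well.

Neither warning is the reason startup failed. The real cause is on the third line: error='Cannot allocate memory' (errno=12), meaning memory could not be allocated. The first part of that line shows that the JVM tried to commit 2147483648 bytes (2 GB). The virtual machine used in this article has only 1 GB of memory, so startup failed.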

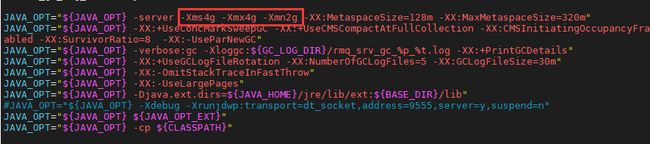

The startup parameters therefore need to be changed. Here is the mqnamesrv script:

```shell
if [ -z "$ROCKETMQ_HOME" ] ; then
  ## resolve links - $0 may be a link to maven's home
  PRG="$0"

  # need this for relative symlinks
  while [ -h "$PRG" ] ; do
    ls=`ls -ld "$PRG"`
    link=`expr "$ls" : '.*-> \(.*\)$'`
    if expr "$link" : '/.*' > /dev/null; then
      PRG="$link"
    else
      PRG="`dirname "$PRG"`/$link"
    fi
  done

  saveddir=`pwd`

  ROCKETMQ_HOME=`dirname "$PRG"`/..

  # make it fully qualified
  ROCKETMQ_HOME=`cd "$ROCKETMQ_HOME" && pwd`

  cd "$saveddir"
fi

export ROCKETMQ_HOME

sh ${ROCKETMQ_HOME}/bin/runserver.sh org.apache.rocketmq.namesrv.NamesrvStartup $@
```

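This script sets no memory options itself. Its last line calls another script, runserver.sh, so runserver.sh is the file to edit. In runserver.sh, find the JVM heap options (-Xms, -Xmx, -Xmn) in JAVA_OPT and lower them to values that fit the machine's memory.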

Start mqnamesrv again. This time the log looks like this:

```shell
[root@localhost bin]# ./mqnamesrv
Java HotSpot(TM) 64-Bit Server VM warning: Using the DefNew young collector with the CMS collector is deprecated and will likely be removed in a future release
Java HotSpot(TM) 64-Bit Server VM warning: UseCMSCompactAtFullCollection is deprecated and will likely be removed in a future release.
The Name Server boot success. serializeType=JSON
```

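The Name Server is now running.

mqbroker needs the same change: it hands off to runbroker.sh, so lower the memory options in that script the same way. After a successful start, the following log appears: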

```shell
[root@localhost bin]# ./mqbroker -n 192.168.1.101:9876 autoCreateTopicEnable=true
The broker[localhost.localdomain, 192.168.1.101:10911] boot success. serializeType=JSON and name server is 192.168.1.101:9876
```

`-n` specifies which Name Server to connect to. `autoCreateTopicEnable=true` makes the Broker create a Topic automatically if it does not exist yet.

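Testing

Synchronous messages

With synchronous sending, the producer blocks until the Broker responds, so the caller knows whether each message was stored. This makes it the reliable choice for important messages. Check `sendStatus` in the returned `SendResult`: `SEND_OK` means the Broker accepted the message.

Producer code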

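```java
import org.apache.rocketmq.client.exception.MQBrokerException;
import org.apache.rocketmq.client.exception.MQClientException;
import org.apache.rocketmq.client.producer.DefaultMQProducer;
import org.apache.rocketmq.client.producer.SendResult;
import org.apache.rocketmq.common.message.Message;
import org.apache.rocketmq.remoting.exception.RemotingException;

/**
 * @author sicimike
 * @create 2020-07-27 22:36
 */
public class SicimikeProducer {

    public static void main(String[] args) throws MQClientException, RemotingException, InterruptedException, MQBrokerException {
        DefaultMQProducer producer = new DefaultMQProducer("hello-group-producer");
        // Set the Name Server address
        producer.setNamesrvAddr("192.168.1.101:9876");
        // Start the Producer
        producer.start();
        // Message object: topic + body
        Message message = new Message("hello-topic", "Hello RocketMQ".getBytes());
        // Send synchronously: blocks until the Broker responds
        SendResult sendResult = producer.send(message);
        System.out.println("sendResult is : " + sendResult);
        // Shut down the Producer and close its connections
        producer.shutdown();
    }
}
```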

Consumer code

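```java
import java.util.List;

import org.apache.rocketmq.client.consumer.DefaultMQPushConsumer;
import org.apache.rocketmq.client.consumer.listener.ConsumeConcurrentlyContext;
import org.apache.rocketmq.client.consumer.listener.ConsumeConcurrentlyStatus;
import org.apache.rocketmq.client.consumer.listener.MessageListenerConcurrently;
import org.apache.rocketmq.client.exception.MQClientException;
import org.apache.rocketmq.common.message.MessageExt;

/**
 * @author sicimike
 * @create 2020-07-27 22:37
 */
public class SicimikeConsumer {

    public static void main(String[] args) throws MQClientException {
        DefaultMQPushConsumer consumer = new DefaultMQPushConsumer("hello-group-consumer");
        // Set the Name Server address
        consumer.setNamesrvAddr("192.168.1.101:9876");
        // Subscribe to the topic; the second argument is the tag filter, "*" means no filtering
        consumer.subscribe("hello-topic", "*");
        // Register the message listener
        consumer.registerMessageListener(new MessageListenerConcurrently() {
            @Override
            public ConsumeConcurrentlyStatus consumeMessage(List<MessageExt> list, ConsumeConcurrentlyContext consumeConcurrentlyContext) {
                // One call may deliver several messages
                for (MessageExt messageExt : list) {
                    System.out.println("message is : " + new String(messageExt.getBody()));
                }
                // Report that the messages were consumed successfully
                return ConsumeConcurrentlyStatus.CONSUME_SUCCESS;
            }
        });
        // Start the Consumer
        consumer.start();
    }
}
```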

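Batch messages

RocketMQ can pack several messages into a single send. This reduces the number of network round trips and improves throughput.

Producer code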

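```java
import java.util.ArrayList;
import java.util.List;

import org.apache.rocketmq.client.exception.MQBrokerException;
import org.apache.rocketmq.client.exception.MQClientException;
import org.apache.rocketmq.client.producer.DefaultMQProducer;
import org.apache.rocketmq.client.producer.SendResult;
import org.apache.rocketmq.common.message.Message;
import org.apache.rocketmq.remoting.exception.RemotingException;

public class SicimikeProducer {

    public static void main(String[] args) throws MQClientException, RemotingException, InterruptedException, MQBrokerException {
        DefaultMQProducer producer = new DefaultMQProducer("hello-group-producer");
        producer.setNamesrvAddr("192.168.1.101:9876");
        producer.start();

        // All messages in one batch use the same topic
        Message message1 = new Message("hello-topic", "Hello RocketMQ-1".getBytes());
        Message message2 = new Message("hello-topic", "Hello RocketMQ-2".getBytes());
        Message message3 = new Message("hello-topic", "Hello RocketMQ-3".getBytes());
        List<Message> messageList = new ArrayList<>();
        messageList.add(message1);
        messageList.add(message2);
        messageList.add(message3);

        // Send the whole list in one request
        SendResult sendResult = producer.send(messageList);
        System.out.println("sendResult is : " + sendResult);

        producer.shutdown();
    }
}
```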

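Batch sending has some restrictions:

- All messages in a batch must have the same topic and the same message settings (such as waitStoreMsgOK)

- Delayed (scheduled) messages are not supported

- A single batch should preferably stay under 1 MB

- If you are not sure whether a batch exceeds the limit, compute its size yourself and split it into several sends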
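The last restriction, splitting a batch that may be too large, can be sketched as a size-based splitter. To keep the sketch runnable without a Broker, it uses a hypothetical `SimpleMsg` class instead of RocketMQ's `Message`. The size estimate is an approximation, not the Broker's exact accounting: topic bytes plus body bytes plus an assumed 20-byte overhead per message.

```java
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;

/** Hypothetical stand-in for a message: just a topic and a body. */
final class SimpleMsg {
    final String topic;
    final byte[] body;

    SimpleMsg(String topic, byte[] body) {
        this.topic = topic;
        this.body = body;
    }
}

/** Splits messages into consecutive batches whose estimated size stays within a limit. */
final class BatchSplitter {
    static final int OVERHEAD_BYTES = 20; // assumed per-message overhead

    static int estimate(SimpleMsg m) {
        return m.topic.getBytes(StandardCharsets.UTF_8).length + m.body.length + OVERHEAD_BYTES;
    }

    static List<List<SimpleMsg>> split(List<SimpleMsg> msgs, int limitBytes) {
        List<List<SimpleMsg>> batches = new ArrayList<>();
        List<SimpleMsg> current = new ArrayList<>();
        int currentSize = 0;
        for (SimpleMsg m : msgs) {
            int size = estimate(m);
            if (size > limitBytes) {
                // A single oversized message can never fit into any batch
                throw new IllegalArgumentException("single message exceeds the batch limit");
            }
            if (currentSize + size > limitBytes) {
                // The current batch is full: close it and start a new one
                batches.add(current);
                current = new ArrayList<>();
                currentSize = 0;
            }
            current.add(m);
            currentSize += size;
        }
        if (!current.isEmpty()) {
            batches.add(current);
        }
        return batches;
    }
}
```

In a real producer, each inner list would be turned into `Message` objects and passed to its own `producer.send(...)` call. The limit would be set below the 1 MB recommendation above.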

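Asynchronous messages

Asynchronous messages are typically used in latency-sensitive business scenarios: `send()` returns immediately, and the result is delivered later to a callback.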

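```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.TimeUnit;

import org.apache.rocketmq.client.exception.MQBrokerException;
import org.apache.rocketmq.client.exception.MQClientException;
import org.apache.rocketmq.client.producer.DefaultMQProducer;
import org.apache.rocketmq.client.producer.SendCallback;
import org.apache.rocketmq.client.producer.SendResult;
import org.apache.rocketmq.common.message.Message;
import org.apache.rocketmq.remoting.exception.RemotingException;

public class SicimikeProducer {

    public static void main(String[] args) throws MQClientException, RemotingException, InterruptedException, MQBrokerException {
        DefaultMQProducer producer = new DefaultMQProducer("hello-group-producer");
        producer.setNamesrvAddr("192.168.1.101:9876");
        // Retries after a failed async send (not counting the first attempt)
        producer.setRetryTimesWhenSendAsyncFailed(0);
        producer.start();

        Message message = new Message("hello-topic", "Hello RocketMQ asynchronous".getBytes());
        // Lets main() wait for the callback before shutting the producer down
        CountDownLatch latch = new CountDownLatch(1);
        producer.send(message, new SendCallback() {
            @Override
            public void onSuccess(SendResult sendResult) {
                // Called when the send succeeds
                System.out.println("sendResult is : " + sendResult);
                latch.countDown();
            }

            @Override
            public void onException(Throwable e) {
                // Called when the send fails
                System.out.println("Exception : " + e);
                latch.countDown();
            }
        });
        // send() returns immediately; wait up to 5 seconds for either callback
        latch.await(5, TimeUnit.SECONDS);
        // Close the connection only after the callback has run (or the wait timed out)
        producer.shutdown();
    }
}
```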

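One-way messages

One-way sending is used where moderate reliability is acceptable, such as log collection. The producer does not wait for any response from the Broker, so sending is fast, but there is no guarantee that the message was stored.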

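```java
import org.apache.rocketmq.client.exception.MQBrokerException;
import org.apache.rocketmq.client.exception.MQClientException;
import org.apache.rocketmq.client.producer.DefaultMQProducer;
import org.apache.rocketmq.common.message.Message;
import org.apache.rocketmq.remoting.exception.RemotingException;

public class SicimikeProducer {

    public static void main(String[] args) throws MQClientException, RemotingException, InterruptedException, MQBrokerException {
        DefaultMQProducer producer = new DefaultMQProducer("hello-group-producer");
        producer.setNamesrvAddr("192.168.1.101:9876");
        producer.start();
        Message message = new Message("hello-topic", "Hello RocketMQ One way".getBytes());
        // Send a one-way message: no result is returned
        producer.sendOneway(message);
        // Give the in-flight send a moment to go out before closing the connection
        Thread.sleep(1000);
        producer.shutdown();
    }
}
```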

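Broadcast messages

Unlike the cases above, the consumer side decides whether messages are broadcast:

- Clustering (the default): within one Consumer Group, each message is consumed by only one Consumer instance

- Broadcasting: every Consumer instance in the group that subscribes to the Topic receives every message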

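```java
import java.util.List;

import org.apache.rocketmq.client.consumer.DefaultMQPushConsumer;
import org.apache.rocketmq.client.consumer.listener.ConsumeConcurrentlyContext;
import org.apache.rocketmq.client.consumer.listener.ConsumeConcurrentlyStatus;
import org.apache.rocketmq.client.consumer.listener.MessageListenerConcurrently;
import org.apache.rocketmq.client.exception.MQClientException;
import org.apache.rocketmq.common.message.MessageExt;
import org.apache.rocketmq.common.protocol.heartbeat.MessageModel;

public class SicimikeConsumer {

    public static void main(String[] args) throws MQClientException {
        DefaultMQPushConsumer consumer = new DefaultMQPushConsumer("hello-group-consumer");
        consumer.setNamesrvAddr("192.168.1.101:9876");
        consumer.subscribe("hello-topic", "*");
        // Clustering is the default mode
        // consumer.setMessageModel(MessageModel.CLUSTERING);
        // Switch to broadcasting
        consumer.setMessageModel(MessageModel.BROADCASTING);
        consumer.registerMessageListener(new MessageListenerConcurrently() {
            @Override
            public ConsumeConcurrentlyStatus consumeMessage(List<MessageExt> list, ConsumeConcurrentlyContext consumeConcurrentlyContext) {
                for (MessageExt messageExt : list) {
                    System.out.println("message is : " + new String(messageExt.getBody()));
                }
                return ConsumeConcurrentlyStatus.CONSUME_SUCCESS;
            }
        });
        consumer.start();
    }
}
```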
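This sketch shows which Queues each Consumer instance reads in the two modes. In clustering mode, the Topic's Queues are divided among the group's instances. The averaging rule below is a simplified illustration modeled on the idea of RocketMQ's default allocation strategy, not the library code. In broadcasting mode, every instance reads every Queue. `QueueAllocationSketch` is a hypothetical name, and `consumerIndex` is assumed to be the instance's position in a sorted list of the group's consumers.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical sketch of queue assignment in clustering vs. broadcasting mode. */
final class QueueAllocationSketch {

    /** Clustering: each queue goes to exactly one consumer; blocks are contiguous. */
    static List<Integer> clustering(int queueCount, int consumerCount, int consumerIndex) {
        int base = queueCount / consumerCount;
        int extra = queueCount % consumerCount;
        // The first `extra` consumers each take one additional queue
        int size = base + (consumerIndex < extra ? 1 : 0);
        int start = consumerIndex * base + Math.min(consumerIndex, extra);
        List<Integer> queues = new ArrayList<>();
        for (int q = start; q < start + size; q++) {
            queues.add(q);
        }
        return queues;
    }

    /** Broadcasting: every consumer in the group reads every queue. */
    static List<Integer> broadcasting(int queueCount) {
        List<Integer> queues = new ArrayList<>();
        for (int q = 0; q < queueCount; q++) {
            queues.add(q);
        }
        return queues;
    }
}
```

With 4 Queues and 2 consumers, clustering gives `[0, 1]` and `[2, 3]`. With more consumers than Queues, the extra consumers get nothing, so adding instances beyond the Queue count does not increase parallelism in clustering mode.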

Transactional messages

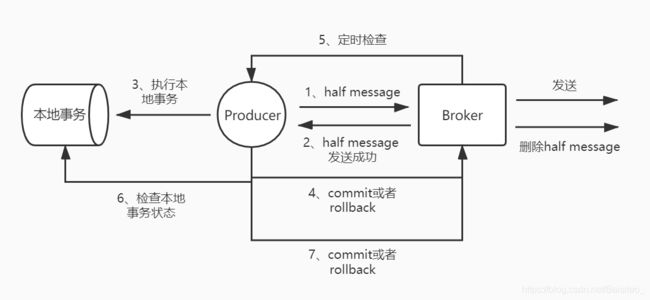

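A transactional message can be viewed as a two-phase-commit style mechanism for achieving eventual consistency in a distributed system. It ensures that executing the local transaction and sending the message happen atomically: either both take effect or neither does.

The figure shows how RocketMQ sends a transactional message. The producer first sends a Half Message to the Broker, which keeps it in a half-message queue where consumers cannot see it. Once that succeeds, the producer executes the local transaction. If the local transaction commits, RocketMQ turns the Half Message into a normal message and delivers it. If the local transaction rolls back, the Half Message is discarded. If the Broker receives neither a commit nor a rollback within the timeout, it periodically checks back with the producer for the status of the local transaction to decide whether to commit or roll back the message.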

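```java
import org.apache.rocketmq.client.exception.MQBrokerException;
import org.apache.rocketmq.client.exception.MQClientException;
import org.apache.rocketmq.client.producer.LocalTransactionState;
import org.apache.rocketmq.client.producer.SendResult;
import org.apache.rocketmq.client.producer.TransactionListener;
import org.apache.rocketmq.client.producer.TransactionMQProducer;
import org.apache.rocketmq.common.message.Message;
import org.apache.rocketmq.common.message.MessageExt;
import org.apache.rocketmq.remoting.exception.RemotingException;

public class SicimikeProducer {

    public static void main(String[] args) throws MQClientException, RemotingException, InterruptedException, MQBrokerException {
        TransactionMQProducer producer = new TransactionMQProducer("hello-group-producer");
        producer.setNamesrvAddr("192.168.1.101:9876");
        // Set the transaction listener
        producer.setTransactionListener(new TransactionListener() {
            @Override
            public LocalTransactionState executeLocalTransaction(Message msg, Object arg) {
                // Called after the Half Message is stored; arg is the 2nd argument of sendMessageInTransaction
                System.out.println("executeLocalTransaction msg is : " + new String(msg.getBody()));
                System.out.println("executeLocalTransaction arg is : " + arg);
                // Run the local transaction here. Returning UNKNOW defers the decision,
                // so the Broker will later call checkLocalTransaction
                return LocalTransactionState.UNKNOW;
            }

            @Override
            public LocalTransactionState checkLocalTransaction(MessageExt msg) {
                System.out.println("checkLocalTransaction msg is : " + new String(msg.getBody()));
                // Broker check-back: report the real status; COMMIT_MESSAGE makes the message consumable
                return LocalTransactionState.COMMIT_MESSAGE;
            }
        });
        producer.start();
        Message message = new Message("hello-topic", "Hello RocketMQ Transaction".getBytes());
        // Send the transactional message
        SendResult sendResult = producer.sendMessageInTransaction(message, "sicimike");
        System.out.println("sendResult is : " + sendResult);
        // The producer is left running so it can answer the Broker's check-back
    }
}
```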
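The half-message flow can be modeled without a Broker to show what each listener return value does. This is a hypothetical in-memory sketch. `TxState` only mirrors the names of RocketMQ's `LocalTransactionState`, including the `UNKNOW` spelling, and `HalfMessageSketch` is not part of any API. Real Brokers also limit how many times they check back, which this sketch leaves out.

```java
import java.util.*;
import java.util.function.Function;

/** Mirrors the three state names of RocketMQ's LocalTransactionState. */
enum TxState { COMMIT_MESSAGE, ROLLBACK_MESSAGE, UNKNOW }

/**
 * Hypothetical model of the Broker side: half messages are invisible until
 * committed, rolled-back ones are dropped, and UNKNOW ones wait for a check-back.
 */
final class HalfMessageSketch {
    private final Map<String, String> halfQueue = new LinkedHashMap<>(); // txId -> body, not consumable
    final List<String> visible = new ArrayList<>();                       // what consumers can read

    void sendHalf(String txId, String body) {
        halfQueue.put(txId, body);
    }

    /** Applies the producer's answer for one transaction. */
    void resolve(String txId, TxState state) {
        if (state == TxState.COMMIT_MESSAGE) {
            visible.add(halfQueue.remove(txId));
        } else if (state == TxState.ROLLBACK_MESSAGE) {
            halfQueue.remove(txId);
        }
        // UNKNOW: leave the message in the half queue for a later check
    }

    /** Periodic check-back: asks the producer about every pending transaction. */
    void checkPending(Function<String, TxState> checker) {
        for (String txId : new ArrayList<>(halfQueue.keySet())) {
            resolve(txId, checker.apply(txId));
        }
    }

    int pendingCount() {
        return halfQueue.size();
    }
}
```

The usage mirrors the example above: the local transaction answers `UNKNOW`, so nothing is visible yet. The check-back then answers `COMMIT_MESSAGE`, and the message becomes consumable.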

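Ordered messages

RocketMQ provides ordered messages with FIFO semantics, in two forms: globally ordered and partition ordered (ordered within each Queue).

Ordering has two sides, ordered sending and ordered consuming. A message stream only counts as ordered when both are guaranteed.

In RocketMQ every Topic has several Queues, and each Queue is FIFO. To keep sending ordered, it is therefore enough to send the related messages to the same Queue, and RocketMQ provides an API for this.

Producer code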

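```java
import java.util.List;

import org.apache.rocketmq.client.exception.MQBrokerException;
import org.apache.rocketmq.client.exception.MQClientException;
import org.apache.rocketmq.client.producer.DefaultMQProducer;
import org.apache.rocketmq.client.producer.MessageQueueSelector;
import org.apache.rocketmq.client.producer.SendResult;
import org.apache.rocketmq.common.message.Message;
import org.apache.rocketmq.common.message.MessageQueue;
import org.apache.rocketmq.remoting.exception.RemotingException;

public class SicimikeProducer {

    public static void main(String[] args) throws MQClientException, RemotingException, InterruptedException, MQBrokerException {
        DefaultMQProducer producer = new DefaultMQProducer("hello-group-producer");
        producer.setNamesrvAddr("192.168.1.101:9876");
        producer.start();
        for (int i = 0; i < 10; i++) {
            // All messages go to the same Topic
            Message message = new Message("hello-topic", ("Hello RocketMQ Order " + i).getBytes());
            // MessageQueueSelector chooses the Queue for each message
            SendResult sendResult = producer.send(message, new MessageQueueSelector() {
                @Override
                public MessageQueue select(List<MessageQueue> mqs, Message msg, Object arg) {
                    Integer id = (Integer) arg;
                    // Pick the Queue from the external argument: the same id always maps to the same Queue
                    return mqs.get(id % mqs.size());
                }
            }, 1234);
            System.out.println("sendResult is : " + sendResult);
        }
        producer.shutdown();
    }
}
```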
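The selector above comes down to one rule: the same key always maps to the same Queue. The sketch below works on Queue indexes instead of `MessageQueue` objects, so it runs on its own; the class name `QueueSelectorSketch` is hypothetical. It uses `Math.floorMod` instead of `%` because `id % mqs.size()` yields a negative index, and hence an exception, for a negative id or a negative `String.hashCode()`. If the Topic has 4 Queues, key 1234 maps to Queue 2 and key 4321 maps to Queue 1. That agrees with the queueId values in the two-thread output later in this section.

```java
/** Hypothetical sketch of "same key -> same queue" selection on queue indexes. */
final class QueueSelectorSketch {

    /** Numeric business key, as in the example above (1234 % queue count). */
    static int select(int key, int queueCount) {
        // floorMod keeps the result in [0, queueCount) even for negative keys
        return Math.floorMod(key, queueCount);
    }

    /** String business key such as an order ID; hashCode() may be negative. */
    static int select(String key, int queueCount) {
        return Math.floorMod(key.hashCode(), queueCount);
    }
}
```

The mapping depends on the Queue count, so messages for one key only stay in one Queue while the number of Queues stays fixed.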

Ordered consumption on the consumer side is just as simple; RocketMQ provides an API for it as well.

Consumer code

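```java
import java.util.List;

import org.apache.rocketmq.client.consumer.DefaultMQPushConsumer;
import org.apache.rocketmq.client.consumer.listener.ConsumeOrderlyContext;
import org.apache.rocketmq.client.consumer.listener.ConsumeOrderlyStatus;
import org.apache.rocketmq.client.consumer.listener.MessageListenerOrderly;
import org.apache.rocketmq.client.exception.MQClientException;
import org.apache.rocketmq.common.message.MessageExt;

public class SicimikeConsumer {

    public static void main(String[] args) throws MQClientException {
        DefaultMQPushConsumer consumer = new DefaultMQPushConsumer("hello-group-consumer");
        consumer.setNamesrvAddr("192.168.1.101:9876");
        consumer.subscribe("hello-topic", "*");
        // MessageListenerOrderly requests ordered consumption
        consumer.registerMessageListener(new MessageListenerOrderly() {
            @Override
            public ConsumeOrderlyStatus consumeMessage(List<MessageExt> msgs, ConsumeOrderlyContext context) {
                for (MessageExt messageExt : msgs) {
                    System.out.println(Thread.currentThread().getName() + " -> " + new String(messageExt.getBody()));
                }
                return ConsumeOrderlyStatus.SUCCESS;
            }
        });
        consumer.start();
    }
}
```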

Consumer output

```text
ConsumeMessageThread_1 -> Hello RocketMQ Order 0
ConsumeMessageThread_1 -> Hello RocketMQ Order 1
ConsumeMessageThread_1 -> Hello RocketMQ Order 2
ConsumeMessageThread_1 -> Hello RocketMQ Order 3
ConsumeMessageThread_1 -> Hello RocketMQ Order 4
ConsumeMessageThread_1 -> Hello RocketMQ Order 5
ConsumeMessageThread_1 -> Hello RocketMQ Order 6
ConsumeMessageThread_1 -> Hello RocketMQ Order 7
ConsumeMessageThread_1 -> Hello RocketMQ Order 8
ConsumeMessageThread_1 -> Hello RocketMQ Order 9
```

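MessageListenerOrderly locks each Queue so that only one thread consumes a given Queue at a time. Messages within a single Queue are therefore processed in order, while different Queues can still be consumed in parallel.

The following example verifies this: the Producer starts two threads, and each thread writes its messages to a different Queue.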

```java
import java.util.List;

import org.apache.rocketmq.client.exception.MQClientException;
import org.apache.rocketmq.client.producer.DefaultMQProducer;
import org.apache.rocketmq.client.producer.MessageQueueSelector;
import org.apache.rocketmq.client.producer.SendResult;
import org.apache.rocketmq.common.message.Message;
import org.apache.rocketmq.common.message.MessageQueue;

public class SicimikeProducer {

    public static void main(String[] args) throws MQClientException, InterruptedException {
        DefaultMQProducer producer = new DefaultMQProducer("hello-group-producer");
        producer.setNamesrvAddr("192.168.1.101:9876");
        producer.start();

        // Same rule as before: the business id picks the Queue (id % number of Queues)
        MessageQueueSelector selector = new MessageQueueSelector() {
            @Override
            public MessageQueue select(List<MessageQueue> mqs, Message msg, Object arg) {
                Integer id = (Integer) arg;
                return mqs.get(id % mqs.size());
            }
        };

        Thread t1 = new Thread(() -> sendTen(producer, selector, 1234));
        Thread t2 = new Thread(() -> sendTen(producer, selector, 4321));
        t1.start();
        t2.start();
        // Wait for both writers before shutting the producer down
        t1.join();
        t2.join();
        producer.shutdown();
    }

    // Sends 10 messages for the given id; all of them land in the same Queue
    private static void sendTen(DefaultMQProducer producer, MessageQueueSelector selector, int id) {
        try {
            for (int i = 0; i < 10; i++) {
                Message message = new Message("hello-topic", ("Hello RocketMQ Order " + id + "-" + i).getBytes());
                SendResult sendResult = producer.send(message, selector, id);
                System.out.println(Thread.currentThread().getName() + " -> sendResult is : " + sendResult);
            }
        } catch (Exception e) {
            // Don't swallow send failures silently
            e.printStackTrace();
        }
    }
}
```

Output

Thread-6 -> sendResult is : SendResult [sendStatus=SEND_OK, msgId=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863AE0001, offsetMsgId=C0A8016500002A9F0000000000013EA1, messageQueue=MessageQueue [topic=hello-topic, brokerName=localhost.localdomain, queueId=2], queueOffset=115]

Thread-7 -> sendResult is : SendResult [sendStatus=SEND_OK, msgId=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863AE0000, offsetMsgId=C0A8016500002A9F0000000000013DBD, messageQueue=MessageQueue [topic=hello-topic, brokerName=localhost.localdomain, queueId=1], queueOffset=18]

Thread-7 -> sendResult is : SendResult [sendStatus=SEND_OK, msgId=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863BD0003, offsetMsgId=C0A8016500002A9F0000000000013F85, messageQueue=MessageQueue [topic=hello-topic, brokerName=localhost.localdomain, queueId=1], queueOffset=19]

Thread-6 -> sendResult is : SendResult [sendStatus=SEND_OK, msgId=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863BD0002, offsetMsgId=C0A8016500002A9F0000000000014069, messageQueue=MessageQueue [topic=hello-topic, brokerName=localhost.localdomain, queueId=2], queueOffset=116]

Thread-6 -> sendResult is : SendResult [sendStatus=SEND_OK, msgId=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863C60004, offsetMsgId=C0A8016500002A9F000000000001414D, messageQueue=MessageQueue [topic=hello-topic, brokerName=localhost.localdomain, queueId=2], queueOffset=117]

Thread-7 -> sendResult is : SendResult [sendStatus=SEND_OK, msgId=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863C60005, offsetMsgId=C0A8016500002A9F0000000000014231, messageQueue=MessageQueue [topic=hello-topic, brokerName=localhost.localdomain, queueId=1], queueOffset=20]

Thread-6 -> sendResult is : SendResult [sendStatus=SEND_OK, msgId=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863CB0006, offsetMsgId=C0A8016500002A9F0000000000014315, messageQueue=MessageQueue [topic=hello-topic, brokerName=localhost.localdomain, queueId=2], queueOffset=118]

Thread-7 -> sendResult is : SendResult [sendStatus=SEND_OK, msgId=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863CD0007, offsetMsgId=C0A8016500002A9F00000000000143F9, messageQueue=MessageQueue [topic=hello-topic, brokerName=localhost.localdomain, queueId=1], queueOffset=21]

Thread-6 -> sendResult is : SendResult [sendStatus=SEND_OK, msgId=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863D30008, offsetMsgId=C0A8016500002A9F00000000000144DD, messageQueue=MessageQueue [topic=hello-topic, brokerName=localhost.localdomain, queueId=2], queueOffset=119]

Thread-7 -> sendResult is : SendResult [sendStatus=SEND_OK, msgId=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863D50009, offsetMsgId=C0A8016500002A9F00000000000145C1, messageQueue=MessageQueue [topic=hello-topic, brokerName=localhost.localdomain, queueId=1], queueOffset=22]

Thread-6 -> sendResult is : SendResult [sendStatus=SEND_OK, msgId=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863D8000A, offsetMsgId=C0A8016500002A9F00000000000146A5, messageQueue=MessageQueue [topic=hello-topic, brokerName=localhost.localdomain, queueId=2], queueOffset=120]

Thread-7 -> sendResult is : SendResult [sendStatus=SEND_OK, msgId=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863D9000B, offsetMsgId=C0A8016500002A9F0000000000014789, messageQueue=MessageQueue [topic=hello-topic, brokerName=localhost.localdomain, queueId=1], queueOffset=23]

Thread-6 -> sendResult is : SendResult [sendStatus=SEND_OK, msgId=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863E0000C, offsetMsgId=C0A8016500002A9F000000000001486D, messageQueue=MessageQueue [topic=hello-topic, brokerName=localhost.localdomain, queueId=2], queueOffset=121]

Thread-7 -> sendResult is : SendResult [sendStatus=SEND_OK, msgId=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863E6000D, offsetMsgId=C0A8016500002A9F0000000000014951, messageQueue=MessageQueue [topic=hello-topic, brokerName=localhost.localdomain, queueId=1], queueOffset=24]

Thread-6 -> sendResult is : SendResult [sendStatus=SEND_OK, msgId=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863E8000E, offsetMsgId=C0A8016500002A9F0000000000014A35, messageQueue=MessageQueue [topic=hello-topic, brokerName=localhost.localdomain, queueId=2], queueOffset=122]

Thread-7 -> sendResult is : SendResult [sendStatus=SEND_OK, msgId=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863E9000F, offsetMsgId=C0A8016500002A9F0000000000014B19, messageQueue=MessageQueue [topic=hello-topic, brokerName=localhost.localdomain, queueId=1], queueOffset=25]

Thread-6 -> sendResult is : SendResult [sendStatus=SEND_OK, msgId=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863ED0010, offsetMsgId=C0A8016500002A9F0000000000014BFD, messageQueue=MessageQueue [topic=hello-topic, brokerName=localhost.localdomain, queueId=2], queueOffset=123]

Thread-7 -> sendResult is : SendResult [sendStatus=SEND_OK, msgId=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863F40011, offsetMsgId=C0A8016500002A9F0000000000014CE1, messageQueue=MessageQueue [topic=hello-topic, brokerName=localhost.localdomain, queueId=1], queueOffset=26]

Thread-6 -> sendResult is : SendResult [sendStatus=SEND_OK, msgId=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863F50012, offsetMsgId=C0A8016500002A9F0000000000014DC5, messageQueue=MessageQueue [topic=hello-topic, brokerName=localhost.localdomain, queueId=2], queueOffset=124]

Thread-7 -> sendResult is : SendResult [sendStatus=SEND_OK, msgId=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863F80013, offsetMsgId=C0A8016500002A9F0000000000014EA9, messageQueue=MessageQueue [topic=hello-topic, brokerName=localhost.localdomain, queueId=1], queueOffset=27]

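The output shows that each thread writes to its own Queue: Thread-6 always lands in queueId=2 and Thread-7 always in queueId=1. Within each Queue, queueOffset increases one by one, so the writes are ordered. Now look at the consumer: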

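```java
import java.util.List;

import org.apache.rocketmq.client.consumer.DefaultMQPushConsumer;
import org.apache.rocketmq.client.consumer.listener.ConsumeOrderlyContext;
import org.apache.rocketmq.client.consumer.listener.ConsumeOrderlyStatus;
import org.apache.rocketmq.client.consumer.listener.MessageListenerOrderly;
import org.apache.rocketmq.client.exception.MQClientException;
import org.apache.rocketmq.common.message.MessageExt;

public class SicimikeConsumer {

    public static void main(String[] args) throws MQClientException {
        DefaultMQPushConsumer consumer = new DefaultMQPushConsumer("hello-group-consumer");
        consumer.setNamesrvAddr("192.168.1.101:9876");
        consumer.subscribe("hello-topic", "*");
        consumer.registerMessageListener(new MessageListenerOrderly() {
            @Override
            public ConsumeOrderlyStatus consumeMessage(List<MessageExt> msgs, ConsumeOrderlyContext context) {
                for (MessageExt messageExt : msgs) {
                    // Print the full message, including queueId and queueOffset
                    System.out.println(Thread.currentThread().getName() + " -> " + messageExt.toString());
                }
                return ConsumeOrderlyStatus.SUCCESS;
            }
        });
        consumer.start();
    }
}
```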

Output

ConsumeMessageThread_2 -> MessageExt [queueId=2, storeSize=228, queueOffset=115, sysFlag=0, bornTimestamp=1596467602352, bornHost=/192.168.1.33:9717, storeTimestamp=1596467602468, storeHost=/192.168.1.101:10911, msgId=C0A8016500002A9F0000000000013EA1, commitLogOffset=81569, bodyCRC=194455642, reconsumeTimes=0, preparedTransactionOffset=0, toString()=Message{topic='hello-topic', flag=0, properties={MIN_OFFSET=0, MAX_OFFSET=125, UNIQ_KEY=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863AE0001, CLUSTER=DefaultCluster, WAIT=true}, body=[72, 101, 108, 108, 111, 32, 82, 111, 99, 107, 101, 116, 77, 81, 32, 79, 114, 100, 101, 114, 32, 49, 50, 51, 52, 45, 48], transactionId='null'}]

ConsumeMessageThread_1 -> MessageExt [queueId=1, storeSize=228, queueOffset=18, sysFlag=0, bornTimestamp=1596467602352, bornHost=/192.168.1.33:9717, storeTimestamp=1596467602467, storeHost=/192.168.1.101:10911, msgId=C0A8016500002A9F0000000000013DBD, commitLogOffset=81341, bodyCRC=1481455063, reconsumeTimes=0, preparedTransactionOffset=0, toString()=Message{topic='hello-topic', flag=0, properties={MIN_OFFSET=0, MAX_OFFSET=28, UNIQ_KEY=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863AE0000, CLUSTER=DefaultCluster, WAIT=true}, body=[72, 101, 108, 108, 111, 32, 82, 111, 99, 107, 101, 116, 77, 81, 32, 79, 114, 100, 101, 114, 32, 52, 51, 50, 49, 45, 48], transactionId='null'}]

ConsumeMessageThread_1 -> MessageExt [queueId=1, storeSize=228, queueOffset=19, sysFlag=0, bornTimestamp=1596467602365, bornHost=/192.168.1.33:9717, storeTimestamp=1596467602482, storeHost=/192.168.1.101:10911, msgId=C0A8016500002A9F0000000000013F85, commitLogOffset=81797, bodyCRC=793380161, reconsumeTimes=0, preparedTransactionOffset=0, toString()=Message{topic='hello-topic', flag=0, properties={MIN_OFFSET=0, MAX_OFFSET=28, UNIQ_KEY=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863BD0003, CLUSTER=DefaultCluster, WAIT=true}, body=[72, 101, 108, 108, 111, 32, 82, 111, 99, 107, 101, 116, 77, 81, 32, 79, 114, 100, 101, 114, 32, 52, 51, 50, 49, 45, 49], transactionId='null'}]

ConsumeMessageThread_2 -> MessageExt [queueId=2, storeSize=228, queueOffset=116, sysFlag=0, bornTimestamp=1596467602365, bornHost=/192.168.1.33:9717, storeTimestamp=1596467602482, storeHost=/192.168.1.101:10911, msgId=C0A8016500002A9F0000000000014069, commitLogOffset=82025, bodyCRC=2089818316, reconsumeTimes=0, preparedTransactionOffset=0, toString()=Message{topic='hello-topic', flag=0, properties={MIN_OFFSET=0, MAX_OFFSET=125, UNIQ_KEY=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863BD0002, CLUSTER=DefaultCluster, WAIT=true}, body=[72, 101, 108, 108, 111, 32, 82, 111, 99, 107, 101, 116, 77, 81, 32, 79, 114, 100, 101, 114, 32, 49, 50, 51, 52, 45, 49], transactionId='null'}]

ConsumeMessageThread_1 -> MessageExt [queueId=1, storeSize=228, queueOffset=20, sysFlag=0, bornTimestamp=1596467602374, bornHost=/192.168.1.33:9717, storeTimestamp=1596467602486, storeHost=/192.168.1.101:10911, msgId=C0A8016500002A9F0000000000014231, commitLogOffset=82481, bodyCRC=910382331, reconsumeTimes=0, preparedTransactionOffset=0, toString()=Message{topic='hello-topic', flag=0, properties={MIN_OFFSET=0, MAX_OFFSET=28, UNIQ_KEY=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863C60005, CLUSTER=DefaultCluster, WAIT=true}, body=[72, 101, 108, 108, 111, 32, 82, 111, 99, 107, 101, 116, 77, 81, 32, 79, 114, 100, 101, 114, 32, 52, 51, 50, 49, 45, 50], transactionId='null'}]

ConsumeMessageThread_1 -> MessageExt [queueId=1, storeSize=228, queueOffset=21, sysFlag=0, bornTimestamp=1596467602381, bornHost=/192.168.1.33:9717, storeTimestamp=1596467602496, storeHost=/192.168.1.101:10911, msgId=C0A8016500002A9F00000000000143F9, commitLogOffset=82937, bodyCRC=1095001197, reconsumeTimes=0, preparedTransactionOffset=0, toString()=Message{topic='hello-topic', flag=0, properties={MIN_OFFSET=0, MAX_OFFSET=28, UNIQ_KEY=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863CD0007, CLUSTER=DefaultCluster, WAIT=true}, body=[72, 101, 108, 108, 111, 32, 82, 111, 99, 107, 101, 116, 77, 81, 32, 79, 114, 100, 101, 114, 32, 52, 51, 50, 49, 45, 51], transactionId='null'}]

ConsumeMessageThread_2 -> MessageExt [queueId=2, storeSize=228, queueOffset=117, sysFlag=0, bornTimestamp=1596467602374, bornHost=/192.168.1.33:9717, storeTimestamp=1596467602486, storeHost=/192.168.1.101:10911, msgId=C0A8016500002A9F000000000001414D, commitLogOffset=82253, bodyCRC=1704544630, reconsumeTimes=0, preparedTransactionOffset=0, toString()=Message{topic='hello-topic', flag=0, properties={MIN_OFFSET=0, MAX_OFFSET=125, UNIQ_KEY=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863C60004, CLUSTER=DefaultCluster, WAIT=true}, body=[72, 101, 108, 108, 111, 32, 82, 111, 99, 107, 101, 116, 77, 81, 32, 79, 114, 100, 101, 114, 32, 49, 50, 51, 52, 45, 50], transactionId='null'}]

ConsumeMessageThread_1 -> MessageExt [queueId=1, storeSize=228, queueOffset=22, sysFlag=0, bornTimestamp=1596467602389, bornHost=/192.168.1.33:9717, storeTimestamp=1596467602500, storeHost=/192.168.1.101:10911, msgId=C0A8016500002A9F00000000000145C1, commitLogOffset=83393, bodyCRC=1595994574, reconsumeTimes=0, preparedTransactionOffset=0, toString()=Message{topic='hello-topic', flag=0, properties={MIN_OFFSET=0, MAX_OFFSET=28, UNIQ_KEY=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863D50009, CLUSTER=DefaultCluster, WAIT=true}, body=[72, 101, 108, 108, 111, 32, 82, 111, 99, 107, 101, 116, 77, 81, 32, 79, 114, 100, 101, 114, 32, 52, 51, 50, 49, 45, 52], transactionId='null'}]

ConsumeMessageThread_2 -> MessageExt [queueId=2, storeSize=228, queueOffset=118, sysFlag=0, bornTimestamp=1596467602379, bornHost=/192.168.1.33:9717, storeTimestamp=1596467602491, storeHost=/192.168.1.101:10911, msgId=C0A8016500002A9F0000000000014315, commitLogOffset=82709, bodyCRC=312375776, reconsumeTimes=0, preparedTransactionOffset=0, toString()=Message{topic='hello-topic', flag=0, properties={MIN_OFFSET=0, MAX_OFFSET=125, UNIQ_KEY=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863CB0006, CLUSTER=DefaultCluster, WAIT=true}, body=[72, 101, 108, 108, 111, 32, 82, 111, 99, 107, 101, 116, 77, 81, 32, 79, 114, 100, 101, 114, 32, 49, 50, 51, 52, 45, 51], transactionId='null'}]

ConsumeMessageThread_1 -> MessageExt [queueId=1, storeSize=228, queueOffset=23, sysFlag=0, bornTimestamp=1596467602393, bornHost=/192.168.1.33:9717, storeTimestamp=1596467602506, storeHost=/192.168.1.101:10911, msgId=C0A8016500002A9F0000000000014789, commitLogOffset=83849, bodyCRC=673694040, reconsumeTimes=0, preparedTransactionOffset=0, toString()=Message{topic='hello-topic', flag=0, properties={MIN_OFFSET=0, MAX_OFFSET=28, UNIQ_KEY=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863D9000B, CLUSTER=DefaultCluster, WAIT=true}, body=[72, 101, 108, 108, 111, 32, 82, 111, 99, 107, 101, 116, 77, 81, 32, 79, 114, 100, 101, 114, 32, 52, 51, 50, 49, 45, 53], transactionId='null'}]

ConsumeMessageThread_2 -> MessageExt [queueId=2, storeSize=228, queueOffset=119, sysFlag=0, bornTimestamp=1596467602387, bornHost=/192.168.1.33:9717, storeTimestamp=1596467602498, storeHost=/192.168.1.101:10911, msgId=C0A8016500002A9F00000000000144DD, commitLogOffset=83165, bodyCRC=217771075, reconsumeTimes=0, preparedTransactionOffset=0, toString()=Message{topic='hello-topic', flag=0, properties={MIN_OFFSET=0, MAX_OFFSET=125, UNIQ_KEY=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863D30008, CLUSTER=DefaultCluster, WAIT=true}, body=[72, 101, 108, 108, 111, 32, 82, 111, 99, 107, 101, 116, 77, 81, 32, 79, 114, 100, 101, 114, 32, 49, 50, 51, 52, 45, 52], transactionId='null'}]

ConsumeMessageThread_1 -> MessageExt [queueId=1, storeSize=228, queueOffset=24, sysFlag=0, bornTimestamp=1596467602406, bornHost=/192.168.1.33:9717, storeTimestamp=1596467602517, storeHost=/192.168.1.101:10911, msgId=C0A8016500002A9F0000000000014951, commitLogOffset=84305, bodyCRC=825135330, reconsumeTimes=0, preparedTransactionOffset=0, toString()=Message{topic='hello-topic', flag=0, properties={MIN_OFFSET=0, MAX_OFFSET=28, UNIQ_KEY=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863E6000D, CLUSTER=DefaultCluster, WAIT=true}, body=[72, 101, 108, 108, 111, 32, 82, 111, 99, 107, 101, 116, 77, 81, 32, 79, 114, 100, 101, 114, 32, 52, 51, 50, 49, 45, 54], transactionId='null'}]

ConsumeMessageThread_2 -> MessageExt [queueId=2, storeSize=228, queueOffset=120, sysFlag=0, bornTimestamp=1596467602392, bornHost=/192.168.1.33:9717, storeTimestamp=1596467602506, storeHost=/192.168.1.101:10911, msgId=C0A8016500002A9F00000000000146A5, commitLogOffset=83621, bodyCRC=2080234709, reconsumeTimes=0, preparedTransactionOffset=0, toString()=Message{topic='hello-topic', flag=0, properties={MIN_OFFSET=0, MAX_OFFSET=125, UNIQ_KEY=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863D8000A, CLUSTER=DefaultCluster, WAIT=true}, body=[72, 101, 108, 108, 111, 32, 82, 111, 99, 107, 101, 116, 77, 81, 32, 79, 114, 100, 101, 114, 32, 49, 50, 51, 52, 45, 53], transactionId='null'}]

ConsumeMessageThread_1 -> MessageExt [queueId=1, storeSize=228, queueOffset=25, sysFlag=0, bornTimestamp=1596467602409, bornHost=/192.168.1.33:9717, storeTimestamp=1596467602524, storeHost=/192.168.1.101:10911, msgId=C0A8016500002A9F0000000000014B19, commitLogOffset=84761, bodyCRC=1177133172, reconsumeTimes=0, preparedTransactionOffset=0, toString()=Message{topic='hello-topic', flag=0, properties={MIN_OFFSET=0, MAX_OFFSET=28, UNIQ_KEY=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863E9000F, CLUSTER=DefaultCluster, WAIT=true}, body=[72, 101, 108, 108, 111, 32, 82, 111, 99, 107, 101, 116, 77, 81, 32, 79, 114, 100, 101, 114, 32, 52, 51, 50, 49, 45, 55], transactionId='null'}]

ConsumeMessageThread_2 -> MessageExt [queueId=2, storeSize=228, queueOffset=121, sysFlag=0, bornTimestamp=1596467602400, bornHost=/192.168.1.33:9717, storeTimestamp=1596467602514, storeHost=/192.168.1.101:10911, msgId=C0A8016500002A9F000000000001486D, commitLogOffset=84077, bodyCRC=1660194159, reconsumeTimes=0, preparedTransactionOffset=0, toString()=Message{topic='hello-topic', flag=0, properties={MIN_OFFSET=0, MAX_OFFSET=125, UNIQ_KEY=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863E0000C, CLUSTER=DefaultCluster, WAIT=true}, body=[72, 101, 108, 108, 111, 32, 82, 111, 99, 107, 101, 116, 77, 81, 32, 79, 114, 100, 101, 114, 32, 49, 50, 51, 52, 45, 54], transactionId='null'}]

ConsumeMessageThread_1 -> MessageExt [queueId=1, storeSize=228, queueOffset=26, sysFlag=0, bornTimestamp=1596467602420, bornHost=/192.168.1.33:9717, storeTimestamp=1596467602531, storeHost=/192.168.1.101:10911, msgId=C0A8016500002A9F0000000000014CE1, commitLogOffset=85217, bodyCRC=1452719589, reconsumeTimes=0, preparedTransactionOffset=0, toString()=Message{topic='hello-topic', flag=0, properties={MIN_OFFSET=0, MAX_OFFSET=28, UNIQ_KEY=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863F40011, CLUSTER=DefaultCluster, WAIT=true}, body=[72, 101, 108, 108, 111, 32, 82, 111, 99, 107, 101, 116, 77, 81, 32, 79, 114, 100, 101, 114, 32, 52, 51, 50, 49, 45, 56], transactionId='null'}]

ConsumeMessageThread_2 -> MessageExt [queueId=2, storeSize=228, queueOffset=122, sysFlag=0, bornTimestamp=1596467602408, bornHost=/192.168.1.33:9717, storeTimestamp=1596467602519, storeHost=/192.168.1.101:10911, msgId=C0A8016500002A9F0000000000014A35, commitLogOffset=84533, bodyCRC=368295417, reconsumeTimes=0, preparedTransactionOffset=0, toString()=Message{topic='hello-topic', flag=0, properties={MIN_OFFSET=0, MAX_OFFSET=125, UNIQ_KEY=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863E8000E, CLUSTER=DefaultCluster, WAIT=true}, body=[72, 101, 108, 108, 111, 32, 82, 111, 99, 107, 101, 116, 77, 81, 32, 79, 114, 100, 101, 114, 32, 49, 50, 51, 52, 45, 55], transactionId='null'}]

ConsumeMessageThread_1 -> MessageExt [queueId=1, storeSize=228, queueOffset=27, sysFlag=0, bornTimestamp=1596467602424, bornHost=/192.168.1.33:9717, storeTimestamp=1596467602535, storeHost=/192.168.1.101:10911, msgId=C0A8016500002A9F0000000000014EA9, commitLogOffset=85673, bodyCRC=563187059, reconsumeTimes=0, preparedTransactionOffset=0, toString()=Message{topic='hello-topic', flag=0, properties={MIN_OFFSET=0, MAX_OFFSET=28, UNIQ_KEY=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863F80013, CLUSTER=DefaultCluster, WAIT=true}, body=[72, 101, 108, 108, 111, 32, 82, 111, 99, 107, 101, 116, 77, 81, 32, 79, 114, 100, 101, 114, 32, 52, 51, 50, 49, 45, 57], transactionId='null'}]

ConsumeMessageThread_2 -> MessageExt [queueId=2, storeSize=228, queueOffset=123, sysFlag=0, bornTimestamp=1596467602413, bornHost=/192.168.1.33:9717, storeTimestamp=1596467602525, storeHost=/192.168.1.101:10911, msgId=C0A8016500002A9F0000000000014BFD, commitLogOffset=84989, bodyCRC=88907880, reconsumeTimes=0, preparedTransactionOffset=0, toString()=Message{topic='hello-topic', flag=0, properties={MIN_OFFSET=0, MAX_OFFSET=125, UNIQ_KEY=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863ED0010, CLUSTER=DefaultCluster, WAIT=true}, body=[72, 101, 108, 108, 111, 32, 82, 111, 99, 107, 101, 116, 77, 81, 32, 79, 114, 100, 101, 114, 32, 49, 50, 51, 52, 45, 56], transactionId='null'}]

ConsumeMessageThread_2 -> MessageExt [queueId=2, storeSize=228, queueOffset=124, sysFlag=0, bornTimestamp=1596467602421, bornHost=/192.168.1.33:9717, storeTimestamp=1596467602532, storeHost=/192.168.1.101:10911, msgId=C0A8016500002A9F0000000000014DC5, commitLogOffset=85445, bodyCRC=1917554942, reconsumeTimes=0, preparedTransactionOffset=0, toString()=Message{topic='hello-topic', flag=0, properties={MIN_OFFSET=0, MAX_OFFSET=125, UNIQ_KEY=24098A4C0A222600A1B5C95D6F74641E000018B4AAC20F4863F50012, CLUSTER=DefaultCluster, WAIT=true}, body=[72, 101, 108, 108, 111, 32, 82, 111, 99, 107, 101, 116, 77, 81, 32, 79, 114, 100, 101, 114, 32, 49, 50, 51, 52, 45, 57], transactionId='null'}]

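Each Queue is consumed in order. queueId=1 goes from queueOffset 18 to 27, all on ConsumeMessageThread_1. queueId=2 goes from 115 to 124, all on ConsumeMessageThread_2. Messages from the two Queues are interleaved with each other.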
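The behavior above, one pool of consume threads with only one thread at a time per Queue, can be modeled with a lock per Queue. This is a simplified, hypothetical sketch (`OrderlyConsumeSketch` is not RocketMQ code). A message is taken from a Queue only while that Queue's lock is held, so each Queue keeps its order even though both Queues are drained by the same thread pool.

```java
import java.util.List;
import java.util.Map;
import java.util.Queue;
import java.util.concurrent.ConcurrentHashMap;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.CopyOnWriteArrayList;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

/** Hypothetical sketch: shared thread pool, one lock per queue, per-queue order preserved. */
final class OrderlyConsumeSketch {
    private final Map<Integer, Queue<String>> pending = new ConcurrentHashMap<>();
    private final Map<Integer, Object> locks = new ConcurrentHashMap<>();
    // What each queue actually consumed, in processing order
    final Map<Integer, List<String>> consumed = new ConcurrentHashMap<>();

    void enqueue(int queueId, String msg) {
        pending.computeIfAbsent(queueId, k -> new ConcurrentLinkedQueue<>()).add(msg);
    }

    /** Takes and "processes" the next message of one queue while holding that queue's lock. */
    void consumeNext(int queueId) {
        Object lock = locks.computeIfAbsent(queueId, k -> new Object());
        synchronized (lock) {
            String msg = pending.get(queueId).poll();
            if (msg != null) {
                consumed.computeIfAbsent(queueId, k -> new CopyOnWriteArrayList<>()).add(msg);
            }
        }
    }

    /** Loads two queues with 10 messages each and drains them on a pool of `threads`. */
    static OrderlyConsumeSketch demo(int threads) {
        OrderlyConsumeSketch s = new OrderlyConsumeSketch();
        for (int i = 0; i < 10; i++) {
            s.enqueue(1, "4321-" + i);
            s.enqueue(2, "1234-" + i);
        }
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        for (int i = 0; i < 10; i++) {
            pool.submit(() -> s.consumeNext(1));
            pool.submit(() -> s.consumeNext(2));
        }
        pool.shutdown();
        try {
            pool.awaitTermination(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return s;
    }
}
```

Removing the `synchronized` block would let two pool threads poll from the same Queue concurrently and append their results in either order. That is exactly the interleaving the orderly listener avoids.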

Summary

This article covered RocketMQ's installation, basic concepts, and basic usage.