ffmpeg Source Code Analysis and Application Examples (1): H.264 Decoding and QP Extraction

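This article covers the following:

1. A detailed walk-through of the H.264 decoding process and the corresponding ffmpeg source code

2. A worked example that solves a practical problem by modifying the ffmpeg source

Together, the two parts cover both the theory and its hands-on application.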

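First, here is the practical problem that Part 2 solves. Since ffmpeg 1.2, the AVFrame struct, which describes decoded raw audio/video data, has been moved out of libavcodec and into libavutil. The change is reasonable, but it also causes some problems. For example, AVFrame used to expose the decoded QP table (int8_t *qscale_table) and QP stride (int qstride) directly. These two fields can only be filled in by decoding with libavcodec. So once AVFrame became independent, they were marked deprecated (attribute_deprecated) and can no longer be extracted, along with many other fields such as mbskip_table. QP tables are needed all the time for work such as bitstream analysis or quality assessment. This article therefore shows how to analyze and modify the ffmpeg source to get the QP table and QP stride back.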

Here is a quick introduction to the basic workflow for debugging and modifying the ffmpeg source.

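Start with the usual configure and make. The configure command does not need to be elaborate; something as simple as the one below is enough. Do not add --enable-shared, otherwise you will not be able to step into the library code while debugging. On Windows you need MinGW.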

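```shell
./configure --enable-gpl --enable-debug --disable-optimizations --disable-asm --enable-ffplay
make
```

Next, set up Eclipse with CDT:

1. Choose File -> New -> Makefile Project with Existing Code.
2. In the next dialog, select Linux GCC under Toolchain for Indexer Settings.
3. Once the project is created, right-click ffmpeg_g and choose Debug to start debugging.

If you modify the source, just run make again after each change.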

H.264 Decoding Process and the Corresponding ffmpeg Source

The analysis here is based on the ffmpeg source as of 2015-06-30.

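ffmpeg uses av_register_all to register its many codecs, container formats, protocols and so on. When an operation is performed on a given kind of content, the matching registered functions are called. For example, the API function avcodec_decode_video2 performs the key part of decoding by calling the decode function of the AVCodec: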

```c
int attribute_align_arg avcodec_decode_video2(AVCodecContext *avctx, AVFrame *picture,
                                              int *got_picture_ptr,
                                              const AVPacket *avpkt)
{
    AVCodecInternal *avci = avctx->internal;
    int ret;
    // copy to ensure we do not change avpkt
    AVPacket tmp = *avpkt;

    if (!avctx->codec)
        return AVERROR(EINVAL);
    if (avctx->codec->type != AVMEDIA_TYPE_VIDEO) {
        av_log(avctx, AV_LOG_ERROR, "Invalid media type for video\n");
        return AVERROR(EINVAL);
    }

    *got_picture_ptr = 0;
    if ((avctx->coded_width || avctx->coded_height) && av_image_check_size(avctx->coded_width, avctx->coded_height, 0, avctx))
        return AVERROR(EINVAL);

    av_frame_unref(picture);

    if ((avctx->codec->capabilities & CODEC_CAP_DELAY) || avpkt->size || (avctx->active_thread_type & FF_THREAD_FRAME)) {
        int did_split = av_packet_split_side_data(&tmp);
        ret = apply_param_change(avctx, &tmp);
        if (ret < 0) {
            av_log(avctx, AV_LOG_ERROR, "Error applying parameter changes.\n");
            if (avctx->err_recognition & AV_EF_EXPLODE)
                goto fail;
        }

        avctx->internal->pkt = &tmp;
        if (HAVE_THREADS && avctx->active_thread_type & FF_THREAD_FRAME)
            ret = ff_thread_decode_frame(avctx, picture, got_picture_ptr,
                                         &tmp);
        else {
            ret = avctx->codec->decode(avctx, picture, got_picture_ptr,
                                       &tmp); // the core of the decoding work
            picture->pkt_dts = avpkt->dts;

            if(!avctx->has_b_frames){
                av_frame_set_pkt_pos(picture, avpkt->pos);
            }
            //FIXME these should be under if(!avctx->has_b_frames)
            /* get_buffer is supposed to set frame parameters */
            if (!(avctx->codec->capabilities & CODEC_CAP_DR1)) {
                if (!picture->sample_aspect_ratio.num) picture->sample_aspect_ratio = avctx->sample_aspect_ratio;
                if (!picture->width) picture->width = avctx->width;
                if (!picture->height) picture->height = avctx->height;
                if (picture->format == AV_PIX_FMT_NONE) picture->format = avctx->pix_fmt;
            }
        }
        add_metadata_from_side_data(avctx, picture);

fail:
        emms_c(); //needed to avoid an emms_c() call before every return;

        avctx->internal->pkt = NULL;
        if (did_split) {
            av_packet_free_side_data(&tmp);
            if(ret == tmp.size)
                ret = avpkt->size;
        }

        if (*got_picture_ptr) {
            if (!avctx->refcounted_frames) {
                int err = unrefcount_frame(avci, picture);
                if (err < 0)
                    return err;
            }

            avctx->frame_number++;
            av_frame_set_best_effort_timestamp(picture,
                                               guess_correct_pts(avctx,
                                                                 picture->pkt_pts,
                                                                 picture->pkt_dts));
        } else
            av_frame_unref(picture);
    } else
        ret = 0;

    /* many decoders assign whole AVFrames, thus overwriting extended_data;
     * make sure it's set correctly */
    av_assert0(!picture->extended_data || picture->extended_data == picture->data);

#if FF_API_AVCTX_TIMEBASE
    if (avctx->framerate.num > 0 && avctx->framerate.den > 0)
        avctx->time_base = av_inv_q(av_mul_q(avctx->framerate, (AVRational){avctx->ticks_per_frame, 1}));
#endif

    return ret;
}
```

The registered H.264-specific decoding functions are contained in the ff_h264_decoder struct:

```c
AVCodec ff_h264_decoder = {
    .name                  = "h264",
    .long_name             = NULL_IF_CONFIG_SMALL("H.264 / AVC / MPEG-4 AVC / MPEG-4 part 10"),
    .type                  = AVMEDIA_TYPE_VIDEO,
    .id                    = AV_CODEC_ID_H264,
    .priv_data_size        = sizeof(H264Context),
    .init                  = ff_h264_decode_init,
    .close                 = h264_decode_end,
    .decode                = h264_decode_frame,
    .capabilities          = /*CODEC_CAP_DRAW_HORIZ_BAND |*/ CODEC_CAP_DR1 |
                             CODEC_CAP_DELAY | CODEC_CAP_SLICE_THREADS |
                             CODEC_CAP_FRAME_THREADS,
    .flush                 = flush_dpb,
    .init_thread_copy      = ONLY_IF_THREADS_ENABLED(decode_init_thread_copy),
    .update_thread_context = ONLY_IF_THREADS_ENABLED(ff_h264_update_thread_context),
    .profiles              = NULL_IF_CONFIG_SMALL(profiles),
    .priv_class            = &h264_class,
};
```

As you can see, it is simply an AVCodec struct holding the H.264 decoder's init, decode and close operations. So to analyze the H.264 decoding part of the ffmpeg source, the function to focus on is h264_decode_frame.
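The registration-and-dispatch pattern can be shown with a minimal, self-contained sketch. It has three pieces:

- a codec struct built with designated initializers, like ff_h264_decoder;
- a lookup table that stands in for the registered codec list;
- a generic entry point that, like avcodec_decode_video2, only calls through the `.decode` function pointer.

All names here (MiniCodec, find_codec, mini_decode, fake_decode) are hypothetical. The real AVCodec has many more fields and a different decode signature.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* Hypothetical, heavily simplified stand-in for AVCodec: only a name and a
 * decode callback, mirroring the .name and .decode fields shown above. */
typedef struct MiniCodec {
    const char *name;
    int (*decode)(const unsigned char *buf, int size, int *got_frame);
} MiniCodec;

/* Toy decode callback: "consumes" the whole packet and reports a frame
 * whenever the packet is non-empty. A real decoder does its work here. */
static int fake_decode(const unsigned char *buf, int size, int *got_frame)
{
    (void)buf;
    *got_frame = size > 0;
    return size; /* number of bytes consumed */
}

/* Designated initializers, in the same style as ff_h264_decoder. */
static const MiniCodec mini_h264_decoder = {
    .name   = "h264",
    .decode = fake_decode,
};

/* NULL-terminated table playing the role of the registered codec list. */
static const MiniCodec *const registry[] = { &mini_h264_decoder, NULL };

static const MiniCodec *find_codec(const char *name)
{
    for (size_t i = 0; registry[i]; i++)
        if (strcmp(registry[i]->name, name) == 0)
            return registry[i];
    return NULL;
}

/* Generic entry point: like avcodec_decode_video2, it knows nothing about
 * H.264 and simply calls through the codec's function pointer. */
static int mini_decode(const MiniCodec *codec, const unsigned char *buf,
                       int size, int *got_frame)
{
    assert(got_frame != NULL);
    *got_frame = 0;
    if (!codec || !codec->decode)
        return -1;
    return codec->decode(buf, size, got_frame);
}
```

The generic layer never needs to know which codec it is driving. That is why all of the H.264-specific work hangs off the function pointers in ff_h264_decoder.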

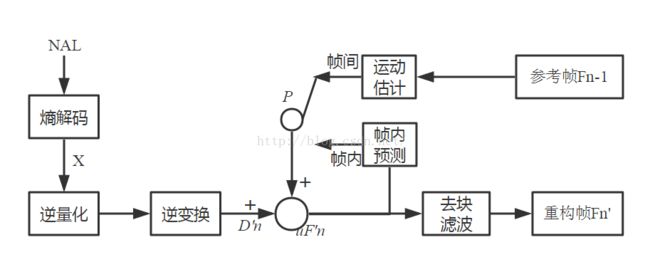

先给出一个标准的H.264解码流程图,如下

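The decoding process runs as follows:

1. The decoder receives the compressed bitstream from the NAL units and entropy-decodes it to obtain the quantized coefficients X and other syntax elements.
2. X is rescaled (inverse quantized) and inverse transformed to give the residual data D'.
3. Using the header information decoded from the bitstream, the decoder forms a prediction block P.
4. Adding P and D' gives the unfiltered reconstructed block uF'.
5. Finally, each uF' passes through the deblocking filter to produce the decoded block F of the reconstructed picture.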
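The summation step in this flow, uF' = P + D', is a per-sample addition followed by clipping to the legal sample range. Below is a minimal sketch for one 4x4 block of 8-bit samples. The function names are hypothetical, and deblocking (uF' to F) is not shown. In ffmpeg this work happens inside macroblock reconstruction (ff_h264_hl_decode_mb), using optimized DSP routines.

```c
#include <assert.h>
#include <stdint.h>

/* Clip a value to the 8-bit sample range [0, 255]. */
static uint8_t clip_uint8(int v)
{
    return (uint8_t)(v < 0 ? 0 : v > 255 ? 255 : v);
}

/* uF' = clip(P + D') for one 4x4 block, samples in raster order.
 * pred:     prediction block P (from intra or inter prediction)
 * residual: D', the output of inverse quantization + inverse transform
 * out:      unfiltered reconstructed block uF' (before deblocking) */
static void reconstruct_4x4(const uint8_t pred[16], const int16_t residual[16],
                            uint8_t out[16])
{
    assert(pred && residual && out);
    for (int i = 0; i < 16; i++)
        out[i] = clip_uint8(pred[i] + residual[i]);
}
```

The clipping matters because the residual is signed and can push a sample outside [0, 255].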

Each stage of this flowchart is examined below against the source.

The first stage receives and decodes the compressed bitstream from the NAL units. This is done by the call to decode_nal_units in h264_decode_frame:

```c
static int h264_decode_frame(AVCodecContext *avctx, void *data,
                             int *got_frame, AVPacket *avpkt)
{
    ……
    if (h->is_avc && av_packet_get_side_data(avpkt, AV_PKT_DATA_NEW_EXTRADATA, NULL)) {
        int side_size;
        uint8_t *side = av_packet_get_side_data(avpkt, AV_PKT_DATA_NEW_EXTRADATA, &side_size);
        if (is_extra(side, side_size))
            ff_h264_decode_extradata(h, side, side_size);
    }
    if(h->is_avc && buf_size >= 9 && buf[0]==1 && buf[2]==0 && (buf[4]&0xFC)==0xFC && (buf[5]&0x1F) && buf[8]==0x67){
        if (is_extra(buf, buf_size))
            return ff_h264_decode_extradata(h, buf, buf_size);
    }

    buf_index = decode_nal_units(h, buf, buf_size, 0);
    if (buf_index < 0)
        return AVERROR_INVALIDDATA;

    if (!h->cur_pic_ptr && h->nal_unit_type == NAL_END_SEQUENCE) {
        av_assert0(buf_index <= buf_size);
        goto out;
    }
    ……
    av_assert0(pict->buf[0] || !*got_frame);

    ff_h264_unref_picture(h, &h->last_pic_for_ec);

    return get_consumed_bytes(buf_index, buf_size);
}
```

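Inside decode_nal_units, ff_h264_decode_nal is called first to parse each NAL unit. A different handler is then called depending on the NAL unit type; for an SPS, for example, it calls ff_h264_decode_seq_parameter_set. Once the NAL units have been parsed, ff_h264_execute_decode_slices is called to do the actual slice decoding.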
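For each NAL unit, decode_nal_units first needs the one-byte header defined by the H.264 specification. It has three fields:

- forbidden_zero_bit (1 bit)
- nal_ref_idc (2 bits)
- nal_unit_type (5 bits)

The switch on `hx->nal_unit_type` below dispatches on the last field. The following self-contained sketch parses the header and maps the types handled in that switch to a label. The type values (1 = non-IDR slice, 5 = IDR slice, 6 = SEI, 7 = SPS, 8 = PPS, and so on) follow the standard; the helper names are hypothetical.

```c
#include <stdint.h>

/* Fields of the one-byte H.264 NAL unit header. */
typedef struct NalHeader {
    int forbidden_zero_bit; /* must be 0 in a conforming stream */
    int nal_ref_idc;        /* 0 = not used for reference */
    int nal_unit_type;      /* what the payload is: slice, SPS, PPS, SEI, ... */
} NalHeader;

static NalHeader parse_nal_header(uint8_t b)
{
    NalHeader h;
    h.forbidden_zero_bit = (b >> 7) & 0x01;
    h.nal_ref_idc        = (b >> 5) & 0x03;
    h.nal_unit_type      =  b       & 0x1F;
    return h;
}

/* Rough counterpart of the switch in decode_nal_units: map a type to the
 * kind of handling it receives there. */
static const char *nal_type_name(int type)
{
    switch (type) {
    case 1:  return "slice";          /* NAL_SLICE */
    case 2: case 3: case 4:
             return "data partition"; /* NAL_DPA/DPB/DPC: not supported */
    case 5:  return "IDR slice";      /* NAL_IDR_SLICE */
    case 6:  return "SEI";            /* NAL_SEI */
    case 7:  return "SPS";            /* NAL_SPS */
    case 8:  return "PPS";            /* NAL_PPS */
    case 9: case 10: case 11: case 12: case 13: case 19:
             return "ignored";        /* AUD, end of seq/stream, filler, SPS ext, aux slice */
    default: return "unknown";
    }
}
```

For example, the byte 0x67, which the extradata check in h264_decode_frame looks for at buf[8], decodes to nal_ref_idc = 3 and nal_unit_type = 7 (an SPS).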

```c
static int decode_nal_units(H264Context *h, const uint8_t *buf, int buf_size,
                            int parse_extradata)
{
    AVCodecContext *const avctx = h->avctx;
    H264Context *hx; ///< thread context
    ……
        for (;;) {
            ……
            ptr = ff_h264_decode_nal(hx, buf + buf_index, &dst_length,
                                     &consumed, next_avc - buf_index);
            if (!ptr || dst_length < 0) {
                ret = -1;
                goto end;
            }
            ……
            switch (hx->nal_unit_type) {
            case NAL_IDR_SLICE:
                if ((ptr[0] & 0xFC) == 0x98) {
                    av_log(h->avctx, AV_LOG_ERROR, "Invalid inter IDR frame\n");
                    h->next_outputed_poc = INT_MIN;
                    ret = -1;
                    goto end;
                }
                if (h->nal_unit_type != NAL_IDR_SLICE) {
                    av_log(h->avctx, AV_LOG_ERROR,
                           "Invalid mix of idr and non-idr slices\n");
                    ret = -1;
                    goto end;
                }
                if(!idr_cleared)
                    idr(h); // FIXME ensure we don't lose some frames if there is reordering
                idr_cleared = 1;
                h->has_recovery_point = 1;
            case NAL_SLICE:
                init_get_bits(&hx->gb, ptr, bit_length);
                hx->intra_gb_ptr =
                hx->inter_gb_ptr = &hx->gb;

                if ((err = ff_h264_decode_slice_header(hx, h)))
                    break;

                if (h->sei_recovery_frame_cnt >= 0) {
                    if (h->frame_num != h->sei_recovery_frame_cnt || hx->slice_type_nos != AV_PICTURE_TYPE_I)
                        h->valid_recovery_point = 1;

                    if (   h->recovery_frame < 0
                        || ((h->recovery_frame - h->frame_num) & ((1 << h->sps.log2_max_frame_num)-1)) > h->sei_recovery_frame_cnt) {
                        h->recovery_frame = (h->frame_num + h->sei_recovery_frame_cnt) &
                                            ((1 << h->sps.log2_max_frame_num) - 1);

                        if (!h->valid_recovery_point)
                            h->recovery_frame = h->frame_num;
                    }
                }

                h->cur_pic_ptr->f.key_frame |=
                    (hx->nal_unit_type == NAL_IDR_SLICE);

                if (hx->nal_unit_type == NAL_IDR_SLICE ||
                    h->recovery_frame == h->frame_num) {
                    h->recovery_frame = -1;
                    h->cur_pic_ptr->recovered = 1;
                }
                // If we have an IDR, all frames after it in decoded order are
                // "recovered".
                if (hx->nal_unit_type == NAL_IDR_SLICE)
                    h->frame_recovered |= FRAME_RECOVERED_IDR;
                h->frame_recovered |= 3*!!(avctx->flags2 & CODEC_FLAG2_SHOW_ALL);
                h->frame_recovered |= 3*!!(avctx->flags & CODEC_FLAG_OUTPUT_CORRUPT);
#if 1
                h->cur_pic_ptr->recovered |= h->frame_recovered;
#else
                h->cur_pic_ptr->recovered |= !!(h->frame_recovered & FRAME_RECOVERED_IDR);
#endif

                if (h->current_slice == 1) {
                    if (!(avctx->flags2 & CODEC_FLAG2_CHUNKS))
                        decode_postinit(h, nal_index >= nals_needed);

                    if (h->avctx->hwaccel &&
                        (ret = h->avctx->hwaccel->start_frame(h->avctx, NULL, 0)) < 0)
                        return ret;
                    if (CONFIG_H264_VDPAU_DECODER &&
                        h->avctx->codec->capabilities & CODEC_CAP_HWACCEL_VDPAU)
                        ff_vdpau_h264_picture_start(h);
                }

                if (hx->redundant_pic_count == 0) {
                    if (avctx->hwaccel) {
                        ret = avctx->hwaccel->decode_slice(avctx,
                                                           &buf[buf_index - consumed],
                                                           consumed);
                        if (ret < 0)
                            return ret;
                    } else if (CONFIG_H264_VDPAU_DECODER &&
                               h->avctx->codec->capabilities & CODEC_CAP_HWACCEL_VDPAU) {
                        ff_vdpau_add_data_chunk(h->cur_pic_ptr->f.data[0],
                                                start_code,
                                                sizeof(start_code));
                        ff_vdpau_add_data_chunk(h->cur_pic_ptr->f.data[0],
                                                &buf[buf_index - consumed],
                                                consumed);
                    } else
                        context_count++;
                }
                break;
            case NAL_DPA:
            case NAL_DPB:
            case NAL_DPC:
                avpriv_request_sample(avctx, "data partitioning");
                ret = AVERROR(ENOSYS);
                goto end;
                break;
            case NAL_SEI:
                init_get_bits(&h->gb, ptr, bit_length);
                ret = ff_h264_decode_sei(h);
                if (ret < 0 && (h->avctx->err_recognition & AV_EF_EXPLODE))
                    goto end;
                break;
            case NAL_SPS:
                init_get_bits(&h->gb, ptr, bit_length);
                if (ff_h264_decode_seq_parameter_set(h) < 0 && (h->is_avc ? nalsize : 1)) {
                    av_log(h->avctx, AV_LOG_DEBUG,
                           "SPS decoding failure, trying again with the complete NAL\n");
                    if (h->is_avc)
                        av_assert0(next_avc - buf_index + consumed == nalsize);
                    if ((next_avc - buf_index + consumed - 1) >= INT_MAX/8)
                        break;
                    init_get_bits(&h->gb, &buf[buf_index + 1 - consumed],
                                  8*(next_avc - buf_index + consumed - 1));
                    ff_h264_decode_seq_parameter_set(h);
                }

                break;
            case NAL_PPS:
                init_get_bits(&h->gb, ptr, bit_length);
                ret = ff_h264_decode_picture_parameter_set(h, bit_length);
                if (ret < 0 && (h->avctx->err_recognition & AV_EF_EXPLODE))
                    goto end;
                break;
            case NAL_AUD:
            case NAL_END_SEQUENCE:
            case NAL_END_STREAM:
            case NAL_FILLER_DATA:
            case NAL_SPS_EXT:
            case NAL_AUXILIARY_SLICE:
                break;
            case NAL_FF_IGNORE:
                break;
            default:
                av_log(avctx, AV_LOG_DEBUG, "Unknown NAL code: %d (%d bits)\n",
                       hx->nal_unit_type, bit_length);
            }

            if (context_count == h->max_contexts) {
                ret = ff_h264_execute_decode_slices(h, context_count);
                if (ret < 0 && (h->avctx->err_recognition & AV_EF_EXPLODE))
                    goto end;
                context_count = 0;
            }

            if (err < 0 || err == SLICE_SKIPED) {
                if (err < 0)
                    av_log(h->avctx, AV_LOG_ERROR, "decode_slice_header error\n");
                h->ref_count[0] = h->ref_count[1] = h->list_count = 0;
            } else if (err == SLICE_SINGLETHREAD) {
                /* Slice could not be decoded in parallel mode, copy down
                 * NAL unit stuff to context 0 and restart. Note that
                 * rbsp_buffer is not transferred, but since we no longer
                 * run in parallel mode this should not be an issue. */
                h->nal_unit_type = hx->nal_unit_type;
                h->nal_ref_idc   = hx->nal_ref_idc;
                hx               = h;
                goto again;
            }
        }
    }
    if (context_count) {
        ret = ff_h264_execute_decode_slices(h, context_count);
        if (ret < 0 && (h->avctx->err_recognition & AV_EF_EXPLODE))
            goto end;
    }

    ret = 0;
end:
    /* clean up */
    if (h->cur_pic_ptr && !h->droppable) {
        ff_thread_report_progress(&h->cur_pic_ptr->tf, INT_MAX,
                                  h->picture_structure == PICT_BOTTOM_FIELD);
    }

    return (ret < 0) ? ret : buf_index;
}
```

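ff_h264_execute_decode_slices simply hands the slices to decode_slice. With a single slice context it calls decode_slice directly; otherwise it goes through avctx->execute.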
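The dispatch in ff_h264_execute_decode_slices follows a simple contract. execute() calls the worker once per element of an array of context pointers, and the master context then pulls state back from the last one. Below is a serial sketch of that contract using hypothetical types and names (SliceCtx, execute_serial, decode_one_slice). ffmpeg's default execute implementation is essentially such a loop; slice threading runs the same calls in parallel.

```c
#include <assert.h>
#include <stddef.h>

/* Hypothetical stand-in for a per-slice H264Context. */
typedef struct SliceCtx {
    int first_mb; /* first macroblock of the slice */
    int mb_count; /* macroblocks in the slice */
    int last_mb;  /* written by the worker */
} SliceCtx;

typedef int (*slice_fn)(void *arg);

/* Serial execute(): call fn once per array element; record each return
 * value if rets is non-NULL. */
static int execute_serial(slice_fn fn, void *args, int *rets, int count,
                          size_t elem_size)
{
    for (int i = 0; i < count; i++) {
        int r = fn((char *)args + i * elem_size);
        if (rets)
            rets[i] = r;
    }
    return 0;
}

/* Worker: like decode_slice, it receives a pointer to a context pointer. */
static int decode_one_slice(void *arg)
{
    SliceCtx *s = *(SliceCtx **)arg;
    s->last_mb = s->first_mb + s->mb_count - 1;
    return 0;
}

/* Mirrors the structure of ff_h264_execute_decode_slices: ctxs[0] is the
 * master context itself, as thread_context[0] is h. */
static int execute_slices(SliceCtx *master, SliceCtx **ctxs, int count)
{
    assert(count > 0 && ctxs[0] == master);
    if (count == 1)
        return decode_one_slice(&master);
    execute_serial(decode_one_slice, ctxs, NULL, count, sizeof(SliceCtx *));
    /* pull back state from the last slice context into the master */
    master->last_mb = ctxs[count - 1]->last_mb;
    return 0;
}
```

Passing an array of pointers plus an element size lets the same execute() serve any worker and context type.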

```c
/**
 * Call decode_slice() for each context.
 *
 * @param h h264 master context
 * @param context_count number of contexts to execute
 */
int ff_h264_execute_decode_slices(H264Context *h, unsigned context_count)
{
    AVCodecContext *const avctx = h->avctx;
    H264Context *hx;
    int i;

    av_assert0(h->mb_y < h->mb_height);

    if (h->avctx->hwaccel ||
        h->avctx->codec->capabilities & CODEC_CAP_HWACCEL_VDPAU)
        return 0;
    if (context_count == 1) {
        return decode_slice(avctx, &h);
    } else {
        av_assert0(context_count > 0);
        for (i = 1; i < context_count; i++) {
            hx = h->thread_context[i];
            if (CONFIG_ERROR_RESILIENCE) {
                hx->er.error_count = 0;
            }
            hx->x264_build = h->x264_build;
        }

        avctx->execute(avctx, decode_slice, h->thread_context,
                       NULL, context_count, sizeof(void *));

        /* pull back stuff from slices to master context */
        hx                   = h->thread_context[context_count - 1];
        h->mb_x              = hx->mb_x;
        h->mb_y              = hx->mb_y;
        h->droppable         = hx->droppable;
        h->picture_structure = hx->picture_structure;
        if (CONFIG_ERROR_RESILIENCE) {
            for (i = 1; i < context_count; i++)
                h->er.error_count += h->thread_context[i]->er.error_count;
        }
    }

    return 0;
}
```

Next, let's look at decode_slice:

```c
static int decode_slice(struct AVCodecContext *avctx, void *arg)
{
    H264Context *h = *(void **)arg;
    ……
    if (h->pps.cabac) {
        /* realign */
        align_get_bits(&h->gb);

        /* init cabac */
        ff_init_cabac_decoder(&h->cabac,
                              h->gb.buffer + get_bits_count(&h->gb) / 8,
                              (get_bits_left(&h->gb) + 7) / 8);

        ff_h264_init_cabac_states(h);

        for (;;) {
            // START_TIMER
            int ret = ff_h264_decode_mb_cabac(h);
            int eos;
            // STOP_TIMER("decode_mb_cabac")

            if (ret >= 0)
                ff_h264_hl_decode_mb(h);

            // FIXME optimal? or let mb_decode decode 16x32 ?
            if (ret >= 0 && FRAME_MBAFF(h)) {
                h->mb_y++;

                ret = ff_h264_decode_mb_cabac(h);

                if (ret >= 0)
                    ff_h264_hl_decode_mb(h);
                h->mb_y--;
            }
            eos = get_cabac_terminate(&h->cabac);

            if ((h->workaround_bugs & FF_BUG_TRUNCATED) &&
                h->cabac.bytestream > h->cabac.bytestream_end + 2) {
                er_add_slice(h, h->resync_mb_x, h->resync_mb_y, h->mb_x - 1,
                             h->mb_y, ER_MB_END);
                if (h->mb_x >= lf_x_start)
                    loop_filter(h, lf_x_start, h->mb_x + 1);
                return 0;
            }
            if (h->cabac.bytestream > h->cabac.bytestream_end + 2 )
                av_log(h->avctx, AV_LOG_DEBUG, "bytestream overread %"PTRDIFF_SPECIFIER"\n", h->cabac.bytestream_end - h->cabac.bytestream);
            if (ret < 0 || h->cabac.bytestream > h->cabac.bytestream_end + 4) {
                av_log(h->avctx, AV_LOG_ERROR,
                       "error while decoding MB %d %d, bytestream %"PTRDIFF_SPECIFIER"\n",
                       h->mb_x, h->mb_y,
                       h->cabac.bytestream_end - h->cabac.bytestream);
                er_add_slice(h, h->resync_mb_x, h->resync_mb_y, h->mb_x,
                             h->mb_y, ER_MB_ERROR);
                return AVERROR_INVALIDDATA;
            }

            if (++h->mb_x >= h->mb_width) {
                loop_filter(h, lf_x_start, h->mb_x);
                h->mb_x = lf_x_start = 0;
                decode_finish_row(h);
                ++h->mb_y;
                if (FIELD_OR_MBAFF_PICTURE(h)) {
                    ++h->mb_y;
                    if (FRAME_MBAFF(h) && h->mb_y < h->mb_height)
                        predict_field_decoding_flag(h);
                }
            }

            if (eos || h->mb_y >= h->mb_height) {
                tprintf(h->avctx, "slice end %d %d\n",
                        get_bits_count(&h->gb), h->gb.size_in_bits);
                er_add_slice(h, h->resync_mb_x, h->resync_mb_y, h->mb_x - 1,
                             h->mb_y, ER_MB_END);
                if (h->mb_x > lf_x_start)
                    loop_filter(h, lf_x_start, h->mb_x);
                return 0;
            }
        }
    } else {
        for (;;) {
            int ret = ff_h264_decode_mb_cavlc(h);

            if (ret >= 0)
                ff_h264_hl_decode_mb(h);

            // FIXME optimal? or let mb_decode decode 16x32 ?
            if (ret >= 0 && FRAME_MBAFF(h)) {
                h->mb_y++;
                ret = ff_h264_decode_mb_cavlc(h);

                if (ret >= 0)
                    ff_h264_hl_decode_mb(h);
                h->mb_y--;
            }

            if (ret < 0) {
                av_log(h->avctx, AV_LOG_ERROR,
                       "error while decoding MB %d %d\n", h->mb_x, h->mb_y);
                er_add_slice(h, h->resync_mb_x, h->resync_mb_y, h->mb_x,
                             h->mb_y, ER_MB_ERROR);
                return ret;
            }

            if (++h->mb_x >= h->mb_width) {
                loop_filter(h, lf_x_start, h->mb_x);
                h->mb_x = lf_x_start = 0;
                decode_finish_row(h);
                ++h->mb_y;
                if (FIELD_OR_MBAFF_PICTURE(h)) {
                    ++h->mb_y;
                    if (FRAME_MBAFF(h) && h->mb_y < h->mb_height)
                        predict_field_decoding_flag(h);
                }
                if (h->mb_y >= h->mb_height) {
                    tprintf(h->avctx, "slice end %d %d\n",
                            get_bits_count(&h->gb), h->gb.size_in_bits);

                    if (   get_bits_left(&h->gb) == 0
                        || get_bits_left(&h->gb) > 0 && !(h->avctx->err_recognition & AV_EF_AGGRESSIVE)) {
                        er_add_slice(h, h->resync_mb_x, h->resync_mb_y,
                                     h->mb_x - 1, h->mb_y, ER_MB_END);

                        return 0;
                    } else {
                        er_add_slice(h, h->resync_mb_x, h->resync_mb_y,
                                     h->mb_x, h->mb_y, ER_MB_END);

                        return AVERROR_INVALIDDATA;
                    }
                }
            }

            if (get_bits_left(&h->gb) <= 0 && h->mb_skip_run <= 0) {
                tprintf(h->avctx, "slice end %d %d\n",
                        get_bits_count(&h->gb), h->gb.size_in_bits);

                if (get_bits_left(&h->gb) == 0) {
                    er_add_slice(h, h->resync_mb_x, h->resync_mb_y,
                                 h->mb_x - 1, h->mb_y, ER_MB_END);
                    if (h->mb_x > lf_x_start)
                        loop_filter(h, lf_x_start, h->mb_x);

                    return 0;
                } else {
                    er_add_slice(h, h->resync_mb_x, h->resync_mb_y, h->mb_x,
                                 h->mb_y, ER_MB_ERROR);

                    return AVERROR_INVALIDDATA;
                }
            }
        }
    }
}
```

At this point the overall structure is clear:

1. The PPS determines whether CABAC or CAVLC entropy coding is used.
2. For each macroblock, the matching entropy decoding function is called: ff_h264_decode_mb_cabac or ff_h264_decode_mb_cavlc.
3. The macroblock is then reconstructed with ff_h264_hl_decode_mb, which also covers intra and inter prediction.
4. Finally, loop_filter applies the deblocking filter.

On top of the standard flow there is also error concealment, fed through er_add_slice.
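In CAVLC mode, many macroblock-level syntax elements are Exp-Golomb codes. One of them is mb_qp_delta, coded as se(v), which turns the predicted QP into the macroblock's own QP. The sketch below shows the lowest layer of that entropy decoding: a minimal bit reader with ue(v)/se(v) decoding, following the mapping in the H.264 specification. The last helper applies the 8-bit luma QP update, QP = (QP_pred + mb_qp_delta + 52) % 52; higher bit depths add an offset that is omitted here.

All names are hypothetical. ffmpeg's own get_ue_golomb/get_se_golomb in libavcodec/golomb.h are optimized and table-driven.

```c
#include <stddef.h>
#include <stdint.h>

/* Minimal MSB-first bit reader (hypothetical; not ffmpeg's GetBitContext). */
typedef struct BitReader {
    const uint8_t *buf;
    size_t size_bits;
    size_t pos; /* current bit position */
} BitReader;

/* Next bit, or -1 once the buffer is exhausted. */
static int read_bit(BitReader *br)
{
    if (br->pos >= br->size_bits)
        return -1;
    int bit = (br->buf[br->pos >> 3] >> (7 - (br->pos & 7))) & 1;
    br->pos++;
    return bit;
}

/* ue(v): count n leading zero bits, skip the 1, read n more bits;
 * codeNum = 2^n - 1 + those bits. Returns -1 on truncated/oversized codes. */
static long read_ue(BitReader *br)
{
    int n = 0, b;
    while ((b = read_bit(br)) == 0)
        if (++n > 30)
            return -1;
    if (b < 0)
        return -1;
    unsigned long info = 0;
    for (int i = 0; i < n; i++) {
        if ((b = read_bit(br)) < 0)
            return -1;
        info = (info << 1) | (unsigned long)b;
    }
    return (long)((1UL << n) - 1 + info);
}

/* se(v): codeNum k maps to 0, 1, -1, 2, -2, ...  Returns 0 on success. */
static int read_se(BitReader *br, long *out)
{
    long k = read_ue(br);
    if (k < 0)
        return -1;
    *out = (k & 1) ? (k + 1) / 2 : -(k / 2);
    return 0;
}

/* 8-bit luma QP update: QP = (QP_pred + mb_qp_delta + 52) % 52. */
static int update_qp(int qp_pred, long mb_qp_delta)
{
    return (int)((qp_pred + mb_qp_delta + 52) % 52);
}
```

Each macroblock QP produced this way is what ends up in the per-frame QP table.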

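For the purposes of this article, this level of analysis is enough. Going further means patiently working through the code against the standard; many related articles online can help.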

Modifying the ffmpeg Source to Extract QP

With the analysis of ffmpeg's H.264 decoding code above, we can now solve the practical problem raised at the beginning of the article.

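Every macroblock has its own QP value, and collecting the QP values of all macroblocks in a frame gives the QP table. The place to patch is h264_decode_frame (libavcodec/h264.c), the function that decodes one frame. When a frame has been decoded successfully, got_frame is set to 1; at that point the decoded QP table can be assigned to the output AVFrame. The modified code is shown below, and the change is very simple. The same QP table assignment is also needed in the buffer-flush path: the `buf_size == 0` branch that outputs the delayed pictures at end of stream.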

```c
static int h264_decode_frame(AVCodecContext *avctx, void *data,
                             int *got_frame, AVPacket *avpkt)
{
    const uint8_t *buf = avpkt->data;
    int buf_size       = avpkt->size;
    H264Context *h     = avctx->priv_data;
    AVFrame *pict      = data;
    int buf_index      = 0;
    H264Picture *out;
    int i, out_idx;
    int ret;

    h->flags = avctx->flags;

    ff_h264_unref_picture(h, &h->last_pic_for_ec);

    /* end of stream, output what is still in the buffers */
    if (buf_size == 0) {
 out:

        h->cur_pic_ptr = NULL;
        h->first_field = 0;

        // FIXME factorize this with the output code below
        out     = h->delayed_pic[0];
        out_idx = 0;
        for (i = 1;
             h->delayed_pic[i] &&
             !h->delayed_pic[i]->f.key_frame &&
             !h->delayed_pic[i]->mmco_reset;
             i++)
            if (h->delayed_pic[i]->poc < out->poc) {
                out     = h->delayed_pic[i];
                out_idx = i;
            }

        for (i = out_idx; h->delayed_pic[i]; i++)
            h->delayed_pic[i] = h->delayed_pic[i + 1];

        if (out) {
            out->reference &= ~DELAYED_PIC_REF;
            ret = output_frame(h, pict, out);
            if (ret < 0)
                return ret;
            //get the qscale table, modified by zhanghui
            if(out->qscale_table)
            {
                pict->qscale_table=out->qscale_table;
                pict->qstride=h->mb_stride;
                pict->qscale_type=FF_QSCALE_TYPE_H264;
            }
            *got_frame = 1;
        }

        return buf_index;
    }
    if (h->is_avc && av_packet_get_side_data(avpkt, AV_PKT_DATA_NEW_EXTRADATA, NULL)) {
        int side_size;
        uint8_t *side = av_packet_get_side_data(avpkt, AV_PKT_DATA_NEW_EXTRADATA, &side_size);
        if (is_extra(side, side_size))
            ff_h264_decode_extradata(h, side, side_size);
    }
    if(h->is_avc && buf_size >= 9 && buf[0]==1 && buf[2]==0 && (buf[4]&0xFC)==0xFC && (buf[5]&0x1F) && buf[8]==0x67){
        if (is_extra(buf, buf_size))
            return ff_h264_decode_extradata(h, buf, buf_size);
    }

    buf_index = decode_nal_units(h, buf, buf_size, 0);
    if (buf_index < 0)
        return AVERROR_INVALIDDATA;

    if (!h->cur_pic_ptr && h->nal_unit_type == NAL_END_SEQUENCE) {
        av_assert0(buf_index <= buf_size);
        goto out;
    }

    if (!(avctx->flags2 & CODEC_FLAG2_CHUNKS) && !h->cur_pic_ptr) {
        if (avctx->skip_frame >= AVDISCARD_NONREF ||
            buf_size >= 4 && !memcmp("Q264", buf, 4))
            return buf_size;
        av_log(avctx, AV_LOG_ERROR, "no frame!\n");
        return AVERROR_INVALIDDATA;
    }

    if (!(avctx->flags2 & CODEC_FLAG2_CHUNKS) ||
        (h->mb_y >= h->mb_height && h->mb_height)) {
        if (avctx->flags2 & CODEC_FLAG2_CHUNKS)
            decode_postinit(h, 1);

        ff_h264_field_end(h, 0);

        /* Wait for second field. */
        *got_frame = 0;
        if (h->next_output_pic && (
                                   h->next_output_pic->recovered)) {
            if (!h->next_output_pic->recovered)
                h->next_output_pic->f.flags |= AV_FRAME_FLAG_CORRUPT;

            if (!h->avctx->hwaccel &&
                 (h->next_output_pic->field_poc[0] == INT_MAX ||
                  h->next_output_pic->field_poc[1] == INT_MAX)
            ) {
                int p;
                AVFrame *f = &h->next_output_pic->f;
                int field = h->next_output_pic->field_poc[0] == INT_MAX;
                uint8_t *dst_data[4];
                int linesizes[4];
                const uint8_t *src_data[4];

                av_log(h->avctx, AV_LOG_DEBUG, "Duplicating field %d to fill missing\n", field);

                for (p = 0; p<4; p++) {
                    dst_data[p] = f->data[p] + (field^1)*f->linesize[p];
                    src_data[p] = f->data[p] +  field   *f->linesize[p];
                    linesizes[p] = 2*f->linesize[p];
                }

                av_image_copy(dst_data, linesizes, src_data, linesizes,
                              f->format, f->width, f->height>>1);
            }

            ret = output_frame(h, pict, h->next_output_pic);
            if (ret < 0)
                return ret;
            //get the qscale table, modified by zhanghui
            if(h->next_output_pic->qscale_table)
            {
                pict->qscale_table=h->next_output_pic->qscale_table;
                pict->qstride=h->mb_stride;
                pict->qscale_type=FF_QSCALE_TYPE_H264;
            }
            *got_frame = 1;
            if (CONFIG_MPEGVIDEO) {
                ff_print_debug_info2(h->avctx, pict, h->er.mbskip_table,
                                     h->next_output_pic->mb_type,
                                     h->next_output_pic->qscale_table,
                                     h->next_output_pic->motion_val,
                                     &h->low_delay,
                                     h->mb_width, h->mb_height, h->mb_stride, 1);
            }
        }
    }

    av_assert0(pict->buf[0] || !*got_frame);

    ff_h264_unref_picture(h, &h->last_pic_for_ec);

    return get_consumed_bytes(buf_index, buf_size);
}
```

Save the changes and run make again. You can also use diff and patch to turn these source changes into a patch file. Later, after downloading a newer ffmpeg source tree, you can apply the same changes to it directly with patch, which is very convenient.

One caveat: after this change, pict->qscale_table points into the decoder's own picture buffer rather than into memory owned by the AVFrame. It is safest to read or copy the table before the next call to the decoder.
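With the patched decoder filling in `pict->qscale_table` and `pict->qstride`, the table is read per macroblock as `qscale_table[mb_x + mb_y * qstride]`. The stride can be larger than the number of macroblocks per row, so it must not be replaced by the macroblock width. Here is a small self-contained sketch of a typical analysis step, averaging the QP over one frame. The function name is hypothetical, and the caller is assumed to pass the frame size in macroblocks (for example (width + 15) / 16 by (height + 15) / 16).

```c
#include <stdint.h>

/* Average QP of one decoded frame.
 * qscale_table: one int8_t QP per macroblock, row-major
 * stride:       entries per table row (qstride); may exceed mb_width
 * Returns -1.0 for invalid or empty input. */
static double average_qp(const int8_t *qscale_table, int stride,
                         int mb_width, int mb_height)
{
    long sum = 0;
    if (!qscale_table || mb_width <= 0 || mb_height <= 0 || stride < mb_width)
        return -1.0;
    for (int mb_y = 0; mb_y < mb_height; mb_y++)
        for (int mb_x = 0; mb_x < mb_width; mb_x++)
            sum += qscale_table[mb_x + mb_y * stride]; /* skip stride padding */
    return (double)sum / ((long)mb_width * mb_height);
}
```

Iterating only up to mb_width in each row keeps the padding entries at the end of every table row out of the statistics.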

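The example above is very simple, and other modifications can follow the same approach; consider this a starting point. Later articles will cover other applications of the ffmpeg source, such as extracting code from ffmpeg.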

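The example in this article also has a small open issue. AVFrame has gained a new field, AVBufferRef *qp_table_buf, which also appears to be meant for storing the QP table. However, I do not yet understand what the AVBufferRef struct is for or how to use it. In principle, storing the QP table there would be the better solution, but all my attempts failed. Advice from anyone with experience would be much appreciated. Thanks!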
