Why Is Redis So Fast?

- Redis works almost entirely in memory, so it is very fast

Memory:

1. Seek time: nanoseconds (ns)

2. Bandwidth: very high

Disk:

1. Seek time: milliseconds (ms)

2. Bandwidth: on the order of MB/s to GB/s
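Take 1 ms and 1 ns as representative values. Real figures vary with the hardware. On that assumption, the gap between the two seek times is about six orders of magnitude. That is consistent with the "more than 100,000 times" figure in the next paragraph:

$$
\frac{t_{\text{disk}}}{t_{\text{mem}}} \approx \frac{1\,\text{ms}}{1\,\text{ns}} = \frac{10^{-3}\,\text{s}}{10^{-9}\,\text{s}} = 10^{6}
$$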

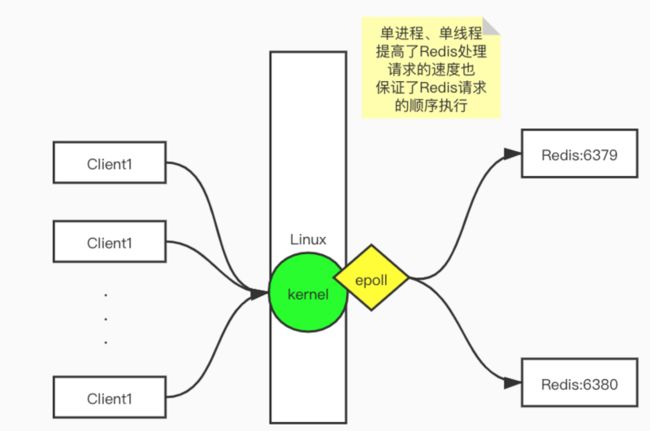

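Disk seeks are more than 100,000 times slower than memory access. This is a key reason a single Redis instance can serve more than 100,000 requests per second.

- Redis uses non-blocking I/O for network communication. Internally it combines epoll with a simple event framework of its own. Reads, writes, closes and new connections on epoll are all turned into events. epoll's multiplexing then lets Redis avoid wasting any time waiting on I/O.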

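- A standalone Redis uses a single process, a single thread and a single instance. This avoids unnecessary context switches and race conditions.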

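Many people assume that a fast system should be multithreaded. But Redis keeps its data in memory, where lookups and writes take only nanoseconds.

With multiple threads, shared data would need locks, and system resources would be spent on context switches between threads. Both would actually hurt performance.

A single process with a single thread naturally executes requests in order. It needs no locks and avoids unnecessary context switches, so it can get the most out of the hardware.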
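The single-threaded argument above can be illustrated with a Java analogy. This is a minimal sketch, not Redis's actual C implementation; the class `SingleThreadStore` and its methods are hypothetical. Several "client" threads submit `INCR`-style commands, but every command runs on one executor thread in submission order. The data can therefore live in a plain `HashMap` with no locks, and no increments are lost.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.Future;

// Hypothetical illustration of "one thread executes every command"; not Redis code.
class SingleThreadStore {
    // Plain HashMap with no synchronization: safe only because one thread ever touches it
    private final Map<String, Long> data = new HashMap<>();
    // Every command is queued here and runs on this single thread, in submission order
    private final ExecutorService loop = Executors.newSingleThreadExecutor();

    Future<Long> incr(String key) {
        return loop.submit(() -> data.merge(key, 1L, Long::sum));
    }

    Future<Long> get(String key) {
        return loop.submit(() -> data.getOrDefault(key, 0L));
    }

    void shutdown() {
        loop.shutdown();
    }

    /** Several client threads fire INCRs concurrently; returns the final counter value. */
    static long demo(int clients, int incrsPerClient) throws Exception {
        SingleThreadStore store = new SingleThreadStore();
        List<Thread> threads = new ArrayList<>();
        for (int c = 0; c < clients; c++) {
            Thread t = new Thread(() -> {
                for (int i = 0; i < incrsPerClient; i++) {
                    store.incr("counter"); // only enqueues; the store thread does the work
                }
            });
            threads.add(t);
            t.start();
        }
        for (Thread t : threads) {
            t.join();
        }
        long result = store.get("counter").get(); // queued after every INCR above
        store.shutdown();
        return result;
    }

    public static void main(String[] args) throws Exception {
        System.out.println(demo(4, 1000));
    }
}
```

Because `get` is queued after every `incr`, `demo(4, 1000)` returns 4000. Updating the same `HashMap` directly from the four client threads would be a data race. Avoiding that race without locks is exactly what the single-threaded design buys.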

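Summary: these three conditions are not independent of each other, the first one especially. If every request were time-consuming, a single thread's throughput and performance would obviously suffer. Rather, Redis chose the right technical approach for its particular scenario.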

How epoll's High Performance Powers Redis

I/O models: BIO, NIO, multiplexed I/O and AIO

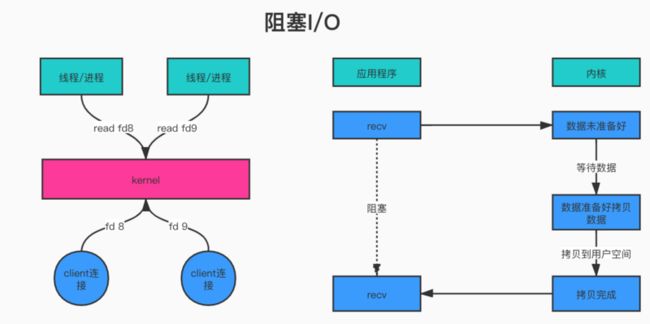

1. Blocking I/O (BIO)

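If an application process or thread issues 1K requests, it opens 1K socket file descriptors. Each socket blocks while waiting for the kernel to return data: if the data is not ready, the call simply keeps waiting. Results come back one socket at a time. Only after one socket's data has been returned does the process wait on the next.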

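BIO server code:

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.ServerSocket;
import java.net.Socket;

public class Server {
    public static void main(String[] args) throws IOException {
        ServerSocket ss = new ServerSocket();
        ss.bind(new InetSocketAddress("127.0.0.1", 8888));
        while (true) {
            Socket s = ss.accept(); // blocking call
            // Handle the client on a new thread so the main thread can accept the next client
            new Thread(() -> handle(s)).start();
        }
    }

    static void handle(Socket s) {
        try (Socket socket = s) { // closes the connection when done
            byte[] bytes = new byte[1024];
            int len = socket.getInputStream().read(bytes); // blocking call
            if (len == -1) {
                return; // the client closed the connection without sending anything
            }
            System.out.println(new String(bytes, 0, len));
            socket.getOutputStream().write(bytes, 0, len); // blocking call: echo back
            socket.getOutputStream().flush();
        } catch (IOException e) {
            e.printStackTrace();
        }
    }
}
```

BIO client code: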

public class Client {

public static void main(String[] args) throws IOException {

Socket s = new Socket("127.0.0.1", 8888);

s.getOutputStream().write("HelloServer".getBytes());

s.getOutputStream().flush();

//s.getOutputStream().close();

System.out.println("write over, waiting for msg back...");

byte[] bytes = new byte[1024];

int len = s.getInputStream().read(bytes);

System.out.println(new String(bytes, 0, len));

s.close();

}

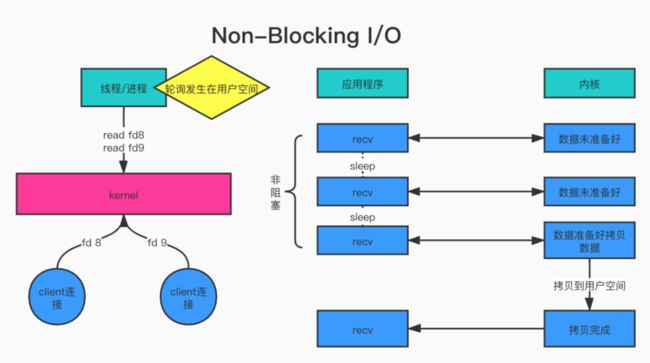

}2. 轮询非阻塞I/O(Non-Blocking I/O NIO)

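If the application process issues 1K requests, it keeps polling those 1K socket file descriptors in user space to see whether any result has come back. This approach does not block, but it is very inefficient, because most loop iterations find nothing and are wasted.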
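The polling approach described above can be sketched with a single non-blocking socket in Java. This is a minimal demo; the class `BusyPollDemo` and its parameters are hypothetical. The accepted channel is switched to non-blocking mode, so `read()` returns 0 instead of waiting. The loop simply asks again and again until the data arrives, counting every empty call. With 1K sockets, the same loop would sweep all of them on each pass.

```java
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.nio.charset.StandardCharsets;

// Hypothetical demo of user-space busy polling on one non-blocking socket.
class BusyPollDemo {
    /** Outcome of one run: what was received and how many read() calls came back empty. */
    static final class Result {
        final String message;
        final long emptyReads;

        Result(String message, long emptyReads) {
            this.message = message;
            this.emptyReads = emptyReads;
        }
    }

    /** A writer thread sends the payload after delayMillis; the caller busy-polls for it. */
    static Result run(String payload, long delayMillis) throws Exception {
        byte[] bytes = payload.getBytes(StandardCharsets.UTF_8);
        try (ServerSocketChannel server = ServerSocketChannel.open()) {
            server.bind(new InetSocketAddress("127.0.0.1", 0)); // port 0: let the OS choose
            try (SocketChannel client = SocketChannel.open(server.getLocalAddress());
                 SocketChannel conn = server.accept()) {
                conn.configureBlocking(false); // read() now returns 0 instead of blocking

                Thread writer = new Thread(() -> {
                    try {
                        Thread.sleep(delayMillis);
                        client.write(ByteBuffer.wrap(bytes));
                    } catch (Exception e) {
                        throw new RuntimeException(e);
                    }
                });
                writer.start();

                ByteBuffer buf = ByteBuffer.allocate(1024);
                long emptyReads = 0;
                // Busy-poll: keep asking the socket until every byte has arrived
                while (buf.position() < bytes.length) {
                    int n = conn.read(buf);
                    if (n == 0) emptyReads++; // nothing there yet: a wasted iteration
                    if (n == -1) break;       // peer closed early
                }
                writer.join();
                buf.flip();
                return new Result(StandardCharsets.UTF_8.decode(buf).toString(), emptyReads);
            }
        }
    }

    public static void main(String[] args) throws Exception {
        Result r = run("hello", 100);
        System.out.println(r.message + " after " + r.emptyReads + " empty reads");
    }
}
```

The thread never sleeps while it waits. It burns CPU on empty `read()` calls for the whole delay, which is the wasted looping described above.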

Single-threaded NIO server code:

One selector does everything, handling both accept and read.

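```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.util.Iterator;
import java.util.Set;

public class Server {
    public static void main(String[] args) throws IOException {
        ServerSocketChannel ssc = ServerSocketChannel.open();
        ssc.socket().bind(new InetSocketAddress("127.0.0.1", 8888));
        ssc.configureBlocking(false);
        System.out.println("server started, listening on :" + ssc.getLocalAddress());
        Selector selector = Selector.open();
        ssc.register(selector, SelectionKey.OP_ACCEPT);
        while (true) {
            selector.select(); // blocking call: waits until some channel is ready
            Set<SelectionKey> keys = selector.selectedKeys();
            Iterator<SelectionKey> it = keys.iterator();
            while (it.hasNext()) {
                SelectionKey key = it.next();
                it.remove(); // remove the key so it is not handled twice
                handle(key);
            }
        }
    }

    private static void handle(SelectionKey key) {
        if (key.isAcceptable()) { // a connection request
            try {
                ServerSocketChannel ssc = (ServerSocketChannel) key.channel();
                SocketChannel sc = ssc.accept();
                sc.configureBlocking(false);
                // Watch the new client on the same selector for read events
                sc.register(key.selector(), SelectionKey.OP_READ);
            } catch (IOException e) {
                e.printStackTrace();
            }
        } else if (key.isReadable()) { // data has arrived from a client
            // The original listing is truncated here; this read branch is an assumed
            // minimal reconstruction: read once, echo the bytes back, then close.
            SocketChannel sc = (SocketChannel) key.channel();
            try {
                ByteBuffer buffer = ByteBuffer.allocate(512);
                int len = sc.read(buffer);
                if (len > 0) {
                    System.out.println(new String(buffer.array(), 0, len));
                    buffer.flip();
                    sc.write(buffer);
                }
            } catch (IOException e) {
                e.printStackTrace();
            } finally {
                try {
                    sc.close();
                } catch (IOException e) {
                    e.printStackTrace();
                }
            }
        }
    }
}
```

NIO reactor server code: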

One selector (the Boss) accepts connections and detects which channels are readable. Each client's reads and writes are handled by a thread pool (the Workers).

```java
import java.io.ByteArrayOutputStream;
import java.net.InetSocketAddress;
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.channels.SelectionKey;
import java.nio.channels.Selector;
import java.nio.channels.ServerSocketChannel;
import java.nio.channels.SocketChannel;
import java.util.Iterator;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;

public class PoolServer {
    // Worker pool that does the actual reads and writes for every client
    ExecutorService pool = Executors.newFixedThreadPool(50);
    // The single selector that dispatches events
    private Selector selector;

    public static void main(String[] args) throws IOException {
        PoolServer server = new PoolServer();
        server.initServer(8888); // same port as the BIO client above
        server.listen();
    }

    /** Opens a non-blocking server channel on the given port and registers it for OP_ACCEPT. */
    public void initServer(int port) throws IOException {
        ServerSocketChannel serverChannel = ServerSocketChannel.open();
        serverChannel.configureBlocking(false);
        serverChannel.socket().bind(new InetSocketAddress(port));
        this.selector = Selector.open();
        serverChannel.register(selector, SelectionKey.OP_ACCEPT);
        System.out.println("Server started successfully!");
    }

    /** Event loop: accepts connections itself and hands readable channels to the worker pool. */
    public void listen() throws IOException {
        while (true) {
            selector.select(); // blocks until at least one channel is ready
            Iterator<SelectionKey> ite = this.selector.selectedKeys().iterator();
            while (ite.hasNext()) {
                SelectionKey key = ite.next();
                ite.remove(); // remove the key so it is not handled twice
                if (key.isAcceptable()) {
                    ServerSocketChannel server = (ServerSocketChannel) key.channel();
                    SocketChannel channel = server.accept();
                    channel.configureBlocking(false);
                    channel.register(this.selector, SelectionKey.OP_READ);
                } else if (key.isReadable()) {
                    // Stop watching OP_READ while a worker owns this channel,
                    // otherwise select() would keep reporting it as readable
                    key.interestOps(key.interestOps() & (~SelectionKey.OP_READ));
                    pool.execute(new ThreadHandlerChannel(key));
                }
            }
        }
    }
}

/** Worker task: drains the channel, echoes the data back, then re-enables OP_READ. */
class ThreadHandlerChannel implements Runnable {
    private final SelectionKey key;

    ThreadHandlerChannel(SelectionKey key) {
        this.key = key;
    }

    @Override
    public void run() {
        SocketChannel channel = (SocketChannel) key.channel();
        ByteBuffer buffer = ByteBuffer.allocate(1024);
        ByteArrayOutputStream baos = new ByteArrayOutputStream();
        try {
            int size = 0;
            // Read everything currently available into baos
            while ((size = channel.read(buffer)) > 0) {
                buffer.flip();
                baos.write(buffer.array(), 0, size);
                buffer.clear();
            }
            // Echo the received bytes back to the client
            channel.write(ByteBuffer.wrap(baos.toByteArray()));
            if (size == -1) {
                channel.close(); // the client closed the connection
            } else {
                // Hand the channel back to the selector and wake it so the change takes effect
                key.interestOps(key.interestOps() | SelectionKey.OP_READ);
                key.selector().wakeup();
            }
        } catch (Exception e) {
            System.out.println(e.getMessage());
            try {
                channel.close();
            } catch (IOException ignored) {
            }
        }
    }
}
```

Side note: why is there NIO server code but no NIO client code? Because the BIO client above can be used instead.

3. Multiplexed I/O (I/O Multiplexing)

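select: the number of file descriptors it can watch is limited to 1024 by default (FD_SETSIZE).

With 1K requests, the user process must pass all 1K descriptors to the kernel on every call. The kernel polls them internally and returns the readable ones, and the user process then reads them one by one.

Because the file descriptor (fd) data has to be copied back and forth between user space and kernel space, performance is still fairly low.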

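poll: raises the limit on the number of file descriptors, because the kernel stores them in a linked list. Its performance is still not good enough: as with select, the data has to be copied back and forth between user space and kernel space.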

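epoll (the usual choice on Linux): the descriptor set does not have to be copied between user space and kernel space on every call.

Each fd is registered once with epoll_ctl and kept by the kernel in a red-black tree. Fds that become ready are put on a ready list, and epoll_wait copies only those ready events back to the user process.

It is still synchronous I/O (sometimes described as pseudo-asynchronous), but its performance is high.

epoll has two trigger modes:
- ET (edge-triggered) notifies only when the fd's state changes.
- LT (level-triggered) keeps notifying for as long as the fd stays ready.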
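The difference between the two trigger modes can be shown with a small simulation rather than real epoll calls. Java has no direct epoll API, and the class `TriggerModeDemo` and its parameters are hypothetical. In the model, 3000 bytes arrive once and the application reads at most 1024 bytes per wakeup.

```java
// Hypothetical simulation of epoll LT vs ET notification semantics (not real syscalls).
class TriggerModeDemo {
    /** How a run ended: number of wakeups and bytes still stuck in the receive buffer. */
    static final class Outcome {
        final int wakeups;
        final int leftover;

        Outcome(int wakeups, int leftover) {
            this.wakeups = wakeups;
            this.leftover = leftover;
        }
    }

    /**
     * Simplified model of one socket: `arriving` bytes land in the kernel buffer once,
     * and the application reads at most `perRead` bytes each time it is woken up.
     */
    static Outcome simulate(boolean edgeTriggered, int arriving, int perRead) {
        int buffered = arriving; // unread bytes in the kernel receive buffer
        boolean edge = true;     // a "not readable -> readable" transition just happened
        int wakeups = 0;
        while (true) {
            // LT: notify while data remains; ET: notify only on a new transition
            boolean notify = edgeTriggered ? edge : buffered > 0;
            if (!notify) break;
            wakeups++;
            edge = false;        // no new data arrives in this model
            buffered -= Math.min(perRead, buffered);
        }
        return new Outcome(wakeups, buffered);
    }

    public static void main(String[] args) {
        Outcome lt = simulate(false, 3000, 1024);
        Outcome et = simulate(true, 3000, 1024);
        System.out.println("LT: " + lt.wakeups + " wakeups, " + lt.leftover + " bytes left");
        System.out.println("ET: " + et.wakeups + " wakeups, " + et.leftover + " bytes left");
    }
}
```

With LT the application is woken three times and drains the buffer. With ET it is woken once, and 1976 bytes stay unread until more data arrives. This is why ET code normally uses non-blocking fds and keeps reading until the call reports no more data (`EAGAIN`).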

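The following Netty example shows how epoll is used at the application level. NioEventLoopGroup uses the JDK Selector, which is backed by epoll on Linux.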

Server code:

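```java
import io.netty.bootstrap.ServerBootstrap;
import io.netty.buffer.ByteBuf;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelFutureListener;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioServerSocketChannel;
import io.netty.util.CharsetUtil;

public class HelloNetty {
    public static void main(String[] args) {
        new NettyServer(8888).serverStart();
    }
}

class NettyServer {
    int port = 8888;

    public NettyServer(int port) {
        this.port = port;
    }

    public void serverStart() {
        // bossGroup accepts connections; workerGroup handles I/O on the accepted channels
        EventLoopGroup bossGroup = new NioEventLoopGroup();
        EventLoopGroup workerGroup = new NioEventLoopGroup();
        ServerBootstrap b = new ServerBootstrap();
        b.group(bossGroup, workerGroup)
            .channel(NioServerSocketChannel.class)
            .childHandler(new ChannelInitializer<SocketChannel>() {
                @Override
                protected void initChannel(SocketChannel ch) throws Exception {
                    ch.pipeline().addLast(new Handler());
                }
            });
        try {
            ChannelFuture f = b.bind(port).sync();
            f.channel().closeFuture().sync(); // block until the server channel is closed
        } catch (InterruptedException e) {
            e.printStackTrace();
        } finally {
            workerGroup.shutdownGracefully();
            bossGroup.shutdownGracefully();
        }
    }
}

class Handler extends ChannelInboundHandlerAdapter {
    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
        System.out.println("server: channel read");
        ByteBuf buf = (ByteBuf) msg;
        System.out.println(buf.toString(CharsetUtil.UTF_8));
        // Echo the message back and close once the write completes.
        // No buf.release() here: writeAndFlush takes ownership of msg and releases it.
        ctx.writeAndFlush(msg).addListener(ChannelFutureListener.CLOSE);
    }

    @Override
    public void exceptionCaught(ChannelHandlerContext ctx, Throwable cause) throws Exception {
        cause.printStackTrace();
        ctx.close();
    }
}
```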

Client code:

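```java
import io.netty.bootstrap.Bootstrap;
import io.netty.buffer.ByteBuf;
import io.netty.buffer.Unpooled;
import io.netty.channel.ChannelFuture;
import io.netty.channel.ChannelFutureListener;
import io.netty.channel.ChannelHandlerContext;
import io.netty.channel.ChannelInboundHandlerAdapter;
import io.netty.channel.ChannelInitializer;
import io.netty.channel.EventLoopGroup;
import io.netty.channel.nio.NioEventLoopGroup;
import io.netty.channel.socket.SocketChannel;
import io.netty.channel.socket.nio.NioSocketChannel;
import io.netty.util.CharsetUtil;
import io.netty.util.ReferenceCountUtil;

public class Client {
    public static void main(String[] args) {
        new Client().clientStart();
    }

    private void clientStart() {
        EventLoopGroup workers = new NioEventLoopGroup();
        Bootstrap b = new Bootstrap();
        b.group(workers)
            .channel(NioSocketChannel.class)
            .handler(new ChannelInitializer<SocketChannel>() {
                @Override
                protected void initChannel(SocketChannel ch) throws Exception {
                    System.out.println("channel initialized!");
                    ch.pipeline().addLast(new ClientHandler());
                }
            });
        try {
            System.out.println("start to connect...");
            ChannelFuture f = b.connect("127.0.0.1", 8888).sync();
            f.channel().closeFuture().sync(); // returns once the server closes the connection
        } catch (InterruptedException e) {
            e.printStackTrace();
        } finally {
            workers.shutdownGracefully();
        }
    }
}

class ClientHandler extends ChannelInboundHandlerAdapter {
    @Override
    public void channelActive(ChannelHandlerContext ctx) throws Exception {
        System.out.println("channel is activated.");
        final ChannelFuture f = ctx.writeAndFlush(Unpooled.copiedBuffer("HelloNetty".getBytes()));
        f.addListener(new ChannelFutureListener() {
            @Override
            public void operationComplete(ChannelFuture future) throws Exception {
                System.out.println("msg sent!");
            }
        });
    }

    @Override
    public void channelRead(ChannelHandlerContext ctx, Object msg) throws Exception {
        try {
            ByteBuf buf = (ByteBuf) msg;
            // Decode the payload; buf.toString() alone would print the buffer's debug description
            System.out.println(buf.toString(CharsetUtil.UTF_8));
        } finally {
            ReferenceCountUtil.release(msg); // this handler consumes msg, so release it
        }
    }
}
```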

4. Asynchronous I/O (AIO)

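AIO: asynchronous I/O. It offers the highest performance but is very complex to use and not widely adopted. It is more common on Windows; Java does have an AIO API, but on Linux it is still implemented with epoll.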
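Java's AIO API lives in `java.nio.channels` (`AsynchronousServerSocketChannel`, `AsynchronousSocketChannel`, `CompletionHandler`). The sketch below is a minimal echo server built on it; the class `AioEchoDemo` and its methods are hypothetical names. The server does not wait for a read. Instead it registers a callback that the JDK invokes once the operation has completed. The client side uses the `Future`-style variant of the same API.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.nio.ByteBuffer;
import java.nio.channels.AsynchronousServerSocketChannel;
import java.nio.channels.AsynchronousSocketChannel;
import java.nio.channels.CompletionHandler;
import java.nio.charset.StandardCharsets;

// Hypothetical minimal AIO echo server/client built on java.nio.channels.
class AioEchoDemo {
    /** Starts an asynchronous echo server on a loopback port chosen by the OS. */
    static AsynchronousServerSocketChannel startServer() throws IOException {
        AsynchronousServerSocketChannel server =
                AsynchronousServerSocketChannel.open().bind(new InetSocketAddress("127.0.0.1", 0));
        // accept() returns immediately; the handler runs later when a client connects
        server.accept(null, new CompletionHandler<AsynchronousSocketChannel, Void>() {
            @Override
            public void completed(AsynchronousSocketChannel ch, Void att) {
                server.accept(null, this); // re-arm so further clients are accepted too
                ByteBuffer buf = ByteBuffer.allocate(1024);
                // read() also returns immediately; no thread sits blocked waiting for data
                ch.read(buf, buf, new CompletionHandler<Integer, ByteBuffer>() {
                    @Override
                    public void completed(Integer n, ByteBuffer b) {
                        b.flip();
                        ch.write(b); // echo back (result not awaited, for brevity)
                    }

                    @Override
                    public void failed(Throwable exc, ByteBuffer b) {
                        closeQuietly(ch);
                    }
                });
            }

            @Override
            public void failed(Throwable exc, Void att) {
                // Reached e.g. when the server channel is closed; ignored in this sketch
            }
        });
        return server;
    }

    static void closeQuietly(AsynchronousSocketChannel ch) {
        try {
            ch.close();
        } catch (IOException ignored) {
        }
    }

    /** Client side using the Future-style API: connect, send, and wait for the echo. */
    static String roundTrip(InetSocketAddress addr, String msg) throws Exception {
        try (AsynchronousSocketChannel client = AsynchronousSocketChannel.open()) {
            client.connect(addr).get();
            client.write(ByteBuffer.wrap(msg.getBytes(StandardCharsets.UTF_8))).get();
            ByteBuffer buf = ByteBuffer.allocate(1024);
            client.read(buf).get();
            buf.flip();
            return StandardCharsets.UTF_8.decode(buf).toString();
        }
    }

    public static void main(String[] args) throws Exception {
        try (AsynchronousServerSocketChannel server = startServer()) {
            InetSocketAddress addr = (InetSocketAddress) server.getLocalAddress();
            System.out.println(roundTrip(addr, "ping"));
        }
    }
}
```

Even in this small form, the server logic is split across nested callbacks. That is the "complex to use" cost the text mentions, compared with the single `select()` loop of the NIO examples.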

Summary of Redis I/O

The evolution of multiplexing represents the current direction of I/O development.

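select: supports only 1024 handles, and polling all of them degrades performance

poll: supports more handles, but performance is still not high

epoll: supports more handles, is event-driven, and performs well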
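The select/poll versus epoll comparison above can be put into rough numbers with a simplified cost model. It is a sketch built on stated assumptions, not a kernel measurement, and `CopyCostModel` is a hypothetical name. The model counts descriptor entries crossing the user/kernel boundary:
- select and poll pass the full set in and back out on every call;
- epoll registers each fd once and then returns only the ready ones.

```java
// Hypothetical, simplified model of user/kernel descriptor traffic (not a benchmark).
class CopyCostModel {
    /**
     * select/poll: on every call the whole set of n descriptors is copied into the
     * kernel and the (modified) set is copied back out, regardless of how many are ready.
     */
    static long selectOrPollCopies(int n, int calls) {
        return 2L * n * calls;
    }

    /**
     * epoll: each descriptor is registered once (epoll_ctl), and every epoll_wait
     * call copies back only the descriptors that are actually ready.
     */
    static long epollCopies(int n, int calls, int readyPerCall) {
        return n + (long) calls * readyPerCall;
    }

    public static void main(String[] args) {
        int n = 1000, calls = 10, ready = 5;
        System.out.println("select/poll: " + selectOrPollCopies(n, calls));
        System.out.println("epoll:       " + epollCopies(n, calls, ready));
    }
}
```

Take 1000 connections, 10 wait calls and 5 ready fds per call. The model gives 20000 entries for select/poll versus 1050 for epoll, and the gap widens as the share of idle connections grows.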

On Linux, Redis's I/O is built on epoll, the most advanced mechanism available there. Netty uses epoll as well (a later article will study Netty in detail).

AIO is more advanced still, but it is harder to write. The Linux kernel does not yet truly support AIO, whereas Windows does.

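It is precisely the Redis author's relentless pursuit of performance that has earned Redis its dominant position among caches today. Choosing Redis means choosing high performance!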