Getting Started with Kafka (VMware + kafka_2.11-1.0.1.tgz + Spring Boot)

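1. Introductory tutorials

While learning Kafka I worked through the introductory tutorials below. They explain Kafka's design principles, use cases, installation, configuration, and both single-host and multi-host cluster setup in considerable detail.

A Classic Introductory Kafka Tutorial (Kafka入门经典教程)

Getting Started with Kafka: Introduction, Use Cases, Design Principles, Main Configuration, and Cluster Setup (repost) (kafka入门:简介、使用场景、设计原理、主要配置及集群搭建)

2. Problems encountered while learning, and how I solved them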

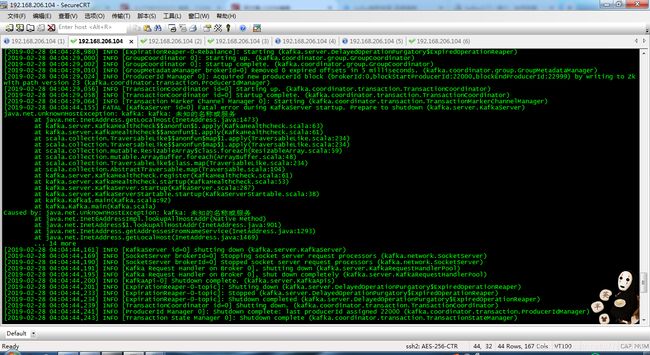

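- Problem 1: When starting the Kafka service as described in the tutorials above, startup failed with: Caused by: java.net.UnknownHostException: kafka: 未知的名称或服务 (Name or service not known). Here kafka is the host name of my Linux machine.

Solution: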

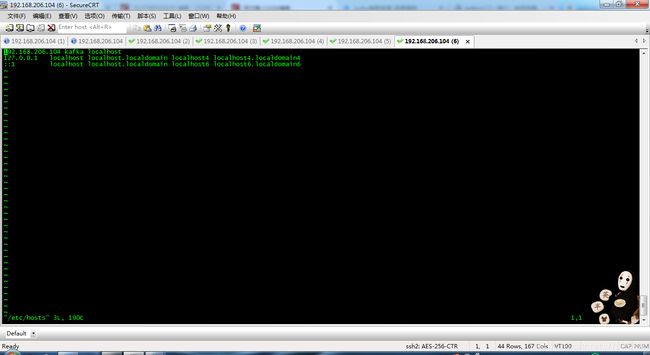

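Edit the hosts file on the machine so that the host name maps to an IP address. This works much like DNS, where a domain name has to be mapped to an IP address.

For example: 192.168.206.104 kafka localhost

127.0.0.1 kafka localhost

The Linux commands are:

vim /etc/hosts

i (enter insert mode)

add the mapping entries

esc (leave insert mode)

: (enter command-line mode)

wq + enter (save and quit) / q! (quit without saving)

The cause is described in the config/server.properties file: if listeners is not configured, the broker calls java.net.InetAddress.getCanonicalHostName(), which returns exactly this host name.

Once the hosts file has been updated, that lookup can resolve the host name kafka to the IP 192.168.206.104.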
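
To confirm that the hosts entry took effect before restarting the broker, the same kind of lookup can be tried from a small Java program. This is only a minimal sketch: the class name HostnameCheck and the helper resolve are made up for illustration, and "kafka" is the host name used in this post, so substitute your own.

```java
import java.net.InetAddress;
import java.net.UnknownHostException;

public class HostnameCheck {

    /** Returns the IP address the given host name resolves to, or null if it cannot be resolved. */
    static String resolve(String host) {
        try {
            return InetAddress.getByName(host).getHostAddress();
        } catch (UnknownHostException e) {
            return null;
        }
    }

    public static void main(String[] args) {
        try {
            // Look up the local machine's own host name and address.
            InetAddress local = InetAddress.getLocalHost();
            System.out.println("host name:      " + local.getHostName());
            System.out.println("canonical name: " + local.getCanonicalHostName());
            System.out.println("address:        " + local.getHostAddress());
        } catch (UnknownHostException e) {
            // The failure from problem 1: the host name has no entry in /etc/hosts or DNS.
            System.out.println("local host name cannot be resolved: " + e.getMessage());
        }
        // "kafka" is the host name from this post (an assumption for any other machine).
        System.out.println("kafka -> " + resolve("kafka"));
    }
}
```

If the last line prints null, the mapping is still missing and the broker would most likely fail with the same UnknownHostException.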

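- Problem 2: I am using Kafka in a Spring Boot 2 project. Which dependency should I add?

After reading blog posts and the official Kafka documentation, I settled on the following.

I use the Spring integration for Kafka (spring-kafka); this single dependency is enough.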

<dependency>

<groupId>org.springframework.kafka</groupId>

<artifactId>spring-kafka</artifactId>

<version>2.2.0.RELEASE</version>

</dependency>

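- Problem 3: If the broker address (bootstrap servers) is misconfigured or the Kafka server is down when the Java project starts, the consumer still fails to connect after 3 attempts and the application shuts itself down. How can this be avoided?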

2019-03-03 08:36:12.692 INFO 6804 --- [ restartedMain] o.a.kafka.common.utils.AppInfoParser : Kafka version : 2.0.1

2019-03-03 08:36:12.692 INFO 6804 --- [ restartedMain] o.a.kafka.common.utils.AppInfoParser : Kafka commitId : fa14705e51bd2ce5

2019-03-03 08:36:33.885 WARN 6804 --- [ restartedMain] org.apache.kafka.clients.NetworkClient : [Consumer clientId=consumer-1, groupId=test] Connection to node -2 could not be established. Broker may not be available.

2019-03-03 08:36:54.997 WARN 6804 --- [ restartedMain] org.apache.kafka.clients.NetworkClient : [Consumer clientId=consumer-1, groupId=test] Connection to node -1 could not be established. Broker may not be available.

2019-03-03 08:37:12.881 WARN 6804 --- [ restartedMain] ConfigServletWebServerApplicationContext : Exception encountered during context initialization - cancelling refresh attempt: org.springframework.context.ApplicationContextException: Failed to start bean 'org.springframework.kafka.config.internalKafkaListenerEndpointRegistry'; nested exception is org.apache.kafka.common.errors.TimeoutException: Timeout expired while fetching topic metadata

2019-03-03 08:37:12.885 INFO 6804 --- [ restartedMain] o.s.s.concurrent.ThreadPoolTaskExecutor : Shutting down ExecutorService 'applicationTaskExecutor'

2019-03-03 08:37:12.885 INFO 6804 --- [ restartedMain] o.apache.catalina.core.StandardService : Stopping service [Tomcat]

2019-03-03 08:37:12.924 INFO 6804 --- [ restartedMain] ConditionEvaluationReportLoggingListener :

Error starting ApplicationContext. To display the conditions report re-run your application with 'debug' enabled.

2019-03-03 08:37:12.931 ERROR 6804 --- [ restartedMain] o.s.boot.SpringApplication : Application run failed

org.springframework.context.ApplicationContextException: Failed to start bean 'org.springframework.kafka.config.internalKafkaListenerEndpointRegistry'; nested exception is org.apache.kafka.common.errors.TimeoutException: Timeout expired while fetching topic metadata

at org.springframework.context.support.DefaultLifecycleProcessor.doStart(DefaultLifecycleProcessor.java:185) ~[spring-context-5.1.5.RELEASE.jar:5.1.5.RELEASE]

at org.springframework.context.support.DefaultLifecycleProcessor.access$200(DefaultLifecycleProcessor.java:53) ~[spring-context-5.1.5.RELEASE.jar:5.1.5.RELEASE]

at org.springframework.context.support.DefaultLifecycleProcessor$LifecycleGroup.start(DefaultLifecycleProcessor.java:360) ~[spring-context-5.1.5.RELEASE.jar:5.1.5.RELEASE]

at org.springframework.context.support.DefaultLifecycleProcessor.startBeans(DefaultLifecycleProcessor.java:158) ~[spring-context-5.1.5.RELEASE.jar:5.1.5.RELEASE]

at org.springframework.context.support.DefaultLifecycleProcessor.onRefresh(DefaultLifecycleProcessor.java:122) ~[spring-context-5.1.5.RELEASE.jar:5.1.5.RELEASE]

at org.springframework.context.support.AbstractApplicationContext.finishRefresh(AbstractApplicationContext.java:893) ~[spring-context-5.1.5.RELEASE.jar:5.1.5.RELEASE]

at org.springframework.boot.web.servlet.context.ServletWebServerApplicationContext.finishRefresh(ServletWebServerApplicationContext.java:163) ~[spring-boot-2.1.3.RELEASE.jar:2.1.3.RELEASE]

at org.springframework.context.support.AbstractApplicationContext.refresh(AbstractApplicationContext.java:552) ~[spring-context-5.1.5.RELEASE.jar:5.1.5.RELEASE]

at org.springframework.boot.web.servlet.context.ServletWebServerApplicationContext.refresh(ServletWebServerApplicationContext.java:142) ~[spring-boot-2.1.3.RELEASE.jar:2.1.3.RELEASE]

at org.springframework.boot.SpringApplication.refresh(SpringApplication.java:775) [spring-boot-2.1.3.RELEASE.jar:2.1.3.RELEASE]

at org.springframework.boot.SpringApplication.refreshContext(SpringApplication.java:397) [spring-boot-2.1.3.RELEASE.jar:2.1.3.RELEASE]

at org.springframework.boot.SpringApplication.run(SpringApplication.java:316) [spring-boot-2.1.3.RELEASE.jar:2.1.3.RELEASE]

at org.springframework.boot.SpringApplication.run(SpringApplication.java:1260) [spring-boot-2.1.3.RELEASE.jar:2.1.3.RELEASE]

at org.springframework.boot.SpringApplication.run(SpringApplication.java:1248) [spring-boot-2.1.3.RELEASE.jar:2.1.3.RELEASE]

at com.demo.kafaka_demo.KafakaDemoApplication.main(KafakaDemoApplication.java:10) [classes/:na]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[na:1.8.0_162]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[na:1.8.0_162]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[na:1.8.0_162]

at java.lang.reflect.Method.invoke(Method.java:498) ~[na:1.8.0_162]

at org.springframework.boot.devtools.restart.RestartLauncher.run(RestartLauncher.java:49) [spring-boot-devtools-2.1.3.RELEASE.jar:2.1.3.RELEASE]

Caused by: org.apache.kafka.common.errors.TimeoutException: Timeout expired while fetching topic metadata

Process finished with exit code 0

Set the log level to debug in application.properties or the .yml file:

logging.level.root=debug

Then follow the call chain of internalKafkaListenerEndpointRegistry.start, which Spring invokes while starting the bean, to find the root cause:

java.net.ConnectException: Connection timed out: no further information

at sun.nio.ch.SocketChannelImpl.checkConnect(Native Method) ~[na:1.8.0_162]

at sun.nio.ch.SocketChannelImpl.finishConnect(SocketChannelImpl.java:717) ~[na:1.8.0_162]

at org.apache.kafka.common.network.PlaintextTransportLayer.finishConnect(PlaintextTransportLayer.java:50) ~[kafka-clients-2.0.1.jar:na]

at org.apache.kafka.common.network.KafkaChannel.finishConnect(KafkaChannel.java:152) ~[kafka-clients-2.0.1.jar:na]

at org.apache.kafka.common.network.Selector.pollSelectionKeys(Selector.java:473) [kafka-clients-2.0.1.jar:na]

at org.apache.kafka.common.network.Selector.poll(Selector.java:427) [kafka-clients-2.0.1.jar:na]

at org.apache.kafka.clients.NetworkClient.poll(NetworkClient.java:510) [kafka-clients-2.0.1.jar:na]

at org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient.poll(ConsumerNetworkClient.java:271) [kafka-clients-2.0.1.jar:na]

at org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient.poll(ConsumerNetworkClient.java:242) [kafka-clients-2.0.1.jar:na]

at org.apache.kafka.clients.consumer.internals.ConsumerNetworkClient.poll(ConsumerNetworkClient.java:218) [kafka-clients-2.0.1.jar:na]

at org.apache.kafka.clients.consumer.internals.Fetcher.getTopicMetadata(Fetcher.java:292) [kafka-clients-2.0.1.jar:na]

at org.apache.kafka.clients.consumer.KafkaConsumer.partitionsFor(KafkaConsumer.java:1774) [kafka-clients-2.0.1.jar:na]

at org.apache.kafka.clients.consumer.KafkaConsumer.partitionsFor(KafkaConsumer.java:1742) [kafka-clients-2.0.1.jar:na]

at org.springframework.kafka.listener.AbstractMessageListenerContainer.checkTopics(AbstractMessageListenerContainer.java:275) [spring-kafka-2.2.0.RELEASE.jar:2.2.0.RELEASE]

at org.springframework.kafka.listener.ConcurrentMessageListenerContainer.doStart(ConcurrentMessageListenerContainer.java:135) [spring-kafka-2.2.0.RELEASE.jar:2.2.0.RELEASE]

at org.springframework.kafka.listener.AbstractMessageListenerContainer.start(AbstractMessageListenerContainer.java:257) [spring-kafka-2.2.0.RELEASE.jar:2.2.0.RELEASE]

at org.springframework.kafka.config.KafkaListenerEndpointRegistry.startIfNecessary(KafkaListenerEndpointRegistry.java:289) [spring-kafka-2.2.0.RELEASE.jar:2.2.0.RELEASE]

at org.springframework.kafka.config.KafkaListenerEndpointRegistry.start(KafkaListenerEndpointRegistry.java:238) [spring-kafka-2.2.0.RELEASE.jar:2.2.0.RELEASE]

at org.springframework.context.support.DefaultLifecycleProcessor.doStart(DefaultLifecycleProcessor.java:182) [spring-context-5.1.5.RELEASE.jar:5.1.5.RELEASE]

at org.springframework.context.support.DefaultLifecycleProcessor.access$200(DefaultLifecycleProcessor.java:53) [spring-context-5.1.5.RELEASE.jar:5.1.5.RELEASE]

at org.springframework.context.support.DefaultLifecycleProcessor$LifecycleGroup.start(DefaultLifecycleProcessor.java:360) [spring-context-5.1.5.RELEASE.jar:5.1.5.RELEASE]

at org.springframework.context.support.DefaultLifecycleProcessor.startBeans(DefaultLifecycleProcessor.java:158) [spring-context-5.1.5.RELEASE.jar:5.1.5.RELEASE]

at org.springframework.context.support.DefaultLifecycleProcessor.onRefresh(DefaultLifecycleProcessor.java:122) [spring-context-5.1.5.RELEASE.jar:5.1.5.RELEASE]

at org.springframework.context.support.AbstractApplicationContext.finishRefresh(AbstractApplicationContext.java:893) [spring-context-5.1.5.RELEASE.jar:5.1.5.RELEASE]

at org.springframework.boot.web.servlet.context.ServletWebServerApplicationContext.finishRefresh(ServletWebServerApplicationContext.java:163) [spring-boot-2.1.3.RELEASE.jar:2.1.3.RELEASE]

at org.springframework.context.support.AbstractApplicationContext.refresh(AbstractApplicationContext.java:552) [spring-context-5.1.5.RELEASE.jar:5.1.5.RELEASE]

at org.springframework.boot.web.servlet.context.ServletWebServerApplicationContext.refresh(ServletWebServerApplicationContext.java:142) [spring-boot-2.1.3.RELEASE.jar:2.1.3.RELEASE]

at org.springframework.boot.SpringApplication.refresh(SpringApplication.java:775) [spring-boot-2.1.3.RELEASE.jar:2.1.3.RELEASE]

at org.springframework.boot.SpringApplication.refreshContext(SpringApplication.java:397) [spring-boot-2.1.3.RELEASE.jar:2.1.3.RELEASE]

at org.springframework.boot.SpringApplication.run(SpringApplication.java:316) [spring-boot-2.1.3.RELEASE.jar:2.1.3.RELEASE]

at org.springframework.boot.SpringApplication.run(SpringApplication.java:1260) [spring-boot-2.1.3.RELEASE.jar:2.1.3.RELEASE]

at org.springframework.boot.SpringApplication.run(SpringApplication.java:1248) [spring-boot-2.1.3.RELEASE.jar:2.1.3.RELEASE]

at com.demo.kafaka_demo.KafakaDemoApplication.main(KafakaDemoApplication.java:10) [classes/:na]

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) ~[na:1.8.0_162]

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62) ~[na:1.8.0_162]

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) ~[na:1.8.0_162]

at java.lang.reflect.Method.invoke(Method.java:498) ~[na:1.8.0_162]

at org.springframework.boot.devtools.restart.RestartLauncher.run(RestartLauncher.java:49) [spring-boot-devtools-2.1.3.RELEASE.jar:2.1.3.RELEASE]

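In this project I went with the admittedly imperfect approach below. I will keep updating this post as I find better ones.

In customerConfig, the listener container factory sets the auto-startup property to control whether listeners start automatically. This keeps the application from failing to start when every Kafka broker is down.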

public KafkaListenerContainerFactory<ConcurrentMessageListenerContainer<String, String>> kafkaListenerContainerFactory() {

ConcurrentKafkaListenerContainerFactory<String, String> factory = new ConcurrentKafkaListenerContainerFactory<>();

factory.setConsumerFactory(consumerFactory());

factory.setConcurrency(concurrency);

factory.getContainerProperties().setPollTimeout(1500);

// Disable listener auto-startup

factory.setAutoStartup(false);

return factory;

}

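In the actual project, the flag comes from a parameter stored in the database's system-parameter table, and its value decides whether the listeners auto-start. This is especially useful when integrating with a third-party platform's Kafka that cannot be reached from the development environment: without a reachable broker the project would otherwise not start at all. It also makes it possible to switch the listeners on and off manually from a script.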
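
The database parameter is the approach used in this project. As a different, purely illustrative way to derive the same flag, one could probe the broker's port at startup and pass the result to factory.setAutoStartup(...). The sketch below makes several assumptions: the class BrokerProbe and the method isReachable are hypothetical names, the address is the one from the hosts example earlier, and 9092 is Kafka's default port. A successful TCP connection only shows that something is listening, not that the broker is healthy.

```java
import java.io.IOException;
import java.net.InetSocketAddress;
import java.net.Socket;

public class BrokerProbe {

    /**
     * Returns true if a TCP connection to host:port can be opened within timeoutMs.
     * Any I/O failure (refused, timed out, unknown host) is reported as false.
     */
    static boolean isReachable(String host, int port, int timeoutMs) {
        try (Socket socket = new Socket()) {
            socket.connect(new InetSocketAddress(host, port), timeoutMs);
            return true;
        } catch (IOException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        // Assumed address and port; replace with your own broker.
        boolean autoStartup = isReachable("192.168.206.104", 9092, 2000);
        System.out.println("autoStartup = " + autoStartup);
        // In the factory method above, this value would replace the hard-coded false.
    }
}
```

A short timeout keeps application startup fast when the broker is unreachable, at the cost of occasionally skipping auto-startup on a slow network.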

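- Problem 4: The temporary fix for problem 3 brings a problem of its own. When the Kafka server is down, disabling listener auto-startup lets the application start. But once Kafka has been repaired, the only way to start the listeners under that approach is to change the startup setting on the KafkaListenerContainerFactory and restart the project, so that Spring calls start on the internalKafkaListenerEndpointRegistry bean and the containers come up. Is there a way to start and stop listener containers while the project is running? The answer is yes, and hats off once more to the experts who built this!

Partial source of KafkaListenerEndpointRegistry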

public void start() {

Iterator var1 = this.getListenerContainers().iterator();

while(var1.hasNext()) {

MessageListenerContainer listenerContainer = (MessageListenerContainer)var1.next();

this.startIfNecessary(listenerContainer);

}

}

private void startIfNecessary(MessageListenerContainer listenerContainer) {

if (this.contextRefreshed || listenerContainer.isAutoStartup()) {

listenerContainer.start();

}

}

public void stop() {

Iterator var1 = this.getListenerContainers().iterator();

while(var1.hasNext()) {

MessageListenerContainer listenerContainer = (MessageListenerContainer)var1.next();

listenerContainer.stop();

}

}

public void stop(Runnable callback) {

Collection<MessageListenerContainer> listenerContainers = this.getListenerContainers();

KafkaListenerEndpointRegistry.AggregatingCallback aggregatingCallback = new KafkaListenerEndpointRegistry.AggregatingCallback(listenerContainers.size(), callback);

Iterator var4 = listenerContainers.iterator();

while(var4.hasNext()) {

MessageListenerContainer listenerContainer = (MessageListenerContainer)var4.next();

if (listenerContainer.isRunning()) {

listenerContainer.stop(aggregatingCallback);

} else {

aggregatingCallback.run();

}

}

}

public MessageListenerContainer getListenerContainer(String id) {

Assert.hasText(id, "Container identifier must not be empty");

return (MessageListenerContainer)this.listenerContainers.get(id);

}

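Looking at the source above again, KafkaListenerEndpointRegistry has two stop methods, and both delegate to listenerContainer.stop. So if we can get hold of one specific listener container, we can stop just that container. Does the source offer a method that returns a listener container? It does: public MessageListenerContainer getListenerContainer(String id). MessageListenerContainer is the interface implemented by the concrete listener containers, so a container can be looked up by its listener id. How is that done in practice? Enough talk, here is the code!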
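
Before the real code, the mechanism can be modelled in a few lines of plain Java. The classes below are simplified stand-ins written for this illustration (RegistryModel, Container, and Registry are not the spring-kafka classes); they only mirror the start/startIfNecessary logic and the lookup by id quoted above.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class RegistryModel {

    /** Simplified stand-in for a listener container; NOT the spring-kafka class. */
    static class Container {
        private final boolean autoStartup;
        private boolean running;

        Container(boolean autoStartup) { this.autoStartup = autoStartup; }

        boolean isAutoStartup() { return autoStartup; }
        boolean isRunning() { return running; }
        void start() { running = true; }
        void stop() { running = false; }
    }

    /** Simplified stand-in for the registry: containers keyed by listener id. */
    static class Registry {
        private final Map<String, Container> containers = new LinkedHashMap<>();
        // Stays false in this sketch, which models the initial application startup.
        private final boolean contextRefreshed = false;

        void register(String id, Container container) { containers.put(id, container); }

        /** Mirrors start() and startIfNecessary() from the source quoted above. */
        void start() {
            for (Container c : containers.values()) {
                if (contextRefreshed || c.isAutoStartup()) {
                    c.start();
                }
            }
        }

        Container getListenerContainer(String id) { return containers.get(id); }
    }

    public static void main(String[] args) {
        Registry registry = new Registry();
        registry.register("test", new Container(false)); // auto-startup disabled

        registry.start(); // application startup: the container is skipped
        System.out.println("after startup: running=" + registry.getListenerContainer("test").isRunning());

        registry.getListenerContainer("test").start(); // later: started by id
        System.out.println("after manual start: running=" + registry.getListenerContainer("test").isRunning());
    }
}
```

The point of the model is that disabling auto-startup only skips the container during startup. The container stays registered under its id and can be started later on demand.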

The consumer, which also specifies the listener container id

package com.demo.kafaka_demo.kafkaDemo;

import org.apache.kafka.clients.consumer.ConsumerRecord;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.kafka.annotation.KafkaListener;

import org.springframework.stereotype.Component;

/**

* @author pengchen

* @date 2019-02-17 3:54 PM

*/

@Component

public class KafkaListenerDemo {

protected final Logger logger = LoggerFactory.getLogger(this.getClass());

@KafkaListener(id = "test",topics = "test")

public void listen(ConsumerRecord<?, ?> record) {

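// logger.info("Kafka key: {}", record.key());

// Parameterized logging also avoids a NullPointerException when the record value is null
logger.info("Consumer received Kafka message: {}", record.value());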

}

}

The producer, and how to start and stop the listener container

package com.demo.kafaka_demo.kafkaDemo;

import lombok.extern.slf4j.Slf4j;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.kafka.config.KafkaListenerEndpointRegistry;

import org.springframework.kafka.core.KafkaTemplate;

import org.springframework.web.bind.annotation.GetMapping;

import org.springframework.web.bind.annotation.RequestMapping;

import org.springframework.web.bind.annotation.RestController;

import javax.servlet.http.HttpServletRequest;

import javax.servlet.http.HttpServletResponse;

/**

* @author pengchen

* @date 2019-02-17 3:28 PM

*/

@Slf4j

@RestController

@RequestMapping("kafka")

public class KafkaProducerController {

@Autowired

private KafkaTemplate kafkaTemplate;

@Autowired

private KafkaListenerEndpointRegistry registry;

private final Logger logger = LoggerFactory.getLogger(KafkaProducerController.class);

@GetMapping("send")

public String sendKafka(HttpServletRequest request, HttpServletResponse response) {

try {

String message = request.getParameter("message");

logger.info("Kafka producer sending message={}", message);

kafkaTemplate.send("test", message);

logger.info("Producer sent message, message={}", message);

return "Message sent to Kafka";

} catch (Exception e) {

logger.error("Failed to send message to Kafka", e);

return "Failed to send message to Kafka";

}

}

@GetMapping("set")

public String setStartOrShutDown(String status) {

if ("Y".equals(status)) {

this.startListener();

return "Listener started";

} else {

this.shutDownListener();

return "Listener paused";

}

}

/**

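* Starts the container if it is not running, then resumes it in case it was paused

*/

public void startListener() {

// Start the listener container if it is not already running

System.out.println("Starting listener");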

if (!registry.getListenerContainer("test").isRunning()) {

registry.getListenerContainer("test").start();

}

registry.getListenerContainer("test").resume();

}

/**

* Pauses the listener (the container keeps running but stops consuming)

*/

public void shutDownListener() {

System.out.println("Pausing listener");

registry.getListenerContainer("test").pause();

}

}

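3. Reflections on learning Kafka

- To be completed (how does Kafka guarantee that a message is consumed, or in other words, how does the producer know that a message has been consumed?)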