Installing ELK (Elasticsearch, Logstash, Kibana) on Kubernetes 1.5

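Installing this is rather troublesome. There are plenty of articles about it online, but they are either far too complicated or far too sketchy, and after reading them you still don't know what to do. Even when I finally got something set up by following them, it turned out to be unusable.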

As before, here are the images and versions used:

index.tenxcloud.com/docker_library/elasticsearch:2.3.0

index.tenxcloud.com/docker_library/kibana:latest

pires/logstash

##1: Install the Elasticsearch cluster
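All three Elasticsearch Deployments below raise `vm.max_map_count` through the `pod.beta.kubernetes.io/init-containers` annotation, which is how Kubernetes 1.5 declares init containers. From Kubernetes 1.6 on, init containers are declared in the `initContainers` field of the pod spec instead, and the beta annotation was deprecated and later removed. A minimal sketch of the es-master pod template written that way, assuming a 1.6+ cluster; it is a fragment rather than a complete manifest, and es-client.yaml and es-data.yaml would change in the same way:

```yaml
# Fragment (assumes Kubernetes 1.6+): the pod template of es-master.yaml with
# the init container moved from the beta annotation into spec.initContainers.
template:
  metadata:
    labels:
      component: elasticsearch
      role: master
    # no pod.beta.kubernetes.io/init-containers annotation any more
  spec:
    initContainers:
    - name: sysctl
      image: busybox
      imagePullPolicy: IfNotPresent
      # Raise the kernel mmap count limit before Elasticsearch starts.
      command: ["sysctl", "-w", "vm.max_map_count=262144"]
      securityContext:
        privileged: true
    containers:
    - name: es-master
      # ... rest of the container definition unchanged ...
```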

Contents of es-master.yaml:

```yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: es-master
  labels:
    component: elasticsearch
    role: master
spec:
  template:
    metadata:
      labels:
        component: elasticsearch
        role: master
      annotations:
        pod.beta.kubernetes.io/init-containers: '[
            {
              "name": "sysctl",
              "image": "busybox",
              "imagePullPolicy": "IfNotPresent",
              "command": ["sysctl", "-w", "vm.max_map_count=262144"],
              "securityContext": {
                "privileged": true
              }
            }
          ]'
    spec:
      containers:
      - name: es-master
        securityContext:
          privileged: true
          capabilities:
            add:
            - IPC_LOCK
        image: index.tenxcloud.com/docker_library/elasticsearch:2.3.0
        imagePullPolicy: Always
        env:
        - name: NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: "CLUSTER_NAME"
          value: "myesdb"
        - name: NODE_MASTER
          value: "true"
        - name: NODE_INGEST
          value: "false"
        - name: NODE_DATA
          value: "false"
        - name: HTTP_ENABLE
          value: "false"
        - name: "ES_JAVA_OPTS"
          value: "-Xms256m -Xmx256m"
        ports:
        - containerPort: 9300
          name: transport
          protocol: TCP
        volumeMounts:
        - name: storage
          mountPath: /data
      volumes:
      - emptyDir:
          medium: ""
        name: "storage"
```

Contents of es-client.yaml:

```yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: es-client
  labels:
    component: elasticsearch
    role: client
spec:
  template:
    metadata:
      labels:
        component: elasticsearch
        role: client
      annotations:
        pod.beta.kubernetes.io/init-containers: '[
            {
              "name": "sysctl",
              "image": "busybox",
              "imagePullPolicy": "IfNotPresent",
              "command": ["sysctl", "-w", "vm.max_map_count=262144"],
              "securityContext": {
                "privileged": true
              }
            }
          ]'
    spec:
      containers:
      - name: es-client
        securityContext:
          privileged: true
          capabilities:
            add:
            - IPC_LOCK
        image: index.tenxcloud.com/docker_library/elasticsearch:2.3.0
        imagePullPolicy: Always
        env:
        - name: NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: "CLUSTER_NAME"
          value: "myesdb"
        - name: NODE_MASTER
          value: "false"
        - name: NODE_DATA
          value: "false"
        - name: HTTP_ENABLE
          value: "true"
        - name: "ES_JAVA_OPTS"
          value: "-Xms256m -Xmx256m"
        ports:
        - containerPort: 9200
          name: http
          protocol: TCP
        - containerPort: 9300
          name: transport
          protocol: TCP
        volumeMounts:
        - name: storage
          mountPath: /data
      volumes:
      - emptyDir:
          medium: ""
        name: "storage"
```

Contents of es-data.yaml:

```yaml
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: es-data
  labels:
    component: elasticsearch
    role: data
spec:
  template:
    metadata:
      labels:
        component: elasticsearch
        role: data
      annotations:
        pod.beta.kubernetes.io/init-containers: '[
            {
              "name": "sysctl",
              "image": "busybox",
              "imagePullPolicy": "IfNotPresent",
              "command": ["sysctl", "-w", "vm.max_map_count=262144"],
              "securityContext": {
                "privileged": true
              }
            }
          ]'
    spec:
      containers:
      - name: es-data
        securityContext:
          privileged: true
          capabilities:
            add:
            - IPC_LOCK
        image: index.tenxcloud.com/docker_library/elasticsearch:2.3.0
        imagePullPolicy: Always
        env:
        - name: NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        - name: "CLUSTER_NAME"
          value: "myesdb"
        - name: NODE_MASTER
          value: "false"
        - name: NODE_INGEST
          value: "false"
        - name: HTTP_ENABLE
          value: "false"
        - name: "ES_JAVA_OPTS"
          value: "-Xms256m -Xmx256m"
        ports:
        - containerPort: 9300
          name: transport
          protocol: TCP
        volumeMounts:
        - name: storage
          mountPath: /data
      volumes:
      - emptyDir:
          medium: ""
        name: "storage"
```

Contents of es-discovery-svc.yaml:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: elasticsearch-discovery
  labels:
    component: elasticsearch
    role: master
spec:
  selector:
    component: elasticsearch
    role: master
  ports:
  - name: transport
    port: 9300
    protocol: TCP
```

Contents of es-svc.yaml:

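```yaml
apiVersion: v1
kind: Service
metadata:
  name: elasticsearch
  labels:
    component: elasticsearch
    role: client
spec:
  type: LoadBalancer
  selector:
    component: elasticsearch
    role: client
  ports:
  - name: http
    port: 9200
    protocol: TCP
```

Contents of es-svc-1.yaml, an ExternalName Service that makes the `elasticsearch` Service in `default` reachable as `elasticsearch-logging` in the `kube-system` namespace (the host name the fluentd image sends logs to, as its log in step 4 shows):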

```yaml
apiVersion: v1
kind: Service
metadata:
  name: elasticsearch-logging
  namespace: kube-system
  labels:
    k8s-app: elasticsearch
    kubernetes.io/name: "elasticsearch"
spec:
  type: ExternalName
  externalName: elasticsearch.default.svc.cluster.local
  ports:
  - port: 9200
    targetPort: 9200
```

Once these files are written, create the resources with `kubectl create -f`, either one file at a time or all at once:

```
kubectl create -f es-client.yaml -f es-data.yaml -f es-discovery-svc.yaml -f es-master.yaml -f es-svc-1.yaml -f es-svc.yaml
```

When that finishes, check the pods:

```
[root@localhost github]# kubectl get pods
NAME                         READY     STATUS    RESTARTS   AGE
busybox                      1/1       Running   2          2h
es-client-1983128803-k0g83   1/1       Running   0          2h
es-data-4107927351-f621b     1/1       Running   0          2h
es-master-1554717905-pg6tv   1/1       Running   0          2h
```

Then check the logs:

```
[root@localhost github]# kubectl logs es-master-1554717905-pg6tv
[2016-12-28 04:27:01,727][INFO ][node ] [Vindicator] version[2.3.0], pid[1], build[8371be8/2016-03-29T07:54:48Z]
[2016-12-28 04:27:01,727][INFO ][node ] [Vindicator] initializing ...
[2016-12-28 04:27:02,154][INFO ][plugins ] [Vindicator] modules [reindex, lang-expression, lang-groovy], plugins [], sites []
[2016-12-28 04:27:02,174][INFO ][env ] [Vindicator] using [1] data paths, mounts [[/usr/share/elasticsearch/data (/dev/mapper/centos-root)]], net usable_space [92.2gb], net total_space [99.9gb], spins? [possibly], types [xfs]
[2016-12-28 04:27:02,174][INFO ][env ] [Vindicator] heap size [247.5mb], compressed ordinary object pointers [true]
[2016-12-28 04:27:03,441][INFO ][node ] [Vindicator] initialized
[2016-12-28 04:27:03,441][INFO ][node ] [Vindicator] starting ...
[2016-12-28 04:27:03,493][INFO ][transport ] [Vindicator] publish_address {10.32.0.4:9300}, bound_addresses {[::]:9300}
[2016-12-28 04:27:03,497][INFO ][discovery ] [Vindicator] elasticsearch/x5bP8CfuTNOjId9FnM0EWQ
[2016-12-28 04:27:06,538][INFO ][cluster.service ] [Vindicator] new_master {Vindicator}{x5bP8CfuTNOjId9FnM0EWQ}{10.32.0.4}{10.32.0.4:9300}, reason: zen-disco-join(elected_as_master, [0] joins received)
[2016-12-28 04:27:06,566][INFO ][http ] [Vindicator] publish_address {10.32.0.4:9200}, bound_addresses {[::]:9200}
[2016-12-28 04:27:06,566][INFO ][node ] [Vindicator] started
[2016-12-28 04:27:06,591][INFO ][gateway ] [Vindicator] recovered [0] indices into cluster_state
```

```
[root@localhost github]# kubectl logs es-data-4107927351-f621b
[2016-12-28 04:26:23,791][INFO ][node ] [Cassandra Nova] version[2.3.0], pid[1], build[8371be8/2016-03-29T07:54:48Z]
[2016-12-28 04:26:23,791][INFO ][node ] [Cassandra Nova] initializing ...
[2016-12-28 04:26:24,219][INFO ][plugins ] [Cassandra Nova] modules [reindex, lang-expression, lang-groovy], plugins [], sites []
[2016-12-28 04:26:24,240][INFO ][env ] [Cassandra Nova] using [1] data paths, mounts [[/usr/share/elasticsearch/data (/dev/mapper/centos-root)]], net usable_space [42gb], net total_space [50gb], spins? [possibly], types [xfs]
[2016-12-28 04:26:24,240][INFO ][env ] [Cassandra Nova] heap size [247.5mb], compressed ordinary object pointers [true]
[2016-12-28 04:26:25,537][INFO ][node ] [Cassandra Nova] initialized
[2016-12-28 04:26:25,537][INFO ][node ] [Cassandra Nova] starting ...
[2016-12-28 04:26:25,585][INFO ][transport ] [Cassandra Nova] publish_address {10.46.0.6:9300}, bound_addresses {[::]:9300}
[2016-12-28 04:26:25,589][INFO ][discovery ] [Cassandra Nova] elasticsearch/hcnJzTAZRBK517ZsLt2LQw
[2016-12-28 04:26:28,626][INFO ][cluster.service ] [Cassandra Nova] new_master {Cassandra Nova}{hcnJzTAZRBK517ZsLt2LQw}{10.46.0.6}{10.46.0.6:9300}, reason: zen-disco-join(elected_as_master, [0] joins received)
[2016-12-28 04:26:28,655][INFO ][http ] [Cassandra Nova] publish_address {10.46.0.6:9200}, bound_addresses {[::]:9200}
[2016-12-28 04:26:28,655][INFO ][node ] [Cassandra Nova] started
[2016-12-28 04:26:28,678][INFO ][gateway ] [Cassandra Nova] recovered [0] indices into cluster_state
```

Note that in both logs the cluster name on the `discovery` line is `elasticsearch`, not the `myesdb` set through `CLUSTER_NAME`. Each node also elects itself master (`elected_as_master, [0] joins received`), and the master node binds HTTP on 9200 even though `HTTP_ENABLE` is `"false"`. This suggests that this image does not read the `CLUSTER_NAME`/`NODE_*` variables, so each pod is probably running as its own single-node cluster rather than as part of one cluster.

##2: Install Kibana and Logstash

index.tenxcloud.com/docker_library/kibana:latest (the latest version as of 2016-12-28; other versions are worth trying as well)

pires/logstash
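Because `latest` moves over time, pinning the Kibana tag is safer, and the Kibana version has to match Elasticsearch: Kibana 4.5.x is the release line for Elasticsearch 2.3.x, while Kibana 5.x requires Elasticsearch 5.x. Separately, if this registry image is a mirror of the official Docker Hub `kibana` image (an assumption worth checking against the image's entrypoint), it reads the Elasticsearch address from `ELASTICSEARCH_URL`, not from the `KIBANA_ES_URL` variable used in the controller below. A sketch of the container section with both changes, assuming the registry offers a `4.5` tag:

```yaml
# Fragment for kibana-controller.yaml. Assumptions: the registry provides a
# kibana:4.5 tag, and the image reads ELASTICSEARCH_URL like the official
# Docker Hub kibana image does.
containers:
- name: kibana
  # Kibana 4.5.x is the line compatible with Elasticsearch 2.3.x.
  image: index.tenxcloud.com/docker_library/kibana:4.5
  env:
  - name: ELASTICSEARCH_URL
    value: "http://elasticsearch.default.svc.cluster.local:9200"
  ports:
  - containerPort: 5601
    name: http
    protocol: TCP
```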

Next, the YAML files.

Contents of kibana-controller.yaml:

```yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: kibana
  namespace: default
  labels:
    component: elk
    role: kibana
spec:
  replicas: 1
  selector:
    component: elk
    role: kibana
  template:
    metadata:
      labels:
        component: elk
        role: kibana
    spec:
      serviceAccount: elk
      containers:
      - name: kibana
        image: index.tenxcloud.com/docker_library/kibana:latest
        env:
        - name: KIBANA_ES_URL
          value: "http://elasticsearch.default.svc.cluster.local:9200"
        - name: KUBERNETES_TRUST_CERT
          value: "true"
        ports:
        - containerPort: 5601
          name: http
          protocol: TCP
```

Contents of kibana-service.yaml:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: kibana
  namespace: default
  labels:
    component: elk
    role: kibana
spec:
  selector:
    component: elk
    role: kibana
  type: NodePort
  ports:
  - name: http
    port: 80
    nodePort: 30080
    targetPort: 5601
    protocol: TCP
```

Contents of logstash-controller.yaml:

```yaml
apiVersion: v1
kind: ReplicationController
metadata:
  name: logstash
  namespace: default
  labels:
    component: elk
    role: logstash
spec:
  replicas: 1
  selector:
    component: elk
    role: logstash
  template:
    metadata:
      labels:
        component: elk
        role: logstash
    spec:
      serviceAccount: elk
      containers:
      - name: logstash
        image: pires/logstash
        env:
        - name: KUBERNETES_TRUST_CERT
          value: "true"
        ports:
        - containerPort: 5043
          name: lumberjack
          protocol: TCP
        volumeMounts:
        - mountPath: /certs
          name: certs
      volumes:
      - emptyDir:
          medium: ""
        name: "storage"
      - hostPath:
          path: "/tmp"
        name: "certs"
```

Contents of logstash-service.yaml:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: logstash
  namespace: default
  labels:
    component: elk
    role: logstash
spec:
  selector:
    component: elk
    role: logstash
  ports:
  - name: lumberjack
    port: 5043
    protocol: TCP
```

Contents of service-account.yaml:

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: elk
```

Then install them:

```
kubectl create -f service-account.yaml -f logstash-service.yaml -f logstash-controller.yaml -f kibana-service.yaml -f kibana-controller.yaml
```

##3: Install fluentd-elasticsearch

gcr.io/google-containers/fluentd-elasticsearch:1.20

Again, pull the image in advance, preferably on every node.
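Pre-pulling only helps if the kubelet is allowed to use the local copy. For an image with an explicit tag other than `latest`, such as `fluentd-elasticsearch:1.20`, Kubernetes already defaults to `imagePullPolicy: IfNotPresent`; with `Always` (as in the Elasticsearch Deployments above) or with a `latest` tag, the kubelet tries to pull from the registry every time a pod starts. A sketch that states the policy explicitly in the fluentd container:

```yaml
# Fragment for fluentd.yaml: let the kubelet use the image pre-pulled onto
# each node instead of fetching it from gcr.io again.
containers:
- name: fluentd-elasticsearch
  image: gcr.io/google-containers/fluentd-elasticsearch:1.20
  # IfNotPresent is already the default for a non-"latest" tag;
  # setting it makes the reliance on pre-pulled images explicit.
  imagePullPolicy: IfNotPresent
```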

Contents of fluentd.yaml:

```yaml
apiVersion: extensions/v1beta1
kind: DaemonSet
metadata:
  name: fluentd-elasticsearch
  namespace: kube-system
  labels:
    k8s-app: fluentd-logging
spec:
  template:
    metadata:
      labels:
        name: fluentd-elasticsearch
    spec:
      containers:
      - name: fluentd-elasticsearch
        image: gcr.io/google-containers/fluentd-elasticsearch:1.20
        resources:
          limits:
            memory: 200Mi
          requests:
            cpu: 100m
            memory: 200Mi
        volumeMounts:
        - name: varlog
          mountPath: /var/log
        - name: varlibdockercontainers
          mountPath: /var/lib/docker/containers
          readOnly: true
      terminationGracePeriodSeconds: 30
      volumes:
      - name: varlog
        hostPath:
          path: /var/log
      - name: varlibdockercontainers
        hostPath:
          path: /var/lib/docker/containers
```

Create it, and the installation is complete:

```
kubectl create -f fluentd.yaml
```
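The manifests in this post use `extensions/v1beta1` for the Deployments and for this DaemonSet, which is what Kubernetes 1.5 expects. Kubernetes 1.16 and later no longer serve these kinds from `extensions/v1beta1`; they need `apps/v1` (available since 1.9), which also makes `spec.selector` mandatory. A sketch of the top of fluentd.yaml for such a cluster, with the pod spec itself left unchanged:

```yaml
# Fragment (assumes Kubernetes 1.9+): fluentd.yaml moved to apps/v1.
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd-elasticsearch
  namespace: kube-system
  labels:
    k8s-app: fluentd-logging
spec:
  # apps/v1 requires an explicit selector matching the template labels.
  selector:
    matchLabels:
      name: fluentd-elasticsearch
  template:
    metadata:
      labels:
        name: fluentd-elasticsearch
    spec:
      # ... containers, volumes etc. unchanged from fluentd.yaml ...
```

The Elasticsearch Deployments would move to `apps/v1` the same way, each with a `selector.matchLabels` matching its `component`/`role` labels.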

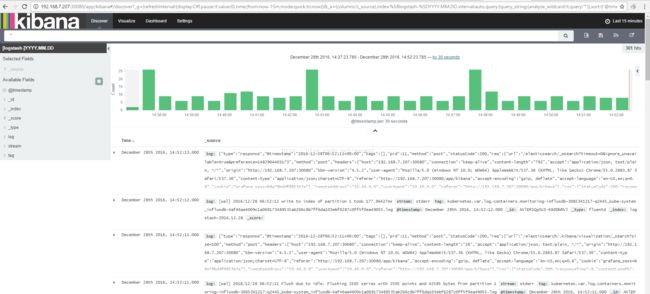

##4: Verify

```
[root@localhost elk]# kubectl get pods --all-namespaces
NAMESPACE     NAME                                    READY     STATUS    RESTARTS   AGE
default       busybox                                 1/1       Running   3          3h
default       es-client-1983128803-k0g83              1/1       Running   0          2h
default       es-data-4107927351-f621b                1/1       Running   0          2h
default       es-master-1554717905-pg6tv              1/1       Running   0          2h
default       kibana-39wgw                            1/1       Running   0          2h
default       logstash-18k38                          1/1       Running   0          2h
kube-system   dummy-2088944543-xjj21                  1/1       Running   0          1d
kube-system   etcd-centos-master                      1/1       Running   0          1d
kube-system   fluentd-elasticsearch-1mln9             1/1       Running   0          2h
kube-system   fluentd-elasticsearch-qrl81             1/1       Running   0          2h
kube-system   fluentd-elasticsearch-wkw0n             1/1       Running   0          2h
kube-system   heapster-2193675300-j1jxn               1/1       Running   0          1d
kube-system   kube-apiserver-centos-master            1/1       Running   0          1d
kube-system   kube-controller-manager-centos-master   1/1       Running   0          1d
kube-system   kube-discovery-1769846148-c45gd         1/1       Running   0          1d
kube-system   kube-dns-2924299975-96xms               4/4       Running   0          1d
kube-system   kube-proxy-33lsn                        1/1       Running   0          1d
kube-system   kube-proxy-jnz6q                        1/1       Running   0          1d
kube-system   kube-proxy-vfql2                        1/1       Running   0          1d
kube-system   kube-scheduler-centos-master            1/1       Running   0          1d
kube-system   kubernetes-dashboard-3000605155-8mxgz   1/1       Running   0          1d
kube-system   monitoring-grafana-810108360-h92v7      1/1       Running   0          1d
kube-system   monitoring-influxdb-3065341217-q2445    1/1       Running   0          1d
kube-system   weave-net-k5tlz                         2/2       Running   0          1d
kube-system   weave-net-q3n89                         2/2       Running   0          1d
kube-system   weave-net-x57k7                         2/2       Running   0          1d
```

Then check the fluentd logs:

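```
kubectl --namespace=kube-system logs fluentd-elasticsearch-1mln9
```

The output includes the following lines; the first two show fluentd connecting to Elasticsearch successfully: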

```
2016-12-28 05:48:08 +0000 [info]: Connection opened to Elasticsearch cluster => {:host=>"elasticsearch-logging", :port=>9200, :scheme=>"http"}
2016-12-28 05:48:08 +0000 [warn]: retry succeeded. plugin_id="object:3fa39c8a7e2c"
2017-01-12 07:04:48 +0000 [warn]: suppressed same stacktrace
2017-01-12 07:04:48 +0000 [warn]: record_reformer: Fluent::BufferQueueLimitError queue size exceeds limit /opt/td-agent/embedded/lib/ruby/gems/2.1.0/gems/fluentd-0.12.12/lib/fluent/buffer.rb:189:in `block in emit'
2017-01-12 07:04:48 +0000 [warn]: emit transaction failed: error_class=Fluent::BufferQueueLimitError error="queue size exceeds limit" tag="var.log.containers.tomcat-apr-dm-1449051961-shb7d_default_tomcat-apr-a251826108724b4265bd92b7fcf48177d82d6527c1185818129f62d2c24b7442.log"
2017-01-12 07:04:48 +0000 [warn]: suppressed same stacktrace
2017-01-12 07:04:48 +0000 [warn]: record_reformer: Fluent::BufferQueueLimitError queue size exceeds limit /opt/td-agent/embedded/lib/ruby/gems/2.1.0/gems/fluentd-0.12.12/lib/fluent/buffer.rb:189:in `block in emit'
```

The later warnings, dated 2017-01-12, are not a success message: `BufferQueueLimitError ... queue size exceeds limit` means fluentd's output buffer queue was full, so new records (here from a `tomcat-apr` container log) could not be queued for Elasticsearch. This typically indicates that Elasticsearch is not accepting data as fast as the logs arrive.