论文阅读笔记(三十七):MegDet: A Large Mini-Batch Object Detector

The development of object detection in the era of deep learning, from R-CNN [11], Fast/Faster R-CNN [10, 31] to recent Mask R-CNN [14] and RetinaNet [24], mainly come from novel network, new framework, or loss design. However, mini-batch size, a key factor for the training of deep neural networks, has not been well studied for object detection. In this paper, we propose a Large Mini-Batch Object Detector (MegDet) to enable the training with a large minibatch size up to 256, so that we can effectively utilize at most 128 GPUs to significantly shorten the training time. Technically, we suggest a warmup learning rate policy and Cross-GPU Batch Normalization, which together allow us to successfully train a large mini-batch detector in much less time (e.g., from 33 hours to 4 hours), and achieve even better accuracy. The MegDet is the backbone of our submission (mmAP 52.5%) to COCO 2017 Challenge, where we won the 1st place of Detection task.

目标检测在深入学习时代的发展, 从R-CNN [11], Fast/Faster R-CNN [10, 31] 到最近的Mask R-CNN [14] 和 RetinaNet [24], 主要来自新的网络, 新的框架或loss设计。然而, 小批量是深层神经网络训练的关键因素, 在目标检测方面还没有得到很好的研究。本文提出了一个Large Mini-Batch Object Detector (MegDet), 使 large minibatch size达到 256, 使我们能够有效地利用最多 128 GPUs 来显著缩短训练时间。从技术上讲, 我们建议一个warmup learning rate策略和Cross-GPU Batch Normalization, 这使得我们能够在更少的时间 (例如从33小时到4小时) 成功地训练一个large mini-batch检测器, 并取得更高的精确度。MegDet 是我们提交 (mmAP 52.5%) COCO2017挑战的backbone,并且赢得了检测任务第一名。

Tremendous progresses have been made on CNN-based object detection, since seminal work of R-CNN [11], Fast/Faster R-CNN series [10, 31], and recent state-of-theart detectors like Mask R-CNN [14] and RetinaNet [24]. Taking COCO [25] dataset as an example, its performance has been boosted from 19.7 AP in Fast R-CNN [10] to 39.1 AP in RetinaNet [24], in just two years. The improvements are mainly due to better backbone network [16], new detection framework [31], novel loss design [24], improved pooling method [5, 14], and so on [19].

自从R-CNN [11],Fast/Faster R-CNN系列[10,31]和最近的state-of-theart检测器如 Mask R-CNN和RetinaNet [24]的开创性工作以来,在基于CNN的目标检测方面取得了巨大进展[ 14]。以COCO [25]数据集为例,在短短的两年时间里,其性能从Fast R-CNN [10]的19.7 AP提升到RetinaNet [39]的39.1 AP。这些改进主要是由于更好的backbone network[16],新的检测框架[31],新颖的loss设计[24],改进的pooling方法[5,14]等[19]。

A recent trend on CNN-based image classification uses very large min-batch size to significantly speed up the training. For example, the training of ResNet-50 can be accomplished in an hour [13] or even in 31 minutes [39] , using mini-batch size 8,192 or 16,000, with little or small sacrifice on the accuracy. In contract, the mini-batch size remains very small (e.g., 2-16) in object detection literatures. Therefore in this paper, we study the problem of mini-batch size in object detection and present a technical solution to successfully train a large mini-batch size object detector.

基于CNN的图像分类最近的趋势是使用非常大的min-batch来显着加快训练速度。例如,ResNet-50的训练可以在一小时内完成[13],甚至在31分钟内完成[39],使用8,192或16,000的mini-batch size,在精度上做小的牺牲。在物体检测文献中,mini-batch size保持非常小(例如2-16)。因此,本文研究了物体检测中mini-batch size的问题,并提出了一种技术方案,以成功训练一个large mini-batch size物体检测器。

What is wrong with the small mini-batch size? Originating from the object detector R-CNN series, a mini-batch involving only 2 images is widely adopted in popular detectors like Faster R-CNN and R-FCN. Though in state-of-the-art detectors like RetinaNet and Mask R-CNN the mini-batch size is increased to 16, which is still quite small compared with the mini-batch size (e.g., 256) used in current image classification. There are several potential drawbacks associated with small mini-batch size. First, the training time is notoriously lengthy. For example, the training of ResNet152 on COCO takes 3 days, using the mini-bath size 16 on a machine with 8 Titian XP GPUs. Second, training with small mini-batch size fails to provide accurate statistics for batch normalization [20] (BN). In order to obtain a good batch normalization statistics, the mini-batch size for ImageNet classification network is usually set to 256, which is significantly larger than the mini-batch size used in current object detector setting.

小mini-batch size有什么问题?源于物体检测器R-CNN系列,在R-CNN和R-FCN等流行检测器中广泛采用仅包含2幅图像的mini-batch产品。虽然在像RetinaNet和Mask R-CNN这样state-of-the-art 检测器中,mini-batch size增加到了16,与当前图像分类中使用的mini-batch size(例如256)相比,这仍然很小。小的mini-batch size有几个潜在的缺点。首先,训练时间非常冗长。例如,在COCO上ResNet152的训练需要3天,在8台Titian XP GPU的机器上使用16的mini-bath size。其次,小的mini-batch size训练不能提供批量标准化[20](BN)的准确统计数据。为了获得良好的批量归一化统计数据,ImageNet分类网络的mini-bath size通常设置为256,这比当前目标检测器设置中使用的mini-bath size要大得多。

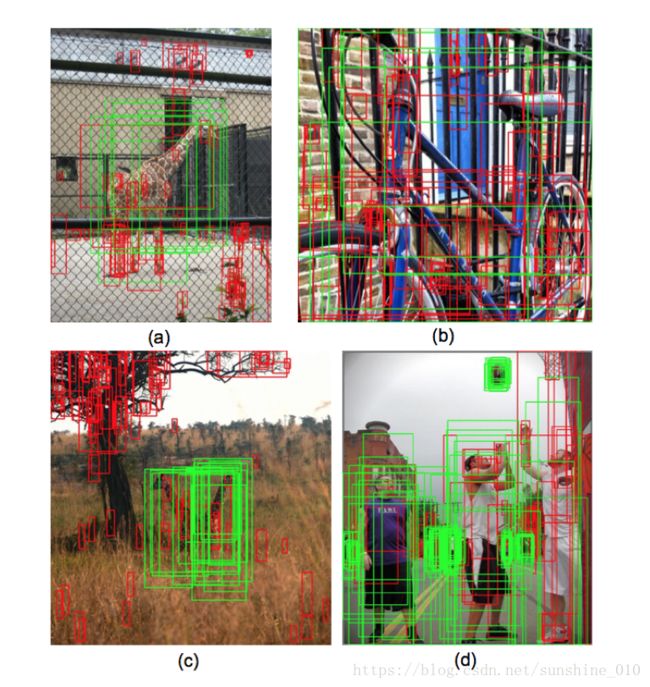

Last but not the least, the number of positive and negative training examples within a small mini-batch are more likely imbalanced, which might hurt the final accuracy. Figure 2 gives some examples with imbalanced positive and negative proposals. And Table 1 compares the statistics of two detectors with different mini-batch sizes, at different training epochs on COCO dataset.

最后但并非最不重要的是,小的mini-batch中的positive和negative训练示例的数量更可能不平衡,这可能会影响最终的准确性。图2给出了一些不平衡的positive和negative提议的例子。表1比较了COCO数据集不同训练时期两种不同mini-batch sizes检测器的统计数据。

What is the challenge to simply increase the min-batch size? As in the image classification problem, the main dilemma we are facing is: the large min-batch size usually requires a large learning rate to maintain the accuracy, according to “equivalent learning rate rule” [13, 21]. But a large learning rate in object detection could be very likely leading to the failure of convergence; if we use a smaller learning rate to ensure the convergence, an inferior results are often obtained.

简单地增加mini-bath size有什么挑战?与图像分类问题一样,我们面临的主要困境是:根据“equivalent learning rate rule”[13,21],大的min-batch size通常需要大量的学习速率来保持准确性。但是,目标检测中的大量学习速率很可能导致收敛失败;如果我们使用较小的学习率来确保收敛,通常会得到较差的结果。

To tackle the above dilemma, we propose a solution as follows. First, we present a new explanation of linear scaling rule and borrow the “warmup” learning rate policy [13] to gradually increase the learning rate at the very early stage. This ensures the convergence of training. Second, to address the accuracy and convergence issues, we introduce Cross-GPU Batch Normalization (CGBN) for better BN statistics. CGBN not only improves the accuracy but also makes the training much more stable. This is significant because we are able to safely enjoy the rapidly increased computational power from industry.

为了解决上述困境,我们提出如下解决方案。首先,我们提出linear scaling rule的新解释,并借用“warmup”学习速率策略[13],以在最初阶段逐渐提高学习速率。这确保了训练的收敛。其次,为了解决准确性和收敛性问题,我们引入了Cross-GPU Batch Normalization(CGBN)以获得更好的BN统计。 CGBN不仅提高了准确性,而且使训练更加稳定。这很重要,因为我们能够安全地享受行业快速增长的计算能力。

Our MegDet (ResNet-50 as backbone) can finish COCO training in 4 hours on 128 GPUs, reaching even higher accuracy. In contrast, the small mini-batch counterpart takes 33 hours with lower accuracy. This means that we can speed up the innovation cycle by nearly an order-of-magnitude with even better performance, as shown in Figure 1. Based on MegDet, we secured 1st place of COCO 2017 Detection Challenge.

我们的MegDet(ResNet-50作为backbone)可以在4个小时内完成128个GPU的COCO训练,达到更高的准确度。相比之下,小的mini-batch对应机器以33小时准确度较低。这意味着我们可以将创新周期加快几个数量级,并获得更好的性能,如图1所示。基于MegDet,我们获得了COCO 2017检测挑战赛的第一名。

Our technical contributions can be summarized as:

• We give a new interpretation of linear scaling rule, in the context of object detection, based on an assumption of maintaining equivalent loss variance.

• We are the first to train BN in the object detection framework. We demonstrate that our Cross-GPU Batch Normalization not only benefits the accuracy, but also makes the training easy to converge, especially for the large mini-batch size.

• We are the first to finish the COCO training (based on ResNet-50) in 4 hours, using 128 GPUs, and achieving higher accuracy.

• Our MegDet leads to the winning of COCO 2017 Detection Challenge.

我们的技术贡献可以概括为:

•基于假设,我们在物体检测的上下文中给出linear scaling rule的新解释

保持等价的loss变化。

•我们是第一个在物体检测框架中训练BN的。我们证明,我们的 Cross-GPU Batch Normalization不仅有利于精度,而且还使得训练易于收敛,特别是对于large mini-batch size。

•我们是第一个在4小时内完成COCO训练(基于ResNet-50),使用128个GPU并实现更高的精度的。

•我们的MegDet成就了COCO 2017检测挑战赛的胜利。

CNN-based detectors have been the mainstream in current academia and industry. We can roughly divide existing CNN-based detectors into two categories: one-stage detectors like SSD [26], YOLO [29, 30] and recent RetinaNet [24], and two-stage detectors [33, 1] like Faster RCNN [31], R-FCN [6] and Mask-RCNN [14].

基于CNN的检测器一直是当前学术界和工业界的主流。我们可以大致将现有的基于CNN的检测器分为两类:像SSD [26],YOLO [29,30]和最近的RetinaNet [24]等one-stage检测器,以及two-stage检测器[33,1] Faster RCNN [31], R-FCN [6] 和Mask-RCNN [14]。

For two-stage detectors, let us start from the R-CNN family. R-CNN [11] was first introduced in 2014. It employs Selective Search [37] to generate a set of region proposals and then classifies the warped patches through a CNN recognition model. As the computation of the warp process is intensive, SPPNet [15] improves the R-CNN by performing classification on the pooled feature maps based on a spatial pyramid pooling rather than classifying on the resized raw images. Fast-RCNN [10] simplifies the Spatial Pyramid Pooling (SPP) to ROIPooling. Although reasonable performance has been obtained based on FastRCNN, it still replies on traditional methods like selective search to generate proposals. Faster-RCNN [31] replaces the traditional region proposal method with the Region Proposal Network (RPN), and proposes an end-to-end detection framework. The computational cost of Faster-RCNN will increase dramatically if the number of proposals is large. In R-FCN [6], position-sensitive pooling is introduced to obtain a speed-accuracy trade-off. Recent works are more focusing on improving detection performance. Deformable ConvNets [7] uses the learned offsets to convolve different locations of feature maps, and forces the networks to focus on the objects. FPN [23] introduces the feature pyramid technique and makes significant progress on small object detection. As FPN provides a good trade-off between accuracy and implementation, we use it as the default detection framework. To address the alignment issue, Mask R-CNN [14] introduces the ROIAlign and achieves state-of-the-art results for both object detection and instance segmentation.

对于two-stage检测器,让我们从R-CNN家族开始。 R-CNN [11]于2014年首次引入。它采用选择性搜索[37]来生成一组区域提议,然后通过CNN识别模型对变形的补丁进行分类。由于扭曲过程的计算量很大,SPPNet [15]通过对基于空间金字塔池的混合特征图进行分类而不是对调整大小的原始图像进行分类来改进R-CNN。 Fast-RCNN [10]简化了ROIPooling的空间金字塔池(SPP)。尽管基于Fast R-CNN获得了合理的性能,但它仍然回答传统方法(如选择性搜索)以生成提议。 Faster-RCNN [31]用区域提议网络(RPN)取代了传统的区域提议方法,并提出了一种端到端的检测框架。如果提案数量很大,Faster R-CNN的计算成本将显着增加。在R-FCN [6]中,引入位置敏感池化以获得速度精度的折衷。最近的工作更侧重于提高检测性能。Deformable ConvNets[7]使用学习的偏移量来卷积特征映射的不同位置,并且强制网络专注于物体。 FPN [23]引入了特征金字塔技术,并在小物体检测方面取得重大进展。由于FPN在准确性和实现之间提供了良好的折衷,我们将其用作默认检测框架。为了解决对齐问题,Mask R-CNN [14]引入了ROI Align并实现了物体检测和实例分割的最新结果。

Different from two-stage detectors, which involve a proposal and refining step, one-stage detectors usually run faster. In YOLO [29, 30], a convolutional network is followed with a fully connected layer to obtain classification and regression results based on a 7 × 7 grid. SSD [26] presents a fully convolutional network with different feature layers targeting different anchor scales. Recently, RetinaNet is introduced in [24] based on the focal loss, which can significantly reduce false positives in one-stage detectors.

与涉及proposal 和 refining步骤的two-stage检测器不同,one-stage检测器通常运行得更快。在YOLO [29,30]中,卷积网络跟随一个完全连接的层,以获得基于7×7网格的分类和回归结果。 SSD [26]提出了一个完全卷积网络,其中不同的特征层面向不同的anchor scales。最近,基于focal loss的RetinaNet被引入了[24],它可以显着减少one-stage 检测器中的false positives。

Large mini-batch training has been an active research topic in image classification. In [13], imagenet training based on ResNet50 can be finished in one hour. [39] presents a training setting which can finish the ResNet50 training in 31 minutes without losing classification accuracy. Besides the training speed, [17] investigates the generalization gap between large mini-batch and small mini-batch, and propose the novel model and algorithm to eliminate the gap. However, the topic of large mini-batch training for object detection is rarely discussed so far.

Large mini-batch训练一直是图像分类中的一个活跃的研究课题。在[13]中,基于ResNet50的imagenet训练可以在一个小时内完成。 [39]提供了一个训练设置,可以在31分钟内完成ResNet50训练,而不会丢失分类准确性。除了训练速度之外,[17]研究了large mini-batch 和 small mini-batch之间的泛化差距,并提出了消除差距的新模型和算法。然而,迄今为止很少讨论用于物体检测的large mini-batch训练的主题。

In this section, we present our Large Mini-Batch Detector (MegDet), to finish the training in less time while achieving higher accuracy.

在本节中,我们将介绍我们的Large Mini-Batch Detector(MegDet),以在更短的时间内完成训练,同时实现更高的准确度。

3.1. Problems with Small Mini-Batch Size

The early generation of CNN-based detectors use very small mini-batch size like 2 in Faster-RCNN and R-FCN. Even in state-of-the-art detectors like RetinaNet and Mask R-CNN, the batch size is set as 16. There exist a few problems when training with a small mini-batch size. First, we have to pay much longer training time if a small mini-batch size is utilized for training. As shown in Figure 1, the training of a ResNet-50 detector based on a mini-batch size of 16 takes more than 30 hours. With the original mini-batch size 2, the training time could be more than one week. Second, in the training of detector, we usually fix the statistics of Batch Normalization and use the pre-computed values on ImageNet dataset, since the small mini-batch size is not applicable to re-train the BN layers. It is a sub-optimal tradeoff since the two datasets, COCO and ImageNet, are much different. Last but not the least, the ratio of positive and negative samples could be very imbalanced. In Table 1, we provide the statistics for the ratio of positive and negative training examples. We can see that a small mini-batch size leads to more imbalanced training examples, especially at the initial stage. This imbalance may affect the overall detection performance.

3.1 Small Mini-Batch Size的问题

早期的基于CNN的检测器在Faster R-CNN和R-FCN中使用非常小的mini-batch size,如2。即使在RetinaNet和Mask R-CNN等最state-of-the-art的检测器中,batch size也设置为16.使用小的 mini-batch size进行训练时存在一些问题。首先,如果使用小的 mini-batch size训练,我们必须支付更长的训练时间。如图1所示,基于min-batch 16的ResNet-50检测器的训练需要超过30小时。由于原始mini-batch size为2,训练时间可能超过一周。其次,在检测器的训练中,我们通常修复批量归一化的统计量并在ImageNet数据集上使用预先计算的值,因为小的mini-bath size不适用于重新训练BN层。这是一个次优的折衷,因为两个数据集COCO和ImageNet差别很大。最后但并非最不重要的是,positive和negative样本的比例可能非常不平衡。在表1中,我们提供了positive和negative训练示例比例的统计数据。我们可以看到,小的mini-batch size导致更多不平衡的训练实例,特别是在初始阶段。这种不平衡可能会影响整体检测性能。

As we discussed in the introduction, simply increasing the mini-batch size has to deal with the tradeoff between convergence and accuracy. To address this issue, we first discuss the learning rate policy for the large mini-batch.

正如我们在介绍中所讨论的,简单地增加mini-batch size必须处理收敛和准确性之间的折中。为了解决这个问题,我们首先讨论large mini-batch的学习率政策。

We have presented a large mini-batch size detector, which achieved better accuracy in much shorter time. This is remarkable because our research cycle has been greatly accelerated. As a result, we have obtained 1st place of COCO 2017 detection challenge.

我们提供了一个large mini-batch size检测器,可以在更短的时间内实现更高的精度。 这是显着的,因为我们的研究周期大大加快了。 因此,我们获得了COCO 2017检测挑战的第一名。

Figure 2: Example images with positive and negative proposals. (a-b) two examples with imbalanced ratio, (c-d) two examples with moderate balanced ratio. Note that we subsampled the negative proposals for visualization.

图2:带有positive和negative提议的示例图像。 (a-b)不平衡比率的两个例子,(c-d)具有中等均衡比例的两个例子。 请注意,我们对可视化的negative提议进行了二次抽样。

Figure 3: Implementation of Cross-GPU Batch Normalization. The gray ellipse depicts the synchronization over devices, while the rounded boxes represents paralleled computation of multiple devices.

图3:Cross-GPU Batch Normalization的实现。 灰色椭圆表示通过设备的同步,而圆角框表示多个设备的并行计算。