Translation of "R-FCN: Object Detection via Region-based Fully Convolutional Networks"

R-FCN: Object Detection via Region-based Fully Convolutional Networks

- Abstract

- 1 Introduction

- 2 Our approach

- 2.1 Overview

- 2.2 Backbone architecture

- 2.3 Position-sensitive score maps & Position-sensitive RoI pooling

- 2.4 Training

- 2.5 Inference

- 2.6 À trous and stride

- 2.7 Visualization

- 3 Related Work

- 4 Experiments

- 4.1 Experiments on PASCAL VOC

- 4.2 Experiments on MS COCO

- 5 Conclusion and Future Work

- References

Original paper: https://arxiv.org/pdf/1605.06409.pdf

Abstract

We present region-based, fully convolutional networks for accurate and efficient object detection. In contrast to previous region-based detectors such as Fast/Faster R-CNN [6, 18] that apply a costly per-region subnetwork hundreds of times, our region-based detector is fully convolutional with almost all computation shared on the entire image. To achieve this goal, we propose position-sensitive score maps to address a dilemma between translation-invariance in image classification and translation-variance in object detection. Our method can thus naturally adopt fully convolutional image classifier backbones, such as the latest Residual Networks (ResNets) [9], for object detection. We show competitive results on the PASCAL VOC datasets (e.g., 83.6% mAP on the 2007 set) with the 101-layer ResNet. Meanwhile, our result is achieved at a test-time speed of 170ms per image, 2.5-20× faster than the Faster R-CNN counterpart. Code is made publicly available at: r-fcn-github

1 Introduction

A prevalent family [8, 6, 18] of deep networks for object detection can be divided into two subnetworks by the Region-of-Interest (RoI) pooling layer [6]: (i) a shared, “fully convolutional” subnetwork independent of RoIs, and (ii) an RoI-wise subnetwork that does not share computation. This decomposition [8] historically resulted from the pioneering classification architectures, such as AlexNet [10] and VGG Nets [23], that consist of two subnetworks by design: a convolutional subnetwork ending with a spatial pooling layer, followed by several fully-connected (fc) layers. Thus the (last) spatial pooling layer in image classification networks is naturally turned into the RoI pooling layer in object detection networks [8, 6, 18].

But recent state-of-the-art image classification networks such as Residual Nets (ResNets) [9] and GoogLeNets [24, 26] are by design fully convolutional (only the last layer is fully-connected, and it is removed and replaced when fine-tuning for object detection). By analogy, it appears natural to use all convolutional layers to construct the shared, convolutional subnetwork in the object detection architecture, leaving the RoI-wise subnetwork no hidden layer. However, as empirically investigated in this work, this naïve solution turns out to have considerably inferior detection accuracy that does not match the network’s superior classification accuracy. To remedy this issue, in the ResNet paper [9] the RoI pooling layer of the Faster R-CNN detector [18] is unnaturally inserted between two sets of convolutional layers; this creates a deeper RoI-wise subnetwork that improves accuracy, at the cost of lower speed due to the unshared per-RoI computation.

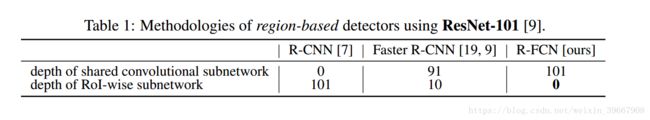

We argue that the aforementioned unnatural design is caused by a dilemma of increasing translation invariance for image classification vs. respecting translation variance for object detection. On one hand, the image-level classification task favors translation invariance: a shift of an object inside an image should be indiscriminative. Thus, deep (fully) convolutional architectures that are as translation-invariant as possible are preferable, as evidenced by the leading results on ImageNet classification [9, 24, 26]. On the other hand, the object detection task needs localization representations that are translation-variant to an extent. For example, translation of an object inside a candidate box should produce meaningful responses for describing how well the candidate box overlaps the object. We hypothesize that deeper convolutional layers in an image classification network are less sensitive to translation. To address this dilemma, the ResNet paper’s detection pipeline [9] inserts the RoI pooling layer into convolutions; this region-specific operation breaks down translation invariance, and the post-RoI convolutional layers are no longer translation-invariant when evaluated across different regions. However, this design sacrifices training and testing efficiency since it introduces a considerable number of region-wise layers (Table 1).

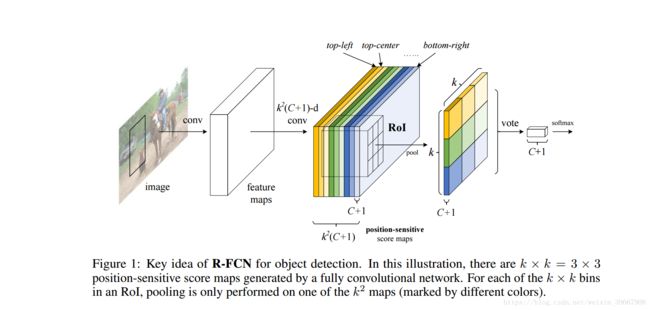

In this paper, we develop a framework called Region-based Fully Convolutional Network (R-FCN) for object detection. Our network consists of shared, fully convolutional architectures as is the case of FCN [15]. To incorporate translation variance into FCN, we construct a set of position-sensitive score maps by using a bank of specialized convolutional layers as the FCN output. Each of these score maps encodes the position information with respect to a relative spatial position (e.g., “to the left of an object”). On top of this FCN, we append a position-sensitive RoI pooling layer that shepherds information from these score maps, with no weight (convolutional/fc) layers following. The entire architecture is learned end-to-end. All learnable layers are convolutional and shared on the entire image, yet encode spatial information required for object detection. Figure 1 illustrates the key idea and Table 1 compares the methodologies among region-based detectors.

Using the 101-layer Residual Net (ResNet-101) [9] as the backbone, our R-FCN yields competitive results of 83.6% mAP on the PASCAL VOC 2007 set and 82.0% on the 2012 set. Meanwhile, our results are achieved at a test-time speed of 170ms per image using ResNet-101, which is 2.5× to 20× faster than the Faster R-CNN + ResNet-101 counterpart in [9]. These experiments demonstrate that our method manages to address the dilemma between invariance/variance on translation, and fully convolutional image-level classifiers such as ResNets can be effectively converted to fully convolutional object detectors. Code is made publicly available at: r-fcn-github.

2 Our approach

2.1 Overview

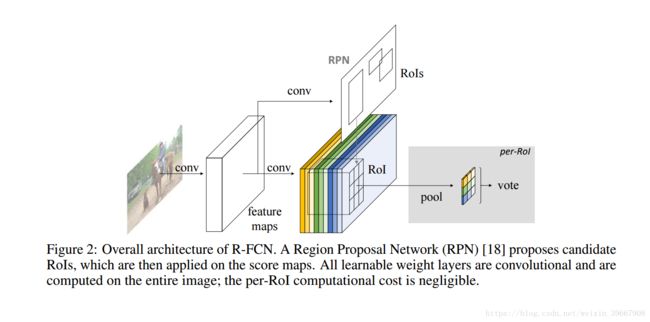

Following R-CNN [7], we adopt the popular two-stage object detection strategy [7, 8, 6, 18, 1, 22] that consists of: (i) region proposal, and (ii) region classification. Although methods that do not rely on region proposal do exist (e.g., [17, 14]), region-based systems still possess leading accuracy on several benchmarks [5, 13, 20]. We extract candidate regions by the Region Proposal Network (RPN) [18], which is a fully convolutional architecture in itself. Following [18], we share the features between RPN and R-FCN. Figure 2 shows an overview of the system.

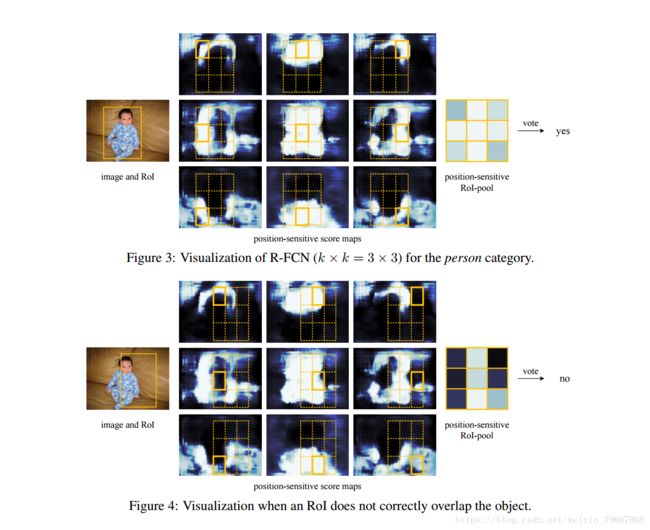

Given the proposal regions (RoIs), the R-FCN architecture is designed to classify the RoIs into object categories and background. In R-FCN, all learnable weight layers are convolutional and are computed on the entire image. The last convolutional layer produces a bank of $k^2$ position-sensitive score maps for each category, and thus has a $k^2(C + 1)$-channel output layer with $C$ object categories (+1 for background). The bank of $k^2$ score maps corresponds to a $k \times k$ spatial grid describing relative positions. For example, with $k \times k = 3 \times 3$, the 9 score maps encode the cases of {top-left, top-center, top-right, …, bottom-right} of an object category. R-FCN ends with a position-sensitive RoI pooling layer. This layer aggregates the outputs of the last convolutional layer and generates scores for each RoI. Unlike [8, 6], our position-sensitive RoI layer conducts selective pooling, and each of the $k \times k$ bins aggregates responses from only one score map out of the bank of $k \times k$ score maps. With end-to-end training, this RoI layer shepherds the last convolutional layer to learn specialized position-sensitive score maps. Figure 1 illustrates this idea. Figures 3 and 4 visualize an example. The details are introduced as follows.

2.2 Backbone architecture

The incarnation of R-FCN in this paper is based on ResNet-101 [9], though other networks [10, 23] are applicable. ResNet-101 has 100 convolutional layers followed by global average pooling and a 1000-class fc layer. We remove the average pooling layer and the fc layer and only use the convolutional layers to compute feature maps. We use the ResNet-101 released by the authors of [9], pre-trained on ImageNet [20]. The last convolutional block in ResNet-101 is 2048-d, and we attach a randomly initialized 1024-d 1×1 convolutional layer for reducing dimension (to be precise, this increases the depth in Table 1 by 1). Then we apply the $k^2(C + 1)$-channel convolutional layer to generate score maps, as introduced next.
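The channel bookkeeping described above can be checked with a few lines of arithmetic. The sketch below is only illustrative: the function name `rfcn_head_channels` and its dictionary keys are hypothetical, and it assumes the dimension-reduction layer is a plain 1×1 convolution with one bias per output channel.

```python
def rfcn_head_channels(k, num_classes, backbone_dim=2048, reduced_dim=1024):
    """Channel counts for the layers appended to the backbone (illustrative).

    k           -- grid size, so each RoI is split into k x k bins
    num_classes -- C, the number of object categories (background is added here)
    """
    # One score map per (bin, category) pair: k^2 * (C + 1) channels.
    score_map_channels = k * k * (num_classes + 1)
    # Parameters of the 1x1 reduction conv: one weight per (in, out) channel
    # pair plus one bias per output channel (the bias is an assumption).
    reduce_params = backbone_dim * reduced_dim + reduced_dim
    return {
        "score_map_channels": score_map_channels,
        "reduce_params": reduce_params,
    }


info = rfcn_head_channels(k=7, num_classes=20)  # PASCAL VOC has 20 categories
print(info["score_map_channels"])  # 7 * 7 * 21 = 1029
print(info["reduce_params"])       # 2048 * 1024 + 1024 = 2098176
```

With $k = 3$ the same call gives $9 \times 21 = 189$ score-map channels, which matches the 3 × 3 example used in the overview.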

2.3 Position-sensitive score maps & Position-sensitive RoI pooling

To explicitly encode position information into each RoI, we divide each RoI rectangle into $k \times k$ bins by a regular grid. For an RoI rectangle of a size $w \times h$, a bin is of a size $\approx \frac{w}{k} \times \frac{h}{k}$ [8, 6]. In our method, the last convolutional layer is constructed to produce $k^2$ score maps for each category. Inside the $(i, j)$-th bin ($0 \le i, j \le k - 1$), we define a position-sensitive RoI pooling operation that pools only over the $(i, j)$-th score map:

$$r_c(i, j \mid \Theta) = \sum_{(x, y) \in \text{bin}(i, j)} z_{i,j,c}(x + x_0, y + y_0 \mid \Theta) / n \qquad (1)$$

Here $r_c(i, j)$ is the pooled response in the $(i, j)$-th bin for the $c$-th category, $z_{i,j,c}$ is one score map out of the $k^2(C + 1)$ score maps, $(x_0, y_0)$ denotes the top-left corner of an RoI, $n$ is the number of pixels in the bin, and $\Theta$ denotes all learnable parameters of the network. The $(i, j)$-th bin spans $\lfloor i \frac{w}{k} \rfloor \le x < \lceil (i + 1) \frac{w}{k} \rceil$ and $\lfloor j \frac{h}{k} \rfloor \le y < \lceil (j + 1) \frac{h}{k} \rceil$. The operation of Eqn.(1) is illustrated in Figure 1, where a color represents a pair of $(i, j)$. Eqn.(1) performs average pooling (as we use throughout this paper), but max pooling can be conducted as well.
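Eqn.(1) and the bin boundaries can be written out directly. The following is a minimal pure-Python sketch, not the authors' implementation. It assumes integer RoI coordinates on the feature map, an RoI of at least one pixel in each dimension, and a hypothetical channel layout in which bin $(i, j)$ and category $c$ live in channel `(j * k + i) * (C + 1) + c`.

```python
import math


def ps_roi_pool(score_maps, roi, k, num_classes):
    """Position-sensitive RoI average pooling, following Eqn. (1).

    score_maps  -- nested lists indexed as score_maps[channel][y][x]
    roi         -- (x0, y0, w, h) in feature-map pixels (integers, w, h >= 1)
    returns     -- pooled[c][j][i]: one k x k grid of responses per category
    """
    x0, y0, w, h = roi
    n_cat = num_classes + 1  # +1 for background
    pooled = [[[0.0] * k for _ in range(k)] for _ in range(n_cat)]
    for j in range(k):
        y_lo = math.floor(j * h / k)
        y_hi = math.ceil((j + 1) * h / k)
        for i in range(k):
            x_lo = math.floor(i * w / k)
            x_hi = math.ceil((i + 1) * w / k)
            n = (x_hi - x_lo) * (y_hi - y_lo)  # number of pixels in the bin
            for c in range(n_cat):
                # Selective pooling: bin (i, j) reads only its own score map.
                z = score_maps[(j * k + i) * n_cat + c]  # assumed layout
                total = sum(z[y + y0][x + x0]
                            for y in range(y_lo, y_hi)
                            for x in range(x_lo, x_hi))
                pooled[c][j][i] = total / n
    return pooled


# Toy input: every channel is a constant map equal to its channel index,
# so each pooled value reveals which channel the bin read from.
k, C = 2, 1
maps = [[[float(ch)] * 4 for _ in range(4)] for ch in range(k * k * (C + 1))]
pooled = ps_roi_pool(maps, roi=(0, 0, 4, 4), k=k, num_classes=C)
print(pooled[1][1][0])  # bin i=0, j=1, category 1 reads channel 5
```

Because each map in the toy input is constant, the printed value is the index of the channel that the bin pooled from, which makes the selective routing easy to inspect.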

The $k^2$ position-sensitive scores then vote on the RoI. In this paper we simply vote by averaging the scores, producing a $(C + 1)$-dimensional vector for each RoI: $r_c(\Theta) = \sum_{(i,j)} r_c(i, j \mid \Theta)$. Then we compute the softmax responses across categories: $s_c(\Theta) = e^{r_c(\Theta)} / \sum_{c'=0}^{C} e^{r_{c'}(\Theta)}$. They are used for evaluating the cross-entropy loss during training and for ranking the RoIs during inference.
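The voting and softmax steps are small enough to sketch in full. This version takes the mean over the $k^2$ bins, following the "averaging" wording; that differs from the plain sum in the formula by a constant factor of $k^2$. The function name and the input layout (`pooled[c]` is a $k \times k$ grid for category $c$) are assumptions made for illustration.

```python
import math


def vote_and_softmax(pooled):
    """Average the k*k bin responses per category, then apply softmax.

    pooled  -- pooled[c][j][i], one k x k grid per category (c = 0 is background)
    returns -- (scores, probs), each a list of length C + 1
    """
    scores = []
    for grid in pooled:
        values = [v for row in grid for v in row]
        scores.append(sum(values) / len(values))  # average voting
    # Subtract the maximum before exponentiating for numerical stability;
    # this does not change the softmax result.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return scores, [e / total for e in exps]


toy = [[[0.0, 0.0], [0.0, 0.0]],   # background bins
       [[1.0, 1.0], [1.0, 1.0]]]   # one object category
scores, probs = vote_and_softmax(toy)
print(scores)      # [0.0, 1.0]
print(sum(probs))  # probabilities sum to 1 (up to rounding)
```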

We further address bounding box regression [7, 6] in a similar way. Aside from the above $k^2(C + 1)$-d convolutional layer, we append a sibling $4k^2$-d convolutional layer for bounding box regression. The position-sensitive RoI pooling is performed on this bank of $4k^2$ maps, producing a $4k^2$-d vector for each RoI. Then it is aggregated into a 4-d vector by average voting. This 4-d vector parameterizes a bounding box as $t = (t_x, t_y, t_w, t_h)$ following the parameterization in [6]. We note that we perform class-agnostic bounding box regression for simplicity, but the class-specific counterpart (i.e., with a $4k^2C$-d output layer) is applicable.
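To make the 4-d vector concrete, the sketch below applies $t = (t_x, t_y, t_w, t_h)$ to a proposal box. The text defers to [6] for the parameterization, so the form used here (center shifts scaled by the proposal size, log-space width and height) is an assumption based on the usual R-CNN-style convention. The helper name `decode_box` is hypothetical, and real implementations differ in pixel conventions such as a +1 on widths.

```python
import math


def decode_box(proposal, t):
    """Apply regression offsets t = (tx, ty, tw, th) to a proposal box.

    proposal -- (x1, y1, x2, y2) corner coordinates
    returns  -- refined box as (x1, y1, x2, y2)
    """
    x1, y1, x2, y2 = proposal
    pw, ph = x2 - x1, y2 - y1                  # proposal width and height
    px, py = x1 + 0.5 * pw, y1 + 0.5 * ph      # proposal center
    tx, ty, tw, th = t
    cx, cy = px + tx * pw, py + ty * ph        # shift center, scaled by size
    w, h = pw * math.exp(tw), ph * math.exp(th)  # rescale in log space
    return (cx - 0.5 * w, cy - 0.5 * h, cx + 0.5 * w, cy + 0.5 * h)


print(decode_box((0.0, 0.0, 10.0, 20.0), (0.0, 0.0, 0.0, 0.0)))  # unchanged box
print(decode_box((0.0, 0.0, 10.0, 20.0), (0.1, 0.0, 0.0, 0.0)))  # shifted right by 1
```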

The concept of position-sensitive score maps is partially inspired by [3] that develops FCNs for instance-level semantic segmentation. We further introduce the position-sensitive RoI pooling layer that shepherds learning of the score maps for object detection. There is no learnable layer after the RoI layer, enabling nearly cost-free region-wise computation and speeding up both training and inference.

2.4 Training

With pre-computed region proposals, it is easy to end-to-end train the R-FCN architecture. Following [6], our loss function defined on each RoI is the summation of the cross-entropy loss and the box regression loss: $L(s, t_{x,y,w,h}) = L_{cls}(s_{c^*}) + \lambda [c^* > 0] L_{reg}(t, t^*)$. Here $c^*$ is the RoI’s ground-truth label ($c^* = 0$ means background). $L_{cls}(s_{c^*}) = -\log(s_{c^*})$ is the cross-entropy loss for classification, $L_{reg}$ is the bounding box regression loss as defined in [6], and $t^*$ represents the ground truth box. $[c^* > 0]$ is an indicator which equals 1 if the argument is true and 0 otherwise. We set the balance weight $\lambda = 1$ as in [6]. We define positive examples as the RoIs that have intersection-over-union (IoU) overlap with a ground-truth box of at least 0.5, and negative otherwise.
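The per-RoI loss can be sketched as follows. The text only says that $L_{reg}$ is "as defined in [6]", so the smooth L1 form below is an assumption about that definition rather than something stated here. The function names are hypothetical.

```python
import math


def smooth_l1(x):
    """Smooth L1 penalty (assumed form of L_reg per coordinate)."""
    ax = abs(x)
    return 0.5 * x * x if ax < 1.0 else ax - 0.5


def roi_loss(probs, t, c_star, t_star, lam=1.0):
    """L = L_cls(s_{c*}) + lam * [c* > 0] * L_reg(t, t*).

    probs  -- softmax outputs over C + 1 categories
    t      -- predicted (tx, ty, tw, th)
    c_star -- ground-truth label, 0 means background
    t_star -- regression target for the matched ground-truth box
    """
    cls_loss = -math.log(probs[c_star])
    # The indicator [c* > 0] switches off box regression for background RoIs.
    reg_loss = 0.0
    if c_star > 0:
        reg_loss = sum(smooth_l1(a - b) for a, b in zip(t, t_star))
    return cls_loss + lam * reg_loss


background = roi_loss([0.5, 0.5], (0, 0, 0, 0), 0, (1, 1, 1, 1))
foreground = roi_loss([0.5, 0.5], (0, 0, 0, 0), 1, (0.5, 0, 0, 2))
print(background)  # only the classification term, -log(0.5)
print(foreground)  # -log(0.5) + 0.125 + 1.5
```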

It is easy for our method to adopt online hard example mining (OHEM) [22] during training. Our negligible per-RoI computation enables nearly cost-free example mining. Assuming N proposals per image, in the forward pass, we evaluate the loss of all N proposals. Then we sort all RoIs (positive and negative) by loss and select B RoIs that have the highest loss. Backpropagation [11] is performed based on the selected examples. Because our per-RoI computation is negligible, the forward time is nearly not affected by N, in contrast to OHEM Fast R-CNN in [22] that may double training time. We provide comprehensive timing statistics in Table 3 in the next section.
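The selection step of hard example mining, as described here, amounts to a sort followed by a top-B cut. A minimal sketch, with a hypothetical function name and plain Python lists standing in for per-RoI losses:

```python
def select_hard_examples(losses, batch_size):
    """Return the indices of the batch_size RoIs with the highest loss.

    losses     -- per-RoI loss values from the forward pass (length N)
    batch_size -- B, the number of RoIs kept for backpropagation
    """
    order = sorted(range(len(losses)), key=lambda idx: losses[idx], reverse=True)
    return order[:batch_size]


losses = [0.1, 2.0, 0.5, 1.5]
print(select_hard_examples(losses, batch_size=2))  # [1, 3]
```

Only the selected indices would contribute gradients. Since the forward pass over all N RoIs is cheap in R-FCN, the sort is the only extra work.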

We use a weight decay of 0.0005 and a momentum of 0.9. By default we use single-scale training: images are resized such that the scale (shorter side of image) is 600 pixels [6, 18]. Each GPU holds 1 image and selects B = 128 RoIs for backpropagation. We train the model with 8 GPUs (so the effective mini-batch size is 8×). We fine-tune R-FCN using a learning rate of 0.001 for 20k mini-batches and 0.0001 for 10k mini-batches on VOC. To have R-FCN share features with RPN (Figure 2), we adopt the 4-step alternating training in [18], alternating between training RPN and training R-FCN. (Although joint training [18] is applicable, it is not straightforward to perform example mining jointly.)
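The VOC schedule and the single-scale resizing rule are simple arithmetic. The helper names below are hypothetical. Treating the iteration counter as zero-based, with the drop at exactly iteration 20000, is an assumption.

```python
def learning_rate(iteration, base_lr=0.001, step=20000, gamma=0.1):
    """Step schedule: base_lr for the first `step` mini-batches, then base_lr * gamma."""
    return base_lr * gamma if iteration >= step else base_lr


def resize_scale(height, width, target_short_side=600):
    """Scale factor that makes the shorter image side equal target_short_side."""
    return target_short_side / min(height, width)


print(learning_rate(0))        # 0.001
print(learning_rate(25000))    # about 0.0001
print(resize_scale(375, 500))  # a 375 x 500 image is scaled by about 1.6
```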

2.5 Inference

As illustrated in Figure 2, the feature maps shared between RPN and R-FCN are computed (on an image with a single scale of 600). Then the RPN part proposes RoIs, on which the R-FCN part evaluates category-wise scores and regresses bounding boxes. During inference we evaluate 300 RoIs as in [18] for fair comparisons. The results are post-processed by non-maximum suppression (NMS) using a threshold of 0.3 IoU [7], as standard practice.
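The NMS post-processing step can be sketched with a greedy loop. This is a generic illustration rather than the authors' code. It assumes boxes in `(x1, y1, x2, y2)` form with continuous coordinates, and that a box is suppressed when its IoU with an already kept box is strictly above the threshold.

```python
def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def nms(boxes, scores, iou_threshold=0.3):
    """Greedy NMS: visit boxes by descending score, keep those not overlapping a kept box."""
    order = sorted(range(len(boxes)), key=lambda idx: scores[idx], reverse=True)
    keep = []
    for idx in order:
        if all(iou(boxes[idx], boxes[kept]) <= iou_threshold for kept in keep):
            keep.append(idx)
    return keep


boxes = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
scores = [0.9, 0.8, 0.7]
print(nms(boxes, scores))  # [0, 2]: the second box overlaps the first too much
```

The same `iou` helper also expresses the training rule from Section 2.4, where an RoI counts as positive when its IoU with a ground-truth box is at least 0.5.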

2.6 À trous and stride

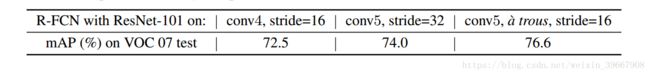

Our fully convolutional architecture enjoys the benefits of the network modifications that are widely used by FCNs for semantic segmentation [15, 2]. Particularly, we reduce ResNet-101’s effective stride from 32 pixels to 16 pixels, increasing the score map resolution. All layers before and on the conv4 stage [9] (stride=16) are unchanged; the stride=2 operation in the first conv5 block is modified to have stride=1, and all convolutional filters on the conv5 stage are modified by the “hole algorithm” [15, 2] (“Algorithme à trous” [16]) to compensate for the reduced stride. For fair comparisons, the RPN is computed on top of the conv4 stage (which is shared with R-FCN), as is the case in [9] with Faster R-CNN, so the RPN is not affected by the à trous trick. The following table shows the ablation results of R-FCN (k × k = 7 × 7, no hard example mining). The à trous trick improves mAP by 2.6 points.
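Why dilating the filters compensates for the removed stride can be seen in one dimension. The toy sketch below is a simplification that assumes the stride-2 step is a plain subsampling. It shows that a filter applied after subsampling produces the same values as a filter with holes applied at full resolution, with the dense output simply containing extra positions in between.

```python
def correlate1d(x, w, dilation=1):
    """Valid 1-D correlation; taps are spaced `dilation` samples apart."""
    span = (len(w) - 1) * dilation
    return [sum(w[t] * x[m + t * dilation] for t in range(len(w)))
            for m in range(len(x) - span)]


x = [float(v * v) for v in range(10)]  # toy 1-D "feature map"
w = [1.0, -2.0, 1.0]                   # toy 3-tap filter

# Original network: subsample by 2, then apply the filter.
strided = correlate1d(x[::2], w)
# Modified network: keep full resolution and dilate the filter by 2.
dense = correlate1d(x, w, dilation=2)

# Every second dense output equals the corresponding strided output.
print(dense[::2] == strided)     # True
print(len(strided), len(dense))  # 3 6: the dense map has twice the resolution
```

The pre-trained filter weights are reused unchanged. Only the spacing between taps changes, which is why the trick raises resolution without retraining from scratch.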

2.7 Visualization

In Figures 3 and 4 we visualize the position-sensitive score maps learned by R-FCN when $k \times k = 3 \times 3$. These specialized maps are expected to be strongly activated at a specific relative position of an object. For example, the “top-center-sensitive” score map exhibits high scores roughly near the top-center position of an object. If a candidate box precisely overlaps with a true object (Figure 3), most of the $k^2$ bins in the RoI are strongly activated, and their voting leads to a high score. On the contrary, if a candidate box does not correctly overlap with a true object (Figure 4), some of the $k^2$ bins in the RoI are not activated, and the voting score is low.

3 Related Work

R-CNN [7] has demonstrated the effectiveness of using region proposals [27, 28] with deep networks. R-CNN evaluates convolutional networks on cropped and warped regions, and computation is not shared among regions (Table 1). SPPnet [8], Fast R-CNN [6], and Faster R-CNN [18] are “semi-convolutional”, in which a convolutional subnetwork performs shared computation on the entire image and another subnetwork evaluates individual regions.

There have been object detectors that can be thought of as “fully convolutional” models. OverFeat [21] detects objects by sliding multi-scale windows on the shared convolutional feature maps; similarly, in Fast R-CNN [6] and [12], sliding windows that replace region proposals are investigated. In these cases, one can recast a sliding window of a single scale as a single convolutional layer. The RPN component in Faster R-CNN [18] is a fully convolutional detector that predicts bounding boxes with respect to reference boxes (anchors) of multiple sizes. The original RPN is class-agnostic in [18], but its class-specific counterpart is applicable (see also [14]) as we evaluate in the following.

Another family of object detectors resorts to fully-connected (fc) layers for generating holistic object detection results on an entire image, such as [25, 4, 17].

4 Experiments

4.1 Experiments on PASCAL VOC

We perform experiments on PASCAL VOC [5] that has 20 object categories. We train the models on the union set of VOC 2007 trainval and VOC 2012 trainval (“07+12”) following [6], and evaluate on VOC 2007 test set. Object detection accuracy is measured by mean Average Precision (mAP).

Comparisons with Other Fully Convolutional Strategies

Though fully convolutional detectors are available, experiments show that it is nontrivial for them to achieve good accuracy. We investigate the following fully convolutional strategies (or “almost” fully convolutional strategies that have only one classifier fc layer per RoI), using ResNet-101:

Naïve Faster R-CNN. As discussed in the introduction, one may use all convolutional layers in ResNet-101 to compute the shared feature maps, and adopt RoI pooling after the last convolutional layer (after conv5). An inexpensive 21-class fc layer is evaluated on each RoI (so this variant is “almost” fully convolutional). The à trous trick is used for fair comparisons.

Class-specific RPN. This RPN is trained following [18], except that the 2-class (object or not) convolutional classifier layer is replaced with a 21-class convolutional classifier layer. For fair comparisons, for this class-specific RPN we use ResNet-101’s conv5 layers with the à trous trick.

R-FCN without position-sensitivity. By setting k = 1 we remove the position-sensitivity of the R-FCN. This is equivalent to global pooling within each RoI.

Analysis. Table 2 shows the results. We note that the standard (not naïve) Faster R-CNN in the ResNet paper [9] achieves 76.4% mAP with ResNet-101 (see also Table 3), which inserts the RoI pooling layer between conv4 and conv5 [9]. As a comparison, the naïve Faster R-CNN (that applies RoI pooling after conv5) has a drastically lower mAP of 68.9% (Table 2). This comparison empirically justifies the importance of respecting spatial information by inserting RoI pooling between layers for the Faster R-CNN system. Similar observations are reported in [19].

The class-specific RPN has an mAP of 67.6% (Table 2), about 9 points lower than the standard Faster R-CNN’s 76.4%. This comparison is in line with the observations in [6, 12]; in fact, the class-specific RPN is similar to a special form of Fast R-CNN [6] that uses dense sliding windows as proposals, which shows inferior results as reported in [6, 12]. On the other hand, our R-FCN system has significantly better accuracy (Table 2). Its mAP (76.6%) is on par with the standard Faster R-CNN’s (76.4%, Table 3). These results indicate that our position-sensitive strategy manages to encode useful spatial information for locating objects, without using any learnable layer after RoI pooling.

The importance of position-sensitivity is further demonstrated by setting k = 1, for which R-FCN is unable to converge. In this degraded case, no spatial information can be explicitly captured within an RoI. Moreover, we report that naïve Faster R-CNN is able to converge if its RoI pooling output resolution is 1 × 1, but the mAP further drops by a large margin to 61.7% (Table 2).

Comparisons with Faster R-CNN Using ResNet-101

Next we compare with standard “Faster R-CNN + ResNet-101” [9] which is the strongest competitor and the top-performer on the PASCAL VOC, MS COCO, and ImageNet benchmarks. We use k × k = 7 × 7 in the following. Table 3 shows the comparisons. Faster R-CNN evaluates a 10-layer subnetwork for each region to achieve good accuracy, but R-FCN has negligible per-region cost. With 300 RoIs at test time, Faster R-CNN takes 0.42s per image, 2.5× slower than our R-FCN that takes 0.17s per image (on a K40 GPU; this number is 0.11s on a Titan X GPU). R-FCN also trains faster than Faster R-CNN. Moreover, hard example mining [22] adds no cost to R-FCN training (Table 3). It is feasible to train R-FCN when mining from 2000 RoIs, in which case Faster R-CNN is 6× slower (2.9s vs. 0.46s). But experiments show that mining from a larger set of candidates (e.g., 2000) has no benefit (Table 3). So we use 300 RoIs for both training and inference in other parts of this paper.

Table 4 shows more comparisons. Following the multi-scale training in [8], we resize the image in each training iteration such that the scale is randomly sampled from {400,500,600,700,800} pixels. We still test a single scale of 600 pixels, so this adds no test-time cost. The mAP is 80.5%. In addition, we train our model on the MS COCO [13] trainval set and then fine-tune it on the PASCAL VOC set. R-FCN achieves 83.6% mAP (Table 4), close to the “Faster R-CNN +++” system in [9] that uses ResNet-101 as well. We note that our competitive result is obtained at a test speed of 0.17 seconds per image, 20× faster than Faster R-CNN +++ that takes 3.36 seconds as it further incorporates iterative box regression, context, and multi-scale testing [9]. These comparisons are also observed on the PASCAL VOC 2012 test set (Table 5).
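The speed-up factors quoted in this subsection follow directly from the per-image timings given in the text. A small check, where the dictionary labels are descriptive names chosen here and only the numbers come from the text:

```python
def speedup(baseline_seconds, ours_seconds):
    """How many times faster `ours` is than `baseline`."""
    return baseline_seconds / ours_seconds


# (baseline, R-FCN) seconds per image, taken from the paragraphs above.
timings = {
    "test, 300 RoIs, Faster R-CNN vs. R-FCN": (0.42, 0.17),
    "train with OHEM from 2000 RoIs": (2.9, 0.46),
    "test, Faster R-CNN +++ vs. R-FCN": (3.36, 0.17),
}
for name, (baseline, ours) in timings.items():
    print(f"{name}: {speedup(baseline, ours):.1f}x")
```

The ratios come out at roughly 2.5, 6.3, and 19.8, consistent with the rounded 2.5×, 6×, and 20× figures in the text.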

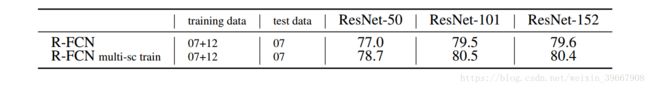

On the Impact of Depth

The following table shows the R-FCN results using ResNets of different depth [9]. Our detection accuracy increases when the depth is increased from 50 to 101, but gets saturated with a depth of 152.

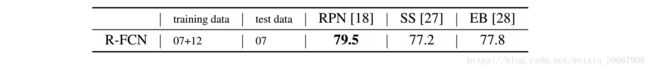

On the Impact of Region Proposals

R-FCN can be easily applied with other region proposal methods, such as Selective Search (SS) [27] and Edge Boxes (EB) [28]. The following table shows the results (using ResNet-101) with different proposals. R-FCN performs competitively using SS or EB, showing the generality of our method.

4.2 Experiments on MS COCO

Next we evaluate on the MS COCO dataset [13] that has 80 object categories. Our experiments involve the 80k train set, 40k val set, and 20k test-dev set. We set the learning rate as 0.001 for 90k iterations and 0.0001 for next 30k iterations, with an effective mini-batch size of 8. We extend the alternating training [18] from 4-step to 5-step (i.e., stopping after one more RPN training step), which slightly improves accuracy on this dataset when the features are shared; we also report that 2-step training is sufficient to achieve comparably good accuracy but the features are not shared.

The results are in Table 6. Our single-scale trained R-FCN baseline has a val result of 48.9%/27.6%. This is comparable to the Faster R-CNN baseline (48.4%/27.2%), but ours is 2.5× faster at test time.

It is noteworthy that our method performs better on objects of small sizes (defined by [13]). Our multi-scale trained (yet single-scale tested) R-FCN has a result of 49.1%/27.8% on the val set and 51.5%/29.2% on the test-dev set. Considering COCO’s wide range of object scales, we further evaluate a multi-scale testing variant following [9], and use testing scales of {200,400,600,800,1000}. The mAP is 53.2%/31.5%. This result is close to the 1st-place result (Faster R-CNN +++ with ResNet-101, 55.7%/34.9%) in the MS COCO 2015 competition. Nevertheless, our method is simpler and adds no bells and whistles such as context or iterative box regression that were used by [9], and is faster for both training and testing.

5 Conclusion and Future Work

We presented Region-based Fully Convolutional Networks, a simple but accurate and efficient framework for object detection. Our system naturally adopts the state-of-the-art image classification backbones, such as ResNets, that are by design fully convolutional. Our method achieves accuracy competitive with the Faster R-CNN counterpart, but is much faster during both training and inference.

We intentionally keep the R-FCN system presented in the paper simple. There have been a series of orthogonal extensions of FCNs that were developed for semantic segmentation (e.g., see [2]), as well as extensions of region-based methods for object detection (e.g., see [9, 1, 22]). We expect our system will easily enjoy the benefits of the progress in the field.

References

[1] S. Bell, C. L. Zitnick, K. Bala, and R. Girshick. Inside-outside net: Detecting objects in context with skip pooling and recurrent neural networks. In CVPR, 2016.

[2] L.-C. Chen, G. Papandreou, I. Kokkinos, K. Murphy, and A. L. Yuille. Semantic image segmentation with deep convolutional nets and fully connected crfs. In ICLR, 2015.

[3] J. Dai, K. He, Y. Li, S. Ren, and J. Sun. Instance-sensitive fully convolutional networks. arXiv:1603.08678, 2016.

[4] D. Erhan, C. Szegedy, A. Toshev, and D. Anguelov. Scalable object detection using deep neural networks. In CVPR, 2014.

[5] M. Everingham, L. Van Gool, C. K. Williams, J. Winn, and A. Zisserman. The PASCAL Visual Object Classes (VOC) Challenge. IJCV, 2010.

[6] R. Girshick. Fast R-CNN. In ICCV, 2015.

[7] R. Girshick, J. Donahue, T. Darrell, and J. Malik. Rich feature hierarchies for accurate object detection and semantic segmentation. In CVPR, 2014.

[8] K. He, X. Zhang, S. Ren, and J. Sun. Spatial pyramid pooling in deep convolutional networks for visual recognition. In ECCV, 2014.

[9] K. He, X. Zhang, S. Ren, and J. Sun. Deep residual learning for image recognition. In CVPR, 2016.

[10] A. Krizhevsky, I. Sutskever, and G. Hinton. Imagenet classification with deep convolutional neural networks. In NIPS, 2012.

[11] Y. LeCun, B. Boser, J. S. Denker, D. Henderson, R. E. Howard, W. Hubbard, and L. D. Jackel. Backpropagation applied to handwritten zip code recognition. Neural computation, 1989.

[12] K. Lenc and A. Vedaldi. R-CNN minus R. In BMVC, 2015.

[13] T.-Y. Lin, M. Maire, S. Belongie, J. Hays, P. Perona, D. Ramanan, P. Dollár, and C. L. Zitnick. Microsoft COCO: Common objects in context. In ECCV, 2014.

[14] W. Liu, D. Anguelov, D. Erhan, C. Szegedy, and S. Reed. SSD: Single shot multibox detector. arXiv:1512.02325v2, 2015.

[15] J. Long, E. Shelhamer, and T. Darrell. Fully convolutional networks for semantic segmentation. In CVPR, 2015.

[16] S. Mallat. A wavelet tour of signal processing. Academic press, 1999.

[17] J. Redmon, S. Divvala, R. Girshick, and A. Farhadi. You only look once: Unified, real-time object detection. In CVPR, 2016.

[18] S. Ren, K. He, R. Girshick, and J. Sun. Faster R-CNN: Towards real-time object detection with region proposal networks. In NIPS, 2015.

[19] S. Ren, K. He, R. Girshick, X. Zhang, and J. Sun. Object detection networks on convolutional feature maps. arXiv:1504.06066, 2015.

[20] O. Russakovsky, J. Deng, H. Su, J. Krause, S. Satheesh, S. Ma, Z. Huang, A. Karpathy, A. Khosla, M. Bernstein, A. C. Berg, and L. Fei-Fei. ImageNet Large Scale Visual Recognition Challenge. IJCV, 2015.

[21] P. Sermanet, D. Eigen, X. Zhang, M. Mathieu, R. Fergus, and Y. LeCun. Overfeat: Integrated recognition, localization and detection using convolutional networks. In ICLR, 2014.

[22] A. Shrivastava, A. Gupta, and R. Girshick. Training region-based object detectors with online hard example mining. In CVPR, 2016.

[23] K. Simonyan and A. Zisserman. Very deep convolutional networks for large-scale image recognition. In ICLR, 2015.

[24] C. Szegedy, W. Liu, Y. Jia, P. Sermanet, S. Reed, D. Anguelov, D. Erhan, and A. Rabinovich. Going deeper with convolutions. In CVPR, 2015.

[25] C. Szegedy, A. Toshev, and D. Erhan. Deep neural networks for object detection. In NIPS, 2013.

[26] C. Szegedy, V. Vanhoucke, S. Ioffe, J. Shlens, and Z. Wojna. Rethinking the inception architecture for computer vision. In CVPR, 2016.

[27] J. R. Uijlings, K. E. van de Sande, T. Gevers, and A. W. Smeulders. Selective search for object recognition. IJCV, 2013.

[28] C. L. Zitnick and P. Dollár. Edge boxes: Locating object proposals from edges. In ECCV, 2014.