兴趣探测的多样性解决方案

问题背景

还是继续前文的问题,给用户展示新闻的时候,除了保证兴趣满足度之外,还要保证用户兴趣发散探测,不至于兴趣太收敛。我们上文讨论到了用地雷克雷分布==》再到用F(user, tag, var)=ctr的方式,虽然弥补了单个用户重依赖历史行为的倾向,用到全局信息/点击/展现信息,但是仍然遗漏了重要的屏信息。现在,有更优的整屏多样性的解决方法可供我们选择,DPP(Determinantal Point Process),是对一屏的数据选择时做多样性探测,很好地平衡了相关性和多样性之间的关系。

DPP基础

DPP有个特性,可以找到集合,该集合能够满足最大多样性条件下的概率最大化【5】,如此来对点间斥力建模。

假设有离散集 z = 1 , 2 , 3... M z={1,2,3...M} z=1,2,3...M,其任意子集 Y ⊆ Z Y \subseteq Z Y⊆Z,则必然存在一个矩阵 L ∈ R M × M L \in R^{M \times M} L∈RM×M,该子集的概率 P ( Y ) ∝ d e t ( L Y ) P(Y) \propto det(L_Y) P(Y)∝det(LY), L L L是实数半正定矩阵被 Z Z Z的元素索引成的,。 Y m a x = a r g m a x Y ⊆ Z d e t ( L Y ) Y_{max}=argmax_{Y \subseteq Z} det(L_Y) Ymax=argmaxY⊆Zdet(LY)

在k-DPP里, P ( Y ) P(Y) P(Y)的分母系数是特征向量的因子。

速度优化

1)贪婪解的采样优化。

略,详见参考1=>2=3,计算复杂度由 O ( M 4 ) ⟶ O ( M 3 ) ⟶ O ( N 2 M ) ∣ e i g e n d e c o m p o s i t i o n L O(M^4) \longrightarrow O(M^3) \longrightarrow O(N^2M)|_{eigendecompositionL} O(M4)⟶O(M3)⟶O(N2M)∣eigendecompositionL

2)贪婪解的非采样式优化。

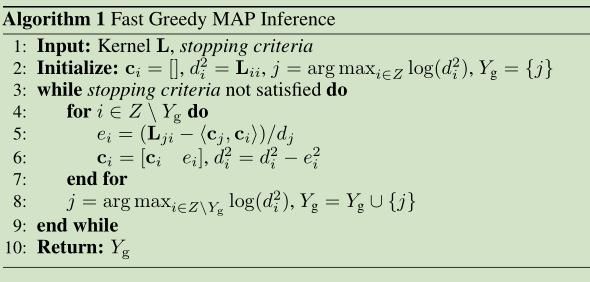

Colesky factor优化迭代步骤。参考1,也是本文关注的思路。

在每轮迭代中,找 j j j的过程如下 j = a r g m a x i ∈ Z ∖ Y g l o g d e t ( L Y g ∪ { i } ) − l o g d e t ( L Y g ) j=argmax_{i \in Z \setminus Y_g} log det(L_{Y_g \cup \{i\}}) - log det(L_{Y_g}) j=argmaxi∈Z∖Yglogdet(LYg∪{i})−logdet(LYg)

当 L L L是正定的,其主子式也是正定的,假设 d e t ( L Y g ) > 0 det(L_{Y_g})>0 det(LYg)>0,其Cholesky分解 L = V V T L=VV^T L=VVT, V V V是可逆下三角矩阵 L Y g ∖ { i } = [ L Y g L Y g , i L i , Y g L i i ] = [ V 0 c i d i ] [ V 0 c i d i ] T L_{Y_g \setminus \{i\} } = \begin{bmatrix} L_{Y_g} & L_{Y_g,i} \\ L_{i,Y_g} & L_{ii} \end{bmatrix} = \begin{bmatrix} V & 0 \\ c_i & d_i \end{bmatrix} \begin{bmatrix} V & 0 \\ c_i & d_i \end{bmatrix}^T LYg∖{i}=[LYgLi,YgLYg,iLii]=[Vci0di][Vci0di]T

其满足条件 ① V c i T = L Y g , i ① Vc_i^T=L_{Y_g, i} ①VciT=LYg,i ② d i 2 = L i i − ∣ ∣ c i ∣ ∣ 2 2 ② d_i^2 = L_{ii}-||c_i||_2^2 ②di2=Lii−∣∣ci∣∣22 ③ d i ≥ 0 ; ④ c i ③ d_i \geq 0; ④c_i ③di≥0;④ci是行向量。

将上式带入得到 d e t ( L Y g ∪ { i } ) = d e t ( V V T ) × d i 2 = d e t ( L Y g ) × d i 2 ⟶ j = a r g m a x i ∈ Z ∖ Y g l o g ( d i 2 ) det(L_{Y_g \cup \{i\} }) =det(VV^T) \times d_i^2 = det(L_{Y_g}) \times d_i^2 \longrightarrow j = argmax_{i \in Z \setminus Y_g} log(d_i^2) det(LYg∪{i})=det(VVT)×di2=det(LYg)×di2⟶j=argmaxi∈Z∖Yglog(di2)

于是查找下一个最优符合条件的的问题就简化了,而我们继续将当前最优解带回Cholesky分解约束条件里,在下一轮查找最优解 c i ′ c_i' ci′和 d i ′ {d_i'} di′时 i ′ ∈ Z ∖ { Y g ∪ j } i' \in Z \setminus \{Y_g \cup j\} i′∈Z∖{Yg∪j}, [ V 0 c j d j ] c i ′ T = [ L Y g , i L j i ] = L Y g ∪ { j } , i \begin{bmatrix} V & 0 \\ c_j & d_j \end{bmatrix} c_i'^T =\begin{bmatrix} L_{Y_g,i} & \\ L_ji & \end{bmatrix}= L_{Y_g \cup \{j\}, i} [Vcj0dj]ci′T=[LYg,iLji]=LYg∪{j},i,得到 c i ′ = [ c i , ( L j i − ⟨ c j , c i ⟩ ) / d j ] = [ c i , e i ] ; c_i'=[c_i , (L_{ji}-\left \langle c_j,c_i \right \rangle)/d_j] = [c_i, e_i]; ci′=[ci,(Lji−⟨cj,ci⟩)/dj]=[ci,ei]; d i ′ 2 = L i i − ∣ ∣ c i ′ ∣ ∣ 2 2 = L i i − ∣ ∣ c i ∣ ∣ 2 2 − e i 2 = d i 2 − e i 2 d_i'^2=L_{ii}-||c_i'||_2^2=L_{ii}-||c_i||_2^2-e_i^2=d_i^2-e_i^2 di′2=Lii−∣∣ci′∣∣22=Lii−∣∣ci∣∣22−ei2=di2−ei2

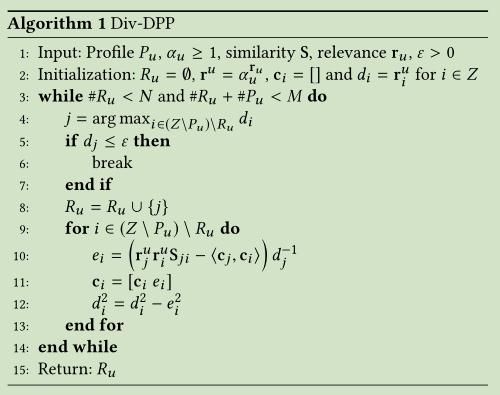

在实际业务中,候选集核矩阵 L m , n = r m × r n × S m , n = r m × r n × 1 + ⟨ E m , E n ⟩ 2 = R u × S R u = D i a g ( r u ) × S × D i a g ( r u ) L_{m,n}=r_m \times r_n \times S_{m,n}=r_m \times r_n \times \frac{1+\left \langle E_m, E_n \right \rangle}{2}=R_u \times S_{R_u}=Diag(r_u) \times S \times Diag(r_u) Lm,n=rm×rn×Sm,n=rm×rn×21+⟨Em,En⟩=Ru×SRu=Diag(ru)×S×Diag(ru),后验概率 l o g P ( R u ) ∝ l o g d e t ( L R u ) = ∑ i ∈ R u l o g ( r u , i 2 ) ⏟ l i k e − s c o r e + l o g d e t ( S R u ) ⏟ d i v e r s i t y − s c o r e log P(R_u) \propto log det(L_{R_u})= \underset{like-score}{\underbrace{\sum^{i \in R_u} log(r_{u,i}^2)}} + \underset{diversity-score}{\underbrace{log det(S_{R_u})}} logP(Ru)∝logdet(LRu)=like−score ∑i∈Rulog(ru,i2)+diversity−score logdet(SRu)

业务相关

优化1:组合超参 θ \theta θ

l o g P ( R u ) ∝ θ ∑ i ∈ R u l o g ( r u , i 2 ) + ( 1 − θ ) l o g d e t ( S R u ) log P(R_u) \propto \theta \sum_{i \in R_u} log(r_{u,i}^2) + (1-\theta)log det(S_{R_u}) logP(Ru)∝θ∑i∈Rulog(ru,i2)+(1−θ)logdet(SRu)

这种超参调整,是核矩阵 L → L ′ = D i a g ( e α r u ) × S × D i a g ( e α r u ) L \rightarrow L'=Diag(e^{\alpha r_u}) \times S \times Diag(e^{\alpha r_u}) L→L′=Diag(eαru)×S×Diag(eαru),其中 α = θ 2 ( 1 − θ ) \alpha=\frac{\theta}{2(1-\theta)} α=2(1−θ)θ

这样更新逻辑就变成了

优化2:多样超参 α \alpha α

l o g P ( R u ) ∝ ∑ i ∈ R u l o g ( r u , i 2 ) + α l o g d e t ( S R u ) log P(R_u) \propto \sum_{i \in R_u} log(r_{u,i}^2) + \alpha log det(S_{R_u}) logP(Ru)∝∑i∈Rulog(ru,i2)+αlogdet(SRu)

这种超参调整,是将Sim矩阵变为 S → α × S − ( α − 1 ) D i a g ( 1 ) S\rightarrow \alpha \times S -(\alpha-1)Diag(1) S→α×S−(α−1)Diag(1),对left-down对角线外乘以权重 α \alpha α得到:

L m , n = D i a g ( r u ) × ( α × S − ( α − 1 ) D i a g ( 1 ) ) × D i a g ( r u ) L_{m,n}=Diag(r_u) \times (\alpha \times S -(\alpha-1)Diag(1)) \times Diag(r_u) Lm,n=Diag(ru)×(α×S−(α−1)Diag(1))×Diag(ru)

= α D i a g ( r u ) × S × D i a g ( r u ) + ( 1 − α ) D i a g ( r u ) × D i a g ( 1 ) × D i a g ( r u ) =\alpha Diag(r_u) \times S \times Diag(r_u) +(1-\alpha)Diag(r_u) \times Diag(1) \times Diag(r_u) =αDiag(ru)×S×Diag(ru)+(1−α)Diag(ru)×Diag(1)×Diag(ru)

l o g P ( R u ) ∝ l o g d e t L m , n → log P(R_u) \propto logdet L_{m,n} \rightarrow logP(Ru)∝logdetLm,n→

= l o g d e t [ α D i a g ( r u ) × S × D i a g ( r u ) + ( 1 − α ) D i a g ( r u ) × D i a g ( 1 ) × D i a g ( r u ) ] = log det \left [ \alpha Diag(r_u) \times S \times Diag(r_u) +(1-\alpha)Diag(r_u) \times Diag(1) \times Diag(r_u) \right ] =logdet[αDiag(ru)×S×Diag(ru)+(1−α)Diag(ru)×Diag(1)×Diag(ru)]

= α ∑ i ∈ R u l o g ( r u , i 2 ) + α l o g d e t ( S R u ) + ( 1 − α ) ∑ i ∈ R u l o g ( r u , i 2 ) =\alpha \sum_{i \in R_u} log (r_{u,i}^2) + \alpha logdet(S_{R_u}) +(1-\alpha) \sum_{i\in R_u}log (r_{u,i}^2) =α∑i∈Rulog(ru,i2)+αlogdet(SRu)+(1−α)∑i∈Rulog(ru,i2)

= ∑ i ∈ R u l o g ( r u , i 2 ) + α l o g d e t ( S R u ) =\sum_{i \in R_u} log (r_{u,i}^2) +\alpha logdet(S_{R_u}) =∑i∈Rulog(ru,i2)+αlogdet(SRu)

此方式,不用改变求解方式,即可实现多样性权重的调整。

若是对rank矩阵做调权呢?比如 r i r j × ( 1 + β ) r_i r_j \times (1+\beta) rirj×(1+β),又或者是 ( r i + β ) × ( r j + β ) (r_i+\beta) \times (r_j+\beta) (ri+β)×(rj+β)如此调整呢?

优化3:多约束下融合

如果有多种约束矩阵呢?多个sim矩阵下,怎么融合到一起呢。

在求解argmax时,用超参叠加起来,且有一个作为主要的因子返回多个结果,用其他的作为调权,找本轮的最优值。

为什么不直接将多个sim矩阵用超参叠加到一起呢,容易各种bad-case。

notice

只是对当前展示屏做了多样性控制,而没有更多地考虑之前屏的多样性;并且在如何打破用户兴趣的层面,是没法解决的。现在感觉起来,强化学习才是未来啊,既能够克服多样性的问题,又能够获得最大化未来收益。

demo

DPP-code-demo

Reference

- G. L. Nemhauser, L. A. Wolsey, and M. L. Fisher. An analysis of approximations for maximizing submodular set functions–I. Mathematical Programming, 14(1):265–294, 1978. 贪婪解的出处O(M^4)

- I. Han, P. Kambadur, K. Park, and J. Shin. Faster greedy MAP inference for determinantal point processes. In Proceedings of ICML 2017, pages 1384–1393, 2017. 速度优化O(M3)

- J. Gillenwater. Approximate inference for determinantal point processes. University of Pennsyl-vania, 2014. 条件下O(N^2M)

- Chen L, Zhang G, Zhou E. Fast greedy map inference for determinantal point process to improve recommendation diversity[C]//Advances in Neural Information Processing Systems. 2018: 5622-5633.

- A. Kulesza and B. Taskar. Determinantal point processes for machine learning. Foundations and Trends R ? in Machine Learning, 5(2–3):123–286, 2012.

- Improving the Diversity of Top-N Recommendation via Determinantal Point Process