Parsing Audio and Video Frames from RTP

- Parsing H264 and AAC payloads from RTP
  - Parsing H264
  - Parsing AAC
- Building the ADTS header for AAC
  - CADTS.h
  - CADTS.cpp
- Lessons learned

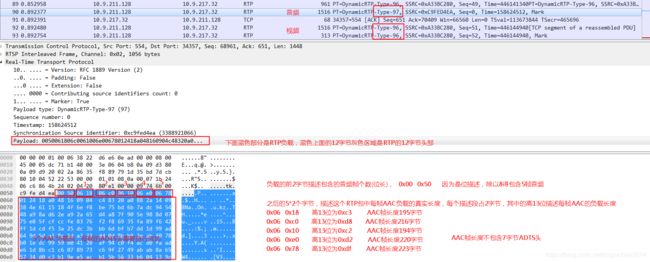

- Appendix: audio packet capture analysis

Parsing H264 and AAC payloads from RTP

RTSP carries audio and video over RTP. This article records how H264 and AAC data are extracted from RTP packets.

For an introduction to the protocols, see https://blog.csdn.net/lostyears/article/details/51374997

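After receiving an RTP packet, first strip the RTP header. Then process the payload as described below. In the common case the header is the fixed 12 bytes. It is longer when the CC field lists CSRC identifiers (4 bytes each) or when the X bit signals a header extension. When the P bit is set, the last payload byte gives the number of padding bytes to drop.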
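Below is a minimal sketch of locating the payload inside a complete RTP packet. It follows the RFC 3550 header layout: version in the top 2 bits of byte 0, P bit 0x20, X bit 0x10, and CC in the low 4 bits. `RtpPayload` and `RtpPayloadBounds` are hypothetical names and are not part of the original code.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Hypothetical result type: where the payload sits inside one RTP packet.
struct RtpPayload {
    std::size_t offset;  // index of the first payload byte
    std::size_t length;  // payload bytes, padding excluded
};

// Hypothetical helper; returns false if the packet is malformed.
bool RtpPayloadBounds(const std::uint8_t* pkt, std::size_t len, RtpPayload& out)
{
    assert(pkt != nullptr || len == 0);
    if (len < 12) return false;                  // fixed header is 12 bytes
    const std::uint8_t b0 = pkt[0];
    if ((b0 >> 6) != 2) return false;            // RTP version must be 2
    std::size_t hdr = 12 + 4u * (b0 & 0x0F);     // plus 4 bytes per CSRC (CC field)
    if (len < hdr) return false;
    if (b0 & 0x10) {                             // X bit: header extension present
        if (len < hdr + 4) return false;
        // extension length counts 32-bit words after the 4-byte extension header
        const std::size_t extWords = (static_cast<std::size_t>(pkt[hdr + 2]) << 8) | pkt[hdr + 3];
        hdr += 4 + 4 * extWords;
        if (len < hdr) return false;
    }
    std::size_t padding = 0;
    if (b0 & 0x20) {                             // P bit: last byte = number of padding bytes
        padding = pkt[len - 1];
        if (padding == 0 || hdr + padding > len) return false;
    }
    out.offset = hdr;
    out.length = len - hdr - padding;
    return true;
}
```

The payload passed to the parsing functions in this article would then be `pkt + out.offset` with length `out.length`.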

Parsing H264

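Small NAL units are sent one per RTP packet as single NAL unit packets. A large H264 NAL unit that does not fit into one RTP packet is split across several RTP packets, using the fragmentation units (FU-A) defined in RFC 6184.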
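In FU-A mode, each fragment starts with two bytes. The first is an FU indicator (F, NRI, type 28). The second is an FU header (S, E, R, and the original NAL unit type). The sketch below decodes those two bytes and rebuilds the original one-byte NAL header. `FuAInfo` and `DecodeFuA` are illustrative names.

```cpp
#include <cassert>
#include <cstdint>

// Illustrative decoding of the two leading bytes of an FU-A packet (RFC 6184).
struct FuAInfo {
    bool start;              // S bit: first fragment of the NAL unit
    bool end;                // E bit: last fragment
    std::uint8_t nalHeader;  // reconstructed 1-byte NAL unit header
};

// Returns false if the packet is not an FU-A packet.
bool DecodeFuA(std::uint8_t fuIndicator, std::uint8_t fuHeader, FuAInfo& info)
{
    if ((fuIndicator & 0x1F) != 28) return false;  // type 28 = FU-A
    info.start = (fuHeader & 0x80) != 0;
    info.end = (fuHeader & 0x40) != 0;
    // F and NRI (top 3 bits) come from the FU indicator,
    // the original NAL unit type (low 5 bits) from the FU header
    info.nalHeader = static_cast<std::uint8_t>((fuIndicator & 0xE0) | (fuHeader & 0x1F));
    return true;
}
```

As a worked example, take the FU indicator `0x7C` (F=0, NRI=3, type 28) and the FU header `0x85` (S=1, E=0, type 5). Together they describe the first fragment of an IDR slice, and the rebuilt NAL header is `0x65`.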

Implementation:

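```cpp
#include <cstring>  // memcpy

/*
 * @param pBufIn   RTP payload to parse (12-byte RTP header already removed)
 * @param nLenIn   payload length in bytes
 * @param pBufOut  output buffer for Annex B H264 data, allocated by the caller.
 *                 Fragments are accumulated in it across calls, so pass the
 *                 same buffer for every packet; it must hold MAX_FRAME_SISE bytes.
 * @param nLenOut  length of one complete NAL unit (with start code);
 *                 valid only when the function returns true
 *
 * m_nH264FrmeSize is a member of the enclosing class holding the number of
 * bytes reassembled so far.
 *
 * @return true    a complete NAL unit is in pBufOut
 *         false   the NAL unit is still being reassembled, or the packet type is not handled
 */
bool UnpackRtpH264(const UInt8 *pBufIn, const Int32 nLenIn, UInt8 *pBufOut, Int32& nLenOut)
{
    bool bFinished = true;
    do
    {
        nLenOut = 0;
        if (nLenIn < 2)  // too short for a NAL header plus data, or for an FU header
        {
            bFinished = false;
            break;
        }
        const Int32 eFrameType = pBufIn[0] & 0x1F;  // NAL unit type: low 5 bits of the first byte
        if (eFrameType >= 1 && eFrameType <= 23)  // single NAL unit packet
        {
            pBufOut[0] = 0x00;  // prepend the 4-byte Annex B start code
            pBufOut[1] = 0x00;
            pBufOut[2] = 0x00;
            pBufOut[3] = 0x01;
            memcpy(pBufOut + 4, pBufIn, nLenIn);
            nLenOut = nLenIn + 4;
        }
        else if (eFrameType == 28)  // FU-A: one NAL unit spread over several RTP packets
        {
            bFinished = false;
            if (pBufIn[1] & 0x80)  // S bit: first fragment
            {
                pBufOut[0] = 0x00;
                pBufOut[1] = 0x00;
                pBufOut[2] = 0x00;
                pBufOut[3] = 0x01;
                // rebuild the NAL header: top 3 bits (F, NRI) of pBufIn[0], low 5 bits (type) of pBufIn[1]
                pBufOut[4] = ((pBufIn[0] & 0xE0) | (pBufIn[1] & 0x1F));
                memcpy(pBufOut + 5, pBufIn + 2, nLenIn - 2);  // skip FU indicator and FU header
                m_nH264FrmeSize = nLenIn + 5 - 2;
            }
            else if (m_nH264FrmeSize > 0)  // middle or last fragment, start already received
            {
                Assert(m_nH264FrmeSize + nLenIn - 2 <= MAX_FRAME_SISE);
                memcpy(pBufOut + m_nH264FrmeSize, pBufIn + 2, nLenIn - 2);  // skip FU indicator and FU header
                m_nH264FrmeSize += nLenIn - 2;
                if (pBufIn[1] & 0x40)  // E bit: last fragment
                {
                    nLenOut = m_nH264FrmeSize;
                    m_nH264FrmeSize = 0;
                    bFinished = true;
                }
            }
            // otherwise the first fragment was lost: drop fragments until the next S bit
        }
        else  // STAP-A/B, MTAP, FU-B and type 0 are not handled here
        {
            bFinished = false;
        }
    } while (0);
    return bFinished;
}
```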
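The function above only handles single NAL unit packets and FU-A fragments. RFC 6184 also defines STAP-A (type 24). A STAP-A packet carries several small NAL units, often SPS and PPS, in one RTP packet. After its one-byte header, each NAL unit is preceded by a 16-bit big-endian size. If a source sends STAP-A, a split step along the lines below would be needed. `SplitStapA` is a hypothetical helper that emits Annex B data, and it is not part of the original code.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: convert one STAP-A payload (RTP header removed) into
// Annex B NAL units, each prefixed with a 00 00 00 01 start code.
// Returns false on malformed input; annexB may then hold partial output.
bool SplitStapA(const std::uint8_t* p, std::size_t len, std::vector<std::uint8_t>& annexB)
{
    static const std::uint8_t kStartCode[4] = {0x00, 0x00, 0x00, 0x01};
    assert(p != nullptr || len == 0);
    if (len < 1 || (p[0] & 0x1F) != 24) return false;  // not a STAP-A packet
    std::size_t pos = 1;                                  // skip the STAP-A NAL header
    while (pos < len) {
        if (len - pos < 2) return false;                  // truncated size field
        const std::size_t nalSize = (static_cast<std::size_t>(p[pos]) << 8) | p[pos + 1];
        pos += 2;
        if (nalSize == 0 || len - pos < nalSize) return false;  // NAL unit runs past the packet
        annexB.insert(annexB.end(), kStartCode, kStartCode + 4);
        annexB.insert(annexB.end(), p + pos, p + pos + nalSize);
        pos += nalSize;
    }
    return true;
}
```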

Parsing AAC

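Note that one RTP packet may carry several AAC frames. I first followed blog posts that assumed each RTP packet carries exactly one AAC frame after the AU header section. That worked in most scenarios. Then one input source produced no sound after parsing, and the cause turned out to be RTP packets carrying multiple AAC frames. When implementing a protocol parser, it pays to spend time studying the specification itself (here RFC 3640, the mpeg4-generic payload format). Blog posts found online may not cover every case, so code based on them can fail in some scenarios.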
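The sketch below shows the packet layout behind this pitfall. It assumes the RFC 3640 mpeg4-generic format in AAC-hbr mode (sizeLength=13, indexLength=3, indexDeltaLength=3 in the SDP fmtp line). In that mode the payload starts with a 16-bit AU-headers-length in bits. One 16-bit AU header per frame follows, and then the frames back to back. `AacAu` and `SplitAacAus` are illustrative names. The function only locates the frames and does not add ADTS headers.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// One AAC access unit (frame) inside the RTP payload.
struct AacAu {
    std::size_t offset;  // start of the raw AAC frame within the payload
    std::size_t size;    // frame size in bytes (AU-size)
};

// Illustrative splitter for an RFC 3640 AAC-hbr payload (RTP header removed),
// assuming 16-bit AU headers: 13-bit AU-size + 3-bit AU-Index(-delta).
bool SplitAacAus(const std::uint8_t* p, std::size_t len, std::vector<AacAu>& aus)
{
    assert(p != nullptr || len == 0);
    if (len < 2) return false;
    const std::size_t headersBits = (static_cast<std::size_t>(p[0]) << 8) | p[1];
    if (headersBits % 16 != 0) return false;     // must be whole 16-bit AU headers
    const std::size_t count = headersBits / 16;  // number of AU headers = number of frames
    std::size_t dataPos = 2 + 2 * count;         // frames start right after the headers
    if (dataPos > len) return false;
    for (std::size_t i = 0; i < count; ++i) {
        const std::uint16_t h = static_cast<std::uint16_t>((p[2 + 2 * i] << 8) | p[3 + 2 * i]);
        const std::size_t size = h >> 3;         // high 13 bits: AU-size in bytes
        if (len - dataPos < size) return false;  // frame runs past the packet
        aus.push_back({dataPos, size});
        dataPos += size;
    }
    return dataPos == len;                       // no auxiliary data expected after the frames
}
```

A packet holding two frames of 3 and 2 bytes starts with `00 20` (32 bits of AU headers), then `00 18` and `00 10` (sizes shifted left by 3), then the 5 frame bytes.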

Implementation:

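```cpp
#include <cstring>  // memcpy
#include <vector>

/*
 * @param pBufIn   RTP payload to parse (12-byte RTP header already removed)
 * @param nLenIn   payload length in bytes
 * @param pBufOut  output buffer for ADTS-framed AAC, allocated by the caller.
 *                 Every frame gains a 7-byte ADTS header, so it must hold at
 *                 least nLenIn - 2 + 5 * (number of AU headers) bytes.
 * @param nLenOut  total length of the ADTS frames written; valid when the
 *                 function returns true
 *
 * Note: one RTP packet may carry several AAC frames. The first 2 bytes give
 * AU-headers-length in bits; with 16-bit AU headers (sizeLength=13,
 * indexLength=3, AAC-hbr mode) dividing it by 16 gives the number of frames.
 *
 * @return true    always; AAC frames fragmented over several RTP packets are not handled
 */
bool UnpackRtpAAC(const UInt8 *pBufIn, const Int32 nLenIn, UInt8 *pBufOut, Int32& nLenOut)
{
    bool bFinished = true;
    do
    {
        nLenOut = 0;
        Int32 nAuHeaderOffset = 0;  // read offset in the AU header section, advances 2 bytes at a time
        // first 2 bytes: AU-headers-length in bits; each AU header is 16 bits,
        // so >> 4 (divide by 16) gives the number of AU headers, i.e. of AAC frames
        const UInt16 AU_HEADER_LENGTH = (((pBufIn[nAuHeaderOffset] << 8) | pBufIn[nAuHeaderOffset + 1]) >> 4);
        nAuHeaderOffset += 2;
        Assert(nLenIn > (2 + AU_HEADER_LENGTH * 2));

        std::vector<UInt32> vecAacFrameLen;  // byte size of each AAC frame
        vecAacFrameLen.reserve(AU_HEADER_LENGTH);
        for (int i = 0; i < AU_HEADER_LENGTH; ++i)
        {
            // next 2 bytes: one AU header
            const UInt16 AU_HEADER = ((pBufIn[nAuHeaderOffset] << 8) | pBufIn[nAuHeaderOffset + 1]);
            // high 13 bits: AU-size in bytes; low 3 bits: AU-Index(-delta), unused here
            UInt32 nAac = (AU_HEADER >> 3);
            vecAacFrameLen.push_back(nAac);
            nAuHeaderOffset += 2;
        }

        const UInt8 *pAacPayload = pBufIn + nAuHeaderOffset;  // the AAC frames start here
        UInt32 nAacPayloadOffset = 0;
        for (int j = 0; j < AU_HEADER_LENGTH; ++j)
        {
            const UInt32 nAac = vecAacFrameLen[j];
            Assert(nAacPayloadOffset + nAac <= (UInt32)(nLenIn - nAuHeaderOffset));
            // build the ADTS header for this frame
            SAacParam param(nAac, m_AudioInfo.nSample, m_AudioInfo.nChannel);
            CADTS adts;
            adts.Init(param);
            // write the ADTS header
            memcpy(pBufOut + nLenOut, adts.GetBuf(), adts.GetBufSize());
            nLenOut += adts.GetBufSize();
            // write the AAC payload
            memcpy(pBufOut + nLenOut, pAacPayload + nAacPayloadOffset, nAac);
            nLenOut += nAac;
            nAacPayloadOffset += nAac;
        }
        Assert((UInt32)(nLenIn - nAuHeaderOffset) == nAacPayloadOffset);
    } while (0);
    return bFinished;
}
```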
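The ADTS header built for each frame needs the sample rate and the channel count, which come from `m_AudioInfo` in the function above. For mpeg4-generic streams these values are commonly read from the `config=` parameter of the SDP `a=fmtp` line. That parameter is a hex-encoded AudioSpecificConfig whose first 13 bits are audioObjectType (5 bits), samplingFrequencyIndex (4 bits) and channelConfiguration (4 bits). The minimal sketch below assumes that layout. It does not handle the escape values (object type 31, frequency index 15). `AacConfig` and `ParseAacConfig` are hypothetical names.

```cpp
#include <cassert>
#include <cstdint>
#include <string>

struct AacConfig {
    int objectType;  // MPEG-4 audio object type, 2 = AAC LC
    int sampleRate;  // Hz
    int channels;    // channelConfiguration (1 = mono, 2 = stereo, ...)
};

// Hypothetical parser for the first 16 bits of an AudioSpecificConfig given as
// hex text, e.g. "1210" taken from "a=fmtp:96 ... config=1210".
bool ParseAacConfig(const std::string& hex, AacConfig& cfg)
{
    static const int kRates[13] = {96000, 88200, 64000, 48000, 44100, 32000, 24000,
                                   22050, 16000, 12000, 11025, 8000, 7350};
    if (hex.size() < 4) return false;
    unsigned v = 0;
    for (int i = 0; i < 4; ++i) {  // parse exactly 4 hex digits
        const char c = hex[i];
        int d;
        if (c >= '0' && c <= '9') d = c - '0';
        else if (c >= 'a' && c <= 'f') d = c - 'a' + 10;
        else if (c >= 'A' && c <= 'F') d = c - 'A' + 10;
        else return false;
        v = (v << 4) | static_cast<unsigned>(d);
    }
    const int aot = static_cast<int>((v >> 11) & 0x1F);  // 5 bits
    const int sfi = static_cast<int>((v >> 7) & 0x0F);   // 4 bits
    const int ch = static_cast<int>((v >> 3) & 0x0F);    // 4 bits
    if (aot == 31 || sfi >= 13) return false;            // escape / reserved values not handled
    cfg.objectType = aot;
    cfg.sampleRate = kRates[sfi];
    cfg.channels = ch;
    return true;
}
```

`config=1210` decodes to AAC LC, 44100 Hz, stereo. The ADTS profile field is the object type minus 1, so object type 2 corresponds to `E_AAC_PROFILE_LC`.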

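Building the ADTS header for AAC

RTP carries raw AAC frames without ADTS headers. Before the frames can be written out as an .aac stream or passed to a decoder that expects ADTS, each frame needs a 7-byte ADTS header in front of it.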

CADTS.h

```cpp
// CADTS.h
// UInt8 / UInt32 / Int32 are the project's own integer typedefs.
#pragma once

#ifndef max
#define max(a, b) (((a) > (b)) ? (a) : (b))
#endif

#ifndef min
#define min(a, b) (((a) < (b)) ? (a) : (b))
#endif

#define BYTE_NUMBIT 8   /* bits in byte (char) */
#define N_ADTS_SIZE 7   /* ADTS header size in bytes when protection_absent = 1 (no CRC) */

/*
 * AAC profile; the ADTS profile field is the MPEG-4 audio object type minus 1
 */
enum eAACProfile
{
    E_AAC_PROFILE_MAIN_PROFILE = 0,
    E_AAC_PROFILE_LC,
    E_AAC_PROFILE_SSR,
    E_AAC_PROFILE_PROFILE_RESERVED,
};

/*
 * sampling_frequency_index
 */
enum eAACSample
{
    E_AAC_SAMPLE_96000_HZ = 0,
    E_AAC_SAMPLE_88200_HZ,
    E_AAC_SAMPLE_64000_HZ,
    E_AAC_SAMPLE_48000_HZ,
    E_AAC_SAMPLE_44100_HZ,
    E_AAC_SAMPLE_32000_HZ,
    E_AAC_SAMPLE_24000_HZ,
    E_AAC_SAMPLE_22050_HZ,
    E_AAC_SAMPLE_16000_HZ,
    E_AAC_SAMPLE_12000_HZ,
    E_AAC_SAMPLE_11025_HZ,
    E_AAC_SAMPLE_8000_HZ,
    E_AAC_SAMPLE_7350_HZ,
    E_AAC_SAMPLE_RESERVED,
};

/*
 * channel_configuration
 */
enum eAACChannel
{
    E_AAC_CHANNEL_SPECIFC_CONFIG = 0,  // channel layout given in the AudioSpecificConfig instead
    E_AAC_CHANNEL_MONO,
    E_AAC_CHANNEL_STEREO,
    E_AAC_CHANNEL_TRIPLE_TRACK,        // 3 channels
    E_AAC_CHANNEL_4,
    E_AAC_CHANNEL_5,
    E_AAC_CHANNEL_6,                   // 5.1
    E_AAC_CHANNEL_8,                   // value 7: 8 channels (7.1)
    E_AAC_CHANNEL_RESERVED,
};

/*
 * ADTS ID bit
 */
enum eMpegId
{
    E_MPEG4 = 0,
    E_MPEG_2
};

struct SAacParam
{
    SAacParam(UInt32 playod, Int32 sample, Int32 channel = 1, eAACProfile profile = E_AAC_PROFILE_LC, eMpegId id = E_MPEG4)
        : eId(id), eProfile(profile), nChannel(channel), nSample(sample), nPlayod(playod)
    {
    }

    eMpegId eId;
    eAACProfile eProfile;
    Int32 nChannel;
    Int32 nSample;
    UInt32 nPlayod;  // AAC payload size in bytes (ADTS header not included)
};

class CADTS
{
public:
    CADTS();

public:
    /*
     * Fill in the ADTS header
     */
    void Init(const SAacParam& aacHead);

    /*
     * Address of the ADTS header
     */
    UInt8* GetBuf();

    /*
     * Length of the ADTS header in bytes
     */
    UInt32 GetBufSize() const;

private:
    int PutBit(UInt32 data, int numBit);
    int WriteByte(UInt32 data, int numBit);

    /*
     * Sampling frequency index
     */
    static eAACSample GetSampleIndex(const UInt32 nSample);

    /*
     * Channel configuration index
     */
    static eAACChannel GetChannelIndex(const UInt32 nChannel);

private:
    UInt8 m_pBuf[N_ADTS_SIZE];  // ADTS header bytes
    const UInt32 m_nBit;        // total number of bits
    UInt32 m_curBit;            // number of bits written so far
};
```

CADTS.cpp

```cpp
// CADTS.cpp
#include <map>
#include "CADTS.h"

CADTS::CADTS() : m_pBuf(), m_nBit(BYTE_NUMBIT * N_ADTS_SIZE), m_curBit(0)
{
}

void CADTS::Init(const SAacParam &aacHead)
{
    m_curBit = 0;  // restart at bit 0 so calling Init again rewrites the header instead of appending

    /* Fixed ADTS header */
    PutBit(0xFFF, 12);                                    // syncword: 12 bits all set
    PutBit(aacHead.eId, 1);                               // ID: 0 for MPEG-4 AAC, 1 for MPEG-2 AAC
    PutBit(0, 2);                                         // layer == 0
    PutBit(1, 1);                                         // protection_absent = 1: no CRC
    PutBit(aacHead.eProfile, 2);                          // profile
    PutBit(CADTS::GetSampleIndex(aacHead.nSample), 4);    // sampling_frequency_index
    PutBit(0, 1);                                         // private bit
    PutBit(CADTS::GetChannelIndex(aacHead.nChannel), 3);  // channel_configuration
    PutBit(0, 1);                                         // original/copy
    PutBit(0, 1);                                         // home

    /* Variable ADTS header */
    PutBit(0, 1);                                         // copyright_identification_bit
    PutBit(0, 1);                                         // copyright_identification_start
    PutBit(GetBufSize() + aacHead.nPlayod, 13);           // frame_length: ADTS header + raw AAC data
    PutBit(0x7FF, 11);                                    // buffer fullness (0x7FF for VBR)
    PutBit(0, 2);                                         // number_of_raw_data_blocks_in_frame (0 + 1 = 1)
}

UInt8 *CADTS::GetBuf()
{
    return m_pBuf;
}

UInt32 CADTS::GetBufSize() const
{
    return m_nBit / BYTE_NUMBIT;
}

/* Append the low numBit bits of data, MSB first, split at byte boundaries */
int CADTS::PutBit(UInt32 data, int numBit)
{
    int num, maxNum, curNum;
    unsigned long bits;
    if (numBit == 0)
        return 0;
    /* write bits in packets according to buffer byte boundaries */
    num = 0;
    maxNum = BYTE_NUMBIT - m_curBit % BYTE_NUMBIT;  // free bits left in the current byte
    while (num < numBit) {
        curNum = min(numBit - num, maxNum);
        bits = data >> (numBit - num - curNum);
        if (WriteByte(bits, curNum)) {
            return 1;
        }
        num += curNum;
        maxNum = BYTE_NUMBIT;
    }
    return 0;
}

/* Write the low numBit bits of data into the current byte (numBit fits in that byte) */
int CADTS::WriteByte(UInt32 data, int numBit)
{
    long numUsed, idx;
    idx = (m_curBit / BYTE_NUMBIT) % N_ADTS_SIZE;
    numUsed = m_curBit % BYTE_NUMBIT;
#ifndef DRM
    if (numUsed == 0)
        m_pBuf[idx] = 0;  // clear a byte before its first bit is written
#endif
    m_pBuf[idx] |= (data & ((1 << numBit) - 1)) << (BYTE_NUMBIT - numUsed - numBit);
    m_curBit += numBit;
    return 0;
}

eAACSample CADTS::GetSampleIndex(const UInt32 nSample)
{
    static std::map<UInt32, eAACSample> mpSample;  // filled on first call (not thread-safe)
    if (mpSample.empty())
    {
        mpSample[96000] = E_AAC_SAMPLE_96000_HZ;
        mpSample[88200] = E_AAC_SAMPLE_88200_HZ;
        mpSample[64000] = E_AAC_SAMPLE_64000_HZ;
        mpSample[48000] = E_AAC_SAMPLE_48000_HZ;
        mpSample[44100] = E_AAC_SAMPLE_44100_HZ;
        mpSample[32000] = E_AAC_SAMPLE_32000_HZ;
        mpSample[24000] = E_AAC_SAMPLE_24000_HZ;
        mpSample[22050] = E_AAC_SAMPLE_22050_HZ;
        mpSample[16000] = E_AAC_SAMPLE_16000_HZ;
        mpSample[12000] = E_AAC_SAMPLE_12000_HZ;
        mpSample[11025] = E_AAC_SAMPLE_11025_HZ;
        mpSample[8000] = E_AAC_SAMPLE_8000_HZ;
        mpSample[7350] = E_AAC_SAMPLE_7350_HZ;
    }
    std::map<UInt32, eAACSample>::const_iterator it = mpSample.find(nSample);
    return (it != mpSample.end()) ? it->second : E_AAC_SAMPLE_RESERVED;
}

eAACChannel CADTS::GetChannelIndex(const UInt32 nChannel)
{
    static std::map<UInt32, eAACChannel> mpChannel;  // filled on first call (not thread-safe)
    if (mpChannel.empty())
    {
        mpChannel[0] = E_AAC_CHANNEL_SPECIFC_CONFIG;
        mpChannel[1] = E_AAC_CHANNEL_MONO;
        mpChannel[2] = E_AAC_CHANNEL_STEREO;
        mpChannel[3] = E_AAC_CHANNEL_TRIPLE_TRACK;
        mpChannel[4] = E_AAC_CHANNEL_4;
        mpChannel[5] = E_AAC_CHANNEL_5;
        mpChannel[6] = E_AAC_CHANNEL_6;
        mpChannel[8] = E_AAC_CHANNEL_8;
    }
    std::map<UInt32, eAACChannel>::const_iterator it = mpChannel.find(nChannel);
    return (it != mpChannel.end()) ? it->second : E_AAC_CHANNEL_RESERVED;
}
```
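To cross-check the bit layout, the same 56 bits can be packed with plain shifts instead of a bit writer. The sketch below uses that alternative technique and is not the article's CADTS class. `MakeAdtsHeader` is a hypothetical function. It takes the ADTS profile field (object type minus 1), the sampling-frequency index and the channel configuration directly.

```cpp
#include <array>
#include <cassert>
#include <cstdint>

// Packs a 7-byte ADTS header: MPEG-4, layer 0, protection_absent = 1, one raw data block.
// profile: ADTS profile field (1 = LC); sfi: sampling_frequency_index (4 = 44100 Hz);
// channels: channel_configuration; payload: raw AAC frame size without the header.
std::array<std::uint8_t, 7> MakeAdtsHeader(unsigned profile, unsigned sfi,
                                           unsigned channels, unsigned payload)
{
    const unsigned frameLen = payload + 7;  // 13-bit frame_length includes the header itself
    assert(profile < 4 && sfi < 16 && channels < 8 && frameLen < (1u << 13));
    std::array<std::uint8_t, 7> h{};
    h[0] = 0xFF;  // syncword, high 8 bits
    h[1] = 0xF1;  // syncword low 4 bits, ID = 0, layer = 00, protection_absent = 1
    h[2] = static_cast<std::uint8_t>((profile << 6) | (sfi << 2) | (channels >> 2));  // private bit = 0
    h[3] = static_cast<std::uint8_t>(((channels & 3) << 6) | (frameLen >> 11));     // orig/home/copyright bits = 0
    h[4] = static_cast<std::uint8_t>((frameLen >> 3) & 0xFF);                        // frame_length bits 10..3
    h[5] = static_cast<std::uint8_t>(((frameLen & 7) << 5) | 0x1F);  // low 3 bits + top 5 bits of fullness 0x7FF
    h[6] = 0xFC;  // low 6 bits of buffer fullness, number_of_raw_data_blocks = 0
    return h;
}
```

For AAC LC at 44100 Hz, stereo, with a 100-byte frame, the function returns `FF F1 50 80 0D 7F FC`. With the article's defaults (LC, MPEG-4), `SAacParam(100, 44100, 2)` passed to `CADTS::Init` should produce the same seven bytes. That gives a quick way to test one implementation against the other.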

Lessons learned

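1. When implementing a protocol parser, look first at mature open-source code such as FFmpeg, which implements most streaming-media protocols.

2. If no mature open-source implementation is available as a reference, find the protocol specification. If the protocol is not too complex, follow the specification step by step. A specification is more systematic and complete than information pieced together from scattered web searches. It may well take less time in the end than searching around at random, and working through the parsing yourself makes it stick better.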