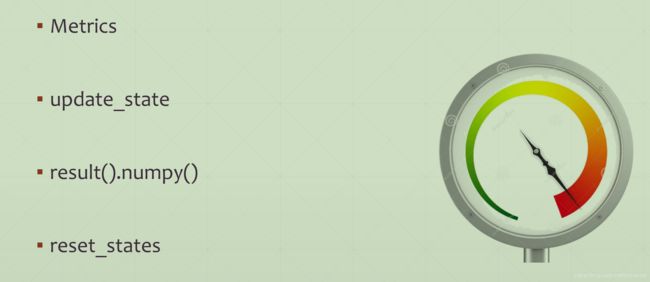

1. Keras.Metrics (metrics)

1.1. Build a meter

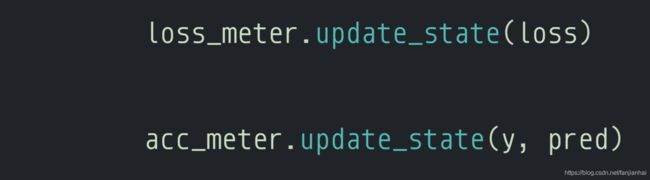

1.2. Update data

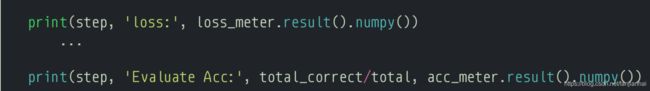

1.3. Get Average data

1.4. Clear buffer
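The four steps in 1.1 to 1.4 map onto four calls on a metric object. Below is a minimal sketch with made-up values. It assumes a TensorFlow release where the reset method is spelled `reset_state()`; older releases, such as the one the code in 1.5 was written for, spell it `reset_states()`.

```python
from tensorflow.keras import metrics

# 1. Build a meter: Mean averages scalars, Accuracy compares labels with predictions.
loss_meter = metrics.Mean()
acc_meter = metrics.Accuracy()

# 2. Update data: feed one value (or one batch) per call.
loss_meter.update_state(2.0)
loss_meter.update_state(4.0)
acc_meter.update_state([0, 1, 2, 3], [0, 1, 2, 0])  # y_true, y_pred: 3 of 4 match

# 3. Get average data: result() returns a scalar tensor.
mean_loss = float(loss_meter.result())
accuracy = float(acc_meter.result())
print('mean loss:', mean_loss, 'accuracy:', accuracy)

# 4. Clear buffer: drop the accumulated values and start a fresh average.
loss_meter.reset_state()
loss_meter.update_state(10.0)
mean_after_reset = float(loss_meter.result())
print('after reset:', mean_after_reset)
```

Resetting matters whenever the reported number should describe a recent window (for example one evaluation pass) rather than everything seen so far.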

1.5. Code

import tensorflow as tf
from tensorflow.keras import datasets, layers, optimizers, Sequential, metrics

def pre_process(x, y):
    x = tf.cast(x, dtype=tf.float32) / 255.  # scale pixels to [0, 1]
    y = tf.cast(y, dtype=tf.int32)
    return x, y

batch_size = 128
(x, y), (x_val, y_val) = datasets.mnist.load_data()
print('datasets: ', x.shape, y.shape, x.min(), x.max())

db = tf.data.Dataset.from_tensor_slices((x, y))
db = db.map(pre_process).shuffle(60000).batch(batch_size).repeat(10)
ds_val = tf.data.Dataset.from_tensor_slices((x_val, y_val))
ds_val = ds_val.map(pre_process).batch(batch_size)

model = Sequential([layers.Dense(256, activation='relu'),
                    layers.Dense(128, activation='relu'),
                    layers.Dense(64, activation='relu'),
                    layers.Dense(32, activation='relu'),
                    layers.Dense(10, activation='relu')  # caution: ReLU clips negative logits although the loss uses from_logits=True; the later sections use a plain Dense(10)
                    ])
model.build(input_shape=(None, 28 * 28))
model.summary()

optimizer = optimizers.Adam(learning_rate=1e-3)

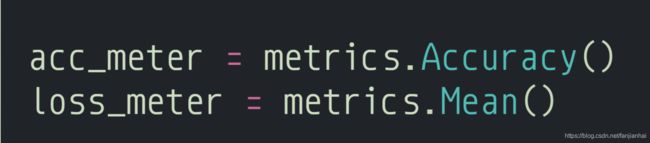

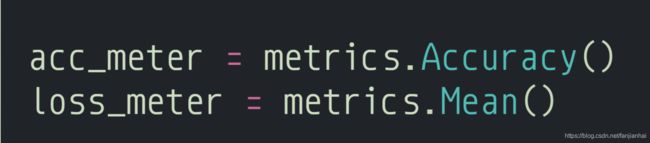

acc_meter = metrics.Accuracy()
loss_meter = metrics.Mean()

for step, (x, y) in enumerate(db):
    with tf.GradientTape() as tape:
        x = tf.reshape(x, (-1, 28 * 28))  # [b, 28, 28] => [b, 784]
        out = model(x)  # [b, 784] => [b, 10]
        y_onehot = tf.one_hot(y, depth=10)
        loss = tf.reduce_mean(tf.losses.categorical_crossentropy(y_onehot, out, from_logits=True))

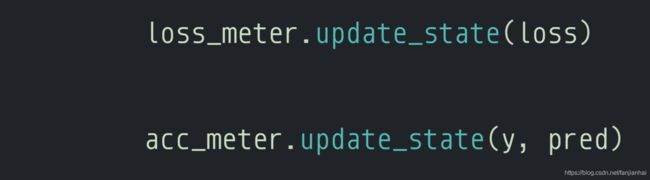

    loss_meter.update_state(loss)  # accumulate the batch loss in the meter
    grads = tape.gradient(loss, model.trainable_variables)
    optimizer.apply_gradients(zip(grads, model.trainable_variables))

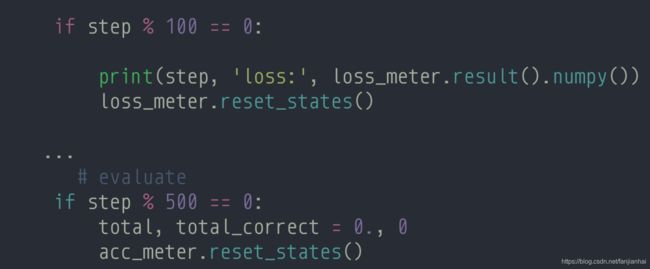

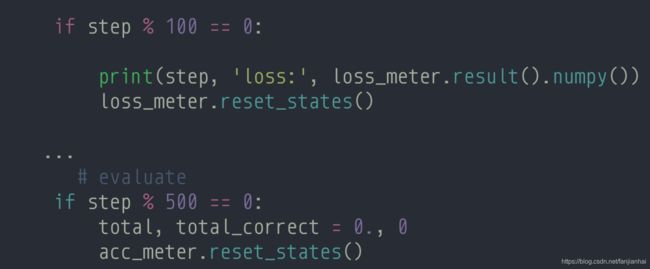

if step % 100 == 0:

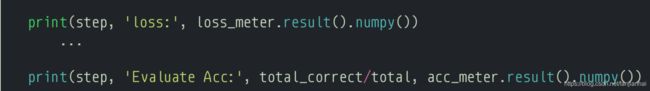

print(step, 'loss:', loss_meter.result().numpy())

if step % 500 == 0:

total, total_correct = 0., 0

acc_meter.reset_states()

for step, (x, y) in enumerate(ds_val):

x = tf.reshape(x, (-1, 28*28))

out = model(x)

pred = tf.argmax(out, axis=1)

pred = tf.cast(pred, dtype=tf.int32)

correct = tf.equal(pred, y)

total_correct += tf.reduce_sum(tf.cast(correct, dtype=tf.int32)).numpy()

total += x.shape[0]

acc_meter.update_state(y, pred)

print(step, " Evaluate acc:", total_correct/total, acc_meter.result().numpy())

1.6. Test results

0 loss: 2.2957244

78 Evaluate acc: 0.1831 0.1831

100 loss: 1.2260486

200 loss: 1.0626991

300 loss: 0.9883962

400 loss: 0.9474341

500 loss: 0.92040706

78 Evaluate acc: 0.6759 0.6759

600 loss: 0.8984385

700 loss: 0.88047

800 loss: 0.86719453

900 loss: 0.85594875

1000 loss: 0.8451226

78 Evaluate acc: 0.6831 0.6831

1100 loss: 0.83795416

1200 loss: 0.8301951

1300 loss: 0.8243892

1400 loss: 0.81990254

1500 loss: 0.8136047

78 Evaluate acc: 0.6837 0.6837

1600 loss: 0.80968654

1700 loss: 0.80532765

1800 loss: 0.8013762

1900 loss: 0.79705083

2000 loss: 0.792495

78 Evaluate acc: 0.6869 0.6869

2100 loss: 0.78891665

2200 loss: 0.78668064

2300 loss: 0.7847733

2400 loss: 0.7820607

2500 loss: 0.7791471

78 Evaluate acc: 0.6842 0.6842

2600 loss: 0.77668834

2700 loss: 0.77496046

2800 loss: 0.7732897

2900 loss: 0.7712144

3000 loss: 0.7693945

78 Evaluate acc: 0.6859 0.6859

3100 loss: 0.7674685

3200 loss: 0.7662053

3300 loss: 0.7646868

3400 loss: 0.7630929

3500 loss: 0.7568395

78 Evaluate acc: 0.7754 0.7754

3600 loss: 0.749667

3700 loss: 0.74337995

3800 loss: 0.73666996

3900 loss: 0.73019713

4000 loss: 0.7240193

78 Evaluate acc: 0.7788 0.7788

4100 loss: 0.7184695

4200 loss: 0.7131745

4300 loss: 0.70784307

4400 loss: 0.70276886

4500 loss: 0.6979761

78 Evaluate acc: 0.7794 0.7794

4600 loss: 0.69365555
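The loss values above fall slowly because `loss_meter` is never reset in the listing of 1.5, so each printed number is the mean over every step since step 0 rather than the loss of the latest batches. Here is a minimal pure-Python sketch of the difference, using made-up loss values and a hypothetical `RunningMean` class that only mimics the sum-and-count bookkeeping of `metrics.Mean`:

```python
class RunningMean:
    """Hypothetical stand-in for metrics.Mean: keeps a sum and a count."""

    def __init__(self):
        self.total = 0.0
        self.count = 0

    def update_state(self, value):
        self.total += value
        self.count += 1

    def result(self):
        return self.total / self.count

    def reset_state(self):
        self.total = 0.0
        self.count = 0


losses = [2.0, 1.0, 0.5, 0.5]  # made-up per-step losses

cumulative = RunningMean()  # never reset, like loss_meter in 1.5
windowed = RunningMean()    # reset after every report
cumulative_log, windowed_log = [], []

for step, loss in enumerate(losses):
    cumulative.update_state(loss)
    windowed.update_state(loss)
    if step % 2 == 1:  # report every 2 steps
        cumulative_log.append(cumulative.result())
        windowed_log.append(windowed.result())
        windowed.reset_state()  # only the windowed meter is cleared

print('cumulative:', cumulative_log)
print('windowed:  ', windowed_log)
```

The windowed meter reports the mean of the last two steps only, while the cumulative one keeps the early, large losses in every later report.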

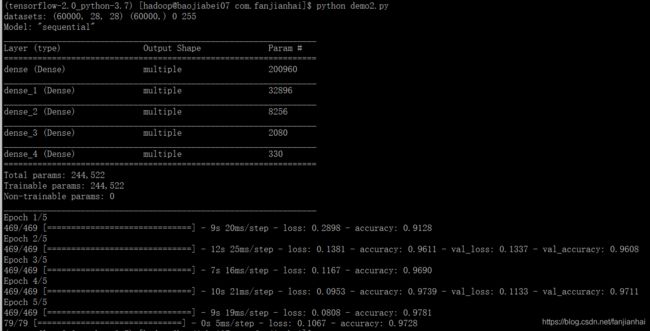

2. Compile&Fit

2.1. Code

import tensorflow as tf
from tensorflow.keras import datasets, layers, optimizers, Sequential

def pre_process(x, y):
    """
    x is a single image, not a batch
    """
    x = tf.cast(x, dtype=tf.float32) / 255.
    x = tf.reshape(x, [28 * 28])
    y = tf.cast(y, dtype=tf.int32)
    y = tf.one_hot(y, depth=10)
    return x, y

batch_size = 128
(x, y), (x_val, y_val) = datasets.mnist.load_data()
print('datasets:', x.shape, y.shape, x.min(), x.max())

db = tf.data.Dataset.from_tensor_slices((x, y))
db = db.map(pre_process).shuffle(60000).batch(batch_size)
ds_val = tf.data.Dataset.from_tensor_slices((x_val, y_val))
ds_val = ds_val.map(pre_process).batch(batch_size)

network = Sequential([layers.Dense(256, activation='relu'),
                      layers.Dense(128, activation='relu'),
                      layers.Dense(64, activation='relu'),
                      layers.Dense(32, activation='relu'),
                      layers.Dense(10)])  # raw logits, no activation
network.build(input_shape=(None, 28 * 28))
network.summary()

network.compile(optimizer=optimizers.Adam(learning_rate=0.01),
                loss=tf.losses.CategoricalCrossentropy(from_logits=True),
                metrics=['accuracy'])

network.fit(db, epochs=5, validation_data=ds_val, validation_freq=2)  # validate every 2 epochs
network.evaluate(ds_val)
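To see what `validation_freq` does without downloading MNIST, here is a minimal sketch on random data. The array shapes, layer sizes, and epoch count are made up for illustration. Validation metrics should appear in the returned history only for the epochs in which validation actually ran:

```python
import numpy as np
from tensorflow.keras import layers, losses, optimizers, Sequential

# Made-up data: 64 samples, 4 features, 3 one-hot encoded classes.
rng = np.random.default_rng(0)
x = rng.normal(size=(64, 4)).astype('float32')
y = np.eye(3, dtype='float32')[rng.integers(0, 3, size=64)]

net = Sequential([layers.Dense(8, activation='relu'),
                  layers.Dense(3)])  # raw logits, matching from_logits=True below
net.compile(optimizer=optimizers.Adam(learning_rate=0.01),
            loss=losses.CategoricalCrossentropy(from_logits=True),
            metrics=['accuracy'])

# Train for 4 epochs, validating only after every 2nd epoch.
history = net.fit(x, y, batch_size=16, epochs=4,
                  validation_data=(x, y), validation_freq=2, verbose=0)

train_epochs = len(history.history['loss'])
val_epochs = len(history.history['val_loss'])
print('training entries:', train_epochs, 'validation entries:', val_epochs)
```

`compile` only records the optimizer, loss, and metrics; the training and validation loops written by hand in 1.5 are what `fit` and `evaluate` run internally.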

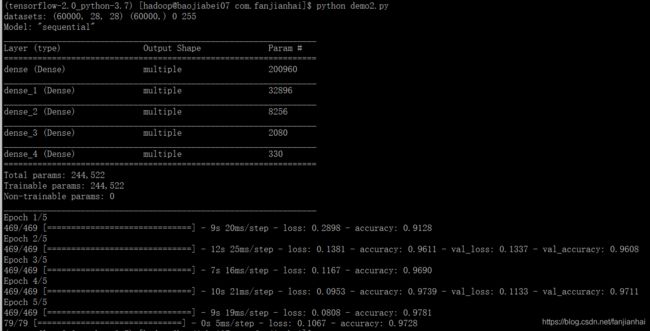

2.2. Test results

3. Custom networks

import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import datasets, layers, optimizers, Sequential

def pre_process(x, y):
    """
    x is a single image, not a batch
    """
    x = tf.cast(x, dtype=tf.float32) / 255.
    x = tf.reshape(x, [28 * 28])
    y = tf.cast(y, dtype=tf.int32)
    y = tf.one_hot(y, depth=10)
    return x, y

batch_size = 128
(x, y), (x_val, y_val) = datasets.mnist.load_data()
print('datasets:', x.shape, y.shape, x.min(), x.max())

db = tf.data.Dataset.from_tensor_slices((x, y))
db = db.map(pre_process).shuffle(60000).batch(batch_size)
ds_val = tf.data.Dataset.from_tensor_slices((x_val, y_val))
ds_val = ds_val.map(pre_process).batch(batch_size)

network = Sequential([layers.Dense(256, activation='relu'),
                      layers.Dense(128, activation='relu'),
                      layers.Dense(64, activation='relu'),
                      layers.Dense(32, activation='relu'),
                      layers.Dense(10)])
network.build(input_shape=(None, 28 * 28))
network.summary()

class MyDense(layers.Layer):
    def __init__(self, inp_dim, outp_dim):
        super(MyDense, self).__init__()
        self.kernel = self.add_weight(name='w', shape=[inp_dim, outp_dim])
        self.bias = self.add_weight(name='b', shape=[outp_dim])

    def call(self, inputs, training=None):
        out = inputs @ self.kernel + self.bias
        return out

class MyModel(keras.Model):
    def __init__(self):
        super(MyModel, self).__init__()
        self.fc1 = MyDense(28 * 28, 256)
        self.fc2 = MyDense(256, 128)
        self.fc3 = MyDense(128, 64)
        self.fc4 = MyDense(64, 32)
        self.fc5 = MyDense(32, 10)

    def call(self, inputs, training=None):
        x = self.fc1(inputs)
        x = tf.nn.relu(x)
        x = self.fc2(x)
        x = tf.nn.relu(x)
        x = self.fc3(x)
        x = tf.nn.relu(x)
        x = self.fc4(x)
        x = tf.nn.relu(x)
        x = self.fc5(x)  # raw logits, no activation
        return x

network = MyModel()  # replaces the Sequential network built above

network.compile(optimizer=optimizers.Adam(learning_rate=1e-3),
                loss=tf.losses.CategoricalCrossentropy(from_logits=True),
                metrics=['accuracy'])

network.fit(db, epochs=5, validation_data=ds_val, validation_freq=2)
network.evaluate(ds_val)

4. Saving and loading models

4.1. save/load weights

import tensorflow as tf
from tensorflow.keras import datasets, layers, optimizers, Sequential, metrics

def pre_process(x, y):
    """
    x is a single image, not a batch
    """
    x = tf.cast(x, dtype=tf.float32) / 255.
    x = tf.reshape(x, [28 * 28])
    y = tf.cast(y, dtype=tf.int32)
    y = tf.one_hot(y, depth=10)
    return x, y

batch_size = 128
(x, y), (x_val, y_val) = datasets.mnist.load_data()
print('datasets:', x.shape, y.shape, x.min(), x.max())

db = tf.data.Dataset.from_tensor_slices((x, y))
db = db.map(pre_process).shuffle(60000).batch(batch_size)
ds_val = tf.data.Dataset.from_tensor_slices((x_val, y_val))
ds_val = ds_val.map(pre_process).batch(batch_size)

network = Sequential([layers.Dense(256, activation='relu'),
                      layers.Dense(128, activation='relu'),
                      layers.Dense(64, activation='relu'),
                      layers.Dense(32, activation='relu'),
                      layers.Dense(10)])
network.build(input_shape=(None, 28 * 28))
network.summary()

network.compile(optimizer=optimizers.Adam(learning_rate=0.01),
                loss=tf.losses.CategoricalCrossentropy(from_logits=True),
                metrics=['accuracy'])

network.fit(db, epochs=3, validation_data=ds_val, validation_freq=2)
network.evaluate(ds_val)

network.save_weights('ckpt/weights.ckpt')  # stores the weights only, not the architecture
print('saved weights.')
del network

network = Sequential([layers.Dense(256, activation='relu'),
                      layers.Dense(128, activation='relu'),
                      layers.Dense(64, activation='relu'),
                      layers.Dense(32, activation='relu'),
                      layers.Dense(10)])

network.compile(optimizer=optimizers.Adam(learning_rate=0.01),
                loss=tf.losses.CategoricalCrossentropy(from_logits=True),
                metrics=['accuracy'])

network.load_weights('ckpt/weights.ckpt')  # the architecture must match the one that was saved
print('loaded weights!')
network.evaluate(ds_val)

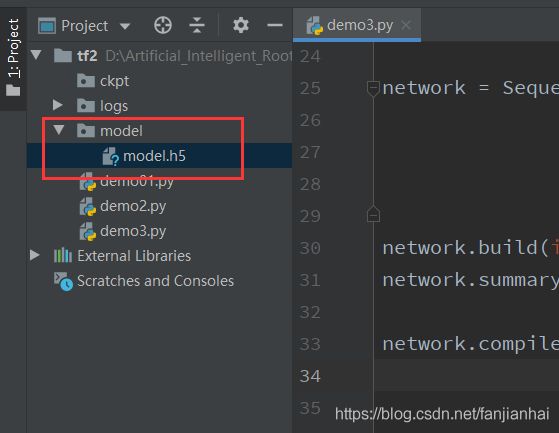

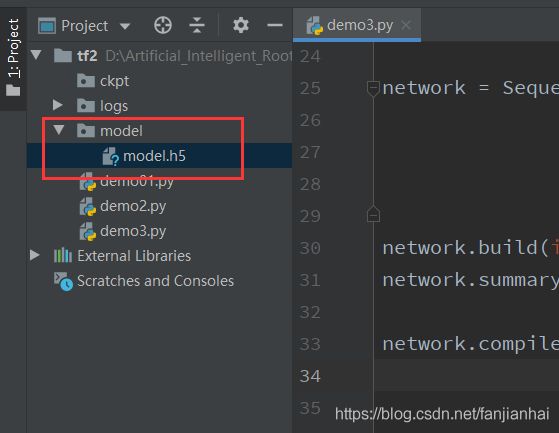

4.2. save/load entire model

import tensorflow as tf
from tensorflow.keras import datasets, layers, optimizers, Sequential, metrics

def pre_process(x, y):
    """
    x is a single image, not a batch
    """
    x = tf.cast(x, dtype=tf.float32) / 255.
    x = tf.reshape(x, [28 * 28])
    y = tf.cast(y, dtype=tf.int32)
    y = tf.one_hot(y, depth=10)
    return x, y

batch_size = 128
(x, y), (x_val, y_val) = datasets.mnist.load_data()
print('datasets:', x.shape, y.shape, x.min(), x.max())

db = tf.data.Dataset.from_tensor_slices((x, y))
db = db.map(pre_process).shuffle(60000).batch(batch_size)
ds_val = tf.data.Dataset.from_tensor_slices((x_val, y_val))
ds_val = ds_val.map(pre_process).batch(batch_size)

network = Sequential([layers.Dense(256, activation='relu'),
                      layers.Dense(128, activation='relu'),
                      layers.Dense(64, activation='relu'),
                      layers.Dense(32, activation='relu'),
                      layers.Dense(10)])
network.build(input_shape=(None, 28 * 28))
network.summary()

network.compile(optimizer=optimizers.Adam(learning_rate=0.01),
                loss=tf.losses.CategoricalCrossentropy(from_logits=True),
                metrics=['accuracy'])

network.fit(db, epochs=3, validation_data=ds_val, validation_freq=2)
network.evaluate(ds_val)

network.save('model/model.h5')  # stores architecture and weights in one file
print('saved total model.')
del network

network = tf.keras.models.load_model('model/model.h5', compile=False)  # no need to rebuild the layers by hand
print('loaded model from file.')

network.compile(optimizer=optimizers.Adam(learning_rate=0.01),
                loss=tf.losses.CategoricalCrossentropy(from_logits=True),
                metrics=['accuracy'])

network.evaluate(ds_val)

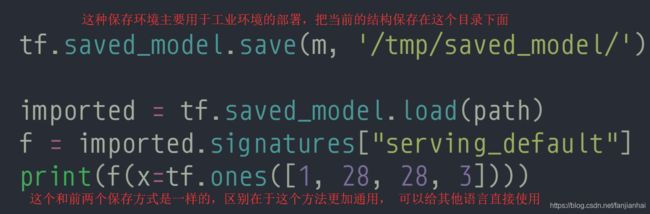

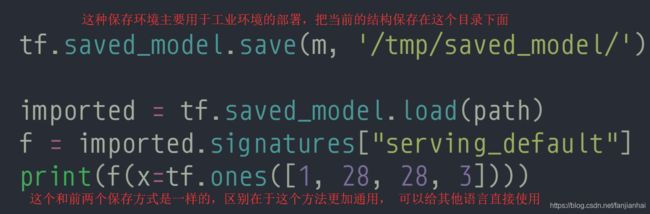

4.3. saved_model
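The SavedModel format stores the weights together with the traced computation in a directory, so the result can be reloaded without the Python class that built it. Below is a minimal sketch, assuming a small `tf.Module` stand-in (the hypothetical `TinyDense`) instead of the Keras network from 4.2, and a temporary export directory:

```python
import tempfile

import tensorflow as tf


class TinyDense(tf.Module):
    """Hypothetical stand-in model: a single dense layer with fixed weights."""

    def __init__(self):
        super().__init__()
        self.w = tf.Variable(tf.ones([3, 2]))
        self.b = tf.Variable(tf.zeros([2]))

    # The input signature lets the function be traced and exported without a prior call.
    @tf.function(input_signature=[tf.TensorSpec([None, 3], tf.float32)])
    def __call__(self, x):
        return x @ self.w + self.b


export_dir = tempfile.mkdtemp()  # assumption: any writable directory works here

module = TinyDense()
tf.saved_model.save(module, export_dir)  # writes the graph and the variables

restored = tf.saved_model.load(export_dir)  # no access to the TinyDense class is needed
out = restored(tf.ones([1, 3]))
print(out.numpy())
```

Compared with 4.1 and 4.2, this route targets deployment: the exported directory is meant to be consumed by other tools or languages, not only by the training script.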

5. Keras in practice: CIFAR10

5.1. CIFAR10

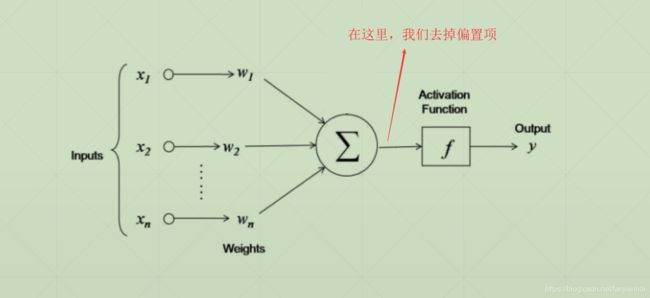

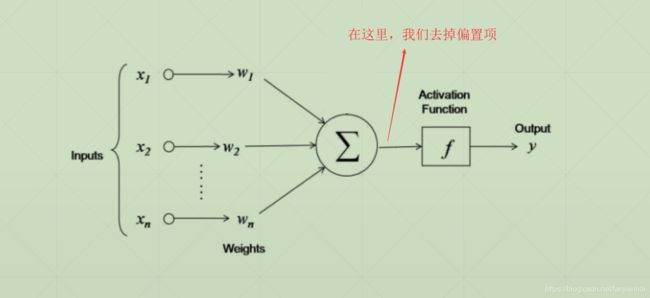

5.2. My Dense layer
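A custom layer subclasses `layers.Layer`, creates its weights with `add_weight`, and implements the forward pass in `call`. Here is a minimal sketch of a bias-free dense layer like the one used in 5.3, checked on a made-up batch (the batch size of 4 is arbitrary):

```python
import tensorflow as tf
from tensorflow.keras import layers


class MyDense(layers.Layer):
    """Bias-free fully connected layer: out = inputs @ kernel."""

    def __init__(self, input_dim, out_dim):
        super().__init__()
        # Keyword arguments avoid depending on the positional order of add_weight.
        self.kernel = self.add_weight(name='w', shape=(input_dim, out_dim))

    def call(self, inputs, training=None):
        return inputs @ self.kernel  # [b, input_dim] => [b, out_dim]


layer = MyDense(32 * 32 * 3, 10)
batch = tf.random.normal([4, 32 * 32 * 3])  # made-up batch of 4 flattened images
out = layer(batch)

print(out.shape)
print(len(layer.trainable_variables))  # the kernel is the only trainable weight
```

Because the weight is registered through `add_weight`, it shows up in `trainable_variables`, which is what lets `compile` and `fit` train the layer without extra bookkeeping.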

5.3. Code

import os
os.environ['TF_CPP_MIN_LOG_LEVEL'] = '3'  # set before importing tensorflow so that it takes effect

import tensorflow as tf
from tensorflow import keras
from tensorflow.keras import datasets, layers, optimizers, Sequential, metrics

def pre_process(x, y):
    x = tf.cast(x, dtype=tf.float32) / 255.
    y = tf.cast(y, dtype=tf.int32)
    return x, y

batch_size = 128
(x, y), (x_val, y_val) = datasets.cifar10.load_data()
y = tf.squeeze(y)  # [50000, 1] => [50000]
y_val = tf.squeeze(y_val)
y = tf.one_hot(y, depth=10)
y_val = tf.one_hot(y_val, depth=10)

train_db = tf.data.Dataset.from_tensor_slices((x, y))
train_db = train_db.map(pre_process).shuffle(10000).batch(batch_size)
test_db = tf.data.Dataset.from_tensor_slices((x_val, y_val))
test_db = test_db.map(pre_process).batch(batch_size)

sample = next(iter(train_db))
print("batch: ", sample[0].shape, sample[1].shape)

class MyDense(layers.Layer):
    """Custom layer"""
    def __init__(self, input_dim, out_dim):
        super(MyDense, self).__init__()
        self.kernel = self.add_weight(name='w', shape=[input_dim, out_dim])

    def call(self, inputs, training=None):
        """Forward pass"""
        x = inputs @ self.kernel
        return x

class MyNetwork(keras.Model):
    def __init__(self):
        super(MyNetwork, self).__init__()
        self.fc1 = MyDense(32*32*3, 256)
        self.fc2 = MyDense(256, 128)
        self.fc3 = MyDense(128, 64)
        self.fc4 = MyDense(64, 32)
        self.fc5 = MyDense(32, 10)

    def call(self, inputs, training=None):
        """
        Forward pass
        inputs: [b, 32, 32, 3]
        """
        x = tf.reshape(inputs, [-1, 32*32*3])
        x = self.fc1(x)
        x = tf.nn.relu(x)
        x = self.fc2(x)
        x = tf.nn.relu(x)
        x = self.fc3(x)
        x = tf.nn.relu(x)
        x = self.fc4(x)
        x = tf.nn.relu(x)
        x = self.fc5(x)  # raw logits, no activation
        return x

network = MyNetwork()
network.compile(optimizer=optimizers.Adam(learning_rate=1e-3),
                loss=tf.losses.CategoricalCrossentropy(from_logits=True),
                metrics=['accuracy'])
network.fit(train_db, epochs=5, validation_data=test_db, validation_freq=1)

network.evaluate(test_db)
network.save_weights('ckpt/weights.ckpt')
del network
print('saved to ckpt/weights.ckpt')

network = MyNetwork()
network.compile(optimizer=optimizers.Adam(learning_rate=1e-3),
                loss=tf.losses.CategoricalCrossentropy(from_logits=True),
                metrics=['accuracy'])
network.load_weights('ckpt/weights.ckpt')
print('loaded weights from file.')
network.evaluate(test_db)
