Study notes on Song Tian's MOOC course "Python Web Crawling and Information Extraction" (Python网络爬虫与信息提取), Week 2: the Beautiful Soup library

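Table of contents

- 1. Basic usage of the Beautiful Soup library
- Beautiful Soup parsers
- 2. Basic elements of the Beautiful Soup library
- 1) Tag
- 2) name
- 3) attrs
- 4) string
- 5) comment
- Understanding the Beautiful Soup library
- 3. Traversing HTML with the bs4 library
- Downward traversal of the tag tree
- Upward traversal of the tag tree
- Parallel traversal of the tag tree
- 4. Formatted HTML output with the bs4 library
- Information organization and extraction methods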

1. Basic usage of the Beautiful Soup library

Parsing a page fetched with the requests library's get method:

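    import requests
    from bs4 import BeautifulSoup

    try:
        kv = {'User-Agent': 'Mozilla/5.0'}  # browser-like request header
        demo = requests.get('http://www.ugirl.com', headers=kv)
        demo.raise_for_status()  # raise an exception for 4xx/5xx responses
        soup = BeautifulSoup(demo.content, 'html.parser')
        print(soup.prettify())  # print as formatted HTML text
    except requests.RequestException as err:
        print('Request failed:', err)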

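Parsing a local HTML file works the same way:

    from bs4 import BeautifulSoup

    try:
        # the with block closes the file once it has been parsed
        with open('D:\\ugirl.html', encoding='UTF8') as f:
            soup = BeautifulSoup(f, 'html.parser')
        print(soup.prettify())  # print as formatted HTML text
    except OSError as err:
        print('Could not read the file:', err)

The parsed result is the same as above.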

Beautiful Soup parsers

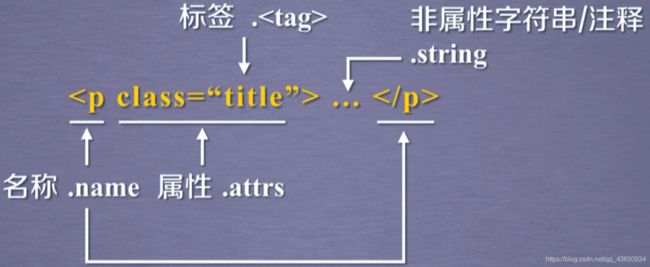
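As a rough illustration of how the parser is chosen, the sketch below passes the same markup to the built-in `html.parser` and, if it happens to be installed, to `lxml`. The `markup` string and the `parse_with` helper are invented for this example; the parser names are the strings bs4 accepts as its second argument.

```python
from bs4 import BeautifulSoup, FeatureNotFound

# Hypothetical markup, invented for this illustration.
markup = "<html><head><title>demo</title></head><body><p>hello</p></body></html>"


def parse_with(parser_name):
    """Return the <title> text using the given parser, or None if it is not installed."""
    try:
        soup = BeautifulSoup(markup, parser_name)
    except FeatureNotFound:
        return None
    return soup.title.string


# 'html.parser' ships with Python, so this call needs no extra install.
builtin_title = parse_with("html.parser")
print(builtin_title)

# 'lxml' is a third-party package (pip install lxml); None is printed if it is missing.
print(parse_with("lxml"))
```

Sticking to `html.parser` keeps the notes free of extra dependencies; `lxml` is usually picked when speed matters.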

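2. Basic elements of the Beautiful Soup library

1) Tag

The title tag holds the text that the browser displays at the top left of the window (the page title).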

    import requests
    from bs4 import BeautifulSoup

    try:
        kv = {'User-Agent': 'Mozilla/5.0'}  # browser-like request header
        demo = requests.get('http://www.ugirl.com', headers=kv)
        demo.raise_for_status()
        soup = BeautifulSoup(demo.content, 'html.parser')
        print(soup.title)        # the whole <title> tag
        print(type(soup.title))  # <class 'bs4.element.Tag'>
    except requests.RequestException as err:
        print('Request failed:', err)

print(soup.a) #当存在多个a标签时只获取第一个a标签

print(type(soup.a))

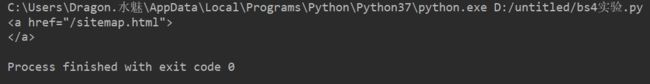

运行结果:

![]()

2) name

Print the name of the a tag:

    print(soup.a.name)
    print(type(soup.a.name))

This prints `a` and `<class 'str'>`.

Print the name of the a tag's parent (the tag one level up that contains it):

    print(soup.a.parent.name)

3) attrs

Print the attributes of the a tag:

    print(soup.a.attrs)  # the attributes are returned as a dict

4) string

Print the non-attribute string:

    print(soup.a.string)

On this site the first a tag has no non-attribute string, so `.string` returns `None`.

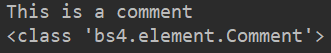
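Since the live site may change or be unreachable, here is a minimal offline sketch of the same four elements. The `html` string is hypothetical markup made up for this example; only the bs4 attributes (`name`, `parent`, `attrs`, `string`) come from the notes above.

```python
from bs4 import BeautifulSoup

# Hypothetical markup, invented for this illustration.
html = (
    '<p class="intro">'
    '<a href="http://example.com/a" id="link1">First</a>'
    '<a href="http://example.com/b">Second</a>'
    '</p>'
)
soup = BeautifulSoup(html, "html.parser")

first_link = soup.a            # only the first <a> tag is returned
print(first_link.name)         # tag name, a plain str
print(first_link.parent.name)  # name of the enclosing tag
print(first_link.attrs)        # all attributes, as a dict
print(first_link["href"])      # one attribute, dict-style access
print(first_link.string)       # the non-attribute string inside the tag
print(soup.p.string)           # None: <p> has more than one child
```

The last line shows why `.string` can come back as `None` on real pages: it only returns a value when the tag has a single child.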

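5) comment

Print a comment:

    newsoup = BeautifulSoup('<b><!--This is a comment--></b><p>This is not a comment</p>', 'html.parser')
    print(newsoup.b.string)        # the comment text, without the <!-- --> markers
    print(type(newsoup.b.string))  # <class 'bs4.element.Comment'>
    print(newsoup.p.string)        # an ordinary string
    print(type(newsoup.p.string))  # <class 'bs4.element.NavigableString'>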
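Because `.string` prints a comment and an ordinary string the same way, it can help to check the type explicitly. The sketch below is one possible way to do that; the markup and the `is_comment` helper are made up for illustration.

```python
from bs4 import BeautifulSoup
from bs4.element import Comment, NavigableString

# Hypothetical markup: one tag holding a comment, one holding ordinary text.
soup = BeautifulSoup("<b><!--hidden note--></b><p>visible text</p>", "html.parser")


def is_comment(node):
    """True if the node is an HTML comment rather than ordinary text."""
    return isinstance(node, Comment)


for tag in (soup.b, soup.p):
    kind = "comment" if is_comment(tag.string) else "text"
    print(tag.name, kind, tag.string)

# Comment is a subclass of NavigableString, so this check alone cannot tell them apart.
print(isinstance(soup.b.string, NavigableString))
```

Filtering with `isinstance(node, Comment)` keeps commented-out markup from leaking into extracted text.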

Understanding the Beautiful Soup library

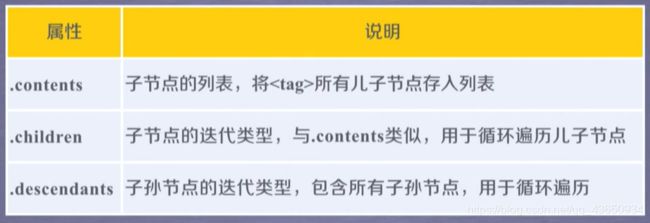

3. Traversing HTML with the bs4 library

Downward traversal of the tag tree

    import requests
    from bs4 import BeautifulSoup

    try:
        kv = {'User-Agent': 'Mozilla/5.0'}  # browser-like request header
        demo = requests.get('https://handmaid.cn/?ad_id=751', headers=kv)
        demo.raise_for_status()
        soup = BeautifulSoup(demo.content, 'html.parser')
        print(soup.head.contents)        # child nodes of the head tag, as a list
        print(type(soup.head.contents))  # <class 'list'>
        print(len(soup.head.contents))   # number of child nodes
    except requests.RequestException as err:
        print('Crawl failed:', err)

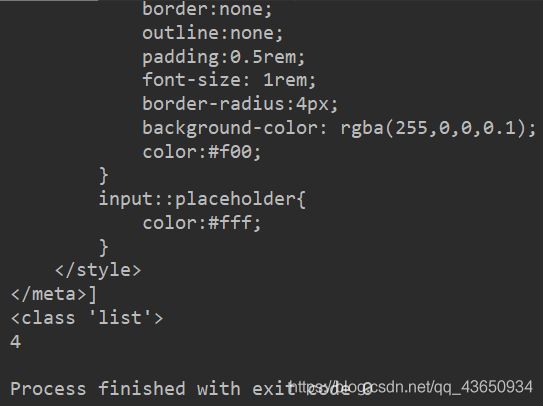

Output:

The child nodes can be iterated with `for i in soup.head.contents`.

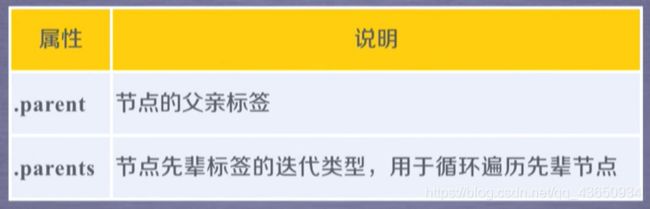
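To make the difference between the downward attributes concrete, here is a small offline sketch comparing `.contents`, `.children`, and `.descendants`. The markup is hypothetical and was chosen so the counts are easy to verify by eye.

```python
from bs4 import BeautifulSoup
from bs4.element import Tag

# Hypothetical markup, invented for this illustration.
html = "<div><p>one<b>bold</b></p><p>two</p></div>"
soup = BeautifulSoup(html, "html.parser")

# .contents: the direct children, as a list
direct = soup.div.contents
print(type(direct), len(direct))

# .children: the same direct children, as an iterator
child_names = [child.name for child in soup.div.children if isinstance(child, Tag)]
print(child_names)

# .descendants: children, grandchildren, and so on (strings included)
descendant_tags = [node.name for node in soup.div.descendants if isinstance(node, Tag)]
print(descendant_tags)
```

On real pages the children usually include newline strings as well, which is why the sketch filters with `isinstance(..., Tag)` before reading `.name`.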

Upward traversal of the tag tree

    import requests
    from bs4 import BeautifulSoup

    try:
        kv = {'User-Agent': 'Mozilla/5.0'}  # browser-like request header
        demo = requests.get('https://handmaid.cn/?ad_id=751', headers=kv)
        demo.raise_for_status()
        soup = BeautifulSoup(demo.content, 'html.parser')
        for parent in soup.a.parents:
            if parent is None:
                print(parent)
            else:
                print(parent.name)  # print the name of every ancestor of the a tag
    except requests.RequestException as err:
        print('Crawl failed:', err)
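The same upward walk can be tried offline. In this sketch the markup is made up; it shows that `.parents` ends at the BeautifulSoup object itself, whose name is `[document]` and whose own `.parent` is `None`.

```python
from bs4 import BeautifulSoup

# Hypothetical markup, invented for this illustration.
html = "<html><body><p><a href='#'>link</a></p></body></html>"
soup = BeautifulSoup(html, "html.parser")

# Collect the name of every ancestor, from the nearest one outward.
ancestor_names = [parent.name for parent in soup.a.parents]
print(ancestor_names)

# The root object has no parent of its own.
print(soup.parent)
```

The `None` check matters when calling `.parent` repeatedly by hand, since the chain stops at the root object.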

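Parallel traversal of the tag tree

Note: parallel traversal only takes place among nodes that share the same parent.

The sibling nodes can be walked with a for ... in loop.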
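The notes give no code for parallel traversal, so the following is only a sketch using bs4's sibling attributes (`next_sibling`, `previous_sibling`, `next_siblings`, `previous_siblings`) on hypothetical markup. Note that a sibling can be a plain string rather than a tag.

```python
from bs4 import BeautifulSoup
from bs4.element import Tag

# Hypothetical markup: three tags and one string under the same <p> parent.
html = "<p><a>A</a> and <b>B</b><i>C</i></p>"
soup = BeautifulSoup(html, "html.parser")

print(repr(soup.b.previous_sibling))  # a string node, not a tag
print(soup.b.next_sibling)            # the <i> tag
print(soup.i.next_sibling)            # None: <i> is the last child

# Walk forward from <a>, keeping tag names and the text of string nodes.
after_a = [s.name if isinstance(s, Tag) else str(s) for s in soup.a.next_siblings]
print(after_a)

# Walk backward from <i>, keeping only the tags.
before_i = [s.name for s in soup.i.previous_siblings if isinstance(s, Tag)]
print(before_i)
```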

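4. Formatted HTML output with the bs4 library

prettify(): adds line breaks and indentation to the HTML text; the result is a string that can be passed to print.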

    import requests
    from bs4 import BeautifulSoup

    try:
        kv = {'User-Agent': 'Mozilla/5.0'}  # browser-like request header
        demo = requests.get('https://handmaid.cn/?ad_id=751', headers=kv)
        soup = BeautifulSoup(demo.content, 'html.parser')
        print(soup.prettify())  # one tag or string per line
    except requests.RequestException as err:
        print('Crawl failed:', err)

prettify() can also be called on a single tag:

    print(soup.a.prettify())
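A quick offline comparison may make the effect of `prettify()` easier to see. The markup here is hypothetical; the sketch simply contrasts the compact string form with the prettified one.

```python
from bs4 import BeautifulSoup

# Hypothetical markup, invented for this illustration.
soup = BeautifulSoup("<p><a href='#'>link</a></p>", "html.parser")

compact = str(soup)            # everything on a single line
pretty = soup.prettify()       # each tag and string on its own line
pretty_tag = soup.a.prettify() # works on a single tag as well

print(compact)
print(pretty)
print(pretty_tag)
```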

Information organization and extraction methods

find_all()
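The notes stop at the method name, so the following is only a minimal sketch of a few common `find_all()` calls. The markup is hypothetical; the arguments shown (a tag name, a list of names, an attribute keyword, and `limit`) are standard bs4 parameters.

```python
from bs4 import BeautifulSoup

# Hypothetical markup, invented for this illustration.
html = (
    '<p class="intro"><a href="/one" id="link1">One</a>'
    '<a href="/two" id="link2">Two</a></p>'
    '<p><a href="/three">Three</a><b>bold</b></p>'
)
soup = BeautifulSoup(html, "html.parser")

# Every <a> tag in the document, in document order.
all_links = soup.find_all("a")
hrefs = [a.get("href") for a in all_links]
print(hrefs)

# A list of names matches any of them.
links_and_bold = soup.find_all(["a", "b"])
print(len(links_and_bold))

# Keyword arguments filter on attributes.
by_id = soup.find_all(id="link2")
print(by_id)

# limit caps the number of results.
first_only = soup.find_all("a", limit=1)
print(first_only)
```

`find_all()` always returns a list-like result, so an empty result is falsy and safe to loop over.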