Deploying Contiv-VPP on Kubernetes

Introduction

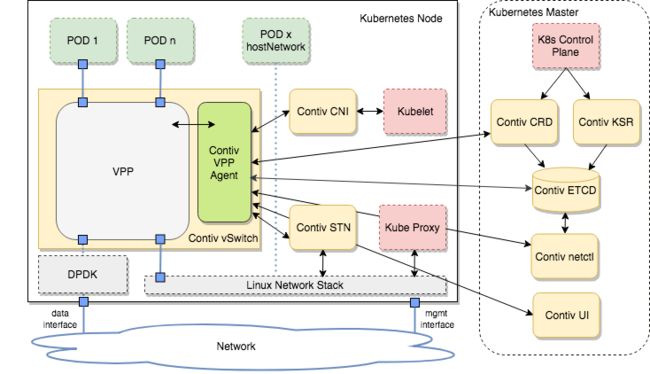

Contiv/VPP is a Kubernetes network plugin that uses FD.io VPP to provide network connectivity between PODs in a k8s cluster. It deploys itself as a set of system PODs in the kube-system namespace, some of them (contiv-ksr, contiv-crd, contiv-etcd) on the master node, and some of them (contiv-cni, contiv-vswitch, contiv-stn) on each node in the cluster.

Contiv/VPP is fully integrated with k8s via its components, and it automatically reprograms itself upon each change in the cluster via k8s API.

The main component of the solution, VPP, runs within the contiv-vswitch POD on each node in the cluster and provides POD-to-POD connectivity across the nodes in the cluster, as well as host-to-POD and outside-to-POD connectivity. While doing that, it leverages VPP’s fast data processing that runs completely in userspace and uses DPDK for fast access to the network IO layer.

Kubernetes services and policies are also reflected into the VPP configuration, so they are handled entirely on VPP without forwarding packets into the Linux network stack (Kube Proxy), which makes them efficient and scalable.

Contiv-VPP Architecture

Contiv/VPP consists of several components, each packaged and shipped as a Docker container. Three of them are deployed on the Kubernetes master node only:

- Contiv KSR

- Contiv CRD + netctl

- Contiv ETCD

and the rest of them deploy on all nodes within the k8s cluster (including the master node):

- Contiv vSwitch

- Contiv CNI

- Contiv STN
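As a rough illustration of the split described above, the following shell sketch labels a pod name as master-only or per-node based on its component prefix. The function names `contiv_placement` and `label_pods` are hypothetical and the prefixes are simply the component names from the text; none of this is part of Contiv-VPP itself.

```shell
#!/usr/bin/env bash
# Hypothetical helper: map a Contiv-VPP pod name to where the text says it runs.
contiv_placement() {
    case "$1" in
        contiv-ksr*|contiv-crd*|contiv-etcd*)    echo "master-only" ;;
        contiv-vswitch*|contiv-cni*|contiv-stn*) echo "every-node" ;;
        *)                                       echo "not-contiv" ;;
    esac
}

# Hypothetical helper: label pod names read from stdin, one per line.
label_pods() {
    while read -r pod; do
        [ -n "$pod" ] && printf '%s %s\n' "$pod" "$(contiv_placement "$pod")"
    done
    return 0
}
```

Feeding it the NAME column of a `kubectl get pod` listing gives a quick view of which pods belong to which group.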

Contiv-VPP architecture diagram:

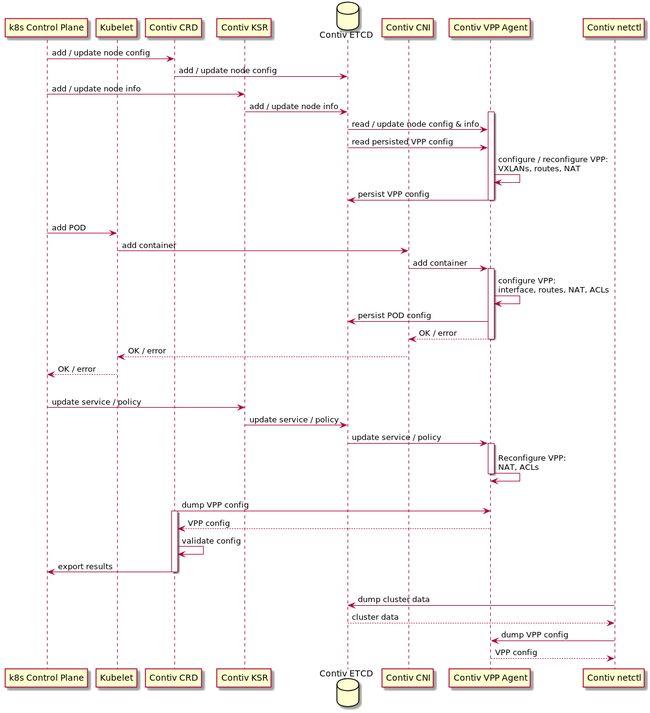

Contiv-VPP sequence diagram:

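Deploying Contiv-VPP in a K8S Environment

1. Configure the Contiv-VPP runtime environment:

Use the setup-node.sh script provided by Contiv-VPP to configure the runtime environment, and the dpdk-setup.sh script provided by the DPDK project to configure hugepages.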

[root@develop contiv]# ls

contiv-vpp.yaml dpdk-setup.sh pull-images.sh setup-node.sh

[root@develop contiv]# bash dpdk-setup.sh

------------------------------------------------------------------------------

RTE_SDK exported as /data/k8s

------------------------------------------------------------------------------

----------------------------------------------------------

Step 1: Select the DPDK environment to build

----------------------------------------------------------

[1] *

----------------------------------------------------------

Step 2: Setup linuxapp environment

----------------------------------------------------------

[2] Insert IGB UIO module

[3] Insert VFIO module

[4] Insert KNI module

[5] Setup hugepage mappings for non-NUMA systems

[6] Setup hugepage mappings for NUMA systems

[7] Display current Ethernet/Crypto device settings

[8] Bind Ethernet/Crypto device to IGB UIO module

[9] Bind Ethernet/Crypto device to VFIO module

[10] Setup VFIO permissions

----------------------------------------------------------

Step 3: Run test application for linuxapp environment

----------------------------------------------------------

[11] Run test application ($RTE_TARGET/app/test)

[12] Run testpmd application in interactive mode ($RTE_TARGET/app/testpmd)

----------------------------------------------------------

Step 4: Other tools

----------------------------------------------------------

[13] List hugepage info from /proc/meminfo

----------------------------------------------------------

Step 5: Uninstall and system cleanup

----------------------------------------------------------

[14] Unbind devices from IGB UIO or VFIO driver

[15] Remove IGB UIO module

[16] Remove VFIO module

[17] Remove KNI module

[18] Remove hugepage mappings

[19] Exit Script

Option: 6

Removing currently reserved hugepages

Unmounting /mnt/huge and removing directory

Input the number of 2048kB hugepages for each node

Example: to have 128MB of hugepages available per node in a 2MB huge page system,

enter '64' to reserve 64 * 2MB pages on each node

Number of pages for node0: 1024

Number of pages for node1: 1024

Reserving hugepages

Creating /mnt/huge and mounting as hugetlbfs

Press enter to continue ...

Option: q
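The dpdk-setup.sh session above reserves 1024 pages of 2048 kB on each of two NUMA nodes, which works out to 2 × 1024 × 2 MB = 4096 MB. A minimal sketch for checking the reservation from /proc/meminfo is shown below; the function name `hugepage_mb` is hypothetical, and the optional file argument exists only so the function can be tried on a saved copy.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the total reserved hugepage memory in MB,
# computed as HugePages_Total * Hugepagesize (kB) / 1024.
# Reads /proc/meminfo by default; a different file can be passed for testing.
hugepage_mb() {
    awk '
        /^HugePages_Total:/ { pages = $2 }
        /^Hugepagesize:/    { size_kb = $2 }
        END                 { print pages * size_kb / 1024 }
    ' "${1:-/proc/meminfo}"
}
```

On a host configured as above, `hugepage_mb` would be expected to report the full reservation; a smaller value suggests the kernel could not find enough contiguous memory.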

[root@develop contiv]# bash setup-node.sh

#########################################

# Contiv - VPP #

#########################################

Do you want to setup multinode cluster? [Y/n] y

PCI UIO driver is loaded

The following network devices were found

1) enp2s0f0 0000:02:00.0

2) enp2s0f1 0000:02:00.1

3) ens15f0 0000:04:00.0

4) ens15f1 0000:04:00.1

5) ens14f0 0000:81:00.0

6) ens14f1 0000:81:00.1

7) ens14f2 0000:81:00.2

8) ens14f3 0000:81:00.3

9) ens6f0 0000:83:00.0

10) ens6f1 0000:83:00.1

11) ens6f2 0000:83:00.2

12) ens6f3 0000:83:00.3

Select interface for node interconnect [1-12]:1

Device 'enp2s0f0' must be shutdown, do you want to proceed? [Y/n] y

unix {

nodaemon

cli-listen /run/vpp/cli.sock

cli-no-pager

poll-sleep-usec 100

}

nat {

endpoint-dependent

translation hash buckets 1048576

translation hash memory 268435456

user hash buckets 1024

max translations per user 10000

}

acl-plugin {

use tuple merge 0

}

api-trace {

on

nitems 5000

}

dpdk {

dev 0000:02:00.0

}

File /etc/vpp/contiv-vswitch.conf will be modified, do you want to proceed? [Y/n] y

Do you want to pull the latest images? [Y/n] n

Do you want to install STN Daemon? [Y/n] n

Configuration of the node finished successfully.

[root@develop contiv]#
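setup-node.sh writes the PCI address of the selected NIC into the dpdk section of /etc/vpp/contiv-vswitch.conf. The sketch below lists the devices configured there so the result can be checked after the script has run. The function name `dpdk_devs` is hypothetical, and the parsing assumes the simple layout printed above (one `dev <address>` statement per line inside `dpdk { ... }`).

```shell
#!/usr/bin/env bash
# Hypothetical helper: print PCI addresses listed as "dev <addr>" inside the
# dpdk { ... } section of a VPP startup config.
# Assumes the layout shown above: section header, one statement per line, "}".
dpdk_devs() {
    awk '
        $1 == "dpdk" && $2 == "{" { in_dpdk = 1; next }
        in_dpdk && $1 == "}"      { in_dpdk = 0 }
        in_dpdk && $1 == "dev"    { print $2 }
    ' "${1:-/etc/vpp/contiv-vswitch.conf}"
}
```

For the configuration generated in this walkthrough, the output should be the single address 0000:02:00.0 selected for the node interconnect.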

Deploying the K8S Environment (CentOS 7)

[root@develop k8s]# kubeadm reset

[root@develop k8s]# swapoff -a

[root@develop k8s]# kubeadm init --image-repository registry.aliyuncs.com/google_containers --kubernetes-version v1.13.2 --pod-network-cidr=192.168.0.0/16

[root@develop k8s]# mkdir -p $HOME/.kube

[root@develop k8s]# cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

[root@develop k8s]# chown $(id -u):$(id -g) $HOME/.kube/config

[root@develop k8s]# kubectl taint nodes --all node-role.kubernetes.io/master-

[root@develop k8s]# kubectl get pod --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system coredns-78d4cf999f-zgxqb 0/1 Pending 0 108s

kube-system coredns-78d4cf999f-zkwg8 0/1 Pending 0 108s

kube-system etcd-develop 1/1 Running 0 51s

kube-system kube-apiserver-develop 1/1 Running 0 62s

kube-system kube-controller-manager-develop 1/1 Running 0 68s

kube-system kube-proxy-25zlj 1/1 Running 0 108s

kube-system kube-scheduler-develop 1/1 Running 0 56s

[root@develop k8s]#
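In the listing above the two coredns pods are still Pending, which is expected until a network plugin has been deployed. A small filter like the following sketch can pull out the pods that are not yet Running. The function name `pods_not_running` is hypothetical, and it assumes the default column order NAMESPACE NAME READY STATUS RESTARTS AGE.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get pod --all-namespaces` output on stdin
# and print "NAME STATUS" for every pod whose STATUS is not Running.
# Assumes the default columns: NAMESPACE NAME READY STATUS RESTARTS AGE.
pods_not_running() {
    awk '$1 != "NAMESPACE" && NF >= 4 && $4 != "Running" { print $2, $4 }'
}
```

It could be used as `kubectl get pod --all-namespaces | pods_not_running`; an empty result means every listed pod reports Running.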

Deploying Contiv-VPP to the K8S Environment

1. Download the Contiv-VPP manifest file

[root@develop contiv]# wget https://raw.githubusercontent.com/contiv/vpp/master/k8s/contiv-vpp.yaml

--2019-02-08 15:15:38-- https://raw.githubusercontent.com/contiv/vpp/master/k8s/contiv-vpp.yaml

Resolving raw.githubusercontent.com (raw.githubusercontent.com)... 151.101.0.133, 151.101.64.133, 151.101.128.133, ...

Connecting to raw.githubusercontent.com (raw.githubusercontent.com)|151.101.0.133|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 24303 (24K) [text/plain]

Saving to: ‘contiv-vpp.yaml.1’

100%[==============================================================================================================================================================>] 24,303 88.0KB/s in 0.3s

2019-02-08 15:15:40 (88.0 KB/s) - ‘contiv-vpp.yaml.1’ saved [24303/24303]

[root@develop contiv]#

2. Deploy Contiv-VPP using the contiv-vpp.yaml manifest file

[root@develop contiv]# kubectl apply -f contiv-vpp.yaml

configmap/contiv-agent-cfg created

configmap/vpp-agent-cfg created

statefulset.apps/contiv-etcd created

service/contiv-etcd created

configmap/contiv-ksr-http-cfg created

configmap/contiv-etcd-cfg created

configmap/contiv-etcd-withcompact-cfg created

daemonset.extensions/contiv-vswitch created

daemonset.extensions/contiv-ksr created

clusterrole.rbac.authorization.k8s.io/contiv-ksr created

serviceaccount/contiv-ksr created

clusterrolebinding.rbac.authorization.k8s.io/contiv-ksr created

daemonset.extensions/contiv-crd created

clusterrole.rbac.authorization.k8s.io/contiv-crd created

serviceaccount/contiv-crd created

clusterrolebinding.rbac.authorization.k8s.io/contiv-crd created

configmap/contiv-crd-http-cfg created

[root@develop contiv]#

Wait about 5 minutes for the deployment to complete:

[root@develop contiv]# kubectl get pod --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system contiv-crd-qzv8j 1/1 Running 0 2m56s

kube-system contiv-etcd-0 1/1 Running 0 2m57s

kube-system contiv-ksr-tcxts 1/1 Running 0 2m56s

kube-system contiv-vswitch-kp9cd 1/1 Running 0 2m57s

kube-system coredns-78d4cf999f-zgxqb 1/1 Running 0 6m49s

kube-system coredns-78d4cf999f-zkwg8 1/1 Running 0 6m49s

kube-system etcd-develop 1/1 Running 0 5m52s

kube-system kube-apiserver-develop 1/1 Running 0 6m3s

kube-system kube-controller-manager-develop 1/1 Running 0 6m9s

kube-system kube-proxy-25zlj 1/1 Running 0 6m49s

kube-system kube-scheduler-develop 1/1 Running 0 5m57s

[root@develop contiv]# kubectl get daemonset --all-namespaces

NAMESPACE NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

kube-system contiv-crd 1 1 1 1 1 node-role.kubernetes.io/master= 3m8s

kube-system contiv-ksr 1 1 1 1 1 node-role.kubernetes.io/master= 3m8s

kube-system contiv-vswitch 1 1 1 1 1 3m8s

kube-system kube-proxy 1 1 1 1 1 7m14s
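Rather than comparing the DESIRED and READY columns by eye, the check can be scripted. The sketch below reports DaemonSets whose READY count differs from DESIRED. The function name `daemonsets_not_ready` is hypothetical, and it assumes the default column order of `kubectl get daemonset --all-namespaces` shown above.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get daemonset --all-namespaces` output on
# stdin and print "NAME READY/DESIRED" for DaemonSets that are not fully ready.
# Assumes the default columns: NAMESPACE NAME DESIRED CURRENT READY ...
daemonsets_not_ready() {
    awk '$1 != "NAMESPACE" && NF >= 5 && $3 != $5 { print $2, $5 "/" $3 }'
}
```

For the output shown in this article the filter prints nothing, since every DaemonSet has READY equal to DESIRED.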

[root@develop contiv]# kubectl get configmap --all-namespaces

NAMESPACE NAME DATA AGE

kube-public cluster-info 2 7m25s

kube-system contiv-agent-cfg 3 3m18s

kube-system contiv-crd-http-cfg 2 3m18s

kube-system contiv-etcd-cfg 1 3m18s

kube-system contiv-etcd-withcompact-cfg 1 3m18s

kube-system contiv-ksr-http-cfg 1 3m18s

kube-system coredns 1 7m25s

kube-system extension-apiserver-authentication 6 7m28s

kube-system kube-proxy 2 7m24s

kube-system kubeadm-config 2 7m26s

kube-system kubelet-config-1.13 1 7m26s

kube-system vpp-agent-cfg 9 3m18s

[root@develop contiv]# vppctl show int

Name Idx State MTU (L3/IP4/IP6/MPLS) Counter Count

TenGigabitEthernet2/0/0 1 up 9000/0/0/0

local0 0 down 0/0/0/0

loop0 2 up 9000/0/0/0

loop1 4 up 9000/0/0/0

tap0 3 up 1450/0/0/0 rx packets 712

rx bytes 275063

tx packets 709

tx bytes 57569

drops 11

ip4 701

ip6 9

tap1 5 up 1450/0/0/0 rx packets 407

rx bytes 33798

tx packets 350

tx bytes 137136

drops 49

ip4 398

ip6 9

tap2 6 up 1450/0/0/0 rx packets 398

rx bytes 33043

tx packets 351

tx bytes 137105

drops 49

ip4 389

ip6 9

[root@develop contiv]#
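The `vppctl show int` output interleaves interface rows with counter rows, which makes the drop counters easy to miss. The following sketch extracts the drops counter per interface. The function name `vpp_drops` is hypothetical, and the parsing assumes the layout shown above, where an interface row carries `up` or `down` in its third column and counter rows follow it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `vppctl show int` output on stdin and print
# "INTERFACE DROPS" for every interface that reports a drops counter.
# An interface row is recognised by "up"/"down" in the third column; counter
# rows that follow belong to the most recent interface row.
vpp_drops() {
    awk '
        $3 == "up" || $3 == "down"      { iface = $1 }
        NF >= 2 && $(NF - 1) == "drops" { print iface, $NF }
    '
}
```

It could be used as `vppctl show int | vpp_drops`; interfaces without a drops counter are simply omitted.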

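Notes:

1. If the K8S environment was deployed first and Contiv-VPP afterwards, kubelet must be restarted. Otherwise the container does not pick up the host's hugepage configuration (even though the hugepages appear to be configured when viewed from inside the container), and the contiv-vswitch-kp9cd container fails to start because VPP fails to start. The failure looks like this: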

/usr/bin/vpp[46013]: dpdk: EAL init args: -c 2 -n 4 --huge-dir /run/vpp/hugepages --file-prefix vpp --master-lcore 1 --socket-mem 64,64

[New Thread 0x7ffea7a7a700 (LWP 46713)]

[New Thread 0x7ffea7279700 (LWP 46716)]

Thread 1 "vpp" hit Breakpoint 1, 0x00007fffb3f87ea0 in rte_eal_memory_init () from /usr/lib/vpp_plugins/dpdk_plugin.so

(gdb) s

Single stepping until exit from function rte_eal_memory_init,

which has no line number information.

Thread 1 "vpp" received signal SIGBUS, Bus error.

0x00007fffb3f8147e in ?? () from /usr/lib/vpp_plugins/dpdk_plugin.so

(gdb)
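One way to tell whether kubelet has picked up the hugepages is to look at the node's allocatable `hugepages-2Mi` resource. The sketch below only interprets that value. The function name `kubelet_sees_hugepages` is hypothetical; the commands in the trailing comment are an assumption about how it might be used on a host where kubelet is managed by systemd, with the node name `develop` taken from the shell prompt in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed (exit 0) if the given allocatable hugepage
# quantity, as reported by the node, is present and non-zero.
kubelet_sees_hugepages() {
    case "$1" in
        ""|0|0[A-Za-z]*) return 1 ;;   # empty, "0", or "0Gi"-style values
        *)               return 0 ;;
    esac
}

# Possible usage (assumes a systemd-managed kubelet and a node named "develop"):
#   qty=$(kubectl get node develop -o jsonpath='{.status.allocatable.hugepages-2Mi}')
#   kubelet_sees_hugepages "$qty" || systemctl restart kubelet
```

After the restart, the contiv-vswitch pod may need to be recreated before VPP can map the hugepages.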