Versions: Spark 2.3.0, Hadoop 2.6.0 (CDH 5.14.2)

Configuration:

- spark-env.sh

HADOOP_CONF_DIR=/etc/hadoop/conf

YARN_CONF_DIR=/etc/hadoop/conf

JAVA_HOME=/usr/java/jdk1.8.0_172

export LD_LIBRARY_PATH=${LD_LIBRARY_PATH:+$LD_LIBRARY_PATH:}/usr/lib/hadoop/lib/native

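(LD_LIBRARY_PATH was added to get rid of this warning: Unable to load native-hadoop library for your platform...)

The slaves file lists only a single node.

Start the cluster with sbin/start-all.sh.

Running jps shows that the Master and Worker processes have started normally.

Check the Master log: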

18/05/25 15:02:59 INFO Utils: Successfully started service 'sparkMaster' on port 7077.

18/05/25 15:03:00 INFO Master: Starting Spark master at spark://node202.hmbank.com:7077

18/05/25 15:03:00 INFO Master: Running Spark version 2.3.0

18/05/25 15:03:00 INFO Utils: Successfully started service 'MasterUI' on port 8080.

18/05/25 15:03:00 INFO MasterWebUI: Bound MasterWebUI to 0.0.0.0, and started at http://node202.hmbank.com:8080

18/05/25 15:03:00 INFO Utils: Successfully started service on port 6066.

18/05/25 15:03:00 INFO StandaloneRestServer: Started REST server for submitting applications on port 6066

18/05/25 15:03:01 INFO Master: I have been elected leader! New state: ALIVE

18/05/25 15:03:01 INFO Master: Registering worker 10.30.16.202:37422 with 2 cores, 6.7 GB RAM

Here, 8080 is the web UI port and 6066 is the REST server port.
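The port assignments can also be pulled straight out of the daemon logs instead of being read by eye. The following is a minimal sketch, assuming the log lines have the same shape as the excerpt above; `spark_service_ports` is a name made up for this illustration and is not part of Spark.

```shell
# Print "<service> <port>" for every service-start line read from stdin.
# Assumes the "Successfully started service ... on port N." format shown above.
spark_service_ports() {
  sed -nE "s/.*Successfully started service ('([^']*)' )?on port ([0-9]+).*/\2 \3/p" |
    sed 's/^ /(unnamed) /'   # some services are logged without a name
}
```

For example, `cat "$SPARK_HOME"/logs/*Master*.out | spark_service_ports` lists the Master's ports, assuming the logs are in the default logs directory under the Spark installation.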

- Check the Worker log

Worker --webui-port 8081 spark://node202.hmbank.com:7077

18/05/25 15:02:59 INFO Utils: Successfully started service 'sparkWorker' on port 37422.

The Worker's web UI port is 8081, and it connects to the Master on port 7077. The Worker itself listens on port 37422 (chosen at random).

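From the above, the Spark standalone cluster (Master and Worker) has started normally. Strictly speaking, these daemons are not part of Spark on YARN, which only needs HADOOP_CONF_DIR or YARN_CONF_DIR to point at the Hadoop configuration.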

- Run bin/spark-shell --master yarn

It fails with the following error:

18/04/16 07:59:54 ERROR SparkContext: Error initializing SparkContext.

org.apache.spark.SparkException: Yarn application has already ended! It might have been killed or unable to launch application master.

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.waitForApplication(YarnClientSchedulerBackend.scala:85)

at org.apache.spark.scheduler.cluster.YarnClientSchedulerBackend.start(YarnClientSchedulerBackend.scala:62)

at org.apache.spark.scheduler.TaskSchedulerImpl.start(TaskSchedulerImpl.scala:173)

at org.apache.spark.SparkContext.<init>(SparkContext.scala:509)

at org.apache.spark.SparkContext$.getOrCreate(SparkContext.scala:2516)

at org.apache.spark.sql.SparkSession$Builder$$anonfun$6.apply(SparkSession.scala:918)

at org.apache.spark.sql.SparkSession$Builder$$anonfun$6.apply(SparkSession.scala:910)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.sql.SparkSession$Builder.getOrCreate(SparkSession.scala:910)

at org.apache.spark.repl.Main$.createSparkSession(Main.scala:101)

at $line3.$read$$iw$$iw.<init>(<console>:15)

-

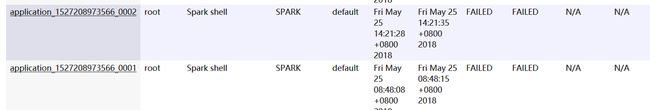

查看ResourceManager 状态 为

查看日志:

Diagnostics: Exception from container-launch.

Container id: container_1527208973566_0002_02_000001

Exit code: 1

Stack trace: ExitCodeException exitCode=1:

at org.apache.hadoop.util.Shell.runCommand(Shell.java:604)

at org.apache.hadoop.util.Shell.run(Shell.java:507)

at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:789)

at org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor.launchContainer(DefaultContainerExecutor.java:213)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:302)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:82)

at java.util.concurrent.FutureTask.run(FutureTask.java:262)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)

at java.lang.Thread.run(Thread.java:745)

Container exited with a non-zero exit code 1

Failing this attempt. Failing the application.

This tells us very little.

- Check the NodeManager log

2018-05-25 14:21:35,267 WARN org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor: Exception from container-launch with container ID: container_1527208973566_0002_02_000001 and exit code: 1

ExitCodeException exitCode=1:

at org.apache.hadoop.util.Shell.runCommand(Shell.java:604)

at org.apache.hadoop.util.Shell.run(Shell.java:507)

at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:789)

at org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor.launchContainer(DefaultContainerExecutor.java:213)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:302)

at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:82)

at java.util.concurrent.FutureTask.run(FutureTask.java:262)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)

at java.lang.Thread.run(Thread.java:745)

2018-05-25 14:21:35,267 INFO org.apache.hadoop.yarn.server.nodemanager.ContainerExecutor: Exception from container-launch.

2018-05-25 14:21:35,267 INFO org.apache.hadoop.yarn.server.nodemanager.ContainerExecutor: Container id: container_1527208973566_0002_02_000001

2018-05-25 14:21:35,267 INFO org.apache.hadoop.yarn.server.nodemanager.ContainerExecutor: Exit code: 1

2018-05-25 14:21:35,267 INFO org.apache.hadoop.yarn.server.nodemanager.ContainerExecutor: Stack trace: ExitCodeException exitCode=1:

2018-05-25 14:21:35,267 INFO org.apache.hadoop.yarn.server.nodemanager.ContainerExecutor: at org.apache.hadoop.util.Shell.runCommand(Shell.java:604)

2018-05-25 14:21:35,267 INFO org.apache.hadoop.yarn.server.nodemanager.ContainerExecutor: at org.apache.hadoop.util.Shell.run(Shell.java:507)

2018-05-25 14:21:35,267 INFO org.apache.hadoop.yarn.server.nodemanager.ContainerExecutor: at org.apache.hadoop.util.Shell$ShellCommandExecutor.execute(Shell.java:789)

2018-05-25 14:21:35,267 INFO org.apache.hadoop.yarn.server.nodemanager.ContainerExecutor: at org.apache.hadoop.yarn.server.nodemanager.DefaultContainerExecutor.launchContainer(DefaultContainerExecutor.java:213)

2018-05-25 14:21:35,267 INFO org.apache.hadoop.yarn.server.nodemanager.ContainerExecutor: at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:302)

2018-05-25 14:21:35,267 INFO org.apache.hadoop.yarn.server.nodemanager.ContainerExecutor: at org.apache.hadoop.yarn.server.nodemanager.containermanager.launcher.ContainerLaunch.call(ContainerLaunch.java:82)

2018-05-25 14:21:35,267 INFO org.apache.hadoop.yarn.server.nodemanager.ContainerExecutor: at java.util.concurrent.FutureTask.run(FutureTask.java:262)

2018-05-25 14:21:35,267 INFO org.apache.hadoop.yarn.server.nodemanager.ContainerExecutor: at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1145)

2018-05-25 14:21:35,267 INFO org.apache.hadoop.yarn.server.nodemanager.ContainerExecutor: at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:615)

2018-05-25 14:21:35,267 INFO org.apache.hadoop.yarn.server.nodemanager.ContainerExecutor: at java.lang.Thread.run(Thread.java:745)

Nothing useful here either.

- Solutions suggested online:

- Edit yarn-site.xml to disable the memory checks

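    <property>
      <name>yarn.nodemanager.pmem-check-enabled</name>
      <value>false</value>
    </property>
    <property>
      <name>yarn.nodemanager.vmem-check-enabled</name>
      <value>false</value>
    </property>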

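No effect!

- Setting spark.yarn.jars: no effect!

- Some posts blamed a configuration mistake. I checked every setting carefully and found nothing wrong!

- Reading the debugging section of the official Spark on YARN documentation carefully, I found this command: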

yarn logs -applicationId <app ID>

Pulling up the logs with this command revealed the problem:

Exception in thread "main" java.lang.UnsupportedClassVersionError: org/apache/spark/network/util/ByteUnit : Unsupported major.minor version 52.0

at java.lang.ClassLoader.defineClass1(Native Method)

at java.lang.ClassLoader.defineClass(ClassLoader.java:800)

at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)

at java.net.URLClassLoader.defineClass(URLClassLoader.java:449)

at java.net.URLClassLoader.access$100(URLClassLoader.java:71)

at java.net.URLClassLoader$1.run(URLClassLoader.java:361)

at java.net.URLClassLoader$1.run(URLClassLoader.java:355)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:354)

at java.lang.ClassLoader.loadClass(ClassLoader.java:425)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:308)

at java.lang.ClassLoader.loadClass(ClassLoader.java:358)

at org.apache.spark.deploy.history.config$.<init>(config.scala:44)

at org.apache.spark.deploy.history.config$.<clinit>(config.scala)

at org.apache.spark.SparkConf$.<init>(SparkConf.scala:635)

at org.apache.spark.SparkConf$.<clinit>(SparkConf.scala)

at org.apache.spark.SparkConf.set(SparkConf.scala:94)

at org.apache.spark.SparkConf$$anonfun$loadFromSystemProperties$3.apply(SparkConf.scala:76)

at org.apache.spark.SparkConf$$anonfun$loadFromSystemProperties$3.apply(SparkConf.scala:75)

at scala.collection.TraversableLike$WithFilter$$anonfun$foreach$1.apply(TraversableLike.scala:733)

at scala.collection.immutable.HashMap$HashMap1.foreach(HashMap.scala:221)

at scala.collection.immutable.HashMap$HashTrieMap.foreach(HashMap.scala:428)

at scala.collection.immutable.HashMap$HashTrieMap.foreach(HashMap.scala:428)

at scala.collection.immutable.HashMap$HashTrieMap.foreach(HashMap.scala:428)

at scala.collection.TraversableLike$WithFilter.foreach(TraversableLike.scala:732)

at org.apache.spark.SparkConf.loadFromSystemProperties(SparkConf.scala:75)

at org.apache.spark.SparkConf.<init>(SparkConf.scala:70)

at org.apache.spark.SparkConf.<init>(SparkConf.scala:57)

at org.apache.spark.deploy.yarn.ApplicationMaster.<init>(ApplicationMaster.scala:62)

at org.apache.spark.deploy.yarn.ApplicationMaster$.main(ApplicationMaster.scala:823)

at org.apache.spark.deploy.yarn.ExecutorLauncher$.main(ApplicationMaster.scala:854)

at org.apache.spark.deploy.yarn.ExecutorLauncher.main(ApplicationMaster.scala)

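The JDK versions clearly do not match: class file version 52.0 means the class was compiled for Java 8, but the JVM that launched the container was older.

Spark was using JDK 8, while CDH Hadoop was using JDK 1.7.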
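The number in the error message maps directly to a Java release: class file major version 52 is Java 8, 51 is Java 7, and so on. Below is a small sketch for checking this by hand. Both function names are hypothetical helpers introduced here, not part of any JDK tool.

```shell
# Map a class file major version to the Java release that introduced it.
# 49 -> 5, 51 -> 7, 52 -> 8; for 45..48 the result N stands for Java 1.N.
java_release_for_major() {
  echo $(( $1 - 44 ))
}

# Read the major version from a .class file. Bytes 0-3 hold the magic number
# 0xCAFEBABE, bytes 4-5 the minor version, bytes 6-7 the major version
# (big-endian).
class_major_version() {
  set -- $(od -An -j6 -N2 -tu1 "$1")   # two unsigned bytes: high, low
  echo $(( $1 * 256 + $2 ))
}
```

`java_release_for_major 52` prints 8, which is why a Java 7 runtime refuses to load the Spark 2.3.0 classes. `class_major_version` can be pointed at any class extracted from a jar to see which release it was built for.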

- Switch CDH Hadoop to JDK 1.8

To do this, edit /etc/default/bigtop-utils (CDH uses bigtop to detect the Java directory; see https://www.cnblogs.com/stillcoolme/p/7373303.html):

export JAVA_HOME=/usr/java/jdk1.8.0_172

After restarting the Hadoop cluster and retrying, the problem was solved!
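To double-check what the file actually sets, something like the following can help. It is only a sketch: `configured_java_home` is a hypothetical helper that looks at plain `JAVA_HOME=` assignments and does not evaluate any shell logic in the file.

```shell
# Print the last uncommented JAVA_HOME assignment found in the given file.
configured_java_home() {
  sed -nE 's/^[[:space:]]*(export[[:space:]]+)?JAVA_HOME=//p' "$1" |
    tail -n 1 | tr -d "\"'"   # keep the last assignment, drop any quotes
}
```

With the change above in place, `configured_java_home /etc/default/bigtop-utils` should print /usr/java/jdk1.8.0_172, and running `bin/java -version` from that directory should report a 1.8 runtime.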

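Why can the logs be found with yarn logs?

This is explained in the official documentation at http://spark.apache.org/docs/latest/running-on-yarn.html.

My Hadoop cluster has log aggregation turned on, so the container logs are collected off the nodes.

If log aggregation is turned on (with the yarn.log-aggregation-enable config), container logs are copied to HDFS and deleted from the local machine. These logs can then be viewed from anywhere on the cluster with the yarn logs command.

The command prints the contents of all log files from all containers of the given application. You can also view the container log files directly in HDFS using the HDFS shell or API. The directory where they are located can be found in the YARN configuration (yarn.nodemanager.remote-app-log-dir and yarn.nodemanager.remote-app-log-dir-suffix). The logs are also available in the Spark Web UI under the Executors tab. For that, both the Spark history server and the MapReduce history server must be running, and yarn.log.server.url must be configured properly in yarn-site.xml. The log URL on the Spark history server UI then redirects you to the MapReduce history server to show the aggregated logs.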
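The application ID that yarn logs expects can be derived from a container ID like the one in the diagnostics earlier, and the HDFS directory follows from the two YARN settings. This is a sketch under assumptions: the function names are invented here, and the layout `<remote-app-log-dir>/<user>/<suffix>/<application ID>` is what I would expect on Hadoop 2.x; other releases may lay the directory out differently.

```shell
# Derive the application ID from a container ID, e.g.
# container_1527208973566_0002_02_000001 -> application_1527208973566_0002.
# An optional epoch part (container_e17_...) is skipped.
app_id_from_container() {
  printf '%s\n' "$1" |
    sed -nE 's/^container_(e[0-9]+_)?([0-9]+)_([0-9]+)_[0-9]+_[0-9]+$/application_\2_\3/p'
}

# Build the assumed HDFS directory holding an application's aggregated logs.
# $1 remote-app-log-dir, $2 remote-app-log-dir-suffix, $3 user, $4 application ID
aggregated_log_dir() {
  printf '%s/%s/%s/%s\n' "${1%/}" "$3" "$2" "$4"
}
```

The result can be passed on, for example `yarn logs -applicationId "$(app_id_from_container container_1527208973566_0002_02_000001)"`, or `hdfs dfs -ls "$(aggregated_log_dir /tmp/logs logs "$USER" application_1527208973566_0002)"` if the cluster keeps the default values /tmp/logs and logs for the two settings.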

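When log aggregation is not turned on, logs are kept locally on each machine under YARN_APP_LOGS_DIR, which is usually configured as /tmp/logs or $HADOOP_HOME/logs/userlogs, depending on the Hadoop version and installation. Viewing the logs of a container requires going to the host that holds them and looking in this directory. Subdirectories organize the log files by application ID and container ID. The logs are also available in the Spark Web UI under the Executors tab, and this does not require running the MapReduce history server.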
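On a node without log aggregation, the per-application, per-container layout described above can be walked with find. A minimal sketch, assuming the log root is passed in explicitly; `local_container_log_dirs` is a hypothetical helper name.

```shell
# List the container log directories of one application under a local log root.
# $1 local log root (e.g. $HADOOP_HOME/logs/userlogs), $2 application ID
local_container_log_dirs() {
  find "$1/$2" -type d -name 'container_*' | sort
}
```

Each directory it prints typically holds that container's stdout and stderr files, which is where an error like the UnsupportedClassVersionError above would end up.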