Automated deployment of Kubernetes 1.14.1 with kubeadm

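I. Environment preparation

1. Set the hostname. On the management node set it to master; on each worker node set that node's own hostname.

hostnamectl set-hostname master

Note: this step is optional.

2. Edit /etc/hosts and add name-resolution entries

cat <<EOF >> /etc/hosts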

192.168.5.43 szx1-personal-liuchuang-dev-001

192.168.5.137 szx1-personal-liuchuang-dev-000

172.31.19.11 szx1-personal-liuchuang-dev-003

172.31.18.86 szx1-personal-liuchuang-dev-002

EOF

3. Disable the firewall, SELinux, and swap

systemctl stop firewalld

systemctl disable firewalld

setenforce 0

sed -i "s/^SELINUX=enforcing/SELINUX=disabled/g" /etc/selinux/config

swapoff -a

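sed -i 's/.*swap.*/#&/' /etc/fstab

4. Configure kernel parameters so that bridged IPv4 traffic is passed to the iptables chains

cat > /etc/sysctl.d/k8s.conf <<EOF

net.bridge.bridge-nf-call-iptables = 1

EOF

5. Configure domestic (China) yum mirrors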

yum install -y wget

mkdir /etc/yum.repos.d/bak && mv /etc/yum.repos.d/*.repo /etc/yum.repos.d/bak

wget -O /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo

wget -O /etc/yum.repos.d/epel.repo https://mirrors.aliyun.com/repo/epel-7.repo

Configure the domestic Kubernetes repository

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

Configure the Docker repository

wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo

yum clean all && yum makecache

II. Software installation

Note: perform the following on all nodes.

1. Install or upgrade Docker

Install:

yum install -y docker-ce-18.09.6-3.el7

systemctl enable docker && systemctl start docker

docker --version

Upgrade:

If Docker is already installed, remove the existing version first.

# rpm -qa | grep docker

docker-ce-17.12.1.ce-1.el7.centos.x86_64

# yum remove -y docker-ce-17.12.1.ce-1.el7.centos.x86_64

# yum install -y docker-ce-18.09.6-3.el7

# systemctl restart docker

# systemctl enable docker

Error: dockerd[29505]: unable to configure the Docker daemon with file /etc/docker/daemon.json: the following directives are specified both as a flag and in the configuration file: hosts:

The hosts setting is given both on the dockerd command line and in daemon.json. Open the file (vim /etc/docker/daemon.json)

and delete:

"hosts": [

"tcp://0.0.0.0:2375",

"unix:///var/run/docker.sock"

],

2. Install kubeadm, kubelet, and kubectl

yum remove -y kubelet-1.14.1 kubeadm-1.14.1 kubectl-1.14.1

yum install -y kubelet-1.14.1 kubeadm-1.14.1 kubectl-1.14.1

systemctl enable kubelet

III. Deploy the master node

Note: perform the following on the master node.

1. Initialize the Kubernetes cluster on the master.

kubeadm init --kubernetes-version=1.14.1 \

--apiserver-advertise-address=192.168.5.43 \

--image-repository registry.aliyuncs.com/google_containers \

--service-cidr=10.1.0.0/16 \

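--pod-network-cidr=10.254.0.0/16

Notes:

apiserver-advertise-address: the IP address of the master itself

service-cidr: the Service network range

pod-network-cidr: the Pod network range, here 10.254.0.0/16

This step is critical. By default kubeadm pulls the required images from k8s.gcr.io, which is unreachable from mainland China, so --image-repository must point at the Alibaba Cloud mirror registry. Many first-time deployments stall here and cannot continue with the rest of the configuration.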
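The three address ranges used above (node network, --service-cidr, --pod-network-cidr) should not overlap one another. The following is a minimal sketch for checking that before running kubeadm init. The helper names cidr_range and cidrs_overlap are made up for this example and are not part of kubeadm.

```shell
# Convert an IPv4 CIDR such as 10.1.0.0/16 into "first last" (as integers).
cidr_range() {
  echo "$1" | awk -F'[./]' '{
    ip = (($1 * 256 + $2) * 256 + $3) * 256 + $4
    size = 2 ^ (32 - $5)
    first = ip - (ip % size)
    printf "%.0f %.0f\n", first, first + size - 1
  }'
}

# Return 0 (true) when the two CIDR blocks share at least one address.
cidrs_overlap() {
  # Positional parameters become: a_first a_last b_first b_last
  set -- $(cidr_range "$1") $(cidr_range "$2")
  [ "$1" -le "$4" ] && [ "$3" -le "$2" ]
}

# Example with the values from this article:
#   cidrs_overlap 10.1.0.0/16 10.254.0.0/16 && echo "service and pod ranges overlap"
```

If the check reports an overlap, pick different ranges before initializing, since changing them afterwards generally means rebuilding the cluster.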

When it finishes, the command prints output like this:

kubeadm join 192.168.5.43:6443 --token 3n01sl.qjsscml383uzldzn \

--discovery-token-ca-cert-hash sha256:aa728efa0d4cacf2eb064f05ea3535152a0432a5a18c69cdf312bd646830f1f1

Warning reported: detected "cgroupfs" as the Docker cgroup driver. The recommended driver is "systemd".

Fix:

There are two options: change the Docker configuration, or change the kubelet configuration.
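Before picking either option it can help to confirm which driver Docker is actually using. A small sketch, assuming the usual "Cgroup Driver: ..." line in docker info output; the function name cgroup_driver_from_info is hypothetical.

```shell
# Print the cgroup driver named in `docker info` output read from stdin.
cgroup_driver_from_info() {
  awk -F': *' '/Cgroup Driver/ { print $2; exit }'
}

# Usage on a node where Docker is running:
#   docker info 2>/dev/null | cgroup_driver_from_info
```

Whichever option is chosen, Docker and the kubelet need to end up on the same driver.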

Change Docker:

Create or edit /etc/docker/daemon.json and add the following:

{ "exec-opts": ["native.cgroupdriver=systemd"] }

Restart Docker:

systemctl restart docker

systemctl status docker

Change the kubelet:

vim /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf

# Note: This dropin only works with kubeadm and kubelet v1.11+

[Service]

# Append --cgroup-driver=systemd to the KUBELET_KUBECONFIG_ARGS line (the one that references bootstrap-kubelet.conf)

Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf --cgroup-driver=systemd"

Environment="KUBELET_CONFIG_ARGS=--config=/var/lib/kubelet/config.yaml"

# This is a file that "kubeadm init" and "kubeadm join" generates at runtime, populating the KUBELET_KUBEADM_ARGS variable dynamically

EnvironmentFile=-/var/lib/kubelet/kubeadm-flags.env

# This is a file that the user can use for overrides of the kubelet args as a last resort. Preferably, the user should use

# the .NodeRegistration.KubeletExtraArgs object in the configuration files instead. KUBELET_EXTRA_ARGS should be sourced from this file.

EnvironmentFile=-/etc/sysconfig/kubelet

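ExecStart=

ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS

After editing the drop-in, run systemctl daemon-reload and restart the kubelet so the change takes effect.

2. Configure kubectl

mkdir -p /root/.kube

cp /etc/kubernetes/admin.conf /root/.kube/config

kubectl get nodes

kubectl get cs

3. Deploy the flannel network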

wget https://raw.githubusercontent.com/coreos/flannel/a70459be0084506e4ec919aa1c114638878db11b/Documentation/kube-flannel.yml

# Modify kube-flannel.yml

sed -i 's/10.244.0.0\/16/10.254.0.0\/16/g' kube-flannel.yml

kubectl apply -f kube-flannel.yml

Note: the 10.254.0.0 here must match the --pod-network-cidr value passed to kubeadm init.
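A quick way to confirm that the sed substitution took effect is to read the "Network" value back out of the manifest and compare it with the pod CIDR. This is only a sketch: the helper names are invented here, and it assumes the manifest keeps the network in a line of the form "Network": "x.x.x.x/nn" inside net-conf.json.

```shell
# Print the "Network" value found in a flannel manifest (first match only).
flannel_network() {
  sed -n 's/.*"Network": *"\([^"]*\)".*/\1/p' "$1" | head -n 1
}

# Return 0 when the manifest's network equals the expected pod CIDR.
flannel_matches_pod_cidr() {
  [ "$(flannel_network "$1")" = "$2" ]
}

# Usage, ideally before `kubectl apply`:
#   flannel_matches_pod_cidr kube-flannel.yml 10.254.0.0/16 || echo "CIDR mismatch"
```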

IV. Deploy the worker nodes

Note: perform the following on every worker node.

Run the following command so that each worker node joins the Kubernetes cluster

kubeadm join 192.168.5.43:6443 --token 3n01sl.qjsscml383uzldzn \

--discovery-token-ca-cert-hash sha256:aa728efa0d4cacf2eb064f05ea3535152a0432a5a18c69cdf312bd646830f1f1

This command is taken from the output of cluster initialization (kubeadm init).
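Copy-and-paste mistakes in the token or hash are a common reason for a failed join. The sketch below checks only the shape of the two values (a bootstrap token is six characters, a dot, then sixteen characters, all lowercase letters or digits; the hash is sha256: followed by 64 hex digits). It cannot tell whether the token is still valid on the master, and the function names are hypothetical.

```shell
# Return 0 when the argument looks like a kubeadm bootstrap token.
valid_bootstrap_token() {
  printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# Return 0 when the argument looks like a discovery CA cert hash.
valid_ca_cert_hash() {
  printf '%s\n' "$1" | grep -Eq '^sha256:[0-9a-f]{64}$'
}

# Usage with the values from this article:
#   valid_bootstrap_token 3n01sl.qjsscml383uzldzn || echo "token looks malformed"
```

Bootstrap tokens expire (24 hours by default), so a well-formed token can still be rejected. In that case generate a fresh join command on the master with kubeadm token create --print-join-command.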

Output like the following means the node joined the cluster successfully:

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

[kubelet-start] Downloading configuration for the kubelet from the "kubelet-config-1.14" ConfigMap in the kube-system namespace

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Activating the kubelet service

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.

V. Check cluster status

Note: perform the following on the master node.

1. Check the cluster status from the master node. Output like the following means the cluster is healthy.

# kubectl get nodes

NAME                              STATUS     ROLES    AGE     VERSION
szx1-personal-liuchuang-dev-000   NotReady   <none>   3m59s   v1.14.1
szx1-personal-liuchuang-dev-001   Ready      master   21m     v1.14.1
szx1-personal-liuchuang-dev-002   NotReady   <none>   4m20s   v1.14.1
szx1-personal-liuchuang-dev-003   Ready      <none>   4m7s    v1.14.1

Note: at first some nodes show NotReady, which means they are still pulling images. Once every node shows Ready, the cluster is healthy.
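Rather than re-running the command by hand, the wait can be scripted. A minimal sketch, assuming the STATUS value is the second column of kubectl get nodes --no-headers; count_not_ready is a made-up helper name.

```shell
# Count nodes whose STATUS column is not exactly "Ready".
# Expects `kubectl get nodes --no-headers` output on stdin.
# A status such as "Ready,SchedulingDisabled" is counted as not ready.
count_not_ready() {
  awk '$2 != "Ready" { n++ } END { print n + 0 }'
}

# Polling loop (run on the master):
#   until [ "$(kubectl get nodes --no-headers | count_not_ready)" -eq 0 ]; do
#     sleep 5
#   done
```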

# kubectl get nodes

NAME                              STATUS   ROLES    AGE   VERSION
szx1-personal-liuchuang-dev-000   Ready    <none>   12m   v1.14.1
szx1-personal-liuchuang-dev-001   Ready    master   30m   v1.14.1
szx1-personal-liuchuang-dev-002   Ready    <none>   13m   v1.14.1
szx1-personal-liuchuang-dev-003   Ready    <none>   13m   v1.14.1

2. Create a Pod to verify that the cluster works.

kubectl create deployment nginx --image=nginx

kubectl expose deployment nginx --port=80 --type=NodePort

kubectl get pod,svc -o wide

NAME                         READY   STATUS    RESTARTS   AGE     IP           NODE                              NOMINATED NODE   READINESS GATES
pod/nginx-65f88748fd-8jzzv   1/1     Running   0          6m15s   10.254.1.2   szx1-personal-liuchuang-dev-002   <none>           <none>

NAME                 TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE     SELECTOR
service/kubernetes   ClusterIP   10.1.0.1       <none>        443/TCP        38m     <none>
service/nginx        NodePort    10.1.147.166   <none>        80:31756/TCP   6m12s   app=nginx

Open http://172.31.18.86:31756/ in a browser; the Nginx welcome page appears.
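The node port (31756 here) is assigned at random, so it will differ on another cluster. The sketch below pulls it out of a PORT(S) value of the form port:nodePort/protocol; the function name is hypothetical.

```shell
# Extract the node port from a PORT(S) value such as "80:31756/TCP".
node_port_from_ports() {
  printf '%s\n' "$1" | sed -n 's/^[0-9]*:\([0-9]*\)\/.*/\1/p'
}

# Usage:
#   node_port_from_ports "80:31756/TCP"
# kubectl can also print the value directly:
#   kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'
# and the page can be fetched from the command line:
#   curl -s http://172.31.18.86:31756/ | grep -i 'welcome to nginx'
```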

VI. Deploy the Dashboard

Note: perform the following on the master node.

wget https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

Modify kubernetes-dashboard.yaml

sed -i 's/k8s.gcr.io/loveone/g' kubernetes-dashboard.yaml

sed -i '/targetPort:/a\ \ \ \ \ \ nodePort: 30001\n\ \ type: NodePort' kubernetes-dashboard.yaml

2. Deploy the Dashboard

kubectl create -f kubernetes-dashboard.yaml

3. After creation, check the status of the related resources

kubectl get deployment kubernetes-dashboard -n kube-system

kubectl get pods -n kube-system -o wide

kubectl get services -n kube-system

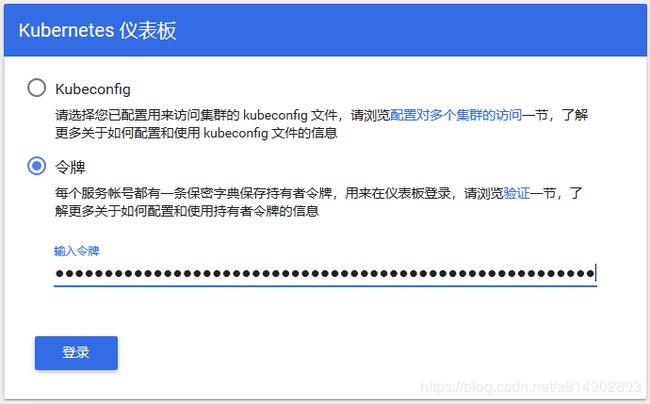

netstat -ntlp|grep 30001

4. Enter the Dashboard address in a browser:

https://192.168.5.43:30001

5. Get the authentication token for the Dashboard

kubectl create serviceaccount dashboard-admin -n kube-system

kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')

6. Log in to the Dashboard with the token printed above
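The describe output contains several fields besides the token. To print only the token for pasting into the login form, something like the following may help. It is a sketch that assumes the token appears on a line starting with "token:"; dashboard_token is a made-up name.

```shell
# Print only the token value from `kubectl describe secrets` output on stdin.
dashboard_token() {
  awk '$1 == "token:" { print $2 }'
}

# Usage on the master:
#   kubectl describe secrets -n kube-system \
#     $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}') | dashboard_token
```

Note that dashboard-admin is bound to cluster-admin, so this token grants full control of the cluster and should be handled accordingly.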

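1. Node startup error: failed to set bridge addr: "cni0" already has an IP address different from 10.254.2.1/24

Stale data was left behind when the network and DNS were created. Reset the Kubernetes services and the network, then delete the network configuration and the leftover links.

On the node where Pod creation fails, run the following: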

kubeadm reset

systemctl stop kubelet

systemctl stop docker

rm -rf /var/lib/cni/

rm -rf /var/lib/kubelet/*

rm -rf /etc/cni/

ifconfig cni0 down

ifconfig flannel.1 down

ifconfig docker0 down

ip link delete cni0

ip link delete flannel.1

systemctl start docker

# Get the join command (including a token) from the master

kubeadm token create --print-join-command
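To confirm that a node really has this problem before resetting it, compare the address on cni0 with the subnet flannel assigned to the node. This is a sketch under assumptions: it expects flannel to have written its lease to /run/flannel/subnet.env (the usual default location) with a FLANNEL_SUBNET= line, and the helper names are invented for the example.

```shell
# Print FLANNEL_SUBNET from a flannel subnet.env file.
flannel_subnet() {
  sed -n 's/^FLANNEL_SUBNET=//p' "$1"
}

# Print the first IPv4 address/prefix from `ip -4 addr show <dev>` output on stdin.
iface_ipv4() {
  awk '$1 == "inet" { print $2; exit }'
}

# Return 0 when the given interface address equals the flannel subnet gateway.
cni0_matches_flannel() {
  [ "$(flannel_subnet "$1")" = "$2" ]
}

# Usage on the affected node:
#   addr=$(ip -4 addr show cni0 | iface_ipv4)
#   cni0_matches_flannel /run/flannel/subnet.env "$addr" || echo "cni0 is stale: $addr"
```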