7. docker + k8s + KubeSphere: installing Helm and Tiller

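Official documentation

https://kubesphere.io/docs/zh-CN/installation/install-on-k8s/

The official documentation says KubeSphere can run on a low-spec machine, but that is only enough for the bare framework with no applications deployed.

Enabling CI/CD on such a machine exhausts its resources immediately.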

Command review

System commands

systemctl enable docker

systemctl enable docker.service

systemctl start docker

systemctl restart docker

systemctl stop docker

systemctl daemon-reload

systemctl enable kubelet

systemctl enable kubelet.service

systemctl status kubelet

systemctl restart kubelet

kubectl version

kubectl delete node --all

kubectl apply -f ......

watch kubectl get pod -n kube-system -o wide

kubectl get node

kubeadm join ...............

kubeadm init --config=xxxx.yaml --upload-certs

kubectl get pod --all-namespaces

kubeadm token list

kubeadm token delete xxxx.xxxxx

kubeadm token create --print-join-command

kubeadm token create --ttl 0 --print-join-command

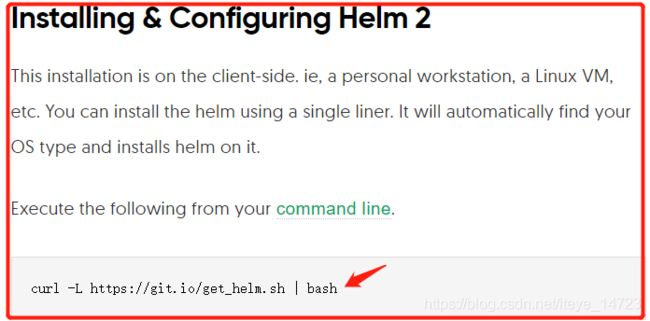

Helm client: automatic installation (version compatibility issues are possible)

Helm installation tutorial

https://devopscube.com/install-configure-helm-kubernetes/

Helm downloads

https://github.com/helm/helm/releases

curl -L https://git.io/get_helm.sh | bash

The installation output looks like this (the download may fail):

[root@node151 ~]# curl -L https://git.io/get_helm.sh | bash

  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
  0     0    0     0    0     0      0      0 --:--:--  0:00:01 --:--:--     0
100  7185  100  7185    0     0   1883      0  0:00:03  0:00:03 --:--:--  4904

Downloading https://get.helm.sh/helm-v2.16.9-linux-amd64.tar.gz

Preparing to install helm and tiller into /usr/local/bin

helm installed into /usr/local/bin/helm    # install location

tiller installed into /usr/local/bin/tiller    # install location

Run 'helm init' to configure helm.

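Helm client: manual installation

Download the archive with wget, or download it with another tool (for example Xunlei) and upload it to the server: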

https://get.helm.sh/helm-v2.16.9-linux-amd64.tar.gz

tar -zxvf helm-v2.16.9-linux-amd64.tar.gz

cp linux-amd64/helm /usr/local/bin

#cp linux-amd64/tiller /usr/local/bin
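The manual steps above can be wrapped in a small script. The following is only a sketch, not part of the original procedure: the function names `helm_tarball_url` and `install_helm_client` are hypothetical, and it assumes `wget` and `tar` are available and that the archive unpacks to `linux-amd64/helm`, as the v2.16.9 archive used here does.

```shell
# Hypothetical helpers; the names are illustrative and not part of Helm.

# Build the download URL for a given Helm release, OS, and architecture.
helm_tarball_url() {
  local version="$1"
  local os="${2:-linux}"
  local arch="${3:-amd64}"
  printf 'https://get.helm.sh/helm-%s-%s-%s.tar.gz\n' "$version" "$os" "$arch"
}

# Download the linux/amd64 archive, unpack it in a temporary directory,
# and copy the helm binary into the target directory.
install_helm_client() {
  local version="$1"
  local dest="${2:-/usr/local/bin}"
  local url workdir
  url="$(helm_tarball_url "$version")"
  workdir="$(mktemp -d)" || return 1
  wget -q -O "$workdir/helm.tar.gz" "$url" || return 1
  tar -zxf "$workdir/helm.tar.gz" -C "$workdir" || return 1
  cp "$workdir/linux-amd64/helm" "$dest/" || return 1
  rm -rf "$workdir"
}
```

A possible invocation would be `install_helm_client v2.16.9`, followed by the version check described next.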

Check the installation:

[root@node151 bin]# helm version

Client: &version.Version{SemVer:"v2.16.9", GitCommit:"8ad7037828e5a0fca1009dabe290130da6368e39", GitTreeState:"clean"}

Error: could not find tiller

Tiller server-side installation

Install socat on node151, node152, and node153:

yum -y install socat

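socat -h    # check that socat is installed

Tutorial

https://devopscube.com/install-configure-helm-kubernetes/

Create the file helm-rbac.yam (copied directly from the tutorial); it defines the permissions Tiller needs: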

apiVersion: v1
kind: ServiceAccount
metadata:
  name: tiller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: tiller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: tiller
    namespace: kube-system
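Because YAML nesting depends on indentation, it can be safer to generate the file from a script than to paste it by hand. The sketch below assumes nothing beyond a POSIX shell; the function name `write_helm_rbac` is hypothetical, and the manifest is the same ServiceAccount plus ClusterRoleBinding shown in this section.

```shell
# Hypothetical helper: write the Tiller RBAC manifest to the path given as $1.
# The quoted heredoc delimiter keeps the content literal (no shell expansion).
write_helm_rbac() {
  cat > "$1" <<'EOF'
apiVersion: v1
kind: ServiceAccount
metadata:
  name: tiller
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: tiller
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
  - kind: ServiceAccount
    name: tiller
    namespace: kube-system
EOF
}
```

For example, `write_helm_rbac helm-rbac.yam` creates the file used in the next step. Note that binding `cluster-admin` gives Tiller full control of the cluster, which is convenient for a test setup but broad for production.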

With helm-rbac.yam created, run:

kubectl apply -f helm-rbac.yam

Output (later, once helm init has run, it can take a few minutes before the Tiller pod and service become visible):

[root@node151 ~]# kubectl apply -f helm-rbac.yam

serviceaccount/tiller unchanged

clusterrolebinding.rbac.authorization.k8s.io/tiller unchanged

Run:

helm init \
  --upgrade \
  --service-account=tiller \
  --tiller-image=registry.cn-hangzhou.aliyuncs.com/google_containers/tiller:v2.16.9 \
  --history-max 300

Or, with an alternative Tiller image:

helm init \
  --upgrade \
  --service-account=tiller \
  --tiller-image=sapcc/tiller:v2.16.9 \
  --history-max 300

Output:

[root@node151 ~]# helm init \

> --upgrade \

> --service-account=tiller \

> --tiller-image=registry.cn-hangzhou.aliyuncs.com/google_containers/tiller:v2.16.9 \

> --history-max 300

Creating /root/.helm/repository

Creating /root/.helm/repository/cache

Creating /root/.helm/repository/local

Creating /root/.helm/plugins

Creating /root/.helm/starters

Creating /root/.helm/cache/archive

Creating /root/.helm/repository/repositories.yaml

Adding stable repo with URL: https://kubernetes-charts.storage.googleapis.com

Adding local repo with URL: http://127.0.0.1:8879/charts

$HELM_HOME has been configured at /root/.helm.

(Tiller is now installed in the cluster:)

Tiller (the Helm server-side component) has been installed into your Kubernetes Cluster.

Please note: by default, Tiller is deployed with an insecure 'allow unauthenticated users' policy.    # printed when running helm init

To prevent this, run `helm init` with the --tiller-tls-verify flag.

For more information on securing your installation see: https://v2.helm.sh/docs/securing_installation/

After a successful installation, a new tiller pod appears in the pod list.

Use watch to monitor its status:

watch kubectl get pod -n kube-system -o wide
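Instead of watching by hand, a script can poll until the pod is up. The sketch below is an illustration only: `wait_until` and `tiller_running` are hypothetical helper names, and `tiller_running` assumes the pod name contains `tiller-deploy`, as in the outputs in this post.

```shell
# Hypothetical helper: run a command repeatedly until it succeeds or a
# timeout expires.
# Usage: wait_until <timeout_seconds> <interval_seconds> <command> [args...]
wait_until() {
  local timeout_s="$1"
  local interval_s="$2"
  shift 2
  local elapsed=0
  while ! "$@"; do
    if [ "$elapsed" -ge "$timeout_s" ]; then
      return 1          # gave up: the command never succeeded in time
    fi
    sleep "$interval_s"
    elapsed=$((elapsed + interval_s))
  done
  return 0
}

# Succeeds once a tiller-deploy pod in kube-system reports Running.
tiller_running() {
  kubectl -n kube-system get pods 2>/dev/null | grep tiller-deploy | grep -q Running
}
```

A call such as `wait_until 300 5 tiller_running && echo "tiller is up"` would poll every 5 seconds for up to 5 minutes. `kubectl -n kube-system rollout status deployment/tiller-deploy` is a built-in alternative that blocks until the deployment is ready.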

If the pod stays stuck in a non-Running state (for example ImagePullBackOff), inspect it with:

kubectl describe pod <pod-name> -n kube-system

For example:

kubectl describe pod tiller-deploy-65c447f567-np4zc -n kube-system

Events:
  Type     Reason          Age                   From               Message
  ----     ------          ----                  ----               -------
  Normal   Scheduled       <unknown>             default-scheduler  Successfully assigned kube-system/tiller-deploy-65c447f567-np4zc to node152
  Normal   SandboxChanged  13m (x6 over 13m)     kubelet, node152   Pod sandbox changed, it will be killed and re-created.
  Warning  Failed          13m (x2 over 13m)     kubelet, node152   Failed to pull image "registry.cn-hangzhou.aliyuncs.com/google_containers/tiller:v2.16.9": rpc error: code = Unknown desc = Error response from daemon: manifest for registry.cn-hangzhou.aliyuncs.com/google_containers/tiller:v2.16.9 not found
  Warning  Failed          13m (x2 over 13m)     kubelet, node152   Error: ErrImagePull
  Warning  Failed          13m (x6 over 13m)     kubelet, node152   Error: ImagePullBackOff
  Normal   Pulling         13m (x3 over 13m)     kubelet, node152   Pulling image "registry.cn-hangzhou.aliyuncs.com/google_containers/tiller:v2.16.9"
  Normal   BackOff         3m48s (x46 over 13m)  kubelet, node152   Back-off pulling image "registry.cn-hangzhou.aliyuncs.com/google_containers/tiller:v2.16.9"
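The "manifest ... not found" message in these events means the requested tag does not exist in that registry, so the pod can never start with that image; re-running helm init with a different --tiller-image is the usual way out. To spot such pods quickly, the pod list can be filtered. This is a sketch: `pods_with_pull_errors` is a hypothetical helper, and it assumes the default single-namespace column layout (NAME READY STATUS ...), so it does not apply to `--all-namespaces` output, where STATUS is the fourth column.

```shell
# Hypothetical helper: read `kubectl get pod` output on stdin and print the
# names of pods whose STATUS column is ErrImagePull or ImagePullBackOff.
# Assumes the columns are NAME READY STATUS ... (single-namespace output).
pods_with_pull_errors() {
  awk '$3 == "ErrImagePull" || $3 == "ImagePullBackOff" { print $1 }'
}
```

It could be used as `kubectl -n kube-system get pods | pods_with_pull_errors`, and each reported pod can then be examined with `kubectl describe pod` as shown above.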

Run:

helm version

Output:

[root@node151 ~]# helm version

Client: &version.Version{SemVer:"v2.16.3", GitCommit:"1ee0254c86d4ed6887327dabed7aa7da29d7eb0d", GitTreeState:"clean"}

Error: could not find tiller

Run:

kubectl get sa -n kube-system |grep tiller

Output:

[root@node151 ~]# kubectl get sa -n kube-system |grep tiller

tiller 1 11m

Run:

kubectl get clusterrolebindings.rbac.authorization.k8s.io -n kube-system |grep tiller

Output:

[root@node151 ~]# kubectl get clusterrolebindings.rbac.authorization.k8s.io -n kube-system |grep tiller

tiller

Run:

kubectl get all --all-namespaces | grep tiller

Output:

[root@node151 ~]# kubectl get all --all-namespaces | grep tiller

kube-system pod/tiller-deploy-797955c678-nl5nv 1/1 Running 0 43m

kube-system service/tiller-deploy ClusterIP 10.96.197.118 <none> 44134/TCP 43m

kube-system deployment.apps/tiller-deploy 1/1 1 1 43m

kube-system replicaset.apps/tiller-deploy-797955c678 1 1 1 43m

Verify that Tiller was installed successfully. Run:

kubectl -n kube-system get pods|grep tiller

Output:

[root@node151 ~]# kubectl -n kube-system get pods|grep tiller

tiller-deploy-797955c678-nl5nv 1/1 Running 0 50m

Monitoring

Run:

watch kubectl get pod -n kube-system -o wide

Output:

NAME                                       READY   STATUS    RESTARTS   AGE     IP              NODE      NOMINATED NODE   READINESS GATES
calico-kube-controllers-589b5f594b-ckfgz   1/1     Running   1          7h22m   10.20.235.4     node153   <none>           <none>
calico-node-msd6f                          1/1     Running   1          7h22m   192.168.5.152   node152   <none>           <none>
calico-node-s9xf6                          1/1     Running   2          7h22m   192.168.5.151   node151   <none>           <none>
calico-node-wcztl                          1/1     Running   1          7h22m   192.168.5.153   node153   <none>           <none>
coredns-7f9c544f75-gmclr                   1/1     Running   2          9h      10.20.223.67    node151   <none>           <none>
coredns-7f9c544f75-t7jh6                   1/1     Running   2          9h      10.20.235.3     node153   <none>           <none>
etcd-node151                               1/1     Running   4          9h      192.168.5.151   node151   <none>           <none>
kube-apiserver-node151                     1/1     Running   6          9h      192.168.5.151   node151   <none>           <none>
kube-controller-manager-node151            1/1     Running   5          9h      192.168.5.151   node151   <none>           <none>
kube-proxy-5t7jg                           1/1     Running   3          9h      192.168.5.151   node151   <none>           <none>
kube-proxy-fqjh2                           1/1     Running   2          8h      192.168.5.152   node152   <none>           <none>
kube-proxy-mbxtx                           1/1     Running   2          8h      192.168.5.153   node153   <none>           <none>
kube-scheduler-node151                     1/1     Running   5          9h      192.168.5.151   node151   <none>           <none>
tiller-deploy-797955c678-nl5nv             1/1     Running   0          40m     10.20.117.197   node152   <none>           <none>

The tiller-deploy pod is now running.

Run:

helm version

Output:

[root@node151 ~]# helm version

Client: &version.Version{SemVer:"v2.16.9", GitCommit:"8ad7037828e5a0fca1009dabe290130da6368e39", GitTreeState:"clean"}

Server: &version.Version{SemVer:"v2.16.9", GitCommit:"8ad7037828e5a0fca1009dabe290130da6368e39", GitTreeState:"clean"}
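A healthy Helm v2 setup reports both a Client and a Server line with the same version, as above; when Tiller is unreachable the Server line is replaced by "Error: could not find tiller". A script can check this automatically. The sketch below is illustrative: `helm_versions_match` is a hypothetical helper that only parses text in the format shown in this post.

```shell
# Hypothetical helper: read `helm version` (Helm v2) output on stdin and
# succeed only if both a Client and a Server line are present and carry
# the same SemVer value.
helm_versions_match() {
  awk '
    # The literal prefix SemVer:" is 8 characters long; the match also
    # includes the closing quote, hence RLENGTH - 9 for the value length.
    /^Client:/ { if (match($0, /SemVer:"[^"]*"/)) client = substr($0, RSTART + 8, RLENGTH - 9) }
    /^Server:/ { if (match($0, /SemVer:"[^"]*"/)) server = substr($0, RSTART + 8, RLENGTH - 9) }
    END { exit !(client != "" && client == server) }
  '
}
```

One way to use it is `helm version 2>&1 | helm_versions_match && echo "client and tiller agree"`; the `2>&1` is there so that an error message from helm does not clutter the terminal separately.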

Reinstalling Tiller

Check the current state:

[root@node151 ~]# kubectl get all --all-namespaces | grep tiller

kube-system pod/tiller-deploy-6974685dbc-qv5kp 0/1 ImagePullBackOff 0 21m

kube-system pod/tiller-deploy-78f458f4f8-zdqkm 0/1 ImagePullBackOff 0 9m9s

kube-system service/tiller-deploy ClusterIP 10.96.7.143 <none> 44134/TCP 21m

kube-system deployment.apps/tiller-deploy 0/1 1 0 21m

kube-system replicaset.apps/tiller-deploy-6974685dbc 1 1 0 21m

kube-system replicaset.apps/tiller-deploy-78f458f4f8 1 1 0 9m9s

Delete the Tiller resources:

[root@node151 ~]# kubectl get all -n kube-system -l app=helm -o name|xargs kubectl delete -n kube-system

pod "tiller-deploy-797955c678-rwhls" deleted

service "tiller-deploy" deleted

deployment.apps "tiller-deploy" deleted

replicaset.apps "tiller-deploy-797955c678" deleted

Check again:

[root@node151 ~]# kubectl get all --all-namespaces | grep tiller

To also remove the local Helm configuration directory, run:

helm reset --remove-helm-home --force

Command summary

helm version

kubectl get pods -n kube-system

kubectl get pod --all-namespaces

kubectl get all --all-namespaces | grep tiller

kubectl get all -n kube-system -l app=helm -o name|xargs kubectl delete -n kube-system

watch kubectl get pod -n kube-system -o wide