In Practice | Crawling the Agent List of an Insurance Broker Site with WebMagic: Crawling the Website List

Xiaoxiao here. This time we put WebMagic to work on a real site, https://member.vobao.com/, and crawl it step by step.

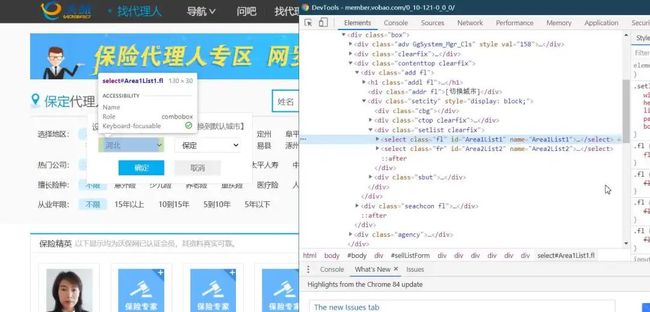

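Analyzing the Site

Open DevTools and watch the network requests. Clicking "Next page" fires a request, which we dissect here.

The request carries a number of parameters; the two we will vary are Area1List1 and Area2List2, which appear to select the first-level and second-level city.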

Testing with Postman

Next, replay the request in Postman; it returns the output below.

The JSON body sent is:

```json
{
  "Area1List1": "10",
  "Area2List2": "121",
  "Search.KeyWord": "",
  "Search.AreaID1": "10",
  "Search.AreaID2": "121",
  "Search.AreaID3": "0",
  "Search.PyCode": "0",
  "Search.CompanySymbol": "0",
  "Search.Year": "0",
  "Search.OrderTp": "1",
  "Search.SearchTp": "1",
  "Search.UserLevel": "0",
  "Search.SortRule": "",
  "Search.PageIndex": "1",
  "Search.PageSize": "10",
  "Search.LastHash": "0",
  "Search.TotalPages": "8",
  "Search.TotalCount": "80",
  "sex": "1",
  "X-Requested-With": "XMLHttpRequest"
}
```

That completes a basic round of testing.
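The same test can be reproduced in plain Java, which is handy for checking the endpoint before wiring it into WebMagic. The sketch below only builds the request with the JDK's java.net.http API (Java 11+). The class name SellerListRequests is hypothetical, and the JSON content type is an assumption, chosen to match the JSON body the crawler sends with HttpRequestBody.json.

```java
import java.net.URI;
import java.net.http.HttpRequest;

/**
 * Hypothetical helper: the Postman test rebuilt as a java.net.http request.
 * It only builds the request; sending it is left to the caller.
 */
final class SellerListRequests {
    static final String ENDPOINT = "https://member.vobao.com/Member/SellerList?Length=6";

    private SellerListRequests() {}

    /** A POST request carrying the given JSON body (JSON content type assumed). */
    static HttpRequest build(String jsonBody) {
        return HttpRequest.newBuilder(URI.create(ENDPOINT))
                .header("Content-Type", "application/json; charset=utf-8")
                .POST(HttpRequest.BodyPublishers.ofString(jsonBody))
                .build();
    }
}
```

To actually send it, something like HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString()).body() returns the response text for comparison with what Postman shows.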

Extracting the Link URLs

Next, extract the links on the page to collect the URLs we need.

The links we want look like http://www.syxdg.cn/, so we pick them out with a regular expression. The extraction code is:

```java
List<String> urls = page.getHtml().links().regex("(http://www.(.*).cn)").all();
```

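The core of the pattern is:

```
http://www.(.*).cn
```

The greedy quantifier .* matches any run of characters between "www." and ".cn". Note that the dots are not escaped, so each "." also matches any single character; the pattern is looser than it looks.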
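To see what the pattern actually accepts, the sketch below runs it over a few sample links with java.util.regex, next to a hypothetical stricter variant (literal dots, no "/" allowed inside the host part). It uses find() and capture group 1, which is assumed to mirror how WebMagic's regex selector treats a pattern that contains a group.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Compares the article's pattern with a hypothetical stricter one. */
final class CnLinkPatterns {
    // The article's pattern: unescaped '.' and a greedy '.*'
    static final Pattern LOOSE = Pattern.compile("(http://www.(.*).cn)");
    // Hypothetical stricter variant: literal dots, host part may not contain '/'
    static final Pattern STRICT = Pattern.compile("(http://www\\.[^/]+\\.cn)");

    private CnLinkPatterns() {}

    /** Returns capture group 1 of the first match in the link, or null if nothing matches. */
    static String firstMatch(Pattern pattern, String link) {
        Matcher m = pattern.matcher(link);
        return m.find() ? m.group(1) : null;
    }
}
```

On "http://www.syxdg.cn/" both patterns return "http://www.syxdg.cn". On "http://www.a.cn/x/b.cn" the greedy .* runs on to the last ".cn" and LOOSE returns the whole link, while STRICT stops at "http://www.a.cn". On "http://wwwxabc.cn" LOOSE still matches because an unescaped "." accepts the "x"; STRICT does not.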

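If no URLs are found, mark the page as skipped so it is not passed to the pipeline:

```java
if (urls == null || urls.isEmpty()) {
    page.setSkip(true);
}
```

Then store the URL list in the page's results under the key "url", where the pipeline will read it:

```java
page.putField("url", urls);
```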

Sending a POST Request with Parameters

The list endpoint must be called with a POST request that carries the parameters in its body:

```java
// count is the first-level city ID (Area1List1), supplied by the loop in main()
Request request = new Request("https://member.vobao.com/Member/SellerList?Length=6");
request.setMethod(HttpConstant.Method.POST);
request.setRequestBody(HttpRequestBody.json(
        "{\n" +
        "  \"Area1List1\":\"" + count + "\",\n" +
        "  \"Area2List2\":\"1\",\n" +
        "  \"Search.KeyWord\":\"\",\n" +
        "  \"Search.AreaID1\":\"-100\",\n" +
        "  \"Search.AreaID2\":\"0\",\n" +
        "  \"Search.AreaID3\":\"0\",\n" +
        "  \"Search.PyCode\":\"0\",\n" +
        "  \"Search.CompanySymbol\":\"0\",\n" +
        "  \"Search.Year\":\"0\",\n" +
        "  \"Search.OrderTp\":\"1\",\n" +
        "  \"Search.SearchTp\":\"1\",\n" +
        "  \"Search.UserLevel\":\"0\",\n" +
        "  \"Search.SortRule\":\"\",\n" +
        "  \"Search.PageIndex\":\"1\",\n" +
        "  \"Search.PageSize\":\"10\",\n" +
        "  \"Search.LastHash\":\"0\",\n" +
        "  \"Search.TotalPages\":\"8\",\n" +
        "  \"Search.TotalCount\":\"80\",\n" +
        "  \"sex\":\"1\",\n" +
        "  \"X-Requested-With\":\"XMLHttpRequest\"\n" +
        "}",
        "utf-8"));

Spider.create(new vobao())
        .addRequest(request)
        .addPipeline(new vobaoMysql())
        .thread(5)   // each page queues only one follow-up request, so a few threads suffice
        .run();
```

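HttpRequestBody.json(...) wraps the JSON string, with its encoding, as the request body; the Request carrying it is handed to the Spider, which sends it. Here count is the first-level city ID (Area1List1), supplied by the loop in the next section.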
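Building the body by string concatenation means hand-escaping every quote, which is easy to get wrong. A sketch of an alternative, assuming the same field names and default values as the request above: keep the fields in an ordered map and serialize that. The SellerListPayload class and its methods are hypothetical, and the minimal JSON writer only handles flat string-to-string maps.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical payload builder for the SellerList POST body. */
final class SellerListPayload {
    private SellerListPayload() {}

    /** The crawler's default fields, with the two area IDs filled in; insertion order is kept. */
    static Map<String, String> fields(int area1, int area2) {
        Map<String, String> f = new LinkedHashMap<>();
        f.put("Area1List1", String.valueOf(area1));
        f.put("Area2List2", String.valueOf(area2));
        f.put("Search.KeyWord", "");
        f.put("Search.AreaID1", "-100");
        f.put("Search.AreaID2", "0");
        f.put("Search.AreaID3", "0");
        f.put("Search.PyCode", "0");
        f.put("Search.CompanySymbol", "0");
        f.put("Search.Year", "0");
        f.put("Search.OrderTp", "1");
        f.put("Search.SearchTp", "1");
        f.put("Search.UserLevel", "0");
        f.put("Search.SortRule", "");
        f.put("Search.PageIndex", "1");
        f.put("Search.PageSize", "10");
        f.put("Search.LastHash", "0");
        f.put("Search.TotalPages", "8");
        f.put("Search.TotalCount", "80");
        f.put("sex", "1");
        f.put("X-Requested-With", "XMLHttpRequest");
        return f;
    }

    /** Serializes a flat string-to-string map as a JSON object. */
    static String toJson(Map<String, String> fields) {
        StringBuilder sb = new StringBuilder("{");
        for (Map.Entry<String, String> e : fields.entrySet()) {
            if (sb.length() > 1) sb.append(',');   // comma before every entry but the first
            sb.append(quote(e.getKey())).append(':').append(quote(e.getValue()));
        }
        return sb.append('}').toString();
    }

    /** Wraps a string in quotes, escaping quotes, backslashes and control characters. */
    private static String quote(String s) {
        StringBuilder sb = new StringBuilder("\"");
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"': sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                default:
                    if (c < 0x20) sb.append(String.format("\\u%04x", (int) c));
                    else sb.append(c);
            }
        }
        return sb.append('"').toString();
    }
}
```

The result would be passed as HttpRequestBody.json(SellerListPayload.toJson(SellerListPayload.fields(count, 1)), "utf-8"). Since the project already depends on Gson, new Gson().toJson(map) on the same map is another way to get an equivalent JSON object.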

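Nested Loops

Cities come in two levels, first-level and second-level, so the crawl needs a nested loop over both, and the inserts happen inside that nested loop as well.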
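In plain Java, the two levels amount to the nested walk sketched below. It is a hypothetical illustration: fetch stands in for one POST request and WebMagic is left out. The maxArea2 cap is an assumption of the sketch, since the crawler itself puts no upper bound on Area2List2. Empty pages are skipped rather than stored, mirroring page.setSkip(true), and the walk moves on to the next Area2List2.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BiFunction;

/** Hypothetical sketch of the nested crawl order. */
final class NestedCrawl {
    private NestedCrawl() {}

    /**
     * @param maxArea1 last Area1List1 value (the crawler's main() loops 1..100)
     * @param maxArea2 last Area2List2 value, an assumed cap so the inner walk terminates
     * @param fetch    returns the links found for (Area1List1, Area2List2)
     * @return all links, in the order they were found
     */
    static List<String> crawl(int maxArea1, int maxArea2,
                              BiFunction<Integer, Integer, List<String>> fetch) {
        List<String> collected = new ArrayList<>();
        for (int area1 = 1; area1 <= maxArea1; area1++) {        // first-level loop
            for (int area2 = 1; area2 <= maxArea2; area2++) {    // second-level loop
                List<String> links = fetch.apply(area1, area2);
                if (links == null || links.isEmpty()) {
                    continue;                                     // like page.setSkip(true): store nothing
                }
                collected.addAll(links);                          // what the pipeline would insert
            }
        }
        return collected;
    }
}
```

For example, with a stub fetch that only returns a link for odd Area2List2 values, crawl(2, 3, fetch) visits (1,1) through (2,3) and collects the links for (1,1), (1,3), (2,1) and (2,3).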

First-Level City Loop

```java
// count is the first-level city ID (Area1List1)
for (int count = 1; count <= 100; count++) {
    System.out.println("count: -----------------" + count);
    Request request = new Request("https://member.vobao.com/Member/SellerList?Length=6");
    request.setMethod(HttpConstant.Method.POST);
    request.setRequestBody(HttpRequestBody.json(
            "{\n" +
            "  \"Area1List1\":\"" + count + "\",\n" +
            "  \"Area2List2\":\"1\",\n" +
            "  \"Search.KeyWord\":\"\",\n" +
            "  \"Search.AreaID1\":\"-100\",\n" +
            "  \"Search.AreaID2\":\"0\",\n" +
            "  \"Search.AreaID3\":\"0\",\n" +
            "  \"Search.PyCode\":\"0\",\n" +
            "  \"Search.CompanySymbol\":\"0\",\n" +
            "  \"Search.Year\":\"0\",\n" +
            "  \"Search.OrderTp\":\"1\",\n" +
            "  \"Search.SearchTp\":\"1\",\n" +
            "  \"Search.UserLevel\":\"0\",\n" +
            "  \"Search.SortRule\":\"\",\n" +
            "  \"Search.PageIndex\":\"1\",\n" +
            "  \"Search.PageSize\":\"10\",\n" +
            "  \"Search.LastHash\":\"0\",\n" +
            "  \"Search.TotalPages\":\"8\",\n" +
            "  \"Search.TotalCount\":\"80\",\n" +
            "  \"sex\":\"1\",\n" +
            "  \"X-Requested-With\":\"XMLHttpRequest\"\n" +
            "}",
            "utf-8"));

    Spider.create(new vobao())
            .addRequest(request)
            .addPipeline(new vobaoMysql())
            .thread(5)   // each page queues only one follow-up request, so a few threads suffice
            .run();      // blocks until this first-level city is done
}
```

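Each iteration takes one first-level city and starts a new spider for it. Because Spider.run() blocks until that spider finishes, the first-level cities are crawled one after another.

Second-Level City Loop

The second-level loop is driven from inside the WebMagic page processor, through the Area2List2 parameter. For each page, process() parses the JSON body of the current request into a RequestInfo object with Gson, increments Area2List2 by one, serializes the object back to JSON, and uses it as the body of the next request: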

```java
// Read the JSON body of the request that produced this page
String jsonRes = new String(page.getRequest().getRequestBody().getBody(), StandardCharsets.UTF_8);
Gson gson = new Gson();
RequestInfo requestInfo = gson.fromJson(jsonRes, RequestInfo.class);

// Move on to the next second-level city
requestInfo.setArea2List2((Integer.parseInt(requestInfo.getArea2List2()) + 1) + "");
System.out.println("Area2List2---------------" + requestInfo.getArea2List2());
String resNewJson = gson.toJson(requestInfo);

// Build the follow-up POST request with the updated body
Request request = new Request("https://member.vobao.com/Member/SellerList?Length=6");
request.setMethod(HttpConstant.Method.POST);
request.setRequestBody(HttpRequestBody.json(resNewJson, "utf-8"));
```

Full Code

The complete code is as follows:

```java
package com.example.demo;

import com.example.demo.db.vobaoMysql;
import com.example.demo.model.RequestInfo;
import com.google.gson.Gson;
import us.codecraft.webmagic.Page;
import us.codecraft.webmagic.Request;
import us.codecraft.webmagic.Site;
import us.codecraft.webmagic.Spider;
import us.codecraft.webmagic.model.HttpRequestBody;
import us.codecraft.webmagic.processor.PageProcessor;
import us.codecraft.webmagic.utils.HttpConstant;

import java.nio.charset.StandardCharsets;
import java.util.List;

public class vobao implements PageProcessor {

    private static final String LIST_URL = "https://member.vobao.com/Member/SellerList?Length=6";

    // Retry a few times on network errors; no pause between requests
    private final Site site = Site.me().setRetryTimes(3).setSleepTime(0);

    /**
     * Process the page: extract the agents' site URLs and queue the request
     * for the next second-level city.
     *
     * @param page page
     */
    @Override
    public void process(Page page) {
        // Read the JSON body of the request that produced this page
        String jsonRes = new String(page.getRequest().getRequestBody().getBody(), StandardCharsets.UTF_8);
        Gson gson = new Gson();
        RequestInfo requestInfo = gson.fromJson(jsonRes, RequestInfo.class);

        // Advance to the next second-level city (nothing here caps Area2List2)
        requestInfo.setArea2List2((Integer.parseInt(requestInfo.getArea2List2()) + 1) + "");
        System.out.println("Area2List2---------------" + requestInfo.getArea2List2());

        Request request = new Request(LIST_URL);
        request.setMethod(HttpConstant.Method.POST);
        request.setRequestBody(HttpRequestBody.json(gson.toJson(requestInfo), "utf-8"));

        // Collect the agents' site URLs, e.g. http://www.syxdg.cn
        List<String> urls = page.getHtml().links().regex("(http://www.(.*).cn)").all();
        if (urls == null || urls.isEmpty()) {
            page.setSkip(true);   // nothing to store: the pipeline is not called for this page
        }
        page.putField("url", urls);
        page.addTargetRequest(request);
    }

    /**
     * Get the site settings.
     *
     * @return site
     * @see Site
     */
    @Override
    public Site getSite() {
        return this.site;
    }

    public static void main(String[] args) {
        // First-level loop: one spider per Area1List1 value
        for (int count = 1; count <= 100; count++) {
            System.out.println("count: -----------------" + count);
            Request request = new Request(LIST_URL);
            request.setMethod(HttpConstant.Method.POST);
            request.setRequestBody(HttpRequestBody.json(
                    "{\n" +
                    "  \"Area1List1\":\"" + count + "\",\n" +
                    "  \"Area2List2\":\"1\",\n" +
                    "  \"Search.KeyWord\":\"\",\n" +
                    "  \"Search.AreaID1\":\"-100\",\n" +
                    "  \"Search.AreaID2\":\"0\",\n" +
                    "  \"Search.AreaID3\":\"0\",\n" +
                    "  \"Search.PyCode\":\"0\",\n" +
                    "  \"Search.CompanySymbol\":\"0\",\n" +
                    "  \"Search.Year\":\"0\",\n" +
                    "  \"Search.OrderTp\":\"1\",\n" +
                    "  \"Search.SearchTp\":\"1\",\n" +
                    "  \"Search.UserLevel\":\"0\",\n" +
                    "  \"Search.SortRule\":\"\",\n" +
                    "  \"Search.PageIndex\":\"1\",\n" +
                    "  \"Search.PageSize\":\"10\",\n" +
                    "  \"Search.LastHash\":\"0\",\n" +
                    "  \"Search.TotalPages\":\"8\",\n" +
                    "  \"Search.TotalCount\":\"80\",\n" +
                    "  \"sex\":\"1\",\n" +
                    "  \"X-Requested-With\":\"XMLHttpRequest\"\n" +
                    "}",
                    "utf-8"));

            Spider.create(new vobao())
                    .addRequest(request)
                    .addPipeline(new vobaoMysql())
                    .thread(5)   // each page queues only one follow-up request, so a few threads suffice
                    .run();      // blocks until this first-level city is done
        }
    }
}
```

MySQL

Next comes the MySQL pipeline. Since each page hands it a List of URLs, the pipeline has to insert the whole list at once.

```java
package com.example.demo.db;

import us.codecraft.webmagic.ResultItems;
import us.codecraft.webmagic.Task;
import us.codecraft.webmagic.pipeline.Pipeline;

import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.PreparedStatement;
import java.sql.SQLException;
import java.util.List;

public class vobaoMysql implements Pipeline {

    // Connection details: placeholders, fill in your own
    private static final String JDBC_URL = "jdbc:mysql://<host>:<port>/JqBxInfo";
    private static final String DB_USER = "<user>";
    private static final String DB_PASSWORD = "<password>";

    /**
     * Insert every URL extracted from one page into the url table as one JDBC batch.
     *
     * @param resultItems resultItems
     * @param task task
     */
    @Override
    public void process(ResultItems resultItems, Task task) {
        List<String> list = resultItems.get("url");
        if (list == null || list.isEmpty()) {
            return;
        }
        String sql = "insert into url values(?)";
        // One connection per page, closed automatically by try-with-resources
        try (Connection connection = DriverManager.getConnection(JDBC_URL, DB_USER, DB_PASSWORD);
             PreparedStatement statement = connection.prepareStatement(sql)) {
            for (String url : list) {
                statement.setString(1, url);   // bound parameter instead of string concatenation
                statement.addBatch();
            }
            int[] res = statement.executeBatch();
            System.out.println("executed " + res.length + " inserts");
        } catch (SQLException e) {
            e.printStackTrace();   // report failures instead of silently swallowing them
        }
    }
}
```

Hence the loop over the list: every URL extracted from a page is inserted.
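Instead of one INSERT statement per URL, the whole list can also go into a single multi-row INSERT with one placeholder per URL, a form MySQL accepts. The helper below only builds that SQL string; the class name MultiRowInsert is hypothetical, and, like the pipeline above, it assumes the url table has a single column.

```java
import java.util.Collections;

/** Hypothetical helper: SQL for a multi-row INSERT into a one-column table. */
final class MultiRowInsert {
    private MultiRowInsert() {}

    /** For example, sql("url", 3) gives "insert into url values (?),(?),(?)". */
    static String sql(String table, int rows) {
        if (rows <= 0) {
            throw new IllegalArgumentException("rows must be positive");
        }
        // One "(?)" group per row; the URLs are bound afterwards with setString
        return "insert into " + table + " values " + String.join(",", Collections.nCopies(rows, "(?)"));
    }
}
```

With a PreparedStatement built from this string, each URL is bound with setString(i + 1, urls.get(i)) and a single executeUpdate() inserts them all. The table name is concatenated into the SQL, so only a trusted constant should be passed. MySQL Connector/J can also perform this rewrite for JDBC batches when the connection property rewriteBatchedStatements=true is set.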

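Results

The console prints the following log:

Checking the database:

This completes collecting the list-page information.

Tomorrow's post will cover collecting information from the policy pages.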

小明菜市场
