Phoenix5.0 安装部署

文章目录

- 概述

- Phoenix5.0 shell 安装

- Phoenix5.0 shell 使用

- 增删改查

- phoenix shell下创建view映射hbase表

- 退出Phoenix

- psql.py 执行外部SQL文件

- 创建表

- 导入数据

- 复杂SQL:

- 计数

- 平均值

- 聚合

- SQuirrel客户端安装

概述

现有hbase的查询工具有很多如:Hive,Tez,Impala,Shark/Spark,Phoenix等。phoenix是一个在hbase上面实现的基于hadoop的OLTP技术,具有低延迟、事务性、可使用sql、提供jdbc接口的特点。 而且phoenix还提供了hbase二级索引的解决方案,丰富了hbase查询的多样性,继承了hbase海量数据快速随机查询的特点。但是在生产环境中,不可以用在OLTP中。在线事务处理的环境中,需要低延迟,而Phoenix在查询HBase时,虽然做了一些优化,但延迟还是不小。所以依然是用在OLAT中,再将结果返回存储下来。

Phoenix完全使用Java编写,作为HBase内嵌的JDBC驱动。Phoenix查询引擎会将SQL查询转换为一个或多个HBase扫描,并编排执行以生成标准的JDBC结果集。直接使用HBase API、协同处理器与自定义过滤器,对于简单查询来说,其性能量级是毫秒,对于百万级别的行数来说,其性能量级是秒。

环境说明:

hbase:2.1.5

springboot:2.1.1.RELEASE

hadoop :2.8.5

java: 8+

Phoenix:5.0.0

hadoop环境:Hadoop 2.8.5 完全分布式HA高可用安装(二)–环境搭建

hbase环境:hbase 2.1 环境搭建–完全分布式模式 Advanced - Fully Distributed

Phoenix5.0 shell 安装

官网:http://phoenix.apache.org/index.html

- 下载

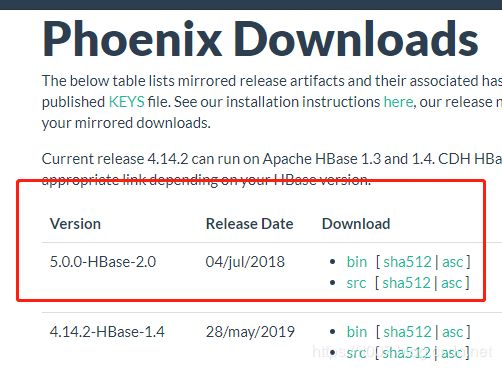

到下载页面 http://phoenix.apache.org/download.html 下载最新的Phoenix5.0.0-HBase-2.0版本。

- 上传解压安装包

我们使用hbase 2.1 环境搭建–完全分布式模式 Advanced - Fully Distributed 文中搭建的hbase环境。将apache-phoenix-5.0.0-HBase-2.0-bin.tar.gz上传到每一台机器,然后解压。我这里的目录是/data/program/apache-phoenix-5.0.0-HBase-2.0-bin。 - jar包拷贝

将phoenix-5.0.0-HBase-2.0-server.jar这个jar包拷贝到每一台机器的hbase的lib目录下

cd /data/program/apache-phoenix-5.0.0-HBase-2.0-bin

cp phoenix-5.0.0-HBase-2.0-server.jar ../hbase-2.1.5/lib/

- 重启hbase集群

cd /data/program/hbase-2.1.5/bin

./stop-hbase.sh

./start-hbase.sh

Phoenix5.0 shell 使用

验证是否部署成功,这里使用Phoenix提供的命令行工具。

cd /data/program/apache-phoenix-5.0.0-HBase-2.0-bin/bin

[root@node4 bin]# ./sqlline.py node1,node2,node3:2181

Setting property: [incremental, false]

Setting property: [isolation, TRANSACTION_READ_COMMITTED]

issuing: !connect jdbc:phoenix:node1,node2,node3:2181 none none org.apache.phoenix.jdbc.PhoenixDriver

Connecting to jdbc:phoenix:node1,node2,node3:2181

19/07/22 14:16:01 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Connected to: Phoenix (version 5.0)

Driver: PhoenixEmbeddedDriver (version 5.0)

Autocommit status: true

Transaction isolation: TRANSACTION_READ_COMMITTED

Building list of tables and columns for tab-completion (set fastconnect to true to skip)...

133/133 (100%) Done

Done

sqlline version 1.2.0

0: jdbc:phoenix:node1,node2,node3:2181> !tables

+------------+--------------+-------------+---------------+----------+------------+----------------------------+-----------------+--------------+-----------------+---------------+-----------+

| TABLE_CAT | TABLE_SCHEM | TABLE_NAME | TABLE_TYPE | REMARKS | TYPE_NAME | SELF_REFERENCING_COL_NAME | REF_GENERATION | INDEX_STATE | IMMUTABLE_ROWS | SALT_BUCKETS | MULTI_TEN |

+------------+--------------+-------------+---------------+----------+------------+----------------------------+-----------------+--------------+-----------------+---------------+-----------+

| | SYSTEM | CATALOG | SYSTEM TABLE | | | | | | false | null | false |

| | SYSTEM | FUNCTION | SYSTEM TABLE | | | | | | false | null | false |

| | SYSTEM | LOG | SYSTEM TABLE | | | | | | true | 32 | false |

| | SYSTEM | SEQUENCE | SYSTEM TABLE | | | | | | false | null | false |

| | SYSTEM | STATS | SYSTEM TABLE | | | | | | false | null | false |

+------------+--------------+-------------+---------------+----------+------------+----------------------------+-----------------+--------------+-----------------+---------------+-----------+

可以看到Phoenix已经安装部署成功。

注意 ./sqlline.py node1,node2,node3:2181 后面跟的hbase使用的zk的地址。

!tables可以查看所有的表,这里列出来的表是上面再启动phoenix时创建的系统表。

phoenix语法:http://phoenix.apache.org/language/index.html

增删改查

下面演示一下创建表,插入语句,查询的操作。

0: jdbc:phoenix:node1,node2,node3:2181> create table person (id integer not null primary key,name varchar,age integer);

No rows affected (2.581 seconds)

0: jdbc:phoenix:node1,node2,node3:2181> upsert into person values (1,'zhangsan' ,18);

1 row affected (0.315 seconds)

0: jdbc:phoenix:node1,node2,node3:2181> select * from PERSON

. . . . . . . . . . . . . . . . . . . > ;

+-----+-----------+------+

| ID | NAME | AGE |

+-----+-----------+------+

| 1 | zhangsan | 18 |

+-----+-----------+------+

1 row selected (0.237 seconds)

注意Phoenix是区分大小写的,默认列名表名会全部转为大写。如果想要小写需要使用双引号来标识。

我们在hbase shell 中查看一下Phoenix创建的表:

hbase(main):007:0> list

TABLE

PERSON

SYSTEM.CATALOG

SYSTEM.FUNCTION

SYSTEM.LOG

SYSTEM.MUTEX

SYSTEM.SEQUENCE

SYSTEM.STATS

test

8 row(s)

Took 0.0812 seconds

=> ["PERSON", "SYSTEM.CATALOG", "SYSTEM.FUNCTION", "SYSTEM.LOG", "SYSTEM.MUTEX", "SYSTEM.SEQUENCE", "SYSTEM.STATS", "test"]

hbase(main):008:0> scan 'PERSON'

ROW COLUMN+CELL

\x80\x00\x00\x01 column=0:\x00\x00\x00\x00, timestamp=1563776971049, value=x

\x80\x00\x00\x01 column=0:\x80\x0B, timestamp=1563776971049, value=zhangsan

\x80\x00\x00\x01 column=0:\x80\x0C, timestamp=1563776971049, value=\x80\x00\x00\x12

1 row(s)

Took 0.0541 seconds

上面PERSON表只有一个rowkey,有三列,默认列族名称是0,这里rowkey,列族和列都是Phoenix处理过的。

可以看到,hbase shell可以把Phoenix的表都列出来,而且都是可以操作的。

phoenix shell下创建view映射hbase表

一切正常,但是我们之前已经在hbase中通过hbase shell 创建了一个test表,这里没有展示,如何是好?

先看一下我们test表都有哪些数据

hbase(main):013:0> scan 'test'

ROW COLUMN+CELL

row1 column=cf:a, timestamp=1563441734398, value=value1

row1 column=cf:age, timestamp=1563779499842, value=12

row2 column=cf:a, timestamp=1563451278532, value=value2a

row2 column=cf:age, timestamp=1563779513308, value=13

row2 column=cf:b, timestamp=1563441738877, value=value2

row3 column=cf:c, timestamp=1563441741609, value=value3

3 row(s)

我们需要创建一个view来匹配原有的hbase创建的表

CREATE view "test" (

"ROW" VARCHAR primary key,

"cf"."a" VARCHAR,

"cf"."b" VARCHAR,

"cf"."c" VARCHAR,

"cf"."age" SMALLINT

);

注意这里的age是SMALLINT,因为age序列化后只有两个字节。Phoenix与hbase数据类型映射比较苛刻,如果使用INTEGER会报错,因为INTEGER是4个字节。

读者可以试一下将age设置成INTEGER,会报错:Error: ERROR 201 (22000): Illegal data. Expected length of at least 4 bytes, but had 2 (state=22000,code=201)。

Phoenix数据类型参考 http://phoenix.apache.org/language/datatypes.html

完整示例:

0: jdbc:phoenix:node1,node2,node3:2181> CREATE view "test" (

. . . . . . . . . . . . . . . . . . . > "ROW" VARCHAR primary key,

. . . . . . . . . . . . . . . . . . . > "cf"."a" VARCHAR,

. . . . . . . . . . . . . . . . . . . > "cf"."b" VARCHAR,

. . . . . . . . . . . . . . . . . . . > "cf"."c" VARCHAR,

. . . . . . . . . . . . . . . . . . . > "cf"."age" SMALLINT

. . . . . . . . . . . . . . . . . . . > );

No rows affected (0.142 seconds)

0: jdbc:phoenix:node1,node2,node3:2181> !table

+------------+--------------+-------------+---------------+----------+------------+----------------------------+-----------------+--------------+-----------------+---------------+-----------+

| TABLE_CAT | TABLE_SCHEM | TABLE_NAME | TABLE_TYPE | REMARKS | TYPE_NAME | SELF_REFERENCING_COL_NAME | REF_GENERATION | INDEX_STATE | IMMUTABLE_ROWS | SALT_BUCKETS | MULTI_TEN |

+------------+--------------+-------------+---------------+----------+------------+----------------------------+-----------------+--------------+-----------------+---------------+-----------+

| | SYSTEM | CATALOG | SYSTEM TABLE | | | | | | false | null | false |

| | SYSTEM | FUNCTION | SYSTEM TABLE | | | | | | false | null | false |

| | SYSTEM | LOG | SYSTEM TABLE | | | | | | true | 32 | false |

| | SYSTEM | SEQUENCE | SYSTEM TABLE | | | | | | false | null | false |

| | SYSTEM | STATS | SYSTEM TABLE | | | | | | false | null | false |

| | | PERSON | TABLE | | | | | | false | null | false |

| | | test | VIEW | | | | | | false | null | false |

+------------+--------------+-------------+---------------+----------+------------+----------------------------+-----------------+--------------+-----------------+---------------+-----------+

0: jdbc:phoenix:node1,node2,node3:2181> select * from "test";

+-------+----------+---------+---------+---------+

| ROW | a | b | c | age |

+-------+----------+---------+---------+---------+

| row1 | value1 | | | -20174 |

| row2 | value2a | value2 | | -20173 |

| row3 | | | value3 | null |

+-------+----------+---------+---------+---------+

3 rows selected (0.265 seconds)

虽然age使用SMALLINT进行映射不会报错,但是结果明显不是我们想要的结果,具体数据类型的对应后续在研究,这里先简单使用VARCHAR来映射。

删除视图:drop view "test"。重新创建视图:

0: jdbc:phoenix:node1,node2,node3:2181> drop view "test"

. . . . . . . . . . . . . . . . . . . > ;

No rows affected (0.013 seconds)

0: jdbc:phoenix:node1,node2,node3:2181> CREATE view "test" (

. . . . . . . . . . . . . . . . . . . > "ROW" VARCHAR primary key,

. . . . . . . . . . . . . . . . . . . > "cf"."a" VARCHAR,

. . . . . . . . . . . . . . . . . . . > "cf"."b" VARCHAR,

. . . . . . . . . . . . . . . . . . . > "cf"."c" VARCHAR,

. . . . . . . . . . . . . . . . . . . > "cf"."age" VARCHAR

. . . . . . . . . . . . . . . . . . . > );

No rows affected (0.123 seconds)

0: jdbc:phoenix:node1,node2,node3:2181> select * from "test";

+-------+----------+---------+---------+------+

| ROW | a | b | c | age |

+-------+----------+---------+---------+------+

| row1 | value1 | | | 12 |

| row2 | value2a | value2 | | 13 |

| row3 | | | value3 | |

+-------+----------+---------+---------+------+

3 rows selected (0.384 seconds)

这样age展示就正常了。

退出Phoenix

命令:!exit

psql.py 执行外部SQL文件

创建表

除了上面直接使用命令行,也可以使用psql.py命令来操作外部文件,一些复杂的SQL和数据这样操作更加方便。

cd /data/program/apache-phoenix-5.0.0-HBase-2.0-bin/bin

./psql.py node1,node2,node3:2181 ../examples/WEB_STAT.sql

上面命令执行完成,就在Phoenix创建了一个表WEB_STAT 。../examples/WEB_STAT.sql是压缩包自带的demo:

CREATE TABLE IF NOT EXISTS WEB_STAT (

HOST CHAR(2) NOT NULL,

DOMAIN VARCHAR NOT NULL,

FEATURE VARCHAR NOT NULL,

DATE DATE NOT NULL,

USAGE.CORE BIGINT,

USAGE.DB BIGINT,

STATS.ACTIVE_VISITOR INTEGER

CONSTRAINT PK PRIMARY KEY (HOST, DOMAIN, FEATURE, DATE)

);

导入数据

命令:./psql.py -t WEB_STAT node1,node2,node3:2181 ../examples/WEB_STAT.csv

…/examples/WEB_STAT.csv 文件是数据文件,上面命令将其导入到表WEB_STAT 中。

[root@node4 bin]# cat ../examples/WEB_STAT.csv

NA,Salesforce.com,Login,2013-01-01 01:01:01,35,42,10

EU,Salesforce.com,Reports,2013-01-02 12:02:01,25,11,2

EU,Salesforce.com,Reports,2013-01-02 14:32:01,125,131,42

NA,Apple.com,Login,2013-01-01 01:01:01,35,22,40

NA,Salesforce.com,Dashboard,2013-01-03 11:01:01,88,66,44

NA,Salesforce.com,Login,2013-01-04 06:01:21,3,52,1

EU,Apple.com,Mac,2013-01-01 01:01:01,35,22,34

NA,Salesforce.com,Login,2013-01-04 11:01:11,23,56,45

EU,Salesforce.com,Reports,2013-01-05 03:11:12,75,22,3

EU,Salesforce.com,Dashboard,2013-01-06 05:04:05,12,22,43

EU,Salesforce.com,Reports,2013-01-05 04:14:12,475,252,53

NA,Google.com,Analytics,2013-01-07 06:01:01,23,1,57

NA,Apple.com,Mac,2013-01-02 04:01:01,345,255,155

NA,Google.com,Search,2013-01-08 08:01:01,345,242,46

NA,Salesforce.com,Login,2013-01-08 14:11:01,345,242,10

NA,Salesforce.com,Reports,2013-01-09 16:33:01,35,42,15

NA,Salesforce.com,Reports,2013-01-09 17:36:01,355,432,315

EU,Apple.com,Store,2013-01-03 01:01:01,345,722,170

NA,Salesforce.com,Login,2013-01-10 01:01:01,345,252,150

EU,Google.com,Search,2013-01-09 01:01:01,395,922,190

NA,Apple.com,Login,2013-01-04 01:01:01,135,2,110

NA,Salesforce.com,Dashboard,2013-01-11 01:01:01,335,32,30

NA,Apple.com,iPad,2013-01-05 01:01:01,85,2,18

EU,Salesforce.com,Login,2013-01-12 01:01:01,5,62,150

NA,Google.com,Search,2013-01-10 01:05:01,835,282,80

NA,Apple.com,iPad,2013-01-06 01:01:01,35,22,10

EU,Salesforce.com,Reports,2013-01-13 08:04:04,355,52,5

NA,Google.com,Analytics,2013-01-11 01:02:01,7,2,7

NA,Google.com,Search,2013-01-12 01:01:01,8,7,6

NA,Apple.com,iPad,2013-01-07 01:01:01,9,27,7

NA,Salesforce.com,Dashboard,2013-01-14 04:07:01,5,2,9

NA,Salesforce.com,Reports,2013-01-15 04:09:01,65,26,6

NA,Salesforce.com,Reports,2013-01-15 07:09:01,655,426,46

EU,Google.com,Analytics,2013-01-13 08:06:01,25,2,6

NA,Apple.com,Mac,2013-01-08 01:01:01,3,2,10

NA,Salesforce.com,Login,2013-01-16 01:01:01,785,782,80

NA,Google.com,Analytics,2013-01-14 01:01:01,65,252,56

NA,Salesforce.com,Login,2013-01-17 01:01:01,355,242,33

NA,Salesforce.com,Login,2013-01-17 02:20:01,1235,2422,243

执行查询:

0: jdbc:phoenix:node1,node2,node3:2181> select * from WEB_STAT;

+-------+-----------------+------------+--------------------------+-------+-------+-----------------+

| HOST | DOMAIN | FEATURE | DATE | CORE | DB | ACTIVE_VISITOR |

+-------+-----------------+------------+--------------------------+-------+-------+-----------------+

| EU | Apple.com | Mac | 2013-01-01 01:01:01.000 | 35 | 22 | 34 |

| EU | Apple.com | Store | 2013-01-03 01:01:01.000 | 345 | 722 | 170 |

| EU | Google.com | Analytics | 2013-01-13 08:06:01.000 | 25 | 2 | 6 |

| EU | Google.com | Search | 2013-01-09 01:01:01.000 | 395 | 922 | 190 |

| EU | Salesforce.com | Dashboard | 2013-01-06 05:04:05.000 | 12 | 22 | 43 |

| EU | Salesforce.com | Login | 2013-01-12 01:01:01.000 | 5 | 62 | 150 |

| EU | Salesforce.com | Reports | 2013-01-02 12:02:01.000 | 25 | 11 | 2 |

| EU | Salesforce.com | Reports | 2013-01-02 14:32:01.000 | 125 | 131 | 42 |

复杂SQL:

计数

0: jdbc:phoenix:node1,node2,node3:2181> select count(1) from WEB_STAT;

+-----------+

| COUNT(1) |

+-----------+

| 39 |

+-----------+

1 row selected (0.148 seconds)

平均值

0: jdbc:phoenix:node1,node2,node3:2181> select avg(core) from WEB_STAT;

+------------------+

| AVG(USAGE.CORE) |

+------------------+

| 217.0769 |

+------------------+

聚合

0: jdbc:phoenix:node1,node2,node3:2181> select domain,count(1) c from WEB_STAT group by domain order by c ;

+-----------------+-----+

| DOMAIN | C |

+-----------------+-----+

| Google.com | 8 |

| Apple.com | 9 |

| Salesforce.com | 22 |

+-----------------+-----+

3 rows selected (0.126 seconds)

0: jdbc:phoenix:node1,node2,node3:2181> select domain,count(1) c, avg(core),sum(db) from WEB_STAT group by domain order by c ;

+-----------------+-----+------------------+----------------+

| DOMAIN | C | AVG(USAGE.CORE) | SUM(USAGE.DB) |

+-----------------+-----+------------------+----------------+

| Google.com | 8 | 212.875 | 1710 |

| Apple.com | 9 | 114.1111 | 1076 |

| Salesforce.com | 22 | 260.7272 | 5668 |

+-----------------+-----+------------------+----------------+

3 rows selected (0.247 seconds)

更多phoenix语法请参考官网:http://phoenix.apache.org/language/index.html

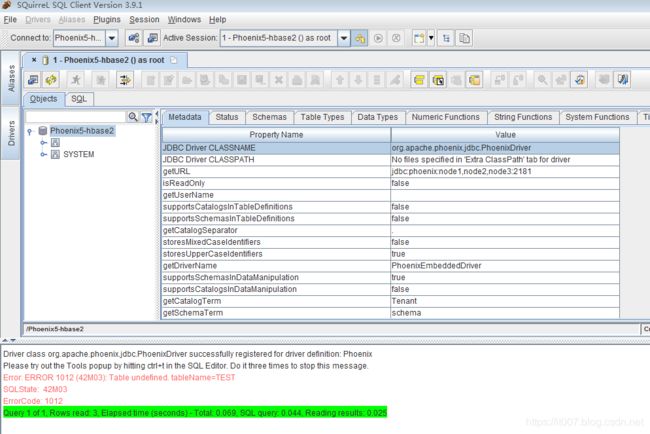

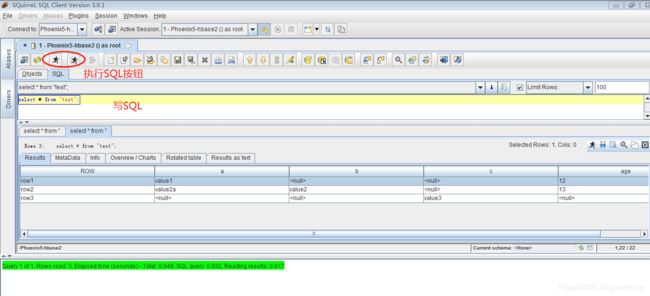

SQuirrel客户端安装

SQuirrel是一个图形化界面工具。由于Phoenix是一个JDBC驱动程序,因此与此类工具的集成是无缝的。通过SQuirrel,您可以在SQL选项卡中发出SQL语句(创建表,插入数据,运行查询),并在“对象”选项卡中检查表元数据(即列表,列,主键和类型)。

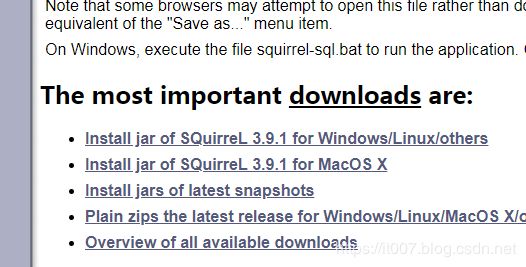

下载地址:http://squirrel-sql.sourceforge.net/#installation

下载下来是squirrel-sql-3.9.1-standard.jar。

我们的hbase和hadoop等都是安装在虚拟机中的,这里squirrel客户端我们安装在宿主机Windows机器上。

使用命令java -jar squirrel-sql-3.9.1-standard.jar 进行安装,全部默认点击next即可。

安装完成后,默认是安装在C:\Program Files\squirrel-sql-3.9.1文件夹。

配置Phoenix客户端并启动

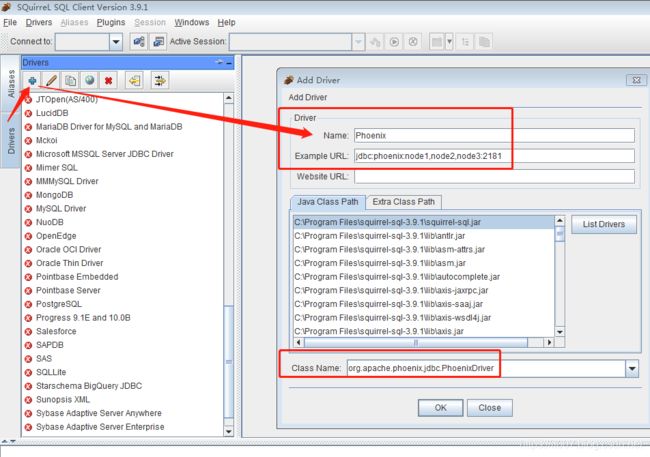

- 配置Phoenix客户端jar包

将apache-phoenix-5.0.0-HBase-2.0-bin.tar.gz中的phoenix-5.0.0-HBase-2.0-client.jarjar包拷贝到squirrel-sql-3.9.1\lib文件夹下。

然后双击squirrel-sql.bat 文件启动squirrel-sql-client。

- 配置驱动

这里name随便写,

URL填jdbc:phoenix:node1,node2,node3:2181,

className填org.apache.phoenix.jdbc.PhoenixDriver

点击OK保存。

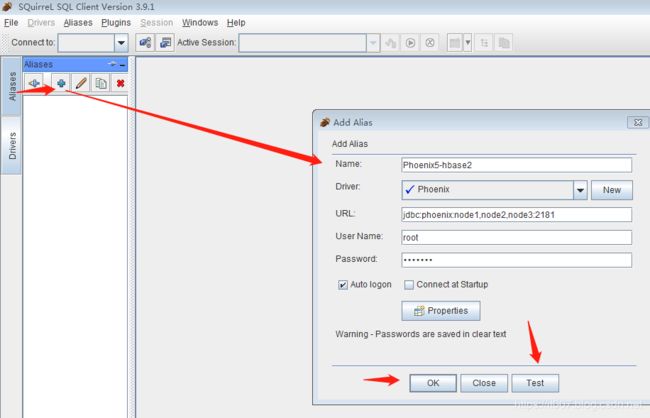

- 配置alias别名

name随便写

driver选刚才创建的驱动

URL自动显示

username和password是我们虚拟机登录密码

auto logon 前面的复选框选中

点击Test按钮测试链接是否可用

点击OK按钮保存

之后进入squirrel-sql-client界面。

注意:SQL区分大小写,默认会转为大写,如果我们想要小写,需要加双引号。