Issue 40: Interrupts in the ARM Linux Kernel (10)

Author: Luo Yuzhe (罗宇哲), Intelligent Software Research Center, Institute of Software, Chinese Academy of Sciences

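In the previous issue we introduced the key functions that add work items in the ARM Linux kernel. In this issue we continue with the other key functions related to workqueues.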

I. Key Workqueue-Related Functions in the ARM Linux Kernel

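From Issue 38 we know that a worker_pool data structure maintains two collections of workers: a list of idle workers and a busy hash table of workers. In fact, each worker corresponds to one kernel thread [1], and that worker thread runs the function worker_thread(). Its source can be found in the file openeuler/kernel/blob/kernel-4.19/kernel/workqueue.c (unless stated otherwise, all code below comes from workqueue.c):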

static int worker_thread(void *__worker)

{

struct worker *worker = __worker;

struct worker_pool *pool = worker->pool;

/* tell the scheduler that this is a workqueue worker */

set_pf_worker(true);

woke_up:

spin_lock_irq(&pool->lock);

/* am I supposed to die? */

if (unlikely(worker->flags & WORKER_DIE)) {

spin_unlock_irq(&pool->lock);

WARN_ON_ONCE(!list_empty(&worker->entry));

set_pf_worker(false);

set_task_comm(worker->task, "kworker/dying");

ida_simple_remove(&pool->worker_ida, worker->id);

worker_detach_from_pool(worker);

kfree(worker);

return 0;

}

worker_leave_idle(worker); // the worker leaves the idle state

recheck:

/* no more worker necessary? */

if (!need_more_worker(pool))

goto sleep;

/* do we need to manage? */

if (unlikely(!may_start_working(pool)) && manage_workers(worker))

goto recheck;

/*

* ->scheduled list can only be filled while a worker is

* preparing to process a work or actually processing it.

* Make sure nobody diddled with it while I was sleeping.

*/

WARN_ON_ONCE(!list_empty(&worker->scheduled));

/*

* Finish PREP stage. We're guaranteed to have at least one idle

* worker or that someone else has already assumed the manager

* role. This is where @worker starts participating in concurrency

* management if applicable and concurrency management is restored

* after being rebound. See rebind_workers() for details.

*/

worker_clr_flags(worker, WORKER_PREP | WORKER_REBOUND);

do {

struct work_struct *work =

list_first_entry(&pool->worklist,

struct work_struct, entry);

pool->watchdog_ts = jiffies;

if (likely(!(*work_data_bits(work) & WORK_STRUCT_LINKED)))

{ // the work item is not linked to a following one

/* optimization path, not strictly necessary */

process_one_work(worker, work); /* process the work item */

if (unlikely(!list_empty(&worker->scheduled)))

process_scheduled_works(worker); /* process the work items on the scheduled list */

} else { // the work item is linked to a following one

move_linked_works(work, &worker->scheduled, NULL); /* move the linked work items onto the scheduled list */

process_scheduled_works(worker);

}

} while (keep_working(pool));

sleep:

/*

* pool->lock is held and there's no work to process and no need to

* manage, sleep. Workers are woken up only while holding

* pool->lock or from local cpu, so setting the current state

* before releasing pool->lock is enough to prevent losing any

* event.

*/

worker_enter_idle(worker); // the worker enters the idle state

__set_current_state(TASK_IDLE);

spin_unlock_irq(&pool->lock);

schedule();

goto woke_up;

}

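worker_thread() walks worklist, the list of pending work items in the worker_pool data structure, and processes the items on it. In the Linux kernel, the likely() macro tells the compiler that a condition is expected to be true, so the compiled code places the statements guarded by the if directly after the preceding instructions, which helps instruction-cache prefetching and speeds up execution. The unlikely() macro is the opposite: it optimizes for a condition that is expected to be false, so that when an else branch exists, the instructions of the else branch are the ones that get prefetched. The definition of WORK_STRUCT_LINKED can be found in the file openeuler/kernel/blob/kernel-4.19/include/linux/workqueue.h: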

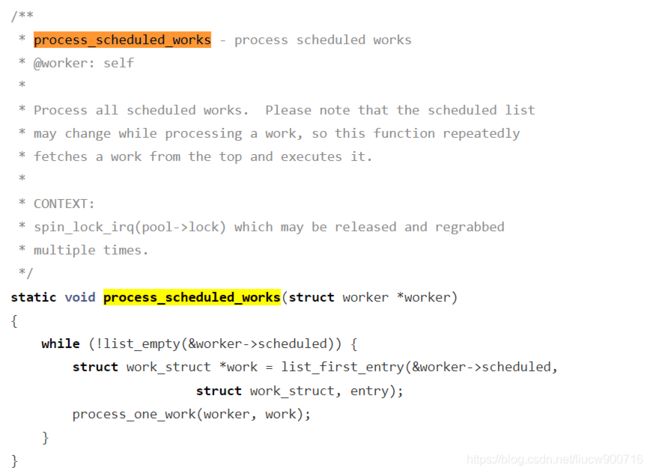
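As a rough user-space illustration of the two ideas above, the sketch below defines likely() and unlikely() on top of the GCC/Clang builtin __builtin_expect() and uses them to test a "linked" flag bit. The hint_* names are hypothetical, and the bit position 3 is an assumption made for the demo; the authoritative value is the one in the header shown above.

```c
#include <assert.h>

/* Branch hints built on the GCC/Clang builtin __builtin_expect(). */
#define likely(x)   __builtin_expect(!!(x), 1)
#define unlikely(x) __builtin_expect(!!(x), 0)

/* ASSUMPTION: bit 3 stands in for the "linked" flag; check the header. */
#define HINT_LINKED_BIT 3
#define HINT_LINKED     (1UL << HINT_LINKED_BIT)

enum hint_path { HINT_PATH_SINGLE = 1, HINT_PATH_LINKED = 2 };

/* Pick the processing path for a work item from its data word. */
enum hint_path hint_pick_path(unsigned long data)
{
    if (likely(!(data & HINT_LINKED)))
        return HINT_PATH_SINGLE;   /* expected case, laid out as fall-through */
    return HINT_PATH_LINKED;       /* rare case: linked work items */
}

/* The hints never change the result of a test, only the code layout. */
int hint_same_result(unsigned long data)
{
    int plain = !(data & HINT_LINKED);
    int hinted = likely(!(data & HINT_LINKED)) ? 1 : 0;

    assert(plain == hinted);
    return plain == hinted;
}
```

The point of the sketch is that the hint is purely an optimization: removing likely() from hint_pick_path() gives the same return values.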

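The WORK_STRUCT_LINKED bit indicates whether a work item is linked to the one that follows it. If it is not linked (by far the most common case), worker_thread() calls process_one_work() to process the work item; process_one_work() is described in detail later. When the worker's scheduled list is not empty, worker_thread() also calls process_scheduled_works(), which walks the work items on the worker's scheduled list and calls process_one_work() on each of them.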
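The loop performed by process_scheduled_works() can be sketched in user space as follows. This is a simplified model and not the kernel source: the psw_* types and functions are hypothetical, a singly linked queue stands in for the kernel's list_head, and "processing" an item only records its id.

```c
#include <assert.h>
#include <stddef.h>

#define PSW_MAX 8

struct psw_work {
    struct psw_work *next;
    int id;
};

struct psw_worker {
    struct psw_work *head;   /* stands in for worker->scheduled */
    struct psw_work *tail;
    int order[PSW_MAX];      /* ids in the order they were processed */
    int processed;
};

/* Append a work item to the worker's scheduled list. */
void psw_schedule(struct psw_worker *wk, struct psw_work *w)
{
    w->next = NULL;
    if (wk->tail)
        wk->tail->next = w;
    else
        wk->head = w;
    wk->tail = w;
}

/* Stand-in for process_one_work(): dequeue the item, then "run" it. */
static void psw_process_one_work(struct psw_worker *wk, struct psw_work *w)
{
    assert(w == wk->head);
    wk->head = w->next;
    if (!wk->head)
        wk->tail = NULL;
    w->next = NULL;
    if (wk->processed < PSW_MAX)
        wk->order[wk->processed] = w->id;
    wk->processed++;
}

/* Keep taking the first scheduled item until the list is empty. */
void psw_process_scheduled_works(struct psw_worker *wk)
{
    while (wk->head)
        psw_process_one_work(wk, wk->head);
}
```

Because the stand-in for process_one_work() removes the item it handles, the while loop terminates once every scheduled item has been processed, in FIFO order.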

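When work items are linked, move_linked_works() is called to add them to the scheduled list. move_linked_works() moves a series of work items that must be executed consecutively onto the list passed in as a parameter, so that they can be scheduled for execution. Its source code is as follows: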

/**

* move_linked_works - move linked works to a list

* @work: start of series of works to be scheduled

* @head: target list to append @work to

* @nextp: out parameter for nested worklist walking

*

* Schedule linked works starting from @work to @head. Work series to

* be scheduled starts at @work and includes any consecutive work with

* WORK_STRUCT_LINKED set in its predecessor.

*/

static void move_linked_works(struct work_struct *work, struct list_head *head,

struct work_struct **nextp)

{

struct work_struct *n;

/*

* Linked worklist will always end before the end of the list,

* use NULL for list head.

*/

list_for_each_entry_safe_from(work, n, NULL, entry) {

list_move_tail(&work->entry, head); /* remove entry from its current list and append it to the tail of the list at head */

if (!(*work_data_bits(work) & WORK_STRUCT_LINKED))

break;

}

/*

* If we're already inside safe list traversal and have moved

* multiple works to the scheduled queue, the next position

* needs to be updated.

*/

if (nextp)

*nextp = n;

}
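To make the "series of linked works" concrete, here is a small user-space sketch of the same idea. It is a simplification under stated assumptions: the mlw_* names are hypothetical, a boolean field replaces the WORK_STRUCT_LINKED bit, the series always starts at the head of the source list, and the nextp out-parameter is omitted.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

struct mlw_work {
    struct mlw_work *next;
    int id;
    bool linked;            /* true: the next item belongs to the same series */
};

struct mlw_list {
    struct mlw_work *head;
    struct mlw_work *tail;
};

/* Append one item to the tail of a list. */
void mlw_add_tail(struct mlw_list *l, struct mlw_work *w)
{
    w->next = NULL;
    if (l->tail)
        l->tail->next = w;
    else
        l->head = w;
    l->tail = w;
}

/*
 * Move the series that starts at the head of src to the tail of dst.
 * The series ends with the first item whose linked flag is clear.
 * Returns the number of items moved.
 */
int mlw_move_linked_works(struct mlw_list *src, struct mlw_list *dst)
{
    int moved = 0;

    assert(src != NULL && dst != NULL);
    while (src->head) {
        struct mlw_work *w = src->head;
        bool linked = w->linked;   /* read before the item is relinked */

        src->head = w->next;
        if (!src->head)
            src->tail = NULL;
        mlw_add_tail(dst, w);
        moved++;
        if (!linked)
            break;
    }
    return moved;
}
```

With a pending list of items 1 (linked), 2 (linked), 3 and 4, one call moves items 1 to 3 and leaves item 4 pending.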

Next we look closely at process_one_work(), which carries out the main part of work item processing. Its code is as follows:

/**

* process_one_work - process single work

* @worker: self

* @work: work to process

*

* Process @work. This function contains all the logics necessary to

* process a single work including synchronization against and

* interaction with other workers on the same cpu, queueing and

* flushing. As long as context requirement is met, any worker can

* call this function to process a work.

*

* CONTEXT:

* spin_lock_irq(pool->lock) which is released and regrabbed.

*/

static void process_one_work(struct worker *worker, struct work_struct *work)

__releases(&pool->lock)

__acquires(&pool->lock)

{

struct pool_workqueue *pwq = get_work_pwq(work);

struct worker_pool *pool = worker->pool;

bool cpu_intensive = pwq->wq->flags & WQ_CPU_INTENSIVE;

int work_color;

struct worker *collision;

……

/*

* A single work shouldn't be executed concurrently by

* multiple workers on a single cpu. Check whether anyone is

* already processing the work. If so, defer the work to the

* currently executing one.

*/

collision = find_worker_executing_work(pool, work);

if (unlikely(collision)) {

move_linked_works(work, &collision->scheduled, NULL); /* another worker is already processing this work item: move it to that worker's scheduled list */

return;

}

/* claim and dequeue */

debug_work_deactivate(work);

hash_add(pool->busy_hash, &worker->hentry, (unsigned long)work); /* add the worker to the busy hash table, keyed by the work item */

worker->current_work = work;

worker->current_func = work->func; // set the worker's current work

worker->current_pwq = pwq;

……

/*

* CPU intensive works don't participate in concurrency management.

* They're the scheduler's responsibility. This takes @worker out

* of concurrency management and the next code block will chain

* execution of the pending work items.

*/

if (unlikely(cpu_intensive))

worker_set_flags(worker, WORKER_CPU_INTENSIVE);

/*

* Wake up another worker if necessary. The condition is always

* false for normal per-cpu workers since nr_running would always

* be >= 1 at this point. This is used to chain execution of the

* pending work items for WORKER_NOT_RUNNING workers such as the

* UNBOUND and CPU_INTENSIVE ones.

*/

if (need_more_worker(pool))

wake_up_worker(pool);

……

worker->current_func(work); // execute the current work item

……

/* we're done with it, release */

hash_del(&worker->hentry);

worker->current_work = NULL;

worker->current_func = NULL;

worker->current_pwq = NULL;

……

}

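process_one_work() first checks whether another worker in the given worker's worker_pool is already executing the work item. If so, it appends the pending work item to that worker's scheduled list and returns. As we saw in worker_thread(), after a worker finishes a work item it calls process_scheduled_works() to process any work items on its scheduled list. Deferring the work item in this way prevents several workers on the same processor from executing the same work item concurrently. If no other worker is executing the work item, the worker passed in as a parameter is added to the worker_pool's busy hash table, and the work item becomes that worker's current work.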
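The claim-or-defer decision described above can be sketched in user space as follows. This is only a model: the col_* names are hypothetical, a linear scan over a small array replaces the kernel's busy hash lookup, and a fixed-size array replaces the collision worker's scheduled list.

```c
#include <assert.h>
#include <stddef.h>

#define COL_NR_WORKERS   4
#define COL_MAX_DEFERRED 4

typedef void (*col_fn)(void *);

struct col_work { col_fn func; };

struct col_worker {
    struct col_work *current_work;            /* NULL when not executing */
    col_fn current_func;
    struct col_work *deferred[COL_MAX_DEFERRED];
    int nr_deferred;
};

struct col_pool { struct col_worker workers[COL_NR_WORKERS]; };

void col_noop(void *arg) { (void)arg; }

void col_pool_init(struct col_pool *pool)
{
    for (int i = 0; i < COL_NR_WORKERS; i++) {
        pool->workers[i].current_work = NULL;
        pool->workers[i].current_func = NULL;
        pool->workers[i].nr_deferred = 0;
    }
}

/* Find a worker that is currently executing this exact work item. */
struct col_worker *col_find_executing(struct col_pool *pool, struct col_work *work)
{
    for (int i = 0; i < COL_NR_WORKERS; i++) {
        struct col_worker *w = &pool->workers[i];

        if (w->current_work == work && w->current_func == work->func)
            return w;
    }
    return NULL;
}

/* Returns 1 if self claimed the work, 0 if it was deferred to a collision. */
int col_claim_or_defer(struct col_pool *pool, struct col_worker *self,
                       struct col_work *work)
{
    struct col_worker *collision = col_find_executing(pool, work);

    if (collision) {
        assert(collision->nr_deferred < COL_MAX_DEFERRED);
        collision->deferred[collision->nr_deferred++] = work;
        return 0;
    }
    self->current_work = work;     /* "claim": mark self as busy with it */
    self->current_func = work->func;
    return 1;
}

/* Done with the current work item: the worker is no longer busy. */
void col_release(struct col_worker *self)
{
    self->current_work = NULL;
    self->current_func = NULL;
}
```

If worker 0 has claimed a work item and worker 1 is handed the same item, the item ends up on worker 0's deferred list and worker 1 returns without running it.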

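CPU-intensive work is a special kind of work: it occupies the processor for a long time and may therefore block the work queued behind it. For CPU-intensive work, the workqueue assigns a worker to execute it, takes that worker out of the worker_pool's concurrency management, and then uses another worker to process the subsequent work items [1].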
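The effect of taking a worker out of concurrency management can be modelled with two counters. The sketch below is an assumption-laden simplification: the cpu_* names are hypothetical, nr_pending replaces the worklist, and "need another worker" is modelled as "work is pending and no worker is counted as running".

```c
#include <assert.h>
#include <stdbool.h>

struct cpu_pool {
    int nr_pending;   /* work items waiting on the pool's worklist */
    int nr_running;   /* workers counted by concurrency management */
};

struct cpu_worker {
    struct cpu_pool *pool;
    bool cpu_intensive;
};

/* Another worker is needed when work is pending and nobody counts as running. */
bool cpu_need_more_worker(const struct cpu_pool *pool)
{
    return pool->nr_pending > 0 && pool->nr_running == 0;
}

/* Mark the worker CPU intensive: it no longer counts as running. */
void cpu_set_intensive(struct cpu_worker *w)
{
    if (w->cpu_intensive)
        return;
    assert(w->pool->nr_running > 0);
    w->cpu_intensive = true;
    w->pool->nr_running--;
}

/* Clear the mark: the worker counts as running again. */
void cpu_clr_intensive(struct cpu_worker *w)
{
    if (!w->cpu_intensive)
        return;
    w->cpu_intensive = false;
    w->pool->nr_running++;
}
```

With two pending items and one running worker, no extra worker is needed; once that worker is marked CPU intensive the running count drops to zero and the check asks for another worker to be woken.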

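The worker_pool is responsible for adding and removing workers dynamically. A worker is in one of three states [1]:

- Idle: not executing any work;

- Running: executing work;

- Suspended: sleeping while executing work.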

worker_enter_idle() (called from worker_thread()) puts a worker into the idle state: it updates the idle-worker count in the worker_pool and adds the worker to the worker_pool's idle list. Its code is as follows:

/**

* worker_enter_idle - enter idle state

* @worker: worker which is entering idle state

*

* @worker is entering idle state. Update stats and idle timer if

* necessary.

*

* LOCKING:

* spin_lock_irq(pool->lock).

*/

static void worker_enter_idle(struct worker *worker)

{

struct worker_pool *pool = worker->pool;

if (WARN_ON_ONCE(worker->flags & WORKER_IDLE) ||

WARN_ON_ONCE(!list_empty(&worker->entry) &&

(worker->hentry.next || worker->hentry.pprev)))

return;

/* can't use worker_set_flags(), also called from create_worker() */

worker->flags |= WORKER_IDLE;

pool->nr_idle++; // update the idle-worker count

worker->last_active = jiffies;

/* idle_list is LIFO */

list_add(&worker->entry, &pool->idle_list); // add the worker to the idle list

if (too_many_workers(pool) && !timer_pending(&pool->idle_timer))

mod_timer(&pool->idle_timer, jiffies + IDLE_WORKER_TIMEOUT);

/*

* Sanity check nr_running. Because unbind_workers() releases

* pool->lock between setting %WORKER_UNBOUND and zapping

* nr_running, the warning may trigger spuriously. Check iff

* unbind is not in progress.

*/

WARN_ON_ONCE(!(pool->flags & POOL_DISASSOCIATED) &&

pool->nr_workers == pool->nr_idle &&

atomic_read(&pool->nr_running));

}

worker_leave_idle() (called from worker_thread()) takes a worker out of the idle state: it decrements the worker_pool's idle count and removes the worker from the idle list:

/**

* worker_leave_idle - leave idle state

* @worker: worker which is leaving idle state

*

* @worker is leaving idle state. Update stats.

*

* LOCKING:

* spin_lock_irq(pool->lock).

*/

static void worker_leave_idle(struct worker *worker)

{

struct worker_pool *pool = worker->pool;

if (WARN_ON_ONCE(!(worker->flags & WORKER_IDLE)))

return;

worker_clr_flags(worker, WORKER_IDLE);

pool->nr_idle--; // decrement the idle count

list_del_init(&worker->entry); // remove the worker from the idle list

}
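The bookkeeping done by the two functions above (a flag, a counter and a LIFO idle list) can be tried out in user space with the sketch below. The idle_* names are hypothetical, a singly linked stack replaces the kernel's list_head, and the idle timer and the sanity checks on nr_running are left out.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

struct idle_worker {
    struct idle_worker *next_idle;
    bool idle;
    int id;
};

struct idle_pool {
    struct idle_worker *idle_top;   /* most recently idled worker first */
    int nr_idle;
};

/* Enter the idle state: set the flag, bump the count, push LIFO. */
void idle_enter(struct idle_pool *pool, struct idle_worker *w)
{
    if (w->idle)                    /* the kernel warns here and returns */
        return;
    w->idle = true;
    pool->nr_idle++;
    w->next_idle = pool->idle_top;
    pool->idle_top = w;
}

/* Leave the idle state: clear the flag, drop the count, unlink. */
void idle_leave(struct idle_pool *pool, struct idle_worker *w)
{
    struct idle_worker **pp;

    if (!w->idle)                   /* the kernel warns here and returns */
        return;
    assert(pool->nr_idle > 0);
    w->idle = false;
    pool->nr_idle--;
    for (pp = &pool->idle_top; *pp; pp = &(*pp)->next_idle) {
        if (*pp == w) {
            *pp = w->next_idle;
            break;
        }
    }
    w->next_idle = NULL;
}
```

The LIFO order means the worker that went idle most recently sits at the front of the list, so workers further back are the ones that have been idle longest.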

Worker scheduling follows these rules [1]:

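- If the worker_pool has pending work, keep at least one worker available to process it;

- If a running worker goes into the suspended state while executing work, wake up an idle worker so that the other work can still be executed;

- If there is work to process and more than one worker is running, let the surplus workers go idle;

- If there is no work to process, all workers go idle.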
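The rules above can be read as two predicates over the number of pending work items and the number of running workers. The following sketch is a simplified model with hypothetical sched_* names; the thresholds are an assumption chosen to match the rules as stated, and the kernel's own helpers operate on the worklist and an atomic counter instead of plain integers.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

struct sched_pool {
    int nr_pending;   /* pending work items in the pool */
    int nr_running;   /* workers currently running (not idle, not asleep) */
};

/*
 * Rules 1 and 2: work is pending but no worker is running (for example
 * because the running worker went to sleep), so an idle worker must be woken.
 */
bool sched_need_more_worker(const struct sched_pool *p)
{
    assert(p != NULL);
    return p->nr_pending > 0 && p->nr_running == 0;
}

/*
 * Rules 3 and 4, asked by a worker that has just finished a work item:
 * keep going only if work is pending and it is not a surplus worker.
 * A false result means "go idle".
 */
bool sched_keep_working(const struct sched_pool *p)
{
    assert(p != NULL);
    return p->nr_pending > 0 && p->nr_running <= 1;
}
```

For example, with three pending items and two running workers, sched_keep_working() is false for the worker that asks, so it goes idle and the pool settles back to one running worker.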

The details of worker scheduling can be seen in the complete code of worker_thread().

II. Conclusion

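In this issue we introduced the key workqueue-related functions in the ARM Linux kernel. In the next issue we will introduce system calls in the ARM Linux kernel.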

References

- [1] Yu Huabing (余华兵), 《Linux内核深度解析》, 2019.