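FTRL-Proximal (Follow-the-Regularized-Leader Proximal) is an online learning algorithm proposed by Google. It performs well on convex optimization problems with non-smooth regularizers such as the $l_1$ norm. Traditional batch algorithms cannot efficiently handle large-scale or streaming data, yet in many internet applications such as advertising, samples arrive one at a time and the model parameters must be updated for each incoming sample. Google proposed FTRL-Proximal for this setting and described an engineering implementation. When an online algorithm updates the parameters from a single sample, the gradient direction is not the global one and therefore carries error. FTRL handles this well: it preserves model accuracy while producing sparser solutions, which reduces memory use and computation at prediction time.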

Principle

FTRL computes each new weight vector by solving (1),

$$\begin{equation}W^{(t+1)} = \mathop{\arg\min}_{W} \{G^{(1:t)}W+\lambda_1||W||_1+\frac{1}{2}\lambda_2||W||_2^2+\frac{1}{2}\sum_{s=1}^{t}\sigma^{(s)}||W-W^{(s)}||_2^2\}\tag{1}\end{equation}$$

where $G^{(1:t)}=\sum_{s=1}^{t}G^{(s)}$ is the sum of the gradients so far, $\sigma^{(s)}=\frac{1}{\eta^{(s)}}-\frac{1}{\eta^{(s-1)}}$, and $\sigma^{(1:t)}=\sum_{s=1}^{t}\sigma^{(s)}=\frac{1}{\eta^{(t)}}$. Expanding the squared norms in (1) gives

$$\begin{equation}W^{(t+1)} = \mathop{\arg\min}_{W} \{(G^{(1:t)}-\sum_{s=1}^{t}\sigma^{(s)}W^{(s)})W+\lambda_1||W||_1+\frac{1}{2}(\lambda_2+\sum_{s=1}^{t}\sigma^{(s)})||W||_2^2+\frac{1}{2}\sum_{s=1}^{t}\sigma^{(s)}||W^{(s)}||_2^2\}\tag{2}\end{equation}$$

The term $\frac{1}{2}\sum_{s=1}^{t}\sigma^{(s)}||W^{(s)}||_2^2$ in (2) does not depend on $W$ and can be treated as a constant. Let $Z^{(t)}=G^{(1:t)}-\sum_{s=1}^{t}\sigma^{(s)}W^{(s)}$; then (2) becomes

$$\begin{equation}W^{(t+1)} = \mathop{\arg\min}_{W} \{Z^{(t)}W+\lambda_1||W||_1+\frac{1}{2}(\lambda_2+\sum_{s=1}^{t}\sigma^{(s)})||W||_2^2\}\tag{3}\end{equation}$$
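The step from (1) to (3) can be checked numerically. The following is a minimal sketch, assuming random made-up gradients, past iterates, and positive weights $\sigma^{(s)}$ (all values and names are illustrative): it evaluates the objective of (1), with weight $\sigma^{(s)}$ on the $s$-th proximal term, and the objective of (3) at several points and confirms that they differ only by the constant dropped in between, so both have the same minimizer.

```python
import numpy as np

rng = np.random.default_rng(0)
t, d = 5, 3                        # hypothetical: 5 past rounds, 3 features
lam1, lam2 = 0.1, 0.2              # illustrative regularization strengths
G = rng.normal(size=(t, d))        # gradients G^(1), ..., G^(t)
W_hist = rng.normal(size=(t, d))   # past iterates W^(1), ..., W^(t)
sigma = rng.uniform(0.1, 1.0, t)   # positive weights sigma^(1), ..., sigma^(t)


def objective_1(W):
    """Objective of (1): linear term, L1, L2 and the proximal terms."""
    prox = 0.5 * np.sum(sigma * np.sum((W - W_hist) ** 2, axis=1))
    return G.sum(axis=0) @ W + lam1 * np.abs(W).sum() + 0.5 * lam2 * (W @ W) + prox


def objective_3(W):
    """Objective of (3): the same problem with the constant term dropped."""
    Z = G.sum(axis=0) - sigma @ W_hist
    return Z @ W + lam1 * np.abs(W).sum() + 0.5 * (lam2 + sigma.sum()) * (W @ W)


# The dropped term: 0.5 * sum_s sigma^(s) * ||W^(s)||^2
constant = 0.5 * np.sum(sigma * np.sum(W_hist ** 2, axis=1))
gaps = [objective_1(W) - objective_3(W) for W in rng.normal(size=(4, d))]
print(np.allclose(gaps, constant))
```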

The objective is separable, so it splits into one problem per coordinate,

$$\begin{equation}w^{(t+1)}_i = \mathop{\arg\min}_{w_i} \{z^{(t)}_i w_i+\lambda_1|w_i|+\frac{1}{2}(\lambda_2+\sum_{s=1}^{t}\sigma^{(s)})w_i^2\}\tag{4}\end{equation}$$
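One way to solve (4) is through the subgradient optimality condition. The sketch below uses the shorthand $a=\lambda_2+\sum_{s=1}^{t}\sigma^{(s)}$ (introduced here only for brevity and assumed positive) and drops the indices on $z_i^{(t)}$ and $w_i$:

$$\begin{aligned}
0 &\in z+\lambda_1\,\partial|w|+a\,w,\\
w>0:&\quad w=-\frac{z+\lambda_1}{a},\ \text{which requires } z<-\lambda_1,\\
w<0:&\quad w=-\frac{z-\lambda_1}{a},\ \text{which requires } z>\lambda_1,\\
w=0:&\quad 0\in z+\lambda_1[-1,1],\ \text{i.e. } |z|\le\lambda_1.
\end{aligned}$$

The two nonzero cases combine into $w=-(z-\lambda_1\,\mathrm{sign}(z))/a$ whenever $|z|>\lambda_1$.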

Solving each one-dimensional problem gives the closed form

$$\begin{equation}w_i^{(t+1)}=\begin{cases}0, & \text{if } |z_i^{(t)}|\le\lambda_1\\-(\lambda_2+\sum_{s=1}^{t}\sigma^{(s)})^{-1}(z_i^{(t)}-\lambda_1\,\mathrm{sign}(z_i^{(t)})), & \text{otherwise}\end{cases}\tag{5}\end{equation}$$
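The per-coordinate minimizer of (4) can be sanity-checked against a brute-force search. This is a minimal sketch under stated assumptions: `ftrl_coordinate_min` and `brute_force_min` are hypothetical helper names, `a` stands for $\lambda_2+\sum_{s=1}^{t}\sigma^{(s)}$, and the test values are arbitrary.

```python
def ftrl_coordinate_min(z, lam1, a):
    """Minimize z*w + lam1*|w| + 0.5*a*w**2 over w by soft-thresholding (a > 0)."""
    if abs(z) <= lam1:
        return 0.0  # the L1 term dominates, so the weight is exactly zero
    sign = 1.0 if z > 0 else -1.0
    return -(z - lam1 * sign) / a


def brute_force_min(z, lam1, a, lo=-5.0, hi=5.0, steps=20001):
    """Grid search over [lo, hi] for the same one-dimensional objective."""
    best_w, best_val = lo, float("inf")
    for k in range(steps):
        w = lo + (hi - lo) * k / (steps - 1)
        val = z * w + lam1 * abs(w) + 0.5 * a * w * w
        if val < best_val:
            best_w, best_val = w, val
    return best_w


for z in (-2.0, -0.05, 0.0, 0.3, 1.5):
    closed = ftrl_coordinate_min(z, lam1=0.1, a=1.5)
    grid = brute_force_min(z, lam1=0.1, a=1.5)
    print(z, closed, grid)
```

For every `z` the two values should agree up to the grid spacing, and any `z` with $|z|\le\lambda_1$ yields a weight of exactly zero, which is where the sparsity comes from.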

The learning rate is set separately for each coordinate,

$$\begin{equation}\eta_{i}^{(t)}=\frac{\alpha}{\beta+\sqrt{\sum_{s=1}^t (g_i^{(s)})^2}}\tag{6}\end{equation}$$

where $g_i^{(s)}$ is the gradient with respect to $w_i$, the $i$-th coordinate of $W$, at time step $s$.
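As a small illustration of (6), the sketch below computes the per-coordinate learning rates from a gradient history. The function name `per_coordinate_learning_rate` and the sample gradients are made up for this example.

```python
import numpy as np


def per_coordinate_learning_rate(grad_history, alpha, beta):
    """Evaluate (6): eta_i = alpha / (beta + sqrt(sum_s g_i^(s)^2)) for every coordinate i."""
    grad_history = np.asarray(grad_history, dtype=float)  # shape: (time steps, coordinates)
    sum_sq = np.sum(grad_history ** 2, axis=0)
    return alpha / (beta + np.sqrt(sum_sq))


# Two time steps, two coordinates; the second coordinate has seen no gradient yet.
grads = [[3.0, 0.0], [4.0, 0.0]]
eta = per_coordinate_learning_rate(grads, alpha=1.0, beta=1.0)
print(eta)
```

With these numbers the first coordinate has accumulated $3^2+4^2=25$, so its rate drops to $1/(1+5)=1/6$, while the untouched second coordinate keeps the initial rate $\alpha/\beta=1$. Frequently updated features therefore take smaller steps than rare ones.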

Implementation

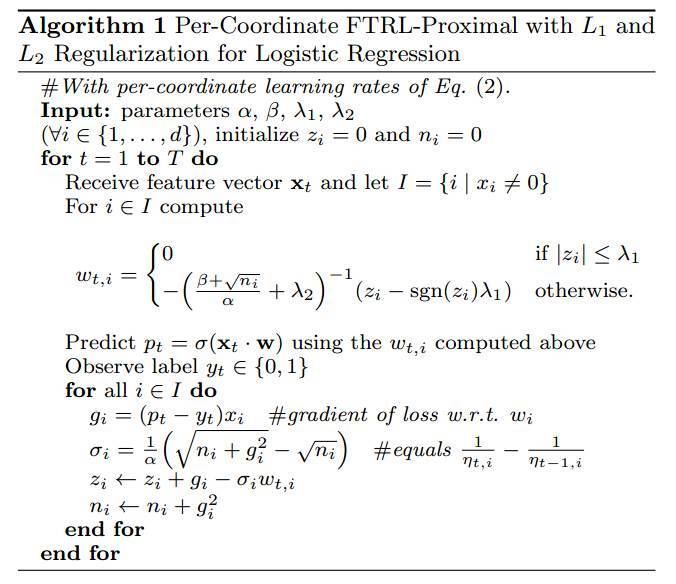

The code below implements FTRL. `FTRLProximal` follows the pseudocode of Algorithm 1, and `FTRLProximal_TF` relies on TensorFlow's built-in optimizer `tf.train.FtrlOptimizer`.

```python
# -*- coding:utf-8 -*-
# Written against the TensorFlow 1.x API (tf.Session, tf.placeholder, tf.train.FtrlOptimizer).
import math

import numpy as np
import tensorflow as tf
from sklearn.datasets import load_breast_cancer
from sklearn.model_selection import train_test_split


class FTRLProximal(object):
    def __init__(self, alpha, beta, L1, L2, D):
        super().__init__()
        self.alpha = alpha
        self.beta = beta
        self.L1 = L1  # L1 regularization coefficient
        self.L2 = L2  # L2 regularization coefficient
        self.D = D  # feature dimension
        self.N = [0.0] * D  # per-coordinate sum of squared gradients
        self.Z = [0.0] * D  # per-coordinate z accumulator
        self.W = [0.0] * D  # weights

    def predict(self, x):
        """
        :param x: input sample; the value at index 0 is 1.0 (bias term)
        """
        wTx = 0.0
        for j, x_j in enumerate(x):
            wTx += self.W[j] * x_j
        # bounded sigmoid function
        return 1.0 / (1.0 + math.exp(-max(min(wTx, 35.0), -35.0)))

    def update(self, x, y):
        """
        :param x: input sample
        :param y: label
        """
        p = self.predict(x)
        error = p - y  # derivative of the log loss with respect to the logit
        for i, x_i in enumerate(x):
            g_i = error * x_i
            sigma_i = (math.sqrt(self.N[i] + g_i * g_i) - math.sqrt(self.N[i])) / self.alpha
            self.Z[i] += g_i - sigma_i * self.W[i]
            self.N[i] += g_i * g_i
            sign = -1.0 if self.Z[i] < 0 else 1.0  # sign of z_i
            if sign * self.Z[i] <= self.L1:
                # w[i] vanishes due to L1 regularization
                self.W[i] = 0.0
            else:
                self.W[i] = (sign * self.L1 - self.Z[i]) / ((self.beta + math.sqrt(self.N[i])) / self.alpha + self.L2)

    def loss(self, y, y_):
        """
        :param y: label
        :param y_: predicted probability
        """
        p = max(min(y_, 1. - 10e-15), 10e-15)  # keep 0 < p < 1
        # NOTE: the original listing is truncated from this point up to the last
        # lines of test(). The return statement, train() and the start of test()
        # are a reconstruction, not the author's original code.
        return -math.log(p) if y == 1.0 else -math.log(1.0 - p)

    def train(self, X_train, y_train):
        for i, (x, y) in enumerate(zip(X_train, y_train)):
            loss = self.loss(y, self.predict(x))  # loss before this sample's update
            self.update(x, y)
            print("Round {}: {}".format(i, loss))

    def test(self, X_test, y_test):
        count = 0
        for x, y in zip(X_test, y_test):
            y_ = self.predict(x)
            predict = 1.0 if y_ > 0.5 else 0.0
            if predict == y:
                count += 1
        return count / X_test.shape[0]


class FTRLProximal_TF(object):
    def __init__(self, sess, D):
        super().__init__()
        self.placeholders = {}
        self.sess = sess
        self.D = D
        self.optimizer = tf.train.FtrlOptimizer(
            learning_rate=0.03,
            l1_regularization_strength=0.01,
            l2_regularization_strength=0.01)

    def predict(self, X, reuse=False):
        with tf.variable_scope("parameters", reuse=reuse):
            self.W = tf.get_variable(name="W", shape=[self.D, 1], dtype=tf.float64,
                                     initializer=tf.random_normal_initializer(stddev=0.1))
            # self.b = tf.get_variable(name="b", shape=[1, 1], dtype=tf.float32, initializer=tf.constant_initializer(0.0))
        return tf.matmul(X, self.W)

    def build_graph(self):
        self.make_placeholders()
        X = self.placeholders['feature']
        y = self.placeholders['label']
        logits = self.predict(X, reuse=False)
        # Build the probability op once, so test() does not add graph nodes per sample.
        self.prob = tf.nn.sigmoid(logits)
        self.loss = tf.nn.sigmoid_cross_entropy_with_logits(labels=y, logits=logits)
        self.update_op = self.optimizer.minimize(self.loss)

    def train(self, X, labels):
        self.sess.run(tf.global_variables_initializer())
        for i, (x, y) in enumerate(zip(X, labels)):
            feed_dict = {self.placeholders['feature']: x.reshape((1, -1)),
                         self.placeholders['label']: y.reshape((1, -1))}
            loss, _ = self.sess.run([self.loss, self.update_op], feed_dict=feed_dict)
            print("Round {}: {}".format(i, loss))

    def test(self, X, labels):
        count = 0
        for x, y in zip(X, labels):
            y_ = self.sess.run(self.prob, feed_dict={self.placeholders['feature']: x.reshape((1, -1))})
            predict = 1.0 if y_[0, 0] > 0.5 else 0.0
            if predict == y:
                count += 1
        return count / X.shape[0]

    def make_placeholders(self):
        self.placeholders['feature'] = tf.placeholder(dtype=tf.float64, shape=[1, self.D])
        self.placeholders['label'] = tf.placeholder(dtype=tf.float64, shape=[1, 1])


if __name__ == "__main__":
    data, labels = load_breast_cancer(return_X_y=True)
    a, b = data.shape
    print(a, b)
    # Prepend a constant 1.0 column so that W[0] acts as the bias.
    data = np.concatenate((np.ones(shape=(a, 1)), data), axis=1)
    X_train, X_test, y_train, y_test = train_test_split(data, labels, test_size=0.3)

    model1 = FTRLProximal(alpha=1.0, beta=1.0, L1=0.01, L2=0.01, D=b+1)
    model1.train(X_train, y_train)
    acc1 = model1.test(X_test, y_test)
    print("model1 acc: {}".format(acc1))

    with tf.Session() as sess:
        model2 = FTRLProximal_TF(sess, D=b+1)
        model2.build_graph()
        model2.train(X_train, y_train)
        acc2 = model2.test(X_test, y_test)
        print("model2 acc: {}".format(acc2))
```

References

- H. Brendan McMahan et al. 2013. Ad click prediction: a view from the trenches. In Proceedings of the 19th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. ACM, 1222–1230.

- http://www.cnblogs.com/EE-Nov...

- https://zhuanlan.zhihu.com/p/...