强化学习论文(6): Distributed Distributional Deterministic Policy Gradients (D4PG)

分布式-分布DDPG,发表在ICLR 2018

论文链接:https://arxiv.org/pdf/1804.08617.pdf

要点总结

从两个方面对DDPG进行扩展:

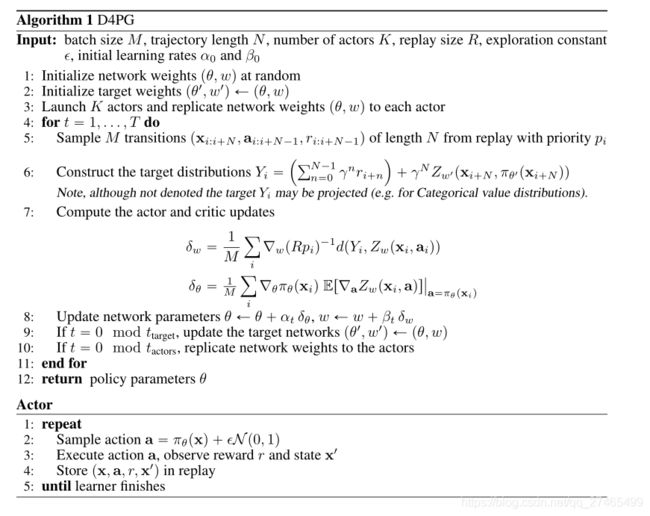

- Distributed:对Actor,将单一Actor扩展至多个,并行收集experience,如算法Actor部分所示

- Distributional:对Critic,将Critic由一个函数扩展成一个分布

在DDPG中:

Q π ( x , a ) = E [ ∑ t = 0 ∞ γ t r ( x t , a t ) ] where x 0 = x , a 0 = a x t ∼ p ( ⋅ ∣ x t − 1 , a t − 1 ) a t = π ( x t ) \begin{aligned} Q_{\pi}(\mathbf{x}, \mathbf{a})=\mathbb{E}\left[\sum_{t=0}^{\infty} \gamma^{t} r\left(\mathbf{x}_{t}, \mathbf{a}_{t}\right)\right] \text { where } & \mathbf{x}_{0}=\mathbf{x}, \mathbf{a}_{0}=\mathbf{a} \\ & \mathbf{x}_{t} \sim p\left(\cdot | \mathbf{x}_{t-1}, \mathbf{a}_{t-1}\right) \\ & \mathbf{a}_{t}=\pi\left(\mathbf{x}_{t}\right) \end{aligned} Qπ(x,a)=E[t=0∑∞γtr(xt,at)] where x0=x,a0=axt∼p(⋅∣xt−1,at−1)at=π(xt)

使用参数化的Critic近似 Q ( x , a ) Q(x,a) Q(x,a)的函数值

从分布观点来看,把收益看作是随机变量 Z π Z_{\pi} Zπ,则 Q π ( x , a ) = E Z π ( x , a ) Q_{\pi}(\mathbf{x}, \mathbf{a})=\mathbb{E} Z_{\pi}(\mathbf{x}, \mathbf{a}) Qπ(x,a)=EZπ(x,a)

分布贝尔曼操作可定义为:

( T π Z ) ( x , a ) = r ( x , a ) + γ E [ Z ( x ′ , π ( x ′ ) ) ∣ x , a ] \left(\mathcal{T}_{\pi} Z\right)(\mathbf{x}, \mathbf{a})=r(\mathbf{x}, \mathbf{a})+\gamma \mathbb{E}\left[Z\left(\mathbf{x}^{\prime}, \pi\left(\mathbf{x}^{\prime}\right)\right) | \mathbf{x}, \mathbf{a}\right] (TπZ)(x,a)=r(x,a)+γE[Z(x′,π(x′))∣x,a]

相应的Critic和Actor Loss为:

L ( w ) = E ρ [ d ( T π θ ′ Z w ′ ( x , a ) , Z w ( x , a ) ) ] L(w)=\mathbb{E}_{\rho}\left[d\left(\mathcal{T}_{\pi_{\theta^{\prime}}} Z_{w^{\prime}}(\mathbf{x}, \mathbf{a}), Z_{w}(\mathbf{x}, \mathbf{a})\right)\right] L(w)=Eρ[d(Tπθ′Zw′(x,a),Zw(x,a))]

∇ θ J ( θ ) ≈ E ρ [ ∇ θ π θ ( x ) ∇ a Q w ( x , a ) ∣ a = π θ ( x ) ] = E ρ [ ∇ θ π θ ( x ) E [ ∇ a Z w ( x , a ) ] ∣ a = π θ ( x ) ] \nabla_{\theta}J(\theta)\approx\mathbb{E}_{\rho}\left[\nabla_{\theta}\pi_{\theta}(\mathbf{x})\nabla_{\mathbf{a}}Q_{w}(\mathbf{x}, \mathbf{a})|_{\mathbf{a}=\pi_\theta(\mathbf{x})}\right]=\mathbb{E}_{\rho}\left[\nabla_{\theta} \pi_{\theta}(\mathbf{x}) \mathbb{E}\left[\nabla_{\mathbf{a}} Z_{w}(\mathbf{x}, \mathbf{a})\right]|_{\mathbf{a}=\pi_\theta(\mathbf{x})}\right] ∇θJ(θ)≈Eρ[∇θπθ(x)∇aQw(x,a)∣a=πθ(x)]=Eρ[∇θπθ(x)E[∇aZw(x,a)]∣a=πθ(x)]

实现时,把Critic的输出作为收益分布 Z π Z_\pi Zπ的参数