机器学习简单回顾:2 naive bayes

Generative model: In the theory of probability and statistics, the generative model refers to a model that can randomly generate observation data, especially given certain implicit parameters. It assigns a joint probability distribution to observations and labeled data series. In machine learning, generative models can be used to directly model data (for example, data sampling based on the probability density function of a variable), or can be used to establish a conditional probability distribution between variables. The conditional probability distribution can be formed by the generative model according to Bayes’ theorem. Common generative model-based algorithms include Gaussian mixture model and other mixture models, hidden Markov model, random context-free grammar, naive Bayes classifier, AODE classifier, latent Dirichlet allocation model, restricted Boltzmann machine

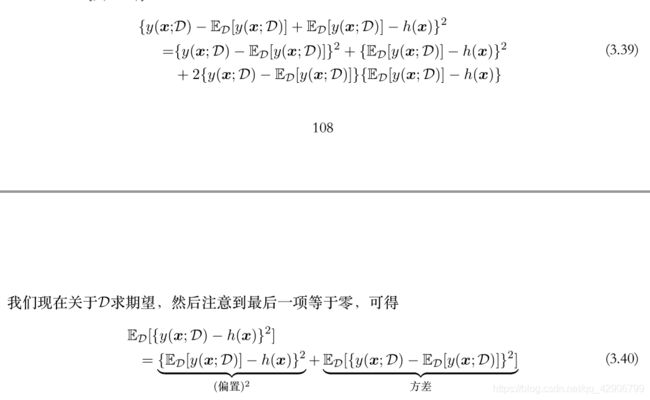

regulation term is used for bias-variance trade-off, which use λ \lambda λ control the regulation term whether too big to have great bias or too small to have great variance.

The zero probability problem is that when calculating the probability of an instance, if a certain amount x does not appear in the observation sample library (training set), the probability result of the entire instance will be 0.

In the actual model training process, the problem of zero probability may occur (because the a priori probability and the anti-conditional probability are calculated based on the training sample, but the number of training samples is not unlimited, so there may be situations that exist in practice, but Not in the training sample, resulting in a probability value of 0, which affects the calculation of the posterior probability.) Even if the amount of training data can continue to be increased, for some problems, how to increase the data is not enough. At this time we say that the model is not smooth, and we want to make it smooth. One way is to replace the training (learning) method with Bayesian estimation.

Inherent flaw of the Naive Bayes algorithm: the information carried by other attributes is “erased” by the attribute value that does not appear under a certain category in the training set, resulting in the predicted probability being absolutely zero. In order to make up for this defect, the predecessors introduced the Laplace smoothing method: add 1 to the numerator (divided count) of the prior probability, and the number of categories; add 1 to the numerator of the conditional probability, and add the corresponding The number of possible values of the feature. In this way, while solving the problem of zero probability, the probability sum is also guaranteed to be 1:

Advantage

Naive Bayes model has a stable classification efficiency.

It performs well on small-scale data, can handle multi-classification tasks, and is suitable for incremental training. Especially when the amount of data exceeds the memory, batch incremental training can be performed.

It is not sensitive to missing data, and the algorithm is relatively simple, often used for text classification.

Disadvantages:

In theory, the Naive Bayes model has the smallest error rate compared with other classification methods. However, this is not always the case, because the naive Bayes model gives an output category, and assumes that the attributes are independent of each other. This assumption is often not true in practical applications. When there are many attributes or attributes When the correlation between them is large, the classification effect is not good. When the attribute correlation is small, Naive Bayes has the best performance. For this, there are algorithms such as semi-Naive Bayes that are moderately improved by considering partial relevance.

It is necessary to know the a priori probability, and the a priori probability often depends on the hypothesis. There may be many hypothetical models, so in some cases, the prediction effect is not good due to the hypothetical a priori model.

Since we determine the probability of the posterior by the prior and data to determine the classification, there is a certain error rate in the classification decision.

Very sensitive to the expression of input data.