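Chapter 1.5: Notes on Backing Up Elasticsearch

Everyone starts out with Elasticsearch from a different place, so the problems each person runs into vary as well.

I did not install this Elasticsearch cluster myself. Its configuration was as follows: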

cluster.name: dzm_dev

node.name: node-185

network.host: 192.168.5.185

http.port: 9200

transport.tcp.port: 9300

http.cors.enabled: true

http.cors.allow-origin: "*"

node.master: true

node.data: true

discovery.zen.ping.unicast.hosts: ["192.168.5.185","192.168.5.186", "192.168.5.187"]

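To take backups, first add one line to elasticsearch.yml: path.repo: ["/usr/hadoop/application/el_bak"]. The path /usr/hadoop/application/el_bak is where the backup files are stored. Choose whatever location suits you, but pay close attention to user permissions. If the user running Elasticsearch has permission, the directory is created automatically.

After changing the configuration, kill the Elasticsearch process and start it again: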

/usr/hadoop/application/elasticsearch/bin/elasticsearch -d

# follow the log

tail -fn 100 /usr/hadoop/application/elasticsearch/logs/dzm_dev.log

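One more thing to watch: every other machine listed in discovery.zen.ping.unicast.hosts: ["192.168.5.185","192.168.5.186", "192.168.5.187"] also needs the path.repo setting. Otherwise, creating the repository fails with the following error: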

org.elasticsearch.transport.RemoteTransportException: [node-187][192.168.5.187:9300][cluster:admin/repository/put]

Caused by: org.elasticsearch.repositories.RepositoryException: [el_back] failed to create repository

at org.elasticsearch.repositories.RepositoriesService.createRepository(RepositoriesService.java:388) ~[elasticsearch-5.5.0.jar:5.5.0]

at org.elasticsearch.repositories.RepositoriesService.registerRepository(RepositoriesService.java:356) ~[elasticsearch-5.5.0.jar:5.5.0]

at org.elasticsearch.repositories.RepositoriesService.access$100(RepositoriesService.java:56) ~[elasticsearch-5.5.0.jar:5.5.0]

at org.elasticsearch.repositories.RepositoriesService$1.execute(RepositoriesService.java:109) ~[elasticsearch-5.5.0.jar:5.5.0]

at org.elasticsearch.cluster.ClusterStateUpdateTask.execute(ClusterStateUpdateTask.java:45) ~[elasticsearch-5.5.0.jar:5.5.0]

at org.elasticsearch.cluster.service.ClusterService.executeTasks(ClusterService.java:634) ~[elasticsearch-5.5.0.jar:5.5.0]

at org.elasticsearch.cluster.service.ClusterService.calculateTaskOutputs(ClusterService.java:612) ~[elasticsearch-5.5.0.jar:5.5.0]

at org.elasticsearch.cluster.service.ClusterService.runTasks(ClusterService.java:571) ~[elasticsearch-5.5.0.jar:5.5.0]

at org.elasticsearch.cluster.service.ClusterService$ClusterServiceTaskBatcher.run(ClusterService.java:263) ~[elasticsearch-5.5.0.jar:5.5.0]

at org.elasticsearch.cluster.service.TaskBatcher.runIfNotProcessed(TaskBatcher.java:150) ~[elasticsearch-5.5.0.jar:5.5.0]

at org.elasticsearch.cluster.service.TaskBatcher$BatchedTask.run(TaskBatcher.java:188) ~[elasticsearch-5.5.0.jar:5.5.0]

at org.elasticsearch.common.util.concurrent.ThreadContext$ContextPreservingRunnable.run(ThreadContext.java:569) ~[elasticsearch-5.5.0.jar:5.5.0]

at org.elasticsearch.common.util.concurrent.PrioritizedEsThreadPoolExecutor$TieBreakingPrioritizedRunnable.runAndClean(PrioritizedEsThreadPoolExecutor.java:247) ~[elasticsearch-5.5.0.jar:5.5.0]

at org.elasticsearch.common.util.concurrent.PrioritizedEsThreadPoolExecutor$TieBreakingPrioritizedRunnable.run(PrioritizedEsThreadPoolExecutor.java:210) ~[elasticsearch-5.5.0.jar:5.5.0]
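Since the error above comes from a node that lacks path.repo, it can help to check each node's configuration before registering a repository. The following is only a minimal sketch: the helper name has_path_repo is made up for illustration, and the config file path and passwordless ssh access in the commented usage are assumptions that the original setup does not confirm.

```shell
# Succeed if the given elasticsearch.yml contains an active (uncommented)
# path.repo setting. has_path_repo is a hypothetical helper name.
# Usage: has_path_repo /path/to/elasticsearch.yml
has_path_repo() {
    grep -Eq '^[[:space:]]*path\.repo[[:space:]]*:' "$1"
}

# Hypothetical usage across the three nodes. This assumes passwordless ssh as
# the hadoop user and the config path below, neither of which is stated above:
#
# for host in 192.168.5.185 192.168.5.186 192.168.5.187; do
#     ssh "hadoop@$host" \
#         "grep -Eq '^[[:space:]]*path\.repo[[:space:]]*:' /usr/hadoop/application/elasticsearch/config/elasticsearch.yml" \
#         || echo "path.repo missing on $host"
# done
```

A node only picks up the setting after a restart, so a line in the file is necessary but not sufficient.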

After adding the setting on all three machines (185, 186 and 187), I tried to create the repository again and got the following error:

[2018-01-05T10:24:34,860][WARN ][o.e.r.VerifyNodeRepositoryAction] [node-185] [el_back] failed to verify repository

org.elasticsearch.repositories.RepositoryVerificationException: [el_back] a file written by master to the store [/usr/hadoop/application/el_bak] cannot be accessed on the node [{node-185}{yyAyGv1mQcKEsGDVMa84ZQ}{E5jcL1_5R3CBSzhrwJQ8mQ}{192.168.5.185}{192.168.5.185:9300}]. This might indicate that the store [/usr/hadoop/application/el_bak] is not shared between this node and the master node or that permissions on the store don't allow reading files written by the master node

at org.elasticsearch.repositories.blobstore.BlobStoreRepository.verify(BlobStoreRepository.java:1025) ~[elasticsearch-5.5.0.jar:5.5.0]

at org.elasticsearch.repositories.VerifyNodeRepositoryAction.doVerify(VerifyNodeRepositoryAction.java:117) ~[elasticsearch-5.5.0.jar:5.5.0]

at org.elasticsearch.repositories.VerifyNodeRepositoryAction.access$300(VerifyNodeRepositoryAction.java:50) ~[elasticsearch-5.5.0.jar:5.5.0]

at org.elasticsearch.repositories.VerifyNodeRepositoryAction$VerifyNodeRepositoryRequestHandler.messageReceived(VerifyNodeRepositoryAction.java:153) [elasticsearch-5.5.0.jar:5.5.0]

at org.elasticsearch.repositories.VerifyNodeRepositoryAction$VerifyNodeRepositoryRequestHandler.messageReceived(VerifyNodeRepositoryAction.java:148) [elasticsearch-5.5.0.jar:5.5.0]

at org.elasticsearch.transport.TransportRequestHandler.messageReceived(TransportRequestHandler.java:33) [elasticsearch-5.5.0.jar:5.5.0]

at org.elasticsearch.transport.RequestHandlerRegistry.processMessageReceived(RequestHandlerRegistry.java:69) [elasticsearch-5.5.0.jar:5.5.0]

at org.elasticsearch.transport.TcpTransport$RequestHandler.doRun(TcpTransport.java:1544) [elasticsearch-5.5.0.jar:5.5.0]

at org.elasticsearch.common.util.concurrent.AbstractRunnable.run(AbstractRunnable.java:37) [elasticsearch-5.5.0.jar:5.5.0]

at org.elasticsearch.common.util.concurrent.EsExecutors$1.execute(EsExecutors.java:110) [elasticsearch-5.5.0.jar:5.5.0]

at org.elasticsearch.transport.TcpTransport.handleRequest(TcpTransport.java:1501) [elasticsearch-5.5.0.jar:5.5.0]

at org.elasticsearch.transport.TcpTransport.messageReceived(TcpTransport.java:1385) [elasticsearch-5.5.0.jar:5.5.0]

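I found the answer in the article "Backing up indices with the snapshot API in Elasticsearch 5": the backup directory must be shared storage that every node in the cluster can access.

1 Mounting with NFS

See "Linux: installing, configuring and mounting NFS on CentOS".

Pay attention to /etc/exports (edit it with vim /etc/exports). Its contents are shown below: /usr/hadoop/application/el_bak is the exported directory, and the options in parentheses set the access permissions.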

/usr/hadoop/application/el_bak *(rw,sync,no_root_squash)

The steps below are for CentOS 6. If NFS is not installed, install it with yum -y install nfs-utils rpcbind, as described in the article referenced above.

Server (185)

/etc/init.d/nfs status

/etc/init.d/rpcbind status

# start the services if they are not running

/etc/init.d/nfs start

/etc/init.d/rpcbind start

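# edit the exports file and add the line below

vi /etc/exports

/usr/hadoop/application/el_bak *(rw,sync,no_root_squash)

# re-export so the change takes effect while the service is running

exportfs -r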

Client (186)

/etc/init.d/rpcbind status

# start the service if it is not running

/etc/init.d/rpcbind start

# mount

showmount -e 192.168.5.185

mount -t nfs 192.168.5.185:/usr/hadoop/application/el_bak /usr/hadoop/application/el_bak

# unmount

umount /usr/hadoop/application/el_bak

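NFS is set up slightly differently on CentOS 7. Install it with yum install -y nfs-utils; see "Installing, configuring and mounting NFS on CentOS 7".

# run on the master node (the NFS server)

# enable rpcbind and nfs at boot (rpcbind must be started first)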

systemctl enable rpcbind.service

systemctl enable nfs-server.service

# then start the rpcbind and nfs services

systemctl start rpcbind.service

systemctl start nfs-server.service

# confirm that the NFS server started

rpcinfo -p

# apply the exports configuration

exportfs -r

# show the current exports

exportfs

# on the slave nodes (the NFS clients) the nfs service does not need to be started

systemctl enable rpcbind.service

systemctl start rpcbind.service

showmount -e 192.168.5.185

mount -t nfs 192.168.5.185:/usr/hadoop/application/el_bak /usr/hadoop/application/el_bak
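Before registering the repository, it may be worth confirming on each client node that the backup directory really is an NFS mount and not just a local directory with the same name. A minimal sketch, assuming a Linux /proc/mounts table; the helper name is_nfs_mounted is hypothetical:

```shell
# Succeed if DIR is listed as an NFS mount point in a /proc/mounts-style table.
# is_nfs_mounted is a hypothetical helper name.
# Usage: is_nfs_mounted DIR [MOUNTS_FILE]   (MOUNTS_FILE defaults to /proc/mounts)
is_nfs_mounted() {
    awk -v dir="$1" '
        # fields: device, mount point, filesystem type, options, ...
        $2 == dir && $3 ~ /^nfs/ { found = 1 }
        END { exit !found }
    ' "${2:-/proc/mounts}"
}

# Example on a client node such as 186:
# is_nfs_mounted /usr/hadoop/application/el_bak || echo "el_bak is not an NFS mount"
```

On the NFS server itself (185 here) the directory is local, so this check is expected to fail there.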

2 Mounting with sshfs

See "Elasticsearch cluster data migration and backup schemes".

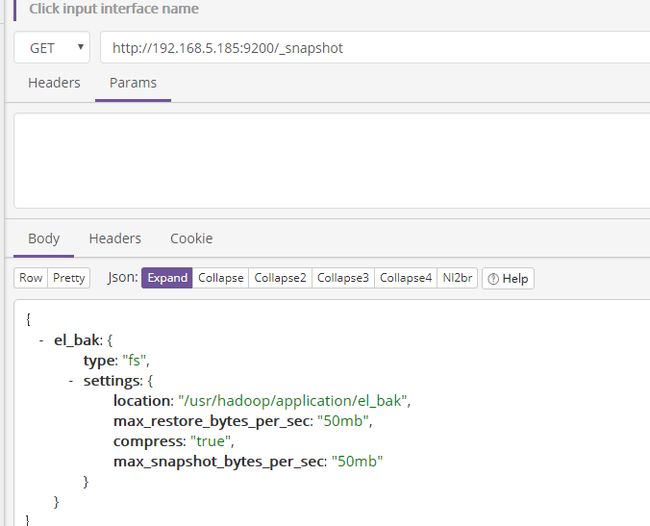

3 REST API for snapshot repositories

Delete a repository

DELETE http://192.168.5.185:9200/_snapshot/my_backup

Create a repository

curl -XPUT 'http://192.168.5.185:9200/_snapshot/el_bak' -d '{"type": "fs","settings": {"location":"/usr/hadoop/application/el_bak","max_snapshot_bytes_per_sec" : "50mb", "max_restore_bytes_per_sec" :"50mb","compress":true}}'

Creating the repository produced the following error:

{"error":{"root_cause":[{"type":"repository_verification_exception","reason":"[el_bak] [[yyAyGv1mQcKEsGDVMa84ZQ, 'RemoteTransportException[[node-185][192.168.5.185:9300][internal:admin/repository/verify]]; nested: RepositoryVerificationException[[el_bak] store location [/usr/hadoop/application/el_bak] is not accessible on the node [{node-185}{yyAyGv1mQcKEsGDVMa84ZQ}{1yS2vGSXQjSRYjraptiIcw}{192.168.5.185}{192.168.5.185:9300}]]; nested: AccessDeniedException[/usr/hadoop/application/el_bak/tests-km6E2_MpQ2aE4REyClbPTg/data-yyAyGv1mQcKEsGDVMa84ZQ.dat];'], [QjyiZHI8R1SnJfue-7x6Bg, 'RemoteTransportException[[node-187][192.168.5.187:9300][internal:admin/repository/verify]]; nested: RepositoryVerificationException[[el_bak] store location [/usr/hadoop/application/el_bak] is not accessible on the node [{node-187}{QjyiZHI8R1SnJfue-7x6Bg}{cLXcXL7XR16WokqHnwZ14g}{192.168.5.187}{192.168.5.187:9300}]]; nested: AccessDeniedException[/usr/hadoop/application/el_bak/tests-km6E2_MpQ2aE4REyClbPTg/data-QjyiZHI8R1SnJfue-7x6Bg.dat];']]"}],"type":"repository_verification_exception","reason":"[el_bak] [[yyAyGv1mQcKEsGDVMa84ZQ, 'RemoteTransportException[[node-185][192.168.5.185:9300][internal:admin/repository/verify]]; nested: RepositoryVerificationException[[el_bak] store location [/usr/hadoop/application/el_bak] is not accessible on the node [{node-185}{yyAyGv1mQcKEsGDVMa84ZQ}{1yS2vGSXQjSRYjraptiIcw}{192.168.5.185}{192.168.5.185:9300}]]; nested: AccessDeniedException[/usr/hadoop/application/el_bak/tests-km6E2_MpQ2aE4REyClbPTg/data-yyAyGv1mQcKEsGDVMa84ZQ.dat];'], [QjyiZHI8R1SnJfue-7x6Bg, 'RemoteTransportException[[node-187][192.168.5.187:9300][internal:admin/repository/verify]]; nested: RepositoryVerificationException[[el_bak] store location [/usr/hadoop/application/el_bak] is not accessible on the node [{node-187}{QjyiZHI8R1SnJfue-7x6Bg}{cLXcXL7XR16WokqHnwZ14g}{192.168.5.187}{192.168.5.187:9300}]]; nested: AccessDeniedException[/usr/hadoop/application/el_bak/tests-km6E2_MpQ2aE4REyClbPTg/data-QjyiZHI8R1SnJfue-7x6Bg.dat];']]"},"status":500}

Yet the repository could still be queried afterwards, which was strange.

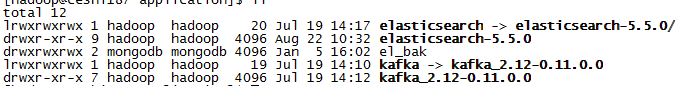

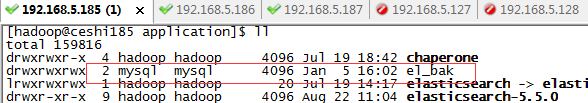

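Watching the permissions on el_bak, I noticed that the owner of the directory appeared to change by itself, which was curious.

Running cat /etc/passwd on the three machines showed that the hadoop user had a different numeric user ID (UID) on each of them. NFS identifies file owners by numeric UID rather than by user name, so files written by hadoop on one node appeared to belong to a different user on the others. That explains the behaviour above.

This problem is fairly well hidden. To fix it, stop every process running as that user, change the user's UID in /etc/passwd so that it is the same on all machines, then restart Elasticsearch and create the repository again. After that the request succeeds.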
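To spot this kind of mismatch quickly, the numeric UID of the Elasticsearch user can be compared across the nodes. The sketch below reads a passwd-format file; the helper name uid_of is hypothetical, and the ssh loop in the comments assumes passwordless ssh access, which the original setup does not confirm.

```shell
# Print the numeric UID of USER from a passwd-format file.
# uid_of is a hypothetical helper name.
# Usage: uid_of USER [PASSWD_FILE]   (PASSWD_FILE defaults to /etc/passwd)
uid_of() {
    awk -F: -v user="$1" '$1 == user { print $3 }' "${2:-/etc/passwd}"
}

# Hypothetical comparison across the three nodes (assumes passwordless ssh):
#
# for host in 192.168.5.185 192.168.5.186 192.168.5.187; do
#     printf '%s: ' "$host"
#     ssh "$host" id -u hadoop
# done
```

If the numbers differ, usermod -u is an alternative to editing /etc/passwd by hand. Files outside the user's home directory, including the backup directory, may still need a chown afterwards.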

curl -XPUT 'http://192.168.5.185:9200/_snapshot/el_bak' -d '{"type": "fs","settings": {"location":"/usr/hadoop/application/el_bak","max_snapshot_bytes_per_sec" : "50mb", "max_restore_bytes_per_sec" :"50mb","compress":true}}'

POST http://192.168.5.185:9200/_snapshot/el_bak/20180109_3/_restore

6 Problems restoring an index after deleting a type

Running a snapshot restore at this point returns the following error:

{

"error": {

"root_cause": [

{

"type": "snapshot_restore_exception",

"reason": "[el_bak:20180109_3/W8DWSBr1TXOX3tDwUocTjQ] cannot restore index [xxxinfo] because it's open"

}

],

"type": "snapshot_restore_exception",

"reason": "[el_bak:20180109_3/W8DWSBr1TXOX3tDwUocTjQ] cannot restore index [xxxinfo] because it's open"

},

"status": 500

}

So it seems the correct approach is to delete the index first and then restore it from the snapshot.
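Deleting the index is one way around the "because it's open" error. Closing the index before the restore is another, because Elasticsearch can restore over an existing index once it is closed. The following is a minimal sketch of that alternative, not the procedure used above. The function names are made up for illustration, and ES_URL defaults to the node address used in this chapter.

```shell
# Base URL of the cluster; defaults to the node used in this chapter.
ES_URL="${ES_URL:-http://192.168.5.185:9200}"

# Build the URL that closes an index. Usage: close_url INDEX
close_url() {
    printf '%s/%s/_close\n' "$ES_URL" "$1"
}

# Build the URL that restores a snapshot. Usage: restore_url REPO SNAPSHOT
restore_url() {
    printf '%s/_snapshot/%s/%s/_restore\n' "$ES_URL" "$1" "$2"
}

# Close INDEX, then restore only that index from REPO/SNAPSHOT.
# Usage: restore_index REPO SNAPSHOT INDEX
restore_index() {
    repo=$1
    snapshot=$2
    index=$3
    curl -s -XPOST "$(close_url "$index")" &&
    curl -s -XPOST "$(restore_url "$repo" "$snapshot")" \
         -H 'Content-Type: application/json' \
         -d "{\"indices\": \"$index\"}"
}

# Example with the names from the error message above:
# restore_index el_bak 20180109_3 xxxinfo
```

A successful restore reopens the index. The "indices" field limits the restore to the one index, so other indices in the snapshot are left alone.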

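When I ran the backup in production I hit yet another error, shown below, and had to search for information again. The cause was that the repository had not been created on that cluster: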

{

"error": {

"root_cause": [

{

"type": "repository_missing_exception",

"reason": "[el_bak] missing"

}

],

"type": "repository_missing_exception",

"reason": "[el_bak] missing"

},

"status": 404

}
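One way to avoid the repository_missing_exception is to check that the repository is registered before asking for a snapshot. The sketch below is illustrative only: the function names are hypothetical, and the date-plus-sequence naming simply imitates the snapshot name 20180109_3 seen earlier.

```shell
# Base URL of the cluster; defaults to the node used in this chapter.
ES_URL="${ES_URL:-http://192.168.5.185:9200}"

# Build a snapshot name of the form YYYYMMDD_N, e.g. 20180109_3.
# Usage: snapshot_name SEQUENCE_NUMBER
snapshot_name() {
    printf '%s_%s\n' "$(date +%Y%m%d)" "$1"
}

# Succeed if the repository is registered (GET returns HTTP 200, not 404).
# Usage: repo_exists REPO
repo_exists() {
    code=$(curl -s -o /dev/null -w '%{http_code}' "$ES_URL/_snapshot/$1")
    [ "$code" = "200" ]
}

# Create a snapshot of all indices and wait until it finishes.
# Usage: create_snapshot REPO SNAPSHOT
create_snapshot() {
    repo=$1
    name=$2
    if ! repo_exists "$repo"; then
        echo "repository $repo is not registered; create it first" >&2
        return 1
    fi
    curl -s -XPUT "$ES_URL/_snapshot/$repo/$name?wait_for_completion=true"
}

# Example:
# create_snapshot el_bak "$(snapshot_name 1)"
```

With wait_for_completion=true the request blocks until the snapshot is done, which suits small indices. For large ones it may be better to drop the parameter and poll the snapshot status.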

7 elasticdump

See "Using elasticdump, a migration tool for Elasticsearch".

nohup elasticdump \
  --input=http://elastic:[email protected]:9200/test \
  --searchBody='{
    "query": {
      "range": {
        "createdate": {
          "lte": "2020-07-20 23:59:59"
        }
      }
    }
  }' \
  --output=/appdata/test_bak_20200720.json \
  --limit=2000 \
  --type=data &

To export only some of the fields, use something like the following:

nohup elasticdump --input=http://elastic:[email protected]:9200/test --output=test.json --sourceOnly --searchBody='
{
  "query": {
    "bool": {
      "must": [
        {
          "match_phrase": {"xhdwsbh": "11111"}
        },
        {
          "range": {
            "kprq": {
              "gte": "20191105",
              "lte": "20191106"
            }
          }
        }
      ]
    }
  },
  "_source": ["fplxdm","fpdm","fphm","fpzt","kprq","xhdwsbh","xhdwmc","xhdwdzdh","xhdwyhzh","ghdwsbh","ghdwmc","ghdwdzdh","ghdwyhzh","hsslbs","zhsl","hjje","hjse","jshj","bz","zyspmc"]
}' &