FCOS Official Code Walkthrough (1): Architecture (backbone)

- tools/train_net.py

- main()

- train()

- fcos_core/modeling/detector/detectors.py

- fcos_core/modeling/detector/generalized_rcnn.py

- build_backbone()

- resnet.py

- fpn.py

- fcos_head

- Next post

- References

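There are already plenty of write-ups of the FCOS paper online, but few that approach it from the code. I read through the code recently and want to write down my understanding, partly as a record I can come back to once I have forgotten the details. If you can follow the code, you can certainly follow the paper. Personally I like this kind of one-stage, anchor-free method: it is simple and easy to understand. Take your time; this post is fairly long, and if you are new to the codebase, getting through it in a day is already good going.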

Paper notes: FCOS: Fully Convolutional One-Stage Object Detection [mirror in China]

Official source code: https://github.com/tianzhi0549/FCOS (based on maskrcnn-benchmark)

A related blog post: FCOS: the latest one-stage per-pixel object detection algorithm

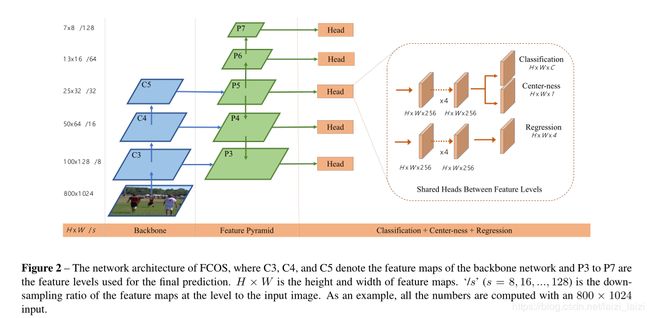

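As the paper shows, the whole architecture consists of three parts: Backbone, FPN, and Head (which is itself split into three branches: Classification, Center-ness, and Regression).

Now let's see how the source code builds these three parts: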

tools/train_net.py

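We follow the program's execution flow step by step until a picture of the whole pipeline emerges. This is the training command from the README.md of the official repo: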

python -m torch.distributed.launch \
    --nproc_per_node=8 \
    --master_port=$((RANDOM + 10000)) \
    tools/train_net.py \
    --config-file configs/fcos/fcos_imprv_R_50_FPN_1x.yaml \
    DATALOADER.NUM_WORKERS 2 \
    OUTPUT_DIR training_dir/fcos_imprv_R_50_FPN_1x

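- At first glance none of this makes sense and half your confidence evaporates, but let's push on. The first three arguments all concern distributed training. I only have a single GPU, so I set them aside for now; if you have several, there is a good tutorial covering similar ground: "A guide to multi-GPU training in PyTorch". For the python -m usage, see "What is python -m for?".

- A brief aside: python -m runs torch.distributed.launch as a module. Distributed training here uses DistributedDataParallel, and torch.distributed.launch spawns n train_net.py processes for us; nproc_per_node and master_port are command-line arguments of torch.distributed.launch itself. In my environment the file lives at miniconda3/lib/python3.7/site-packages/torch/distributed/launch.py.

- The training entry point is tools/train_net.py. Under configs/fcos/ there are many configuration files with the .yaml suffix, comparable to json or xml files, except that they are read with the yacs package. The trailing DATALOADER.NUM_WORKERS and OUTPUT_DIR are keys from the configuration file, which we come back to later. I recommend first reading the yacs description written by rbg (project page); the README is enough, since configuration files are not our focus.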
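To make the role of the trailing KEY VALUE pairs concrete, here is a minimal sketch of how such pairs could be applied on top of default settings. It is a toy written with plain dictionaries, not the yacs implementation; the helper name `merge_from_list_toy` and the default values are assumptions made only for illustration.

```python
import copy


def merge_from_list_toy(cfg, opts):
    """Apply ["A.B", "value", ...] pairs onto a nested dict (toy version, not yacs)."""
    assert len(opts) % 2 == 0, "opts must be KEY VALUE pairs"
    merged = copy.deepcopy(cfg)  # leave the defaults untouched
    for full_key, raw_value in zip(opts[0::2], opts[1::2]):
        node = merged
        *parents, leaf = full_key.split(".")
        for name in parents:
            node = node[name]
        assert leaf in node, "unknown key: {}".format(full_key)
        # Toy type handling: cast the string to the type of the existing value.
        node[leaf] = type(node[leaf])(raw_value)
    return merged


# Hypothetical defaults, standing in for fcos_core/config/defaults.py.
defaults = {"DATALOADER": {"NUM_WORKERS": 4}, "OUTPUT_DIR": "."}
overrides = [
    "DATALOADER.NUM_WORKERS", "2",
    "OUTPUT_DIR", "training_dir/fcos_imprv_R_50_FPN_1x",
]
merged_cfg = merge_from_list_toy(defaults, overrides)
print(merged_cfg)
```

The point of the sketch is the precedence order: defaults first, then overrides keyed by dotted names, which is the behaviour the training command relies on.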

main()

Following the call order, we arrive first at main():

def main():
    # Parse command-line arguments, e.g. --config-file configs/fcos/fcos_imprv_R_50_FPN_1x.yaml
    parser = argparse.ArgumentParser(description="PyTorch Object Detection Training")
    parser.add_argument(
        "--config-file",
        default="",
        metavar="FILE",
        help="path to config file",
        type=str,
    )
    # This argument is passed in by torch.distributed.launch; we declare it so argparse accepts it
    # local_rank is the index of the GPU used by the current process
    parser.add_argument(
        "--local_rank",
        type=int,
        default=0,
        help="local_rank is used by torch.distributed.launch to leverage multiple GPUs",
    )
    parser.add_argument(
        "--skip-test",
        dest="skip_test",
        help="Do not test the final model",
        action="store_true",
    )
    parser.add_argument(
        "opts",
        help="Modify config options using the command-line",
        default=None,
        nargs=argparse.REMAINDER,
    )
    args = parser.parse_args()

    # Read the process count from WORLD_SIZE; distributed training is used when it exceeds 1
    # WORLD_SIZE is set by torch.distributed.launch
    # Its value is nproc_per_node * nnodes (nnodes is the number of machines)
    num_gpus = int(os.environ["WORLD_SIZE"]) if "WORLD_SIZE" in os.environ else 1
    args.distributed = num_gpus > 1

    if args.distributed:  # I have no multi-GPU machine, so I did not explore this branch
        torch.cuda.set_device(args.local_rank)  # needed for distributed training; local_rank is used here
        torch.distributed.init_process_group(
            backend="nccl", init_method="env://"
        )
        synchronize()

    # Defaults live in fcos_core/config/defaults.py; config_file and opts override them
    cfg.merge_from_file(args.config_file)  # read parameters from the yaml file
    cfg.merge_from_list(args.opts)  # command-line pairs can override them again
    cfg.freeze()  # freeze the config against accidental changes; cfg is passed to train()
    # You can print cfg here to inspect it; I use fcos_R_50_FPN_1x.yaml as the example

    output_dir = cfg.OUTPUT_DIR  # create the output directory that holds the logs
    if output_dir:
        mkdir(output_dir)

    # Write the log: number of GPUs, system environment, config parameters, etc.
    logger = setup_logger("fcos_core", output_dir, get_rank())
    logger.info("Using {} GPUs".format(num_gpus))
    logger.info(args)

    logger.info("Collecting env info (might take some time)")
    logger.info("\n" + collect_env_info())

    logger.info("Loaded configuration file {}".format(args.config_file))
    with open(args.config_file, "r") as cf:
        config_str = "\n" + cf.read()
        logger.info(config_str)
    logger.info("Running with config:\n{}".format(cfg))

    # This call is the next entry point: look at train(), whose first step is building the model
    model = train(cfg, args.local_rank, args.distributed)  # cfg, 0, 0 in my single-GPU run

    if not args.skip_test:  # unless testing is skipped, run it
        run_test(cfg, model, args.distributed)

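config-file and local_rank are prefixed with --, which marks them as optional arguments; opts has no prefix, so it is a positional argument. nargs=argparse.REMAINDER means that all remaining command-line arguments are gathered into a list (this is commonly used by command-line tools that dispatch to other command-line tools), so args.opts is what gets passed to cfg.merge_from_list().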
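The optional/positional split described above can be checked in isolation. The following is a small sketch that reuses the argument layout of main() with the standard library only; the function names `build_parser` and `parse_demo` are made up for this illustration.

```python
import argparse


def build_parser():
    """Mirror the argument layout of main(): optional flags plus a catch-all positional."""
    parser = argparse.ArgumentParser(description="REMAINDER demo")
    parser.add_argument("--config-file", default="", metavar="FILE", type=str)
    parser.add_argument("--local_rank", type=int, default=0)
    parser.add_argument("--skip-test", dest="skip_test", action="store_true")
    # Everything left over after the flags lands in this list.
    parser.add_argument("opts", default=None, nargs=argparse.REMAINDER)
    return parser


def parse_demo(argv):
    return build_parser().parse_args(argv)


demo_args = parse_demo([
    "--config-file", "configs/fcos/fcos_imprv_R_50_FPN_1x.yaml",
    "DATALOADER.NUM_WORKERS", "2",
    "OUTPUT_DIR", "training_dir/fcos_imprv_R_50_FPN_1x",
])
print(demo_args.config_file)
print(demo_args.opts)
```

Note that argparse turns the flag --config-file into the attribute config_file, which is why main() reads args.config_file.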

train()

Next we enter train(), which main() calls as follows:

model = train(cfg, args.local_rank, args.distributed)  # cfg, 0, 0; returns a model

This post only goes as far as model construction, so inside train() a single line matters:

model = build_detection_model(cfg)

fcos_core/modeling/detector/detectors.py

build_detection_model(cfg) in train() points to this file:

# Copyright (c) Facebook, Inc. and its affiliates. All Rights Reserved.
from .generalized_rcnn import GeneralizedRCNN

_DETECTION_META_ARCHITECTURES = {"GeneralizedRCNN": GeneralizedRCNN}

def build_detection_model(cfg):
    meta_arch = _DETECTION_META_ARCHITECTURES[cfg.MODEL.META_ARCHITECTURE]
    return meta_arch(cfg)

The printed cfg shows:

MODEL:
  ...
  META_ARCHITECTURE: GeneralizedRCNN

so build_detection_model returns GeneralizedRCNN(cfg).

fcos_core/modeling/detector/generalized_rcnn.py

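The generalized_rcnn.py module "Implements the Generalized R-CNN framework". The FCOS authors changed little of the maskrcnn-benchmark code; at first I wondered why FCOS still contained roi code, and later found that it is simply unused. Likewise, the rpn here is not the RPN of the R-CNN framework but the FCOS head. So let's look at build_backbone and build_rpn.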

class GeneralizedRCNN(nn.Module):
    """
    Main class for Generalized R-CNN. Currently supports boxes and masks.
    It consists of three main parts:
    - backbone
    - rpn
    - heads: takes the features + the proposals from the RPN and computes
        detections / masks from it.
    """

    def __init__(self, cfg):
        super(GeneralizedRCNN, self).__init__()

        # Returns an nn.Sequential model; the FPN at its end outputs the multi-level features
        self.backbone = build_backbone(cfg)
        # This is actually the FCOS head
        self.rpn = build_rpn(cfg, self.backbone.out_channels)
        # roi_heads is an empty list here
        self.roi_heads = build_roi_heads(cfg, self.backbone.out_channels)

    def forward(self, images, targets=None):
        pass  # not needed yet, so I replaced the body with pass

build_backbone()

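build_backbone is a function in fcos_core/modeling/backbone/backbone.py. I use fcos_R_50_FPN_1x.yaml as the example (the parameter values annotated next to the code all come from it; I will not repeat this below). Its CONV_BODY is R-50-FPN-RETINANET, so I only follow the registration (register) of R-50-FPN-RETINANET. Once that is clear, the registrations of others such as R-50-C4 pose no problem: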

from collections import OrderedDict

from torch import nn

from fcos_core.modeling import registry
from fcos_core.modeling.make_layers import conv_with_kaiming_uniform
from . import fpn as fpn_module
from . import resnet
from . import mobilenet

@registry.BACKBONES.register("R-50-FPN-RETINANET")
@registry.BACKBONES.register("R-101-FPN-RETINANET")
def build_resnet_fpn_p3p7_backbone(cfg):
    # The body is omitted here to save space; it is shown further down
    return model

def build_backbone(cfg):
    # Fail if CONV_BODY is not in registry.BACKBONES
    assert cfg.MODEL.BACKBONE.CONV_BODY in registry.BACKBONES, \
        "cfg.MODEL.BACKBONE.CONV_BODY: {} are not registered in registry".format(
            cfg.MODEL.BACKBONE.CONV_BODY
        )
    return registry.BACKBONES[cfg.MODEL.BACKBONE.CONV_BODY](cfg)  # usage of decorator
    # registry.BACKBONES[cfg.MODEL.BACKBONE.CONV_BODY] ==> refers to build_resnet_fpn_p3p7_backbone
    # hence the trailing call with the argument cfg

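- build_backbone begins with an assert statement. CONV_BODY (here R-50-FPN-RETINANET) is a string. registry.BACKBONES, defined in fcos_core/modeling/registry.py, is an instance of the Registry class; that class is defined in fcos_core/utils/registry.py and inherits from dict, so registry.BACKBONES supports dictionary operations. The line directly above the function definition, @registry.BACKBONES.register("R-50-FPN-RETINANET"), uses decorator syntax. Read on: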

# Copyright (c) Facebook, Inc. and its affiliates. All Rights Reserved.

def _register_generic(module_dict, module_name, module):
    assert module_name not in module_dict
    module_dict[module_name] = module

class Registry(dict):
    '''
    A helper class for managing registering modules, it extends a dictionary
    and provides a register functions.

    Eg. creeting a registry:
        some_registry = Registry({"default": default_module})

    There're two ways of registering new modules:
    1): normal way is just calling register function:
        def foo():
            ...
        some_registry.register("foo_module", foo)
    2): used as decorator when declaring the module:
        @some_registry.register("foo_module")
        @some_registry.register("foo_modeul_nickname")
        def foo():
            ...

    Access of module is just like using a dictionary, eg:
        f = some_registry["foo_modeul"]
    '''
    def __init__(self, *args, **kwargs):
        super(Registry, self).__init__(*args, **kwargs)

    def register(self, module_name, module=None):
        # used as function call
        if module is not None:
            _register_generic(self, module_name, module)
            return

        # used as decorator
        def register_fn(fn):
            _register_generic(self, module_name, fn)
            return fn

        return register_fn
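The decorator form of register can be tried without the FCOS codebase. Below is a minimal sketch with a stripped-down stand-in for the class above; the names `MiniRegistry`, `build_toy_backbone` and `build_backbone_toy` are hypothetical and exist only for this example.

```python
class MiniRegistry(dict):
    """Stripped-down stand-in for the Registry class (hypothetical name)."""

    def register(self, name, module=None):
        if module is not None:  # used as a plain function call
            assert name not in self
            self[name] = module
            return None

        def register_fn(fn):  # used as a decorator
            assert name not in self
            self[name] = fn
            return fn  # returning fn unchanged is what allows stacking decorators

        return register_fn


BACKBONES = MiniRegistry()


@BACKBONES.register("R-50-FPN-RETINANET")
@BACKBONES.register("R-101-FPN-RETINANET")
def build_toy_backbone(cfg):
    return "backbone built from {}".format(cfg)


def build_backbone_toy(conv_body, cfg):
    # Same lookup-then-call pattern as build_backbone in backbone.py.
    assert conv_body in BACKBONES, "{} is not registered".format(conv_body)
    return BACKBONES[conv_body](cfg)


result = build_backbone_toy("R-50-FPN-RETINANET", "toy-cfg")
print(sorted(BACKBONES))
print(result)
```

Both keys map to the same function object, so selecting R-50 or R-101 only changes which configuration values the builder later reads.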

- The Registry class defines a method register that returns the inner function register_fn(fn), so it can be used as a decorator (for decorator syntax, see the linked article on decorators). When @registry.BACKBONES.register("R-50-FPN-RETINANET") decorates build_resnet_fpn_p3p7_backbone, a key-value pair is written: registry.BACKBONES["R-50-FPN-RETINANET"] = build_resnet_fpn_p3p7_backbone. build_backbone(cfg) therefore returns build_resnet_fpn_p3p7_backbone(cfg). Let's see how that function builds the backbone:

@registry.BACKBONES.register("R-50-FPN-RETINANET")
@registry.BACKBONES.register("R-101-FPN-RETINANET")
def build_resnet_fpn_p3p7_backbone(cfg):
    body = resnet.ResNet(cfg)
    # Channel parameters needed by the fpn
    in_channels_stage2 = cfg.MODEL.RESNETS.RES2_OUT_CHANNELS  # 256
    out_channels = cfg.MODEL.RESNETS.BACKBONE_OUT_CHANNELS  # 256
    in_channels_p6p7 = in_channels_stage2 * 8 if cfg.MODEL.RETINANET.USE_C5 \
        else out_channels
    fpn = fpn_module.FPN(
        in_channels_list=[
            0,
            in_channels_stage2 * 2,
            in_channels_stage2 * 4,
            in_channels_stage2 * 8,
        ],
        out_channels=out_channels,
        # conv_with_kaiming_uniform returns a function that builds the conv;
        # with stride=1 that conv keeps the spatial size
        conv_block=conv_with_kaiming_uniform(
            cfg.MODEL.FPN.USE_GN, cfg.MODEL.FPN.USE_RELU
        ),
        top_blocks=fpn_module.LastLevelP6P7(in_channels_p6p7, out_channels),
    )
    # Chain body and fpn in an nn.Sequential via an ordered dict,
    # so the output of body becomes the input of fpn
    model = nn.Sequential(OrderedDict([("body", body), ("fpn", fpn)]))
    # Stored as an attribute because later code reads model.out_channels
    model.out_channels = out_channels
    return model

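- As the name suggests, build_resnet_fpn_p3p7_backbone builds the backbone part of the paper figure above, with FPN levels P3 through P7. resnet.ResNet produces the body and fpn_module.FPN produces the fpn. We look at the two in turn.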
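The channel arithmetic in build_resnet_fpn_p3p7_backbone can be reproduced with plain integers. The sketch below assumes RES2_OUT_CHANNELS = 256 and BACKBONE_OUT_CHANNELS = 256 (the values annotated in the listing) and USE_C5 = False (inferred from the printed p6 layer taking 256 input channels); the function name `fpn_channel_plan` is made up for illustration.

```python
def fpn_channel_plan(res2_out_channels=256, backbone_out_channels=256, use_c5=False):
    """Recompute the channel numbers handed to FPN and LastLevelP6P7."""
    in_channels_stage2 = res2_out_channels
    in_channels_list = [
        0,                       # C2 is skipped: no lateral conv is created for it
        in_channels_stage2 * 2,  # C3
        in_channels_stage2 * 4,  # C4
        in_channels_stage2 * 8,  # C5
    ]
    # P6 is computed from C5 when use_c5 is True, otherwise from P5.
    in_channels_p6p7 = in_channels_stage2 * 8 if use_c5 else backbone_out_channels
    return in_channels_list, in_channels_p6p7


plan, p6p7_in = fpn_channel_plan()
print(plan, p6p7_in)
```

With these assumed values the three non-zero entries match the input channels of the 1x1 lateral convolutions in the printed FPN module later in this post.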

resnet.py

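Next comes the construction of a ResNet. torchvision has its own implementation and this one is similar, so if you have read the torchvision resnet code, this will be easy to follow. Start with the configuration pieces and the simpler parts: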

# Copyright (c) Facebook, Inc. and its affiliates. All Rights Reserved.
"""
Variant of the resnet module that takes cfg as an argument.
Example usage. Strings may be specified in the config file.
    model = ResNet(
        "StemWithFixedBatchNorm",
        "BottleneckWithFixedBatchNorm",
        "ResNet50StagesTo4",
    )
OR:
    model = ResNet(
        "StemWithGN",
        "BottleneckWithGN",
        "ResNet50StagesTo4",
    )
Custom implementations may be written in user code and hooked in via the
`register_*` functions.
"""
# The docstring above is a usage note; the required imports follow
from collections import namedtuple

import torch
import torch.nn.functional as F
from torch import nn

from fcos_core.layers import FrozenBatchNorm2d
from fcos_core.layers import Conv2d
from fcos_core.layers import DFConv2d
from fcos_core.modeling.make_layers import group_norm
from fcos_core.utils.registry import Registry

# ResNet stage specification: a named tuple holds the parameters of each resnet stage
StageSpec = namedtuple(
    "StageSpec",
    [
        "index",  # Index of the stage, eg 1, 2, ..,. 5
        "block_count",  # Number of residual blocks in the stage
        "return_features",  # True => return the last feature map from this stage
    ],
)

# -----------------------------------------------------------------------------
# Standard ResNet models
# -----------------------------------------------------------------------------
# The tuples below are selected via _STAGE_SPECS[cfg.MODEL.BACKBONE.CONV_BODY]; I only show the resnet50 ones
# ResNet-50 (including all stages)
ResNet50StagesTo5 = tuple(
    StageSpec(index=i, block_count=c, return_features=r)
    for (i, c, r) in ((1, 3, False), (2, 4, False), (3, 6, False), (4, 3, True))
)
# ResNet-50 up to stage 4 (excludes stage 5): only the stage-4 feature map is used
ResNet50StagesTo4 = tuple(
    StageSpec(index=i, block_count=c, return_features=r)
    for (i, c, r) in ((1, 3, False), (2, 4, False), (3, 6, True))
)
# ResNet-50-FPN (including all stages): the fpn consumes per-stage feature maps,
# so return_features is True for every stage
ResNet50FPNStagesTo5 = tuple(
    StageSpec(index=i, block_count=c, return_features=r)
    for (i, c, r) in ((1, 3, True), (2, 4, True), (3, 6, True), (4, 3, True))
)

# Selects whether the resnet Bottleneck uses FixedBatchNorm or GroupNorm
_TRANSFORMATION_MODULES = Registry({
    "BottleneckWithFixedBatchNorm": BottleneckWithFixedBatchNorm,
    "BottleneckWithGN": BottleneckWithGN,
})

# Selects whether the resnet stem uses FixedBatchNorm or GroupNorm
_STEM_MODULES = Registry({
    "StemWithFixedBatchNorm": StemWithFixedBatchNorm,
    "StemWithGN": StemWithGN,
})

# Selects which resnet depth to build, and up to which stage
_STAGE_SPECS = Registry({
    "R-50-C4": ResNet50StagesTo4,
    "R-50-C5": ResNet50StagesTo5,
    "R-101-C4": ResNet101StagesTo4,
    "R-101-C5": ResNet101StagesTo5,
    "R-50-FPN": ResNet50FPNStagesTo5,
    "R-50-FPN-RETINANET": ResNet50FPNStagesTo5,
    "R-101-FPN": ResNet101FPNStagesTo5,
    "R-101-FPN-RETINANET": ResNet101FPNStagesTo5,
    "R-152-FPN": ResNet152FPNStagesTo5,
})

If you want to read up on namedtuple syntax, see the linked article. Printing ResNet50FPNStagesTo5 gives:

(StageSpec(index=1, block_count=3, return_features=True),

StageSpec(index=2, block_count=4, return_features=True),

StageSpec(index=3, block_count=6, return_features=True),

StageSpec(index=4, block_count=3, return_features=True))

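This is easy to follow alongside the ResNet architecture table (the figure listing conv1 and conv2_x through conv5_x): block_count is the number of blocks in each stage, and return_features indicates whether the stage outputs its feature map.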
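As a quick check of what the stage specification encodes, the sketch below rebuilds the ResNet-50 FPN tuple with the standard library and recovers the "50" in the model name. The `+ 2` term counts the stem convolution and the fully connected classifier of the original ImageNet model; the classifier is not part of this detection backbone and appears here only for the arithmetic.

```python
from collections import namedtuple

StageSpec = namedtuple("StageSpec", ["index", "block_count", "return_features"])

resnet50_fpn = tuple(
    StageSpec(index=i, block_count=c, return_features=r)
    for (i, c, r) in ((1, 3, True), (2, 4, True), (3, 6, True), (4, 3, True))
)

# Fields are accessible by name, which keeps the later loops readable.
total_blocks = sum(spec.block_count for spec in resnet50_fpn)

# Each bottleneck block contains three convolutions (1x1, 3x3, 1x1).
weighted_layers = 3 * total_blocks + 2

# Stages whose output is handed on to the FPN.
returned_stages = [spec.index for spec in resnet50_fpn if spec.return_features]

print(total_blocks, weighted_layers, returned_stages)
```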

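Before looking at the overall ResNet construction, consider the basic units, the stem and the Bottleneck, which are stacked to form the whole ResNet. The stem is the 7×7 convolution of conv1 together with the 3×3 max pool of conv2_x in the ResNet architecture table. The Bottleneck has the same structure in resnet50, resnet101 and resnet152; only the number of blocks per stage differs, so the stages can be built neatly with a loop: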

# For readability I have grouped related definitions together
class BaseStem(nn.Module):
    def __init__(self, cfg, norm_func):
        super(BaseStem, self).__init__()

        out_channels = cfg.MODEL.RESNETS.STEM_OUT_CHANNELS  # 64: output channels of the stem

        self.conv1 = Conv2d(
            3, out_channels, kernel_size=7, stride=2, padding=3, bias=False
        )
        self.bn1 = norm_func(out_channels)  # normalization layer built by the given norm_func

        for l in [self.conv1,]:  # Kaiming initialization
            nn.init.kaiming_uniform_(l.weight, a=1)

    def forward(self, x):  # forward pass
        x = self.conv1(x)
        x = self.bn1(x)
        x = F.relu_(x)
        # The stem also includes the max pool; it has no parameters, so it is written directly in forward
        x = F.max_pool2d(x, kernel_size=3, stride=2, padding=1)
        return x

# StemWithFixedBatchNorm and StemWithGN below inherit from BaseStem;
# they differ only in passing FrozenBatchNorm2d or group_norm at construction
class StemWithFixedBatchNorm(BaseStem):
    def __init__(self, cfg):
        super(StemWithFixedBatchNorm, self).__init__(
            cfg, norm_func=FrozenBatchNorm2d
        )

class StemWithGN(BaseStem):
    def __init__(self, cfg):
        super(StemWithGN, self).__init__(cfg, norm_func=group_norm)

class Bottleneck(nn.Module):
    def __init__(
        self,
        in_channels,  # input channels of the bottleneck
        bottleneck_channels,  # channels after the bottleneck squeeze
        out_channels,  # output channels of the bottleneck
        num_groups,  # number of groups for the grouped 3x3 conv
        stride_in_1x1,  # whether the stride goes on the first 1x1 conv of a stage
        stride,  # convolution stride
        dilation,  # dilation of the dilated convolution
        norm_func,  # which normalization function to use
        dcn_config  # Deformable Convolutional Networks settings
    ):
        super(Bottleneck, self).__init__()

        # downsample: when the input and output channels of the bottleneck differ,
        # the shortcut needs some strategy.
        # The ResNet paper describes options A, B and C; this code uses option B
        # (which the paper also adopts), i.e. projection shortcuts are used only where
        # the channel counts differ, mapping input channels to output channels
        # with a learned projection.
        self.downsample = None
        # When the channel counts differ, add a 1x1 conv that maps in_channels to out_channels
        if in_channels != out_channels:
            down_stride = stride if dilation == 1 else 1
            self.downsample = nn.Sequential(
                Conv2d(
                    in_channels, out_channels,
                    kernel_size=1, stride=down_stride, bias=False
                ),
                norm_func(out_channels),
            )
            for modules in [self.downsample,]:
                for l in modules.modules():
                    if isinstance(l, Conv2d):
                        nn.init.kaiming_uniform_(l.weight, a=1)

        if dilation > 1:
            stride = 1  # reset to be 1

        # The original MSRA ResNet models have stride in the first 1x1 conv
        # The subsequent fb.torch.resnet and Caffe2 ResNe[X]t implementations have
        # stride in the 3x3 conv
        # In other words, the stride-2 convolution that the paper places on the first 1x1 conv
        # of stages 3-5 would move to the 3x3 conv. This comment is inherited from the original
        # framework, though: in my printout the stride is still on the 1x1 conv,
        # because the calls below all use stride_in_1x1=True
        stride_1x1, stride_3x3 = (stride, 1) if stride_in_1x1 else (1, stride)

        self.conv1 = Conv2d(
            in_channels,
            bottleneck_channels,
            kernel_size=1,
            stride=stride_1x1,
            bias=False,
        )
        self.bn1 = norm_func(bottleneck_channels)
        # TODO: specify init for the above
        # Returns the value of "stage_with_dcn" if the key is present in dcn_config, else False
        with_dcn = dcn_config.get("stage_with_dcn", False)
        # Decide whether the second conv of the bottleneck is a deformable convolution
        if with_dcn:
            deformable_groups = dcn_config.get("deformable_groups", 1)
            with_modulated_dcn = dcn_config.get("with_modulated_dcn", False)
            self.conv2 = DFConv2d(
                bottleneck_channels,
                bottleneck_channels,
                with_modulated_dcn=with_modulated_dcn,
                kernel_size=3,
                stride=stride_3x3,
                groups=num_groups,
                dilation=dilation,
                deformable_groups=deformable_groups,
                bias=False
            )
        else:
            self.conv2 = Conv2d(
                bottleneck_channels,
                bottleneck_channels,
                kernel_size=3,
                stride=stride_3x3,
                padding=dilation,
                bias=False,
                groups=num_groups,
                dilation=dilation
            )
            nn.init.kaiming_uniform_(self.conv2.weight, a=1)

        self.bn2 = norm_func(bottleneck_channels)

        # Third conv layer of the bottleneck
        self.conv3 = Conv2d(
            bottleneck_channels, out_channels, kernel_size=1, bias=False
        )
        self.bn3 = norm_func(out_channels)

        for l in [self.conv1, self.conv3,]:
            nn.init.kaiming_uniform_(l.weight, a=1)

    def forward(self, x):  # forward pass
        identity = x

        out = self.conv1(x)
        out = self.bn1(out)
        out = F.relu_(out)

        out = self.conv2(out)
        out = self.bn2(out)
        out = F.relu_(out)

        out0 = self.conv3(out)
        out = self.bn3(out0)

        if self.downsample is not None:
            identity = self.downsample(x)

        out += identity  # skip connection: add the shortcut here
        out = F.relu_(out)  # in-place ReLU

        return out

# With Bottleneck in place, BottleneckWithFixedBatchNorm and BottleneckWithGN
# simply inherit from it and forward their arguments; the only difference is whether
# norm_func is FrozenBatchNorm2d or group_norm
class BottleneckWithFixedBatchNorm(Bottleneck):
    def __init__(
        self,
        in_channels,
        bottleneck_channels,
        out_channels,
        num_groups=1,
        stride_in_1x1=True,
        stride=1,
        dilation=1,
        dcn_config=None
    ):
        super(BottleneckWithFixedBatchNorm, self).__init__(
            in_channels=in_channels,
            bottleneck_channels=bottleneck_channels,
            out_channels=out_channels,
            num_groups=num_groups,
            stride_in_1x1=stride_in_1x1,
            stride=stride,
            dilation=dilation,
            norm_func=FrozenBatchNorm2d,
            dcn_config=dcn_config
        )

class BottleneckWithGN(Bottleneck):
    def __init__(
        self,
        in_channels,
        bottleneck_channels,
        out_channels,
        num_groups=1,
        stride_in_1x1=True,
        stride=1,
        dilation=1,
        dcn_config=None
    ):
        super(BottleneckWithGN, self).__init__(
            in_channels=in_channels,
            bottleneck_channels=bottleneck_channels,
            out_channels=out_channels,
            num_groups=num_groups,
            stride_in_1x1=stride_in_1x1,
            stride=stride,
            dilation=dilation,
            norm_func=group_norm,
            dcn_config=dcn_config
        )

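Before reading how the resnet body calls these basic units, I strongly recommend printing the result of build_backbone(cfg); the code below is much easier to follow side by side with that printout: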

class ResNet(nn.Module):
    def __init__(self, cfg):
        super(ResNet, self).__init__()

        # If we want to use the cfg in forward(), then we should make a copy
        # of it and store it for later use:
        # self.cfg = cfg.clone()

        # Translate string names to implementations (chosen according to cfg)
        stem_module = _STEM_MODULES[cfg.MODEL.RESNETS.STEM_FUNC]  # eg: "StemWithFixedBatchNorm"
        stage_specs = _STAGE_SPECS[cfg.MODEL.BACKBONE.CONV_BODY]  # eg: "R-50-FPN-RETINANET"
        transformation_module = _TRANSFORMATION_MODULES[cfg.MODEL.RESNETS.TRANS_FUNC]

        # Construct the stem module, i.e. the first resnet stage, conv1
        self.stem = stem_module(cfg)

        # Construct the specified ResNet stages, i.e. conv2_x to conv5_x
        num_groups = cfg.MODEL.RESNETS.NUM_GROUPS  # eg: 1; 1 gives ResNet, >1 gives ResNeXt
        width_per_group = cfg.MODEL.RESNETS.WIDTH_PER_GROUP  # eg: 64
        in_channels = cfg.MODEL.RESNETS.STEM_OUT_CHANNELS  # eg: 64
        stage2_bottleneck_channels = num_groups * width_per_group  # eg: 64
        stage2_out_channels = cfg.MODEL.RESNETS.RES2_OUT_CHANNELS  # eg: 256
        self.stages = []
        self.return_features = {}
        for stage_spec in stage_specs:
            name = "layer" + str(stage_spec.index)
            stage2_relative_factor = 2 ** (stage_spec.index - 1)
            # bottleneck_channels and out_channels double with every stage
            bottleneck_channels = stage2_bottleneck_channels * stage2_relative_factor
            out_channels = stage2_out_channels * stage2_relative_factor
            stage_with_dcn = cfg.MODEL.RESNETS.STAGE_WITH_DCN[stage_spec.index - 1]
            # Call _make_stage in a loop to build conv2_x to conv5_x in turn
            module = _make_stage(
                transformation_module,  # BottleneckWithFixedBatchNorm
                in_channels,
                bottleneck_channels,
                out_channels,
                stage_spec.block_count,
                num_groups,
                cfg.MODEL.RESNETS.STRIDE_IN_1X1,
                # stride 2 downsamples in conv3_x to conv5_x (index > 1); conv2_x keeps stride 1
                first_stride=int(stage_spec.index > 1) + 1,
                dcn_config={
                    "stage_with_dcn": stage_with_dcn,
                    "with_modulated_dcn": cfg.MODEL.RESNETS.WITH_MODULATED_DCN,
                    "deformable_groups": cfg.MODEL.RESNETS.DEFORMABLE_GROUPS,
                }
            )
            in_channels = out_channels
            self.add_module(name, module)
            self.stages.append(name)
            self.return_features[name] = stage_spec.return_features

        # Optionally freeze (requires_grad=False) parts of the backbone
        self._freeze_backbone(cfg.MODEL.BACKBONE.FREEZE_CONV_BODY_AT)

    def _freeze_backbone(self, freeze_at):
        # Disable parameter updates for the stages selected by freeze_at
        if freeze_at < 0:
            return
        for stage_index in range(freeze_at):
            if stage_index == 0:
                m = self.stem  # stage 0 is the stem
            else:
                m = getattr(self, "layer" + str(stage_index))
            for p in m.parameters():
                p.requires_grad = False

    def forward(self, x):
        outputs = []
        x = self.stem(x)
        for stage_name in self.stages:
            x = getattr(self, stage_name)(x)
            # Collect the feature maps of the stages flagged for return in a list; they feed the FPN
            if self.return_features[stage_name]:
                outputs.append(x)
        return outputs

def _make_stage(
    transformation_module,
    in_channels,
    bottleneck_channels,
    out_channels,
    block_count,
    num_groups,
    stride_in_1x1,
    first_stride,
    dilation=1,
    dcn_config=None
):
    blocks = []
    stride = first_stride
    # Each iteration instantiates one Bottleneck block
    for _ in range(block_count):
        blocks.append(
            transformation_module(
                in_channels,
                bottleneck_channels,
                out_channels,
                num_groups,
                stride_in_1x1,
                stride,
                dilation=dilation,
                dcn_config=dcn_config
            )
        )
        stride = 1  # only the first block uses first_stride; all later blocks use stride 1
        in_channels = out_channels
    return nn.Sequential(*blocks)

After this long read the ResNet is finally built. Don't forget that fpn and fcos_head are still to come, so keep going!
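The loop in ResNet.__init__ is easier to picture with concrete numbers. The sketch below replays only its channel and stride arithmetic in plain Python, assuming the example values annotated in the listing (NUM_GROUPS = 1, WIDTH_PER_GROUP = 64, STEM_OUT_CHANNELS = 64, RES2_OUT_CHANNELS = 256); the function name `resnet_stage_plan` is hypothetical.

```python
def resnet_stage_plan(
    stage_indices=(1, 2, 3, 4),
    num_groups=1,
    width_per_group=64,
    stem_out_channels=64,
    res2_out_channels=256,
):
    """Replay the per-stage channel and stride arithmetic of ResNet.__init__."""
    in_channels = stem_out_channels
    stage2_bottleneck_channels = num_groups * width_per_group
    plan = []
    for index in stage_indices:
        factor = 2 ** (index - 1)  # channels double with every stage
        bottleneck_channels = stage2_bottleneck_channels * factor
        out_channels = res2_out_channels * factor
        first_stride = int(index > 1) + 1  # 1 for layer1, 2 for layer2 to layer4
        plan.append(
            ("layer{}".format(index), in_channels, bottleneck_channels, out_channels, first_stride)
        )
        in_channels = out_channels  # the next stage consumes this stage's output
    return plan


for row in resnet_stage_plan():
    print(row)
```

Each row reads (name, in_channels, bottleneck_channels, out_channels, first_stride); the out_channels of layer2 to layer4 are the C3 to C5 channel counts that the FPN receives.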

fpn.py

The second part of build_resnet_fpn_p3p7_backbone builds the fpn. The relevant code is:

from . import fpn as fpn_module

fpn = fpn_module.FPN(
    in_channels_list=[
        0,  # P3 starts from C3, so stage 2 is skipped by setting its entry to 0
        in_channels_stage2 * 2,
        in_channels_stage2 * 4,
        in_channels_stage2 * 8,
    ],
    out_channels=out_channels,  # cfg.MODEL.RESNETS.BACKBONE_OUT_CHANNELS
    # conv_with_kaiming_uniform returns a function that builds the conv layer;
    # with stride=1 that conv keeps the spatial size
    conv_block=conv_with_kaiming_uniform(
        cfg.MODEL.FPN.USE_GN, cfg.MODEL.FPN.USE_RELU  # eg: False, False
    ),
    top_blocks=fpn_module.LastLevelP6P7(in_channels_p6p7, out_channels),  # eg: 256, 256
)

Here fpn_module is fcos_core/modeling/backbone/fpn.py, which contains the FPN class. The code:

# Copyright (c) Facebook, Inc. and its affiliates. All Rights Reserved.
import torch
import torch.nn.functional as F
from torch import nn

class FPN(nn.Module):
    """
    Module that adds FPN on top of a list of feature maps.
    The feature maps are currently supposed to be in increasing depth
    order, and must be consecutive
    """

    def __init__(
        self, in_channels_list, out_channels, conv_block, top_blocks=None
    ):
        """
        Arguments:
            in_channels_list (list[int]): number of channels for each feature map that
                will be fed
            out_channels (int): number of channels of the FPN representation
            top_blocks (nn.Module or None): if provided, an extra operation will
                be performed on the output of the last (smallest resolution)
                FPN output, and the result will extend the result list
        """
        super(FPN, self).__init__()
        self.inner_blocks = []
        self.layer_blocks = []
        for idx, in_channels in enumerate(in_channels_list, 1):  # idx counts from 1
            inner_block = "fpn_inner{}".format(idx)
            layer_block = "fpn_layer{}".format(idx)

            if in_channels == 0:
                continue
            inner_block_module = conv_block(in_channels, out_channels, 1)
            # Note that every layer_block_module outputs out_channels channels, e.g. 256,
            # so all fpn levels produce feature maps with the same channel count
            layer_block_module = conv_block(out_channels, out_channels, 3, 1)
            self.add_module(inner_block, inner_block_module)
            self.add_module(layer_block, layer_block_module)
            self.inner_blocks.append(inner_block)
            self.layer_blocks.append(layer_block)
        self.top_blocks = top_blocks

    def forward(self, x):
        """
        The flow through each level can be followed on my diagram further down.
        Arguments:
            x (list[Tensor]): feature maps for each feature level.
                C-series features output by the resnet body; the levels consumed are [C3, C4, C5]
        Returns:
            results (tuple[Tensor]): feature maps after FPN layers.
                They are ordered from highest resolution first.
                P-series features, i.e. [P3, P4, P5, P6, P7]
        """
        last_inner = getattr(self, self.inner_blocks[-1])(x[-1])
        results = []
        results.append(getattr(self, self.layer_blocks[-1])(last_inner))
        for feature, inner_block, layer_block in zip(
            x[:-1][::-1], self.inner_blocks[:-1][::-1], self.layer_blocks[:-1][::-1]
        ):
            if not inner_block:
                continue
            # inner_top_down = F.interpolate(last_inner, scale_factor=2, mode="nearest")
            inner_lateral = getattr(self, inner_block)(feature)
            inner_top_down = F.interpolate(
                last_inner, size=(int(inner_lateral.shape[-2]), int(inner_lateral.shape[-1])),
                mode='nearest'
            )
            last_inner = inner_lateral + inner_top_down
            results.insert(0, getattr(self, layer_block)(last_inner))

        if isinstance(self.top_blocks, LastLevelP6P7):
            last_results = self.top_blocks(x[-1], results[-1])
            results.extend(last_results)
        elif isinstance(self.top_blocks, LastLevelMaxPool):
            last_results = self.top_blocks(results[-1])
            results.extend(last_results)

        return tuple(results)

class LastLevelMaxPool(nn.Module):
    def forward(self, x):
        return [F.max_pool2d(x, 1, 2, 0)]

class LastLevelP6P7(nn.Module):
    """
    This module is used in RetinaNet to generate extra layers, P6 and P7.
    """
    def __init__(self, in_channels, out_channels):
        super(LastLevelP6P7, self).__init__()
        # Two further stride-2 convs on top of C5 or P5 produce P6 and P7
        self.p6 = nn.Conv2d(in_channels, out_channels, 3, 2, 1)
        self.p7 = nn.Conv2d(out_channels, out_channels, 3, 2, 1)
        for module in [self.p6, self.p7]:
            nn.init.kaiming_uniform_(module.weight, a=1)
            nn.init.constant_(module.bias, 0)
        self.use_P5 = in_channels == out_channels

    def forward(self, c5, p5):
        x = p5 if self.use_P5 else c5
        p6 = self.p6(x)
        p7 = self.p7(F.relu(p6))
        return [p6, p7]

Printed, it looks like this:

(fpn): FPN(
  (fpn_inner2): Conv2d(512, 256, kernel_size=(1, 1), stride=(1, 1))
  (fpn_layer2): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (fpn_inner3): Conv2d(1024, 256, kernel_size=(1, 1), stride=(1, 1))
  (fpn_layer3): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (fpn_inner4): Conv2d(2048, 256, kernel_size=(1, 1), stride=(1, 1))
  (fpn_layer4): Conv2d(256, 256, kernel_size=(3, 3), stride=(1, 1), padding=(1, 1))
  (top_blocks): LastLevelP6P7(
    (p6): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
    (p7): Conv2d(256, 256, kernel_size=(3, 3), stride=(2, 2), padding=(1, 1))
  )
)

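The code uses several Python list methods: results.append, results.insert and results.extend; see the linked article for details. The figure below is the FPN forward flow chart I drew from the code; compare it with forward to help remember the flow: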

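One point puzzled me here: I annotated x in the FPN forward as [C3, C4, C5], but by the analysis above shouldn't the body output [C2, C3, C4, C5]? Reading the code again, it does: ResNet50FPNStagesTo5 sets return_features=True for all four stages, so x has four entries. The counts still line up because in_channels_list starts with 0, so FPN registers only three inner/layer blocks, and the zip in forward stops at the shortest sequence: it pairs C4 and C3 with fpn_inner3 and fpn_inner2 and never reaches C2. This is still worth confirming by actually running the code.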
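The question of whether a four-entry input can line up with three FPN blocks can be explored without PyTorch. The sketch below replays only the indexing of FPN.forward on strings, assuming the body returns four feature maps (all return_features flags are True in ResNet50FPNStagesTo5) and that three blocks are registered (the leading 0 in in_channels_list is skipped); the helper name `fpn_pairing` is made up for illustration.

```python
def fpn_pairing(features, inner_blocks):
    """Replay the indexing of FPN.forward on names instead of tensors."""
    # The coarsest level is handled first, outside the loop.
    top = (features[-1], inner_blocks[-1])
    # zip stops at the shortest input, so surplus features are silently dropped.
    pairs = list(zip(features[:-1][::-1], inner_blocks[:-1][::-1]))
    return top, pairs


features = ["C2", "C3", "C4", "C5"]
inner_blocks = ["fpn_inner2", "fpn_inner3", "fpn_inner4"]

top, pairs = fpn_pairing(features, inner_blocks)
used_features = [top[0]] + [feature for feature, _ in pairs]

print(top)
print(pairs)
print(used_features)
```

Under these assumptions C2 never enters a lateral connection, which is consistent with the FPN producing exactly P3, P4 and P5 before the extra P6 and P7 levels are appended.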

At this point the fpn returns the per-level features (P3, P4, P5, P6, P7) as a tuple, ready to be fed to the tower and head that follow.
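To get a feel for the five returned levels, the following sketch computes their spatial sizes with the usual convolution output formula, floor((n + 2p - k) / s) + 1. It assumes the layer parameters shown in the listings (7x7 stride-2 stem conv, 3x3 stride-2 max pool, stride 2 on the first 1x1 conv of layer2 to layer4, and 3x3 stride-2 convs for P6 and P7); the 800x1024 input size and the function names are arbitrary choices for illustration.

```python
def conv_out(size, kernel, stride, padding):
    """Output length of a conv or pool along one dimension."""
    return (size + 2 * padding - kernel) // stride + 1


def pyramid_sizes(height, width):
    def both(hw, kernel, stride, padding):
        return (conv_out(hw[0], kernel, stride, padding),
                conv_out(hw[1], kernel, stride, padding))

    hw = both((height, width), 7, 2, 3)  # stem conv1
    hw = both(hw, 3, 2, 1)               # stem max pool; layer1 (C2) keeps this size
    sizes = {}
    for name in ("P3", "P4", "P5"):
        # layer2 to layer4 each halve the size once; the FPN convs keep it.
        hw = both(hw, 1, 2, 0)
        sizes[name] = hw
    for name in ("P6", "P7"):
        hw = both(hw, 3, 2, 1)           # LastLevelP6P7 convs
        sizes[name] = hw
    return sizes


sizes = pyramid_sizes(800, 1024)
for level, hw in sizes.items():
    print(level, hw)
```

For an input whose sides are divisible by 32, the levels P3 to P5 come out at exactly 1/8, 1/16 and 1/32 of the input; P6 and P7 round up when the size is odd.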

fcos_head

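When GeneralizedRCNN is initialized there is also this line: self.rpn = build_rpn(cfg, self.backbone.out_channels). The name was simply never changed; what actually gets built is the fcos_head, returned by build_fcos(cfg, in_channels), whose code is in fcos_core/modeling/rpn/fcos/fcos.py.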

This post is getting too long, so I will stop here and continue in the next one.

Next post

FCOS Official Code Walkthrough (2): Architecture (head)

References

MaskrcnnBenchmark source code analysis: model definition (modeling), backbone