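Based on the Android 6.0 source code, this article analyzes the camera preview flow under Camera API2.0. The earlier article "Android 6.0 source analysis: the initialization flow under Camera API2.0" covered in detail how the built-in Camera2 app opens (initializes) the camera. During open, a PreviewCallback is defined but was not analyzed there: the open process starts the preview automatically by calling OneCameraImpl's startPreview method. startPreview is where capturing preview frames and drawing them on screen begins; the preview only really starts once it is given a Surface to render into.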

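Articles in this Camera2 analysis series:

- Android 6.0 source analysis: introduction to Camera API2.0
- Android 6.0 source analysis: the Camera2 HAL
- Android 6.0 source analysis: the initialization flow under Camera API2.0
- Android 6.0 source analysis: the preview flow under Camera API2.0
- Android 6.0 source analysis: the capture flow under Camera API2.0
- Android 6.0 source analysis: the video flow under Camera API2.0
- Applications of Camera API2.0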

1. Camera2 preview: the application-layer flow

Every preview flow starts from startPreview, so let's look at its code:

```java
// OneCameraImpl.java
@Override
public void startPreview(Surface previewSurface, CaptureReadyCallback listener) {
    mPreviewSurface = previewSurface;
    // Set up the preview environment from the Surface and the CaptureReadyCallback
    setupAsync(mPreviewSurface, listener);
}
```

An important callback here is CaptureReadyCallback. First, let's analyze setupAsync:

```java
// OneCameraImpl.java
private void setupAsync(final Surface previewSurface, final CaptureReadyCallback listener) {
    mCameraHandler.post(new Runnable() {
        @Override
        public void run() {
            // Set up the preview environment
            setup(previewSurface, listener);
        }
    });
}
```
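Handler.post() does not start a thread of its own; it queues the Runnable on the Looper of the thread the Handler is bound to. The following minimal C++ sketch models only that queue-and-run idea. `LooperThread` is a hypothetical class, not an Android or AOSP API, and it ignores message timing, `Message` objects and quitting semantics beyond draining the queue.

```cpp
#include <cassert>
#include <condition_variable>
#include <functional>
#include <mutex>
#include <queue>
#include <thread>

// Hypothetical Looper/Handler analogue: one long-lived worker thread drains a
// FIFO of tasks. post() only enqueues; it never spawns a thread, so every task
// runs on the looper's own thread, in posting order.
class LooperThread {
public:
    LooperThread() : worker_([this] { loop(); }) {}

    ~LooperThread() {
        {
            std::lock_guard<std::mutex> lock(mu_);
            quitting_ = true;
        }
        cv_.notify_one();
        worker_.join();  // tasks still queued are run before the thread exits
    }

    // Similar in spirit to Handler.post(Runnable).
    void post(std::function<void()> task) {
        {
            std::lock_guard<std::mutex> lock(mu_);
            tasks_.push(std::move(task));
        }
        cv_.notify_one();
    }

    std::thread::id threadId() const { return worker_.get_id(); }

private:
    void loop() {
        for (;;) {
            std::function<void()> task;
            {
                std::unique_lock<std::mutex> lock(mu_);
                cv_.wait(lock, [this] { return quitting_ || !tasks_.empty(); });
                if (tasks_.empty()) return;  // quitting and nothing left to run
                task = std::move(tasks_.front());
                tasks_.pop();
            }
            task();  // executed on the worker thread, outside the lock
        }
    }

    std::mutex mu_;
    std::condition_variable cv_;
    std::queue<std::function<void()>> tasks_;
    bool quitting_ = false;
    std::thread worker_;  // declared last so the members above exist before loop() runs
};
```

Whichever thread calls post(), the task runs on the single worker thread, so work posted through one handler (such as setup() here) is serialized on that handler's thread.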

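Here mCameraHandler posts a Runnable. post() does not create a new thread: the Runnable is queued and its run method executes on the thread that owns the Handler's Looper, that is, the camera handler's thread, which is not necessarily the UI thread. Next, let's analyze the setup method: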

```java
// OneCameraImpl.java
private void setup(Surface previewSurface, final CaptureReadyCallback listener) {
    try {
        if (mCaptureSession != null) {
            mCaptureSession.abortCaptures();
            mCaptureSession = null;
        }
        List<Surface> outputSurfaces = new ArrayList<Surface>();
        outputSurfaces.add(previewSurface);
        outputSurfaces.add(mCaptureImageReader.getSurface());
        // Create a CaptureSession through which preview requests are sent to the camera device
        mDevice.createCaptureSession(outputSurfaces, new CameraCaptureSession.StateCallback() {
            @Override
            public void onConfigureFailed(CameraCaptureSession session) {
                // Configuration failed: report it through CaptureReadyCallback.onSetupFailed
                listener.onSetupFailed();
            }

            @Override
            public void onConfigured(CameraCaptureSession session) {
                mCaptureSession = session;
                mAFRegions = ZERO_WEIGHT_3A_REGION;
                mAERegions = ZERO_WEIGHT_3A_REGION;
                mZoomValue = 1f;
                mCropRegion = cropRegionForZoom(mZoomValue);
                // Start the preview through repeatingPreview
                boolean success = repeatingPreview(null);
                if (success) {
                    // Preview started: onReadyForCapture signals that capturing is possible
                    listener.onReadyForCapture();
                } else {
                    // Preview failed to start: onSetupFailed signals that setup failed
                    listener.onSetupFailed();
                }
            }

            @Override
            public void onClosed(CameraCaptureSession session) {
                super.onClosed(session);
            }
        }, mCameraHandler);
    } catch (CameraAccessException ex) {
        Log.e(TAG, "Could not set up capture session", ex);
        listener.onSetupFailed();
    }
}
```
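Whatever happens, setup() reports exactly one CaptureReadyCallback method per attempt. The decision table can be made explicit with a small hypothetical model; `SessionEvent`, `RecordingListener` and `setupOutcome` are illustrative names, and the model flattens the real asynchronous flow (where the StateCallback runs on mCameraHandler) into one synchronous function.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical outcome of createCaptureSession() as seen by the StateCallback.
enum class SessionEvent { kConfigured, kConfigureFailed };

// Records which CaptureReadyCallback methods fired, in order.
struct RecordingListener {
    std::vector<std::string> calls;
    void onReadyForCapture() { calls.push_back("onReadyForCapture"); }
    void onSetupFailed() { calls.push_back("onSetupFailed"); }
};

// accessOk == false models createCaptureSession() throwing CameraAccessException;
// previewOk models the boolean returned by repeatingPreview().
void setupOutcome(bool accessOk, SessionEvent event, bool previewOk,
                  RecordingListener& listener) {
    const std::size_t before = listener.calls.size();
    if (!accessOk || event == SessionEvent::kConfigureFailed) {
        // catch (CameraAccessException) or onConfigureFailed()
        listener.onSetupFailed();
    } else if (previewOk) {
        // onConfigured() and the repeating preview request was sent
        listener.onReadyForCapture();
    } else {
        // onConfigured() but repeatingPreview() returned false
        listener.onSetupFailed();
    }
    // Every path reports exactly one outcome to the listener.
    assert(listener.calls.size() == before + 1);
}
```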

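First, createCaptureSession is called on the device to create a session, with a CameraCaptureSession.StateCallback as the session state callback. When the session is configured successfully, onConfigured() is called: it first calls repeatingPreview to start the preview, then, depending on the result, uses the CaptureReadyCallback defined earlier to tell the caller whether it can now capture. Let's start with repeatingPreview: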

```java
// OneCameraImpl.java
private boolean repeatingPreview(Object tag) {
    try {
        // Create a preview CaptureRequest through the CameraDevice
        CaptureRequest.Builder builder = mDevice.createCaptureRequest(
                CameraDevice.TEMPLATE_PREVIEW);
        // Add the preview Surface as the request's target
        builder.addTarget(mPreviewSurface);
        // Use the automatic 3A routines (CONTROL_MODE_AUTO)
        builder.set(CaptureRequest.CONTROL_MODE, CameraMetadata.CONTROL_MODE_AUTO);
        addBaselineCaptureKeysToRequest(builder);
        // Send the request as a repeating request through the session; mCaptureCallback
        // is the common listener for preview frame metadata
        mCaptureSession.setRepeatingRequest(builder.build(), mCaptureCallback, mCameraHandler);
        Log.v(TAG, String.format("Sent repeating Preview request, zoom = %.2f", mZoomValue));
        return true;
    } catch (CameraAccessException ex) {
        Log.e(TAG, "Could not access camera setting up preview.", ex);
        return false;
    }
}
```

It first calls CameraDeviceImpl's createCaptureRequest to create a CaptureRequest from the TEMPLATE_PREVIEW template, then sends the request through CameraCaptureSessionImpl's setRepeatingRequest method:

```java
// CameraCaptureSessionImpl.java
@Override
public synchronized int setRepeatingRequest(CaptureRequest request, CaptureCallback callback,
        Handler handler) throws CameraAccessException {
    if (request == null) {
        throw new IllegalArgumentException("request must not be null");
    } else if (request.isReprocess()) {
        throw new IllegalArgumentException("repeating reprocess requests are not supported");
    }
    checkNotClosed();
    handler = checkHandler(handler, callback);
    ...
    // Add the request to the pending sequences
    return addPendingSequence(mDeviceImpl.setRepeatingRequest(request,
            createCaptureCallbackProxy(handler, callback), mDeviceHandler));
}
```

This completes the request path of preview in the application layer. Now for the result handling: if the preview starts successfully, CaptureReadyCallback's onReadyForCapture method is called. Let's look at the CaptureReadyCallback:

```java
// CaptureModule.java
new CaptureReadyCallback() {
    @Override
    public void onSetupFailed() {
        mCameraOpenCloseLock.release();
        Log.e(TAG, "Could not set up preview.");
        mMainThread.execute(new Runnable() {
            @Override
            public void run() {
                if (mCamera == null) {
                    Log.d(TAG, "Camera closed, aborting.");
                    return;
                }
                mCamera.close();
                mCamera = null;
            }
        });
    }

    @Override
    public void onReadyForCapture() {
        mCameraOpenCloseLock.release();
        mMainThread.execute(new Runnable() {
            @Override
            public void run() {
                Log.d(TAG, "Ready for capture.");
                if (mCamera == null) {
                    Log.d(TAG, "Camera closed, aborting.");
                    return;
                }
                onPreviewStarted();
                onReadyStateChanged(true);
                mCamera.setReadyStateChangedListener(CaptureModule.this);
                mUI.initializeZoom(mCamera.getMaxZoom());
                mCamera.setFocusStateListener(CaptureModule.this);
            }
        });
    }
}
```

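As analyzed above, once the preview is up, onReadyForCapture is called. It switches to the main thread, reports the state change (onPreviewStarted() and onReadyStateChanged(true)), and registers CaptureModule as the camera's ReadyStateChangedListener (and focus state listener). The listener method is:

```java
// CaptureModule.java
@Override
public void onReadyStateChanged(boolean readyForCapture) {
    if (readyForCapture) {
        mAppController.getCameraAppUI().enableModeOptions();
    }
    mAppController.setShutterEnabled(readyForCapture);
}
```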

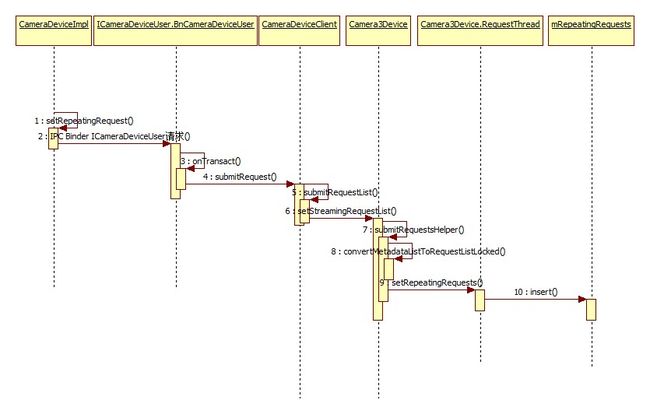

如代码所示,当其状态变成准备好拍照,则将会调用CameraActivity的setShutterEnabled方法,即使能快门按键,此时也就是说预览成功结束,可以按快门进行拍照了,所以,到这里,应用层的preview的流程基本分析完毕,下图是应用层的关键调用的流程时序图:

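2. Camera2 preview: the native-layer flow

Analyzing the native preview code took considerable effort, so corrections are welcome wherever the analysis is wrong. At the end of section 1, CameraDeviceImpl's setRepeatingRequest method is called to submit the request. As discussed in "Android 6.0 source analysis: introduction to Camera API2.0", the Java side of the Camera2 framework communicates over Binder IPC through ICameraDeviceUser, so request submission is handled by the native BnCameraDeviceUser::onTransact method: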

```cpp
// ICameraDeviceUser.cpp
status_t BnCameraDeviceUser::onTransact(uint32_t code, const Parcel& data, Parcel* reply,
        uint32_t flags) {
    switch (code) {
        ...
        // Request submission
        case SUBMIT_REQUEST: {
            CHECK_INTERFACE(ICameraDeviceUser, data, reply);

            // arg0 = request
            sp<CaptureRequest> request;
            if (data.readInt32() != 0) {
                request = new CaptureRequest();
                request->readFromParcel(const_cast<Parcel*>(&data));
            }

            // arg1 = streaming (bool)
            bool repeating = data.readInt32();

            // return code: requestId (int32)
            reply->writeNoException();
            int64_t lastFrameNumber = -1;
            // Call submitRequest on the object implementing BnCameraDeviceUser
            // and write its return value into the reply
            reply->writeInt32(submitRequest(request, repeating, &lastFrameNumber));
            reply->writeInt32(1);
            reply->writeInt64(lastFrameNumber);

            return NO_ERROR;
        } break;
        ...
    }
}
```
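The SUBMIT_REQUEST case only works because both sides read and write the Parcel in exactly the same order. The sketch below models the reply layout with a hypothetical `ToyParcel` (not android::Parcel). It assumes that writeNoException() writes a single int32 0, and that the extra `1` written before lastFrameNumber is the non-null marker the Java-side AIDL proxy reads before an `out` parameter; both are assumptions about the Binder/AIDL conventions, not something shown in the excerpt above.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <stdexcept>
#include <vector>

// Hypothetical stand-in for android::Parcel: values are appended to and read
// back from a flat byte buffer in strictly the same order.
class ToyParcel {
public:
    void writeInt32(int32_t v) { append(&v, sizeof v); }
    void writeInt64(int64_t v) { append(&v, sizeof v); }
    int32_t readInt32() { int32_t v; take(&v, sizeof v); return v; }
    int64_t readInt64() { int64_t v; take(&v, sizeof v); return v; }

private:
    void append(const void* p, std::size_t n) {
        const auto* b = static_cast<const uint8_t*>(p);
        buf_.insert(buf_.end(), b, b + n);
    }
    void take(void* p, std::size_t n) {
        if (pos_ + n > buf_.size()) throw std::out_of_range("read past end of parcel");
        std::memcpy(p, buf_.data() + pos_, n);
        pos_ += n;
    }
    std::vector<uint8_t> buf_;
    std::size_t pos_ = 0;
};

// Server side: the reply layout written by the SUBMIT_REQUEST case.
void writeSubmitReply(ToyParcel& reply, int32_t requestIdOrError, int64_t lastFrameNumber) {
    reply.writeInt32(0);                 // writeNoException(): assumed to be int32 0
    reply.writeInt32(requestIdOrError);  // return value of submitRequest()
    reply.writeInt32(1);                 // assumed non-null marker for the out parameter
    reply.writeInt64(lastFrameNumber);   // the out parameter itself
}

struct SubmitReply {
    int32_t requestId;
    int64_t lastFrameNumber;
};

// Client side: reads the fields back in exactly the same order.
SubmitReply readSubmitReply(ToyParcel& reply) {
    int32_t exceptionCode = reply.readInt32();
    assert(exceptionCode == 0 && "this sketch does not model exceptions");
    (void)exceptionCode;  // only checked in debug builds
    SubmitReply out{};
    out.requestId = reply.readInt32();
    if (reply.readInt32() != 0) {        // out parameter present
        out.lastFrameNumber = reply.readInt64();
    }
    return out;
}
```

Swapping any two writes on one side without the other would silently misinterpret the reply, which is why hand-written native stubs and generated Java proxies must agree field by field.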

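CameraDeviceClientBase inherits from BnCameraDeviceUser, so it is the server-side (Bn) implementation of the Binder interface; despite its name, it is not the client in the Binder sense (the camera service keeps one such object per connected client app). onTransact therefore ends up in CameraDeviceClient::submitRequest, CameraDeviceClient being derived from CameraDeviceClientBase. The Binder IPC mechanism itself is not covered here; please refer to other material: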

```cpp
// CameraDeviceClient.cpp
status_t CameraDeviceClient::submitRequest(sp<CaptureRequest> request, bool streaming,
        /*out*/int64_t* lastFrameNumber) {
    List<sp<CaptureRequest> > requestList;
    requestList.push_back(request);
    return submitRequestList(requestList, streaming, lastFrameNumber);
}
```

A simple wrapper. Next, submitRequestList:

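```cpp
// CameraDeviceClient.cpp
status_t CameraDeviceClient::submitRequestList(List<sp<CaptureRequest> > requests,
        bool streaming, int64_t* lastFrameNumber) {
    ...
    // List of per-request metadata
    List<const CameraMetadata> metadataRequestList;
    ...
    for (List<sp<CaptureRequest> >::iterator it = requests.begin(); it != requests.end(); ++it) {
        sp<CaptureRequest> request = *it;
        ...
        // Copy the request's metadata
        CameraMetadata metadata(request->mMetadata);
        ...
        // Reserve room for one stream ID per target Surface
        Vector<int32_t> outputStreamIds;
        outputStreamIds.setCapacity(request->mSurfaceList.size());
        // Map each target Surface to the ID of the stream configured for it
        for (size_t i = 0; i < request->mSurfaceList.size(); ++i) {
            sp<Surface> surface = request->mSurfaceList[i];
            if (surface == 0) continue;

            sp<IGraphicBufferProducer> gbp = surface->getIGraphicBufferProducer();
            int idx = mStreamMap.indexOfKey(IInterface::asBinder(gbp));
            ...
            int streamId = mStreamMap.valueAt(idx);
            outputStreamIds.push_back(streamId);
        }
        // Record the output streams (and, for reprocessing, the input stream) in the metadata
        metadata.update(ANDROID_REQUEST_OUTPUT_STREAMS, &outputStreamIds[0],
                outputStreamIds.size());
        if (request->mIsReprocess) {
            metadata.update(ANDROID_REQUEST_INPUT_STREAMS, &mInputStream.id, 1);
        }
        metadata.update(ANDROID_REQUEST_ID, &requestId, /*size*/1);
        loopCounter++; // loopCounter starts from 1
        // Append to the metadata list
        metadataRequestList.push_back(metadata);
    }
    mRequestIdCounter++;

    if (streaming) {
        // Preview takes this path
        res = mDevice->setStreamingRequestList(metadataRequestList, lastFrameNumber);
        if (res != OK) {
            ...
        } else {
            mStreamingRequestList.push_back(requestId);
        }
    } else {
        // Capture and other one-shot requests take this path
        res = mDevice->captureList(metadataRequestList, lastFrameNumber);
        if (res != OK) {
            ...
        }
    }

    if (res == OK) {
        return requestId;
    }
    return res;
}
```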
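The inner loop turns each target Surface into a stream ID by looking up its IGraphicBufferProducer binder in mStreamMap. A hypothetical model follows, with integers standing in for binder objects and 0 for a null Surface. What happens for an unknown Surface is an assumption (rejecting the whole request), because that branch is elided (`...`) in the excerpt.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <optional>
#include <vector>

// Opaque key standing in for IInterface::asBinder(gbp); 0 models a null Surface.
using BinderKey = std::uintptr_t;

// Returns the IDs to store under ANDROID_REQUEST_OUTPUT_STREAMS, or std::nullopt
// when a Surface was never configured as a stream (assumed to reject the request).
std::optional<std::vector<int32_t>> resolveOutputStreams(
        const std::vector<BinderKey>& surfaces,
        const std::map<BinderKey, int32_t>& streamMap) {
    std::vector<int32_t> outputStreamIds;
    outputStreamIds.reserve(surfaces.size());   // mirrors setCapacity()
    for (BinderKey surface : surfaces) {
        if (surface == 0) continue;             // "if (surface == 0) continue;"
        auto it = streamMap.find(surface);      // mStreamMap.indexOfKey(...)
        if (it == streamMap.end()) return std::nullopt;
        outputStreamIds.push_back(it->second);  // mStreamMap.valueAt(idx)
    }
    return outputStreamIds;
}
```

For the preview request built in repeatingPreview, the list holds a single Surface, so the metadata ends up naming just the preview stream's ID.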

Both setStreamingRequestList and captureList call submitRequestsHelper; the only difference is the repeating argument, true for the former and false for the latter. Preview uses setStreamingRequestList, and under API2.0 the device implementation is Camera3Device, so let's look at its submitRequestsHelper:

```cpp
// Camera3Device.cpp
status_t Camera3Device::submitRequestsHelper(const List<const CameraMetadata> &requests,
        bool repeating, /*out*/int64_t *lastFrameNumber) {
    ...
    RequestList requestList;
    // Builds the CaptureRequest objects; when the streams need (re)configuration this
    // calls configureStreamsLocked(), which is where the HAL sets up the captureResultCb
    // callback analyzed in section 3
    res = convertMetadataListToRequestListLocked(requests, /*out*/&requestList);
    ...
    if (repeating) {
        // Look familiar? The name mirrors setRepeatingRequest in the app-layer API
        res = mRequestThread->setRepeatingRequests(requestList, lastFrameNumber);
    } else {
        // Not repeating (repeating == false): submit the requests once
        res = mRequestThread->queueRequestList(requestList, lastFrameNumber);
    }
    ...
    return res;
}
```

As the code shows, Camera3Device owns a RequestThread and hands it the requests sent down from the application layer through setRepeatingRequests or queueRequestList. Let's continue with setRepeatingRequests:

```cpp
// Camera3Device.cpp
status_t Camera3Device::RequestThread::setRepeatingRequests(const RequestList &requests,
        /*out*/int64_t *lastFrameNumber) {
    Mutex::Autolock l(mRequestLock);
    if (lastFrameNumber != NULL) {
        *lastFrameNumber = mRepeatingLastFrameNumber;
    }
    mRepeatingRequests.clear();
    // Replace the contents of the mRepeatingRequests list with the new requests
    mRepeatingRequests.insert(mRepeatingRequests.begin(),
            requests.begin(), requests.end());

    unpauseForNewRequests();

    mRepeatingLastFrameNumber = NO_IN_FLIGHT_REPEATING_FRAMES;
    return OK;
}
```
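setRepeatingRequests only replaces the repeating list; the RequestThread loop decides what is sent to the HAL next. The model below assumes the behavior of AOSP's RequestThread::waitForNextRequestLocked(), which is not shown here: queued one-shot requests are served first, and when that queue is empty the entire repeating list is enqueued again. `RequestPicker` is a hypothetical single-threaded class; the real thread blocks on a condition with a timeout instead of returning an empty result.

```cpp
#include <cassert>
#include <list>
#include <optional>
#include <string>

// Hypothetical model of how the request thread picks its next request.
class RequestPicker {
public:
    // setRepeatingRequests(): replaces (does not append to) the repeating list.
    void setRepeating(std::list<std::string> requests) {
        repeating_ = std::move(requests);
    }

    // queueRequestList(): one-shot requests, e.g. a still capture.
    void queue(const std::string& request) { queue_.push_back(request); }

    // One iteration of the (assumed) selection logic.
    std::optional<std::string> next() {
        if (queue_.empty() && !repeating_.empty()) {
            // Re-enqueue the whole repeating list so its requests stay in sequence.
            queue_.insert(queue_.end(), repeating_.begin(), repeating_.end());
        }
        if (queue_.empty()) return std::nullopt;  // the real thread would wait here
        std::string r = queue_.front();
        queue_.pop_front();
        return r;
    }

private:
    std::list<std::string> queue_;
    std::list<std::string> repeating_;
};
```

Under this model a single setRepeatingRequest call keeps preview frames flowing indefinitely, and a one-shot capture slots in between preview frames without cancelling the repeating preview.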

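This essentially concludes the native-layer part of the preview flow; from here on, the work is handed over to the camera HAL. The native-layer call sequence diagram is shown below: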

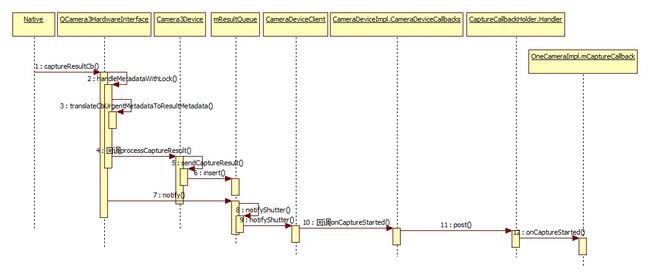

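3. Camera2 preview: the camera HAL-layer flow

This section does not revisit HAL initialization or configuration; it only follows how frame metadata is handled in flows such as preview. For the HAL itself, see "Android 6.0 source analysis: the Camera2 HAL". In section 2, when submitRequestsHelper calls convertMetadataListToRequestListLocked, CaptureRequest objects are created and configureStreamsLocked is called to configure the streams, which is where the captureResultCb callback gets registered. Therefore, once a request has been submitted from the native layer, the HAL (here Qualcomm's QCamera3HWI) reports results through captureResultCb. Let's analyze captureResultCb first: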

```cpp
// QCamera3HWI.cpp
void QCamera3HardwareInterface::captureResultCb(mm_camera_super_buf_t *metadata_buf,
        camera3_stream_buffer_t *buffer, uint32_t frame_number)
{
    if (metadata_buf) {
        if (mBatchSize) {
            // Batch mode; internally this also calls handleMetadataWithLock in a loop
            handleBatchMetadata(metadata_buf, true /* free_and_bufdone_meta_buf */);
        } else { /* mBatchSize = 0 */
            pthread_mutex_lock(&mMutex);
            // Handle the metadata directly
            handleMetadataWithLock(metadata_buf, true /* free_and_bufdone_meta_buf */);
            pthread_mutex_unlock(&mMutex);
        }
    } else {
        // No metadata: the callback returns an image buffer
        pthread_mutex_lock(&mMutex);
        handleBufferWithLock(buffer, frame_number);
        pthread_mutex_unlock(&mMutex);
    }
    return;
}
```

Metadata is either processed in batch mode or handled directly, but batch processing also ends up calling handleMetadataWithLock in a loop. (When metadata_buf is NULL, the callback carries an image buffer instead, which goes to handleBufferWithLock.) Here is handleMetadataWithLock:

```cpp
// QCamera3HWI.cpp
void QCamera3HardwareInterface::handleMetadataWithLock(mm_camera_super_buf_t *metadata_buf,
        bool free_and_bufdone_meta_buf) {
    ...
    // Partial result on process_capture_result for timestamp
    if (urgent_frame_number_valid) {
        ...
        for (List<PendingRequestInfo>::iterator i = mPendingRequestsList.begin();
                i != mPendingRequestsList.end(); i++) {
            ...
            if (i->frame_number == urgent_frame_number && i->bUrgentReceived == 0) {
                camera3_capture_result_t result;
                memset(&result, 0, sizeof(camera3_capture_result_t));

                i->partial_result_cnt++;
                i->bUrgentReceived = 1;
                // Extract the 3A (urgent) metadata
                result.result = translateCbUrgentMetadataToResultMetadata(metadata);
                ...
                // Deliver this partial capture result to the framework
                mCallbackOps->process_capture_result(mCallbackOps, &result);
                // Free the camera_metadata_t
                free_camera_metadata((camera_metadata_t *)result.result);
                break;
            }
        }
    }
    ...
    for (List<PendingRequestInfo>::iterator i = mPendingRequestsList.begin();
            i != mPendingRequestsList.end() && i->frame_number <= frame_number;) {
        camera3_capture_result_t result;
        memset(&result, 0, sizeof(camera3_capture_result_t));
        ...
        if (i->frame_number < frame_number) {
            // Older pending frame without metadata of its own: clear the message struct
            camera3_notify_msg_t notify_msg;
            memset(&notify_msg, 0, sizeof(camera3_notify_msg_t));
            // Message type
            notify_msg.type = CAMERA3_MSG_SHUTTER;
            notify_msg.message.shutter.frame_number = i->frame_number;
            // Estimate the shutter time by stepping back ~33 ms per frame
            notify_msg.message.shutter.timestamp = (uint64_t)capture_time -
                    (urgent_frame_number - i->frame_number) * NSEC_PER_33MSEC;
            // Send the CAMERA3_MSG_SHUTTER notification up to the framework
            mCallbackOps->notify(mCallbackOps, &notify_msg);
            ...
            CameraMetadata dummyMetadata;
            // Fill in a minimal result: timestamp and request ID
            dummyMetadata.update(ANDROID_SENSOR_TIMESTAMP,
                    &i->timestamp, 1);
            dummyMetadata.update(ANDROID_REQUEST_ID,
                    &(i->request_id), 1);
            // release() hands the underlying camera_metadata_t over to the result
            result.result = dummyMetadata.release();
        } else {
            camera3_notify_msg_t notify_msg;
            memset(&notify_msg, 0, sizeof(camera3_notify_msg_t));

            // Send shutter notify to frameworks
            notify_msg.type = CAMERA3_MSG_SHUTTER;
            ...
            // Translate the HAL metadata into a framework result
            result.result = translateFromHalMetadata(metadata,
                    i->timestamp, i->request_id, i->jpegMetadata, i->pipeline_depth,
                    i->capture_intent);
            saveExifParams(metadata);

            if (i->blob_request) {
                ...
                if (enabled && metadata->is_tuning_params_valid) {
                    // Dump the tuning metadata to a file
                    dumpMetadataToFile(metadata->tuning_params, mMetaFrameCount, enabled,
                            "Snapshot", frame_number);
                }
                mPictureChannel->queueReprocMetadata(metadata_buf);
            } else {
                // Return metadata buffer
                if (free_and_bufdone_meta_buf) {
                    mMetadataChannel->bufDone(metadata_buf);
                    free(metadata_buf);
                }
            }
        }
        ...
    }
}
```
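For a pending frame older than the one whose metadata just arrived, the HAL fabricates a shutter timestamp by stepping back one frame interval per frame of distance. The arithmetic is restated below as a function. The value 33,000,000 ns for NSEC_PER_33MSEC is an assumption based on the macro's name, `estimateShutterTimestamp` is a hypothetical helper, and, as in the excerpt, the distance is measured from urgent_frame_number.

```cpp
#include <cassert>
#include <cstdint>

// Assumed value: 33 ms in nanoseconds (about one frame interval at 30 fps).
constexpr int64_t kNsecPer33Msec = 33000000LL;

// capture_time - (urgent_frame_number - frame_number) * NSEC_PER_33MSEC
uint64_t estimateShutterTimestamp(int64_t captureTimeNs,
                                  uint32_t urgentFrameNumber,
                                  uint32_t pendingFrameNumber) {
    assert(pendingFrameNumber <= urgentFrameNumber);
    // Widen before subtracting so the arithmetic happens in signed 64-bit.
    const int64_t framesBack =
            static_cast<int64_t>(urgentFrameNumber) - pendingFrameNumber;
    return static_cast<uint64_t>(captureTimeNs - framesBack * kNsecPer33Msec);
}
```

For example, with a capture time of 1,000,000,000 ns and a pending frame two frames older than the urgent one, the estimate is 1,000,000,000 - 2 * 33,000,000 = 934,000,000 ns. The estimate is only plausible for roughly 30 fps streams, which is presumably why these frames get a dummy result rather than real metadata.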

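It first calls the callback's process_capture_result to deliver the (3A) partial capture result, then calls the callback's notify to send a CAMERA3_MSG_SHUTTER message. process_capture_result is ultimately implemented by Camera3Device's processCaptureResult method, so let's start with processCaptureResult: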

```cpp
// Camera3Device.cpp
void Camera3Device::processCaptureResult(const camera3_capture_result *result) {
    ...
    // For HAL3.2+, if the HAL does not support partial results, it must set
    // partial_result to 1 whenever the result contains metadata
    ...
    {
        Mutex::Autolock l(mInFlightLock);
        ssize_t idx = mInFlightMap.indexOfKey(frameNumber);
        ...
        InFlightRequest &request = mInFlightMap.editValueAt(idx);
        if (result->partial_result != 0)
            request.resultExtras.partialResultCount = result->partial_result;

        // Check whether this result carries only partial metadata
        if (mUsePartialResult && result->result != NULL) {
            if (mDeviceVersion >= CAMERA_DEVICE_API_VERSION_3_2) { // HAL 3.2 or later
                if (result->partial_result > mNumPartialResults || result->partial_result < 1) {
                    // Log the error
                    return;
                }
                isPartialResult = (result->partial_result < mNumPartialResults);
                if (isPartialResult) {
                    // Accumulate the partial result in the request's collected result
                    request.partialResult.collectedResult.append(result->result);
                }
            } else { // before HAL 3.2
                ...
            }

            if (isPartialResult) {
                // Fire off a 3A-only result if possible
                if (!request.partialResult.haveSent3A) {
                    request.partialResult.haveSent3A = processPartial3AResult(frameNumber,
                            request.partialResult.collectedResult, request.resultExtras);
                }
            }
        }
        ...
        if (result->result != NULL && !isPartialResult) {
            if (shutterTimestamp == 0) {
                // Shutter not seen yet: hold the final metadata until notifyShutter()
                request.pendingMetadata = result->result;
                request.partialResult.collectedResult = collectedPartialResult;
            } else {
                CameraMetadata metadata;
                metadata = result->result;
                // Send the capture result
                sendCaptureResult(metadata, request.resultExtras, collectedPartialResult,
                        frameNumber, hasInputBufferInRequest, request.aeTriggerCancelOverride);
            }
        }
        // Remove the in-flight request if everything for it has now been handled
        removeInFlightRequestIfReadyLocked(idx);
    } // scope for mInFlightLock
    ...
}
```
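The interplay between partial results and the shutter notification can be summarized with a hypothetical model of one in-flight request. It keeps only the logic visible in the excerpt: partial indices must lie in [1, numPartialResults], earlier partials are accumulated, and the final result is either sent at once or parked until the shutter arrives. The early 3A-only result (processPartial3AResult) and buffer handling are not modeled, and metadata is reduced to a string-to-integer map; `InFlightModel` and its members are illustrative names.

```cpp
#include <cassert>
#include <cstdint>
#include <map>
#include <string>
#include <vector>

using Metadata = std::map<std::string, int64_t>;

// Hypothetical model of one Camera3Device InFlightRequest.
class InFlightModel {
public:
    explicit InFlightModel(uint32_t numPartialResults) : numPartial_(numPartialResults) {
        assert(numPartialResults >= 1);
    }

    // processCaptureResult(): returns false for an out-of-range partial index.
    bool onResult(uint32_t partialResult, const Metadata& md) {
        if (partialResult < 1 || partialResult > numPartial_) return false;
        if (partialResult < numPartial_) {           // isPartialResult
            collected_.insert(md.begin(), md.end());  // collectedResult.append()
            return true;
        }
        if (shutterTimestamp_ == 0) {                 // shutter not seen yet
            pending_ = md;                            // request.pendingMetadata
            havePending_ = true;
        } else {
            send(md);
        }
        return true;
    }

    // notifyShutter(): record the timestamp, then flush any parked final result.
    void onShutter(int64_t timestampNs) {
        shutterTimestamp_ = timestampNs;
        if (havePending_) {
            send(pending_);
            havePending_ = false;
        }
    }

    const std::vector<Metadata>& delivered() const { return delivered_; }

private:
    // sendCaptureResult(): merge earlier partials into the final result.
    // std::map::insert keeps existing keys, so final values win on conflicts.
    void send(const Metadata& finalMd) {
        Metadata merged = finalMd;
        merged.insert(collected_.begin(), collected_.end());
        delivered_.push_back(merged);
    }

    uint32_t numPartial_;
    int64_t shutterTimestamp_ = 0;
    Metadata collected_;
    Metadata pending_;
    bool havePending_ = false;
    std::vector<Metadata> delivered_;
};
```

Whichever of the two events (final metadata or shutter) arrives last triggers delivery, so the application always sees onCaptureStarted before the corresponding complete result.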

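As the code shows, it handles both partial and complete metadata. When the result is non-NULL and contains the complete metadata for the request, there are two cases: if the shutter notification for this frame has not arrived yet (shutterTimestamp == 0), the metadata is stored in request.pendingMetadata and sent later from notifyShutter; otherwise sendCaptureResult is called to send the result right away: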

```cpp
// Camera3Device.cpp
void Camera3Device::sendCaptureResult(CameraMetadata &pendingMetadata,
        CaptureResultExtras &resultExtras, CameraMetadata &collectedPartialResult,
        uint32_t frameNumber, bool reprocess,
        const AeTriggerCancelOverride_t &aeTriggerCancelOverride) {
    if (pendingMetadata.isEmpty()) // nothing to send: return immediately
        return;
    ...
    CaptureResult captureResult;
    captureResult.mResultExtras = resultExtras;
    captureResult.mMetadata = pendingMetadata;

    // Record the frame number in the metadata
    if (captureResult.mMetadata.update(ANDROID_REQUEST_FRAME_COUNT,
            (int32_t*)&frameNumber, 1) != OK) {
        SET_ERR("Failed to set frame# in metadata (%d)", frameNumber);
        return;
    } else {
        ...
    }

    // Append any previous partials to form a complete result
    if (mUsePartialResult && !collectedPartialResult.isEmpty()) {
        captureResult.mMetadata.append(collectedPartialResult);
    }

    // Sort the metadata entries
    captureResult.mMetadata.sort();

    // Check that there's a timestamp in the result metadata
    camera_metadata_entry entry = captureResult.mMetadata.find(ANDROID_SENSOR_TIMESTAMP);
    ...
    overrideResultForPrecaptureCancel(&captureResult.mMetadata, aeTriggerCancelOverride);

    // Valid result: insert it into the result queue
    List<CaptureResult>::iterator queuedResult =
            mResultQueue.insert(mResultQueue.end(), CaptureResult(captureResult));
    ...
    mResultSignal.signal();
}
```
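sendCaptureResult is the producer half of a classic producer/consumer queue: append under a lock, then signal a condition so a waiting consumer wakes up. A generic C++ sketch follows; `ResultQueue` is hypothetical, and the consumer named in the comment (FrameProcessorBase calling waitForNextFrame()/getNextResult()) reflects our reading of AOSP rather than code shown in this article.

```cpp
#include <cassert>
#include <chrono>
#include <condition_variable>
#include <list>
#include <mutex>
#include <optional>
#include <thread>

// Hypothetical model of Camera3Device's result queue. The producer mirrors
// sendCaptureResult(); the consumer side is assumed to be the frame processor
// thread calling waitForNextFrame() and then getNextResult().
template <typename Result>
class ResultQueue {
public:
    void push(Result r) {
        {
            std::lock_guard<std::mutex> lock(mu_);
            queue_.push_back(std::move(r));  // append at the tail, like the result queue
        }
        signal_.notify_one();                // like mResultSignal.signal()
    }

    // Waits up to `timeout` for a result; std::nullopt if none arrived.
    std::optional<Result> waitAndPop(std::chrono::milliseconds timeout) {
        std::unique_lock<std::mutex> lock(mu_);
        if (!signal_.wait_for(lock, timeout, [this] { return !queue_.empty(); })) {
            return std::nullopt;
        }
        Result r = std::move(queue_.front());
        queue_.pop_front();
        return r;
    }

private:
    std::mutex mu_;
    std::condition_variable signal_;
    std::list<Result> queue_;
};
```

The predicate passed to wait_for protects against spurious wakeups and against a result that was pushed before the consumer started waiting.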

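Finally, it inserts the capture result into the result queue and signals the mResultSignal condition variable to wake any thread waiting for new results, which completes the handling of the capture result. Next, let's look at the notify call made earlier to send the CAMERA3_MSG_SHUTTER message: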

```cpp
// Camera3Device.cpp
void Camera3Device::notify(const camera3_notify_msg *msg) {
    NotificationListener *listener;
    {
        Mutex::Autolock l(mOutputLock);
        listener = mListener;
    }
    ...
    switch (msg->type) {
        case CAMERA3_MSG_ERROR: {
            notifyError(msg->message.error, listener);
            break;
        }
        case CAMERA3_MSG_SHUTTER: {
            notifyShutter(msg->message.shutter, listener);
            break;
        }
        default:
            SET_ERR("Unknown notify message from HAL: %d",
                    msg->type);
    }
}
```

It calls notifyShutter:

```cpp
// Camera3Device.cpp
void Camera3Device::notifyShutter(const camera3_shutter_msg_t &msg,
        NotificationListener *listener) {
    ...
    // Set timestamp for the request in the in-flight tracking
    // and get the request ID to send upstream
    {
        Mutex::Autolock l(mInFlightLock);
        idx = mInFlightMap.indexOfKey(msg.frame_number);
        if (idx >= 0) {
            InFlightRequest &r = mInFlightMap.editValueAt(idx);
            // Call listener, if any
            if (listener != NULL) {
                // Invoke the listener's notifyShutter method
                listener->notifyShutter(r.resultExtras, msg.timestamp);
            }
            ...
            // Send the result that was waiting for the shutter into the result queue
            sendCaptureResult(r.pendingMetadata, r.resultExtras,
                    r.partialResult.collectedResult, msg.frame_number,
                    r.hasInputBuffer, r.aeTriggerCancelOverride);
            returnOutputBuffers(r.pendingOutputBuffers.array(),
                    r.pendingOutputBuffers.size(), r.shutterTimestamp);
            r.pendingOutputBuffers.clear();
            removeInFlightRequestIfReadyLocked(idx);
        }
    }
    ...
}
```

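It first notifies the listener of the shutter event (the start of exposure for this frame), then calls sendCaptureResult to push any metadata that had been held back waiting for the shutter into the result queue, and returns the pending output buffers. The listener here is actually the CameraDeviceClient, so CameraDeviceClient::notifyShutter is called: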

```cpp
// CameraDeviceClient.cpp
void CameraDeviceClient::notifyShutter(const CaptureResultExtras& resultExtras,
        nsecs_t timestamp) {
    // Thread safe. Don't bother locking.
    sp<ICameraDeviceCallbacks> remoteCb = getRemoteCallback();
    if (remoteCb != 0) {
        // Call back into the app side (ends up in CaptureCallback.onCaptureStarted)
        remoteCb->onCaptureStarted(resultExtras, timestamp);
    }
}
```

The ICameraDeviceCallbacks here corresponds on the Java side to CameraDeviceCallbacks, an inner class of CameraDeviceImpl.java, so its onCaptureStarted method is called:

```java
// CameraDeviceImpl.java
@Override
public void onCaptureStarted(final CaptureResultExtras resultExtras, final long timestamp) {
    int requestId = resultExtras.getRequestId();
    final long frameNumber = resultExtras.getFrameNumber();
    final CaptureCallbackHolder holder;

    synchronized(mInterfaceLock) {
        if (mRemoteDevice == null) return; // Camera already closed

        // Get the callback for this frame ID, if there is one
        holder = CameraDeviceImpl.this.mCaptureCallbackMap.get(requestId);
        ...
        // Dispatch capture start notice
        holder.getHandler().post(new Runnable() {
            @Override
            public void run() {
                if (!CameraDeviceImpl.this.isClosed()) {
                    holder.getCallback().onCaptureStarted(CameraDeviceImpl.this,
                            holder.getRequest(resultExtras.getSubsequenceId()),
                            timestamp, frameNumber);
                }
            }
        });
    }
}
```
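onCaptureStarted finds the app's callback through two IDs carried in CaptureResultExtras: the request ID selects the holder registered when the request was submitted, and the subsequence ID selects the request within a burst or repeating list. A hypothetical C++ model (`CallbackHolder`, `CallbackRegistry`); the early return for an unknown request ID is an assumption, since that branch is elided above, and the Handler post is replaced by a direct call.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <functional>
#include <map>
#include <string>
#include <vector>

// One holder per request ID; a repeating list or burst shares one ID.
struct CallbackHolder {
    std::vector<std::string> requests;  // indexed by subsequence ID
    std::function<void(const std::string& request, int64_t timestamp, int64_t frame)>
            onCaptureStarted;
};

class CallbackRegistry {
public:
    void add(int32_t requestId, CallbackHolder holder) {
        map_[requestId] = std::move(holder);
    }

    // Mirrors the lookup in onCaptureStarted(); returns false for unknown IDs.
    bool dispatchStarted(int32_t requestId, int32_t subsequenceId,
                         int64_t timestamp, int64_t frameNumber) const {
        auto it = map_.find(requestId);         // mCaptureCallbackMap.get(requestId)
        if (it == map_.end()) return false;     // assumed: no holder, nothing to do
        const CallbackHolder& holder = it->second;
        assert(subsequenceId >= 0 &&
               static_cast<std::size_t>(subsequenceId) < holder.requests.size());
        if (holder.onCaptureStarted) {          // the real code posts to holder's Handler
            holder.onCaptureStarted(holder.requests[subsequenceId], timestamp, frameNumber);
        }
        return true;
    }

private:
    std::map<int32_t, CallbackHolder> map_;
};
```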

This in turn reaches, through the proxy created by createCaptureCallbackProxy in CameraCaptureSessionImpl, the onCaptureStarted method of mCaptureCallback in OneCameraImpl.java:

```java
// OneCameraImpl.java
// Common listener for preview frame metadata.
private final CameraCaptureSession.CaptureCallback mCaptureCallback =
        new CameraCaptureSession.CaptureCallback() {
            @Override
            public void onCaptureStarted(CameraCaptureSession session, CaptureRequest request,
                    long timestamp, long frameNumber) {
                if (request.getTag() == RequestTag.CAPTURE && mLastPictureCallback != null) {
                    mLastPictureCallback.onQuickExpose();
                }
            }
            ...
        };
```
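The check in onCaptureStarted reduces to a tag comparison. A hypothetical C++ restatement, with std::nullopt standing for a request that carries no tag; `RequestTag` here is an illustrative enum mirroring the Java names, not the Java type itself.

```cpp
#include <cassert>
#include <optional>

// Illustrative mirror of OneCameraImpl's RequestTag values.
enum class RequestTag { kCapture, kTapToFocus };

// True only for a tagged still capture while a picture callback is pending.
bool firesQuickExpose(std::optional<RequestTag> tag, bool hasPictureCallback) {
    // An empty optional compares unequal to any tag value.
    return tag == RequestTag::kCapture && hasPictureCallback;
}
```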

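Note: capture, preview and autoFocus all use this same callback. Capture requests are tagged RequestTag.CAPTURE and autoFocus requests TAP_TO_FOCUS, while preview requests set no RequestTag, so nothing needs to be done when onCaptureStarted fires for a preview frame. By this point, however, the preview has started successfully and frames are being delivered into the buffers of the preview Surface. This concludes the analysis of the preview flow. The sequence diagram of the HAL-layer flow is given below: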